Apple on Tuesday was assigned patent rights to a projection-based 3D mapping technology obtained through its acquisition of Israeli company PrimeSense, suggesting the company is moving forward with development of advanced body gesture control for Mac, Apple TV and possibly iOS.

The U.S. Patent and Trademark Office issued U.S. Patent No. 8,908,277 for a "Lens array projector" to Apple, one of the first patents reassigned to the tech giant following its purchase of PrimeSense, a small Israeli firm specializing in 3D motion sensing and machine vision.

PrimeSense, scooped up by Apple last year in a deal rumored to be worth between $345 million and $360 million, was founded in 2005 and went on to develop silicon and middleware for motion sensing and 3D scanning applications. The firm gained notoriety in 2010 when Microsoft licensed its infrared motion-tracking, depth-sensing chip for use in the Kinect sensor for Xbox 360.

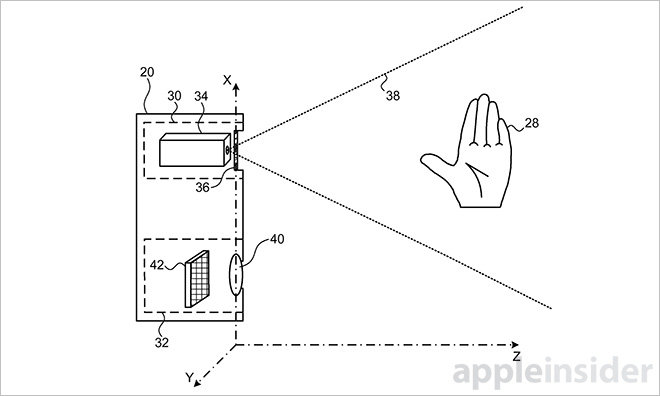

The patent assigned on Tuesday outlines basic optical components of PrimeSense's "light coding" technology, which derives a scene's spatial and depth information using patterned infrared light. More specifically, Apple's '277 patent describes an improved way to generate uniform patterned light in an optical projection system, which in turn yields more accurate results.

In an optical projection system, beams of light are transmitted onto a patterning element, which in turn projects the resulting pattern of light onto a subject surface. An imaging sensor records the pattern as it falls across volumetric shapes and feeds the data to a processor that calculates distortion and other metrics. The output is a three-dimensional map of the scene.
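The distortion calculation can be illustrated with the standard triangulation relation used by reference-pattern depth sensors. This is a minimal sketch, not PrimeSense's or Apple's algorithm: it assumes a calibrated projector-camera pair with a known baseline, a camera focal length expressed in pixels, and a reference pattern recorded on a flat surface at a known depth. The function name, sign convention and all numeric values are hypothetical.

```python
def depth_from_shift(shift_px: float, focal_px: float, baseline_m: float,
                     ref_depth_m: float) -> float:
    """Triangulate the depth of one pattern feature from its shift against a reference image.

    Hypothetical model (assumption): the reference pattern was captured on a flat
    surface at ref_depth_m, and a positive shift means the surface is closer.
        1 / Z = 1 / Z_ref + shift / (focal * baseline)
    """
    inv_depth = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inv_depth <= 0.0:
        raise ValueError("shift places the point at or beyond infinity")
    return 1.0 / inv_depth


# Hypothetical calibration: 580 px focal length, 7.5 cm baseline, reference plane at 1 m.
for shift in (0.0, 43.5, -21.75):
    depth = depth_from_shift(shift, 580.0, 0.075, 1.0)
    print(f"shift {shift:+.2f} px -> depth {depth:.3f} m")
```

Applying the same function to every pixel of a measured shift image would produce a depth map of the kind described above; real sensors differ in sign convention and calibration.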

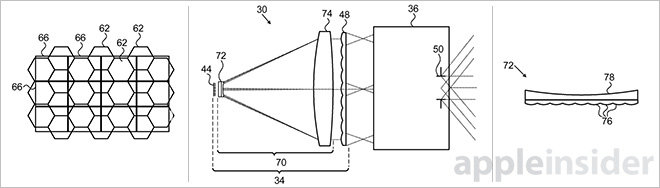

Because the system works by comparison, it is highly sensitive to even the smallest deviations. In laser-based projectors, for example, laser speckle can skew readings enough to degrade the final 3D-mapped image. To ensure high accuracy, Apple's patent tackles the problem at the source, where a matrix of light beams is homogenized, averaged and collimated (collected and made parallel) before being transmitted to a subject for assessment.
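Why averaging helps against speckle can be shown numerically. A common model of fully developed speckle treats each pixel's intensity as exponentially distributed, which gives a speckle contrast (standard deviation divided by mean) of 1; averaging N statistically independent patterns lowers the contrast to roughly 1/sqrt(N). The simulation below is a sketch that assumes that model and independent patterns; it illustrates the general principle, not anything specified in the patent.

```python
import random
import statistics


def speckle_contrast(num_patterns: int, num_pixels: int = 20000, seed: int = 0) -> float:
    """Contrast (std / mean) of the pixel-wise average of independent speckle patterns.

    Assumes fully developed speckle: each pixel intensity is exponentially
    distributed with mean 1, so a single pattern has contrast close to 1.
    """
    rng = random.Random(seed)
    averaged = [
        sum(rng.expovariate(1.0) for _ in range(num_patterns)) / num_patterns
        for _ in range(num_pixels)
    ]
    return statistics.pstdev(averaged) / statistics.fmean(averaged)


for n in (1, 4, 16):
    print(f"{n:2d} patterns: contrast {speckle_contrast(n):.3f} (theory {n ** -0.5:.3f})")
```

The simulated contrast tracks 1/sqrt(N) closely, which is why mixing many beams into one tends to wash speckle out.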

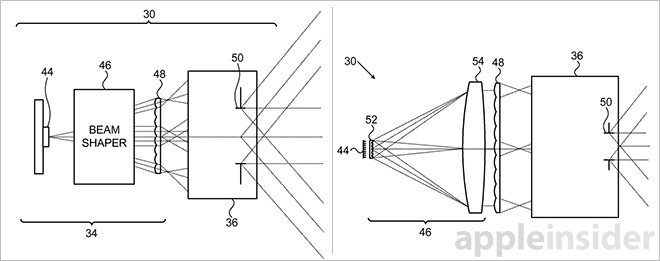

Apple's invention, called a beam homogenizer, receives input from a matrix of light sources, such as laser diodes or similar components, arranged in a predetermined, uniform layout. Light first passes through a microlens array whose lenses are disposed at a pitch equal to that of the light-source matrix. A second microlens array, constructed with a different pitch, receives and focuses the light from the first array and passes it on to a collection lens for collimation.
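The collimation step at the end of this chain can be checked with paraxial (small-angle) ray tracing. Assuming a thin collection lens placed one focal length from a point source, every ray leaving that point exits at the same angle, -y0/F, regardless of its launch angle, which is what "made parallel" means here. The function below is a hypothetical illustration of that textbook thin-lens result, not the patent's lens prescription.

```python
def trace_through_collection_lens(source_height: float, launch_angle: float,
                                  focal_length: float) -> tuple[float, float]:
    """Paraxial trace of one ray from a point source through a thin collection lens.

    Assumes the source lies in the lens's front focal plane (one focal length away).
    Returns (ray height at the lens, ray angle after the lens), angles in radians.
    """
    # Free-space propagation over one focal length.
    height_at_lens = source_height + focal_length * launch_angle
    # Thin-lens refraction: the angle changes by -height / focal_length.
    angle_after = launch_angle - height_at_lens / focal_length
    return height_at_lens, angle_after


# Rays fanning out from one emitter 0.2 mm off axis, with a hypothetical 10 mm lens.
angles_out = [trace_through_collection_lens(0.2, a, 10.0)[1] for a in (-0.05, 0.0, 0.05)]
print(angles_out)  # all approximately -0.02 rad, so the rays leave parallel
```

Each emitter in the matrix thus becomes a parallel bundle, tilted slightly according to its position.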

Because the two microlens arrays differ in pitch and geometric arrangement, the offset between them mixes the light passing through, producing a final beam in which deviations such as laser speckle are averaged out.
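The offset between mismatched pitches can be pictured geometrically. If the first array's lenslets sit at multiples of one pitch and the second array's at multiples of a slightly different pitch, each channel meets the second array at a different decentration, and the arrangement only repeats after the vernier period p1 * p2 / |p1 - p2|. This one-dimensional picture is an assumption made for illustration, the function names are hypothetical, and the pitches are made-up values in micrometres; the article does not describe the patent's actual layout.

```python
def channel_offsets(num_channels: int, pitch_first: int, pitch_second: int) -> list[int]:
    """Decentration between each first-array lenslet and the nearest second-array lenslet.

    1D model (assumption): both arrays share an origin at channel 0; pitches in micrometres.
    """
    offsets = []
    for k in range(num_channels):
        x_first = k * pitch_first
        nearest_second = round(x_first / pitch_second) * pitch_second
        offsets.append(x_first - nearest_second)
    return offsets


def vernier_period(pitch_first: float, pitch_second: float) -> float:
    """Distance after which the two lenslet grids line up again."""
    return pitch_first * pitch_second / abs(pitch_first - pitch_second)


# Hypothetical pitches: 250 um (matching the emitter grid) and 240 um.
print(channel_offsets(6, 250, 240))  # [0, 10, 20, 30, 40, 50]
print(vernier_period(250, 240))      # 6000.0
```

With these numbers each of the first dozen channels is decentred by a different amount, and the two grids realign only every 24 channels (6,000 um), so neighbouring beams are never processed identically.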

In another embodiment, the second microlens array is replaced with a diverging lens that passes light on to a collection lens for collimation. This design allows the microlens array and diverging lens to be produced as a single optical element, yielding a more compact beam homogenizer and an overall assembly that could be small enough to fit into an Apple TV set-top streamer, or even a mobile device like an iPhone.
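For the diverging-lens variant, a generic paraxial check shows when a diverging lens followed by a converging collection lens returns parallel light. Using 2x2 ray-transfer (ABCD) matrices under the thin-lens assumption, the pair is afocal (parallel rays in, parallel rays out) when the lower-left element C is zero, which happens when the lens spacing equals f_div + f_coll, the classic Galilean arrangement. The helper names and focal lengths below are hypothetical; the article does not give the patent's values.

```python
Matrix = tuple[tuple[float, float], tuple[float, float]]


def thin_lens(focal_length: float) -> Matrix:
    """Ray-transfer matrix of a thin lens (negative focal length means diverging)."""
    return ((1.0, 0.0), (-1.0 / focal_length, 1.0))


def free_space(distance: float) -> Matrix:
    """Ray-transfer matrix for propagation over a distance."""
    return ((1.0, distance), (0.0, 1.0))


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """2x2 matrix product a @ b."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def diverging_plus_collection(f_div: float, f_coll: float, spacing: float) -> Matrix:
    """System matrix: diverging lens, gap of `spacing`, then collection lens."""
    # Light meets the diverging lens first, so its matrix is the rightmost factor.
    return matmul(thin_lens(f_coll), matmul(free_space(spacing), thin_lens(f_div)))


# Hypothetical values in mm: -5 mm diverging lens, 20 mm collection lens.
m = diverging_plus_collection(-5.0, 20.0, 15.0)  # spacing = f_div + f_coll
print(m)  # A = m[0][0] is 4.0 (beam widened 4x), C = m[1][0] is 0.0 (afocal)
```

Moving the lenses away from that spacing makes C non-zero, so the output would converge or diverge instead of staying collimated.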

It remains unclear what Apple has planned for PrimeSense and its substantial patent and technology assets, but today's reassignment offers a look at what could be Cupertino's take on the next generation of control interfaces. It was previously rumored that the next-generation Apple TV would use 3D mapping for a gesture-based control scheme, much like Microsoft's Kinect.

Earlier this year, AppleInsider reported on job listings suggesting Apple is indeed looking to include one or more cameras in an upcoming Apple TV iteration. Other reports, however, claim that progress on a next-generation Apple streamer has stalled because cable companies are unwilling to bend on content licensing.

Apple's beam homogenizer patent was first filed in August 2011 and credits Benny Pesach, Shimon Yalov and Zafrir Mor as its inventors.

Mikey Campbell

11 Comments

Will be nice to see how Apple actually implements this into something. Could be a good candidate for their home automation solution.

Amongst other uses I can see the Accessibility section of iOS and OS X gaining the ability to read sign language, after a personalized learning phase, and have Siri speak the gestures.

As Cook would say: "You don't have to understand all that. What it does is just give you a super precise 3D representation".

I'm not a gamer, have no interest in XBox or PS4. But when I saw some of the demos of what the XBox Kinect thing could do, it totally blew my mind. There was one demo where the guy would make facial expressions and the kinect could see his face and would show icons that best represented his expression. And as he moved his arms a fully articulated wireframe of a person's body would match his movements. It was really quite impressive. The Kinect is based on a licencing of the PrimeSense technology, IIRC. When I heard that Apple bought them, I was super excited. I'm glad to see there is some activity around this. Can't wait to see how Apple incorporates the tech into future products.

"... The firm gained [B]notoriety[/B] in 2010 when Microsoft licensed its infrared motion-tracking, depth-sensing chip for use in the Kinect sensor for Xbox 360." Notoriety? How so?