Apple is rumored to replace iPhone's Touch ID home button with alternative bio-recognition hardware when it launches an OLED version of the handset later this year, but the technology enabling that transition is so far an unknown. A patent application published Thursday, however, indicates where the company's research is headed.

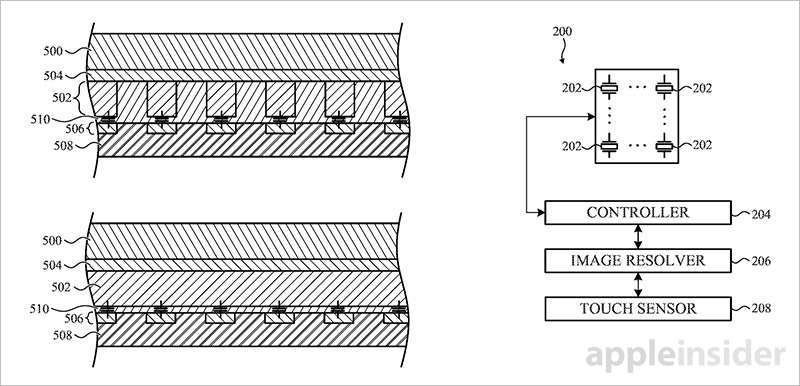

In its filing for "Acoustic imaging system architecture," Apple proposes a method by which a conventional capacitive fingerprint sensor like Touch ID might be replaced by an array of acoustic transducers laid out beneath a device display or its protective housing.

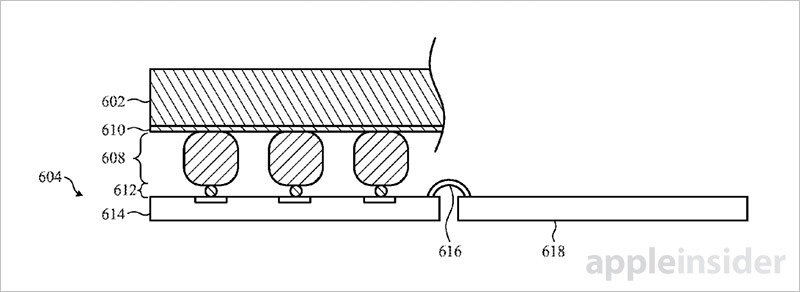

As described in some embodiments, the transducers in a first mode generate acoustic waves, or pulses, capable of propagating through a variety of substrates, including an iPhone's coverglass. Operation, or driving, of the transducers is managed by a controller.

The same transducer hardware then enters a second sensing mode to monitor reflections, attenuations, and diffractions of the acoustic waves caused by a foreign object in contact with the input-responsive substrate. Resulting scan data in the form of electrical signals is read by an onboard image resolver, which creates an approximated two-dimensional map.

Applied to biometric recognition solutions, Apple's acoustic imaging system might be configured to read a user's fingerprint. According to the filing, ridges in a finger pad introduce an acoustic impedance mismatch that causes the mechanical waves generated by a transducer to reflect or diffract in a known manner.
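To give a rough sense of why an impedance mismatch is detectable, the short sketch below applies the standard normal-incidence pressure reflection formula, R = (Z2 - Z1) / (Z2 + Z1), to a glass surface touched by skin versus one facing an air gap. The impedance figures are approximate textbook values assumed for illustration; they do not come from Apple's filing, and the function name is hypothetical. It also assumes that ridges contact the glass while valleys leave a thin pocket of air, which is a simplification.

```python
def pressure_reflection(z1: float, z2: float) -> float:
    """Pressure reflection coefficient for a plane wave crossing a
    z1 -> z2 boundary at normal incidence."""
    return (z2 - z1) / (z2 + z1)


# Approximate acoustic impedances in rayl (Pa*s/m).
# Assumed illustrative values, not taken from the patent application.
Z_GLASS = 13.0e6
Z_SKIN = 1.6e6
Z_AIR = 415.0

r_ridge = pressure_reflection(Z_GLASS, Z_SKIN)   # ridge pressed against the glass
r_valley = pressure_reflection(Z_GLASS, Z_AIR)   # air gap beneath a valley

# Reflected energy fraction is the square of the pressure coefficient.
print(f"ridge:  |R| = {abs(r_ridge):.3f}, reflected energy = {r_ridge ** 2:.1%}")
print(f"valley: |R| = {abs(r_valley):.3f}, reflected energy = {r_valley ** 2:.1%}")
```

Under these assumptions, nearly all of the wave's energy bounces back where the glass meets air, while noticeably less returns where skin touches the surface. That difference in returned signal is the kind of contrast a sensing transducer could, in principle, turn into a ridge-and-valley map.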

As with other biometric security solutions, the digital maps obtained by an acoustic imaging system are ultimately compared against a database of known assets to authenticate a user.

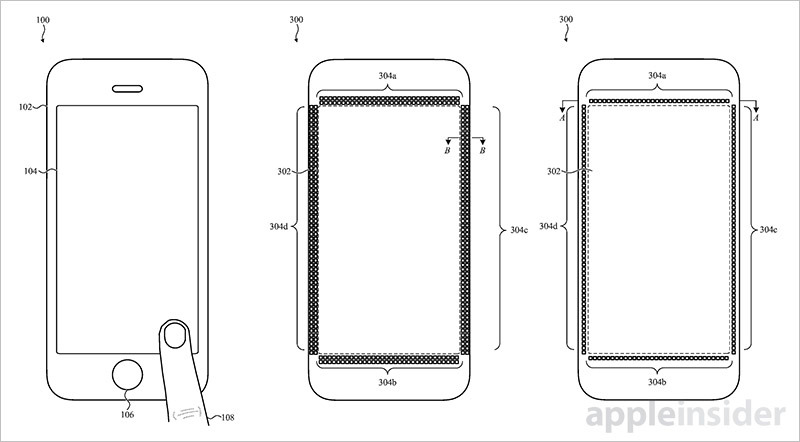

Thanks to its design, the acoustic system can be installed practically anywhere in a device chassis, including directly under a display. Other potential points of integration include a screen's perimeter or bezel, around buttons and in non-input areas of a housing, like a rear chassis. The latter coupling method would allow an example system to sample a user's entire handprint.

Indeed, in some cases the system might be configured to scan for particular body parts like a user's ear or a skin pattern in order to determine how the device is being held. Depending on the implementation, an acoustic imaging system might also serve as a robust replacement for other legacy components like an iPhone's proximity sensors.

The patent application goes on to cover system details like ideal materials, transducer placement, controllers, drive chips, component layouts and more.

Whether Apple plans to incorporate acoustic imaging technology in this year's "iPhone 8" is unknown. Noted KGI analyst Ming-Chi Kuo predicts the company will ditch existing Touch ID technology in favor of a dual — or perhaps two-step — bio-recognition solution utilizing an optical fingerprint reader and facial recognition hardware.

Kuo further elaborated on Apple's face-recognizing technology in a note to investors this week, saying Apple plans to incorporate a "revolutionary" front-facing camera that integrates infrared emitters and receivers to enable 3D sensing and modeling.

Apple's acoustic imaging patent application was first filed in August 2016 and credits Mohammad Yeke Yazandoost, Giovanni Gozzini, Brian Michael King, Marcus Yip, Marduke Yousefpor, Ehsan Khajeh and Aaron Tucker as its inventors.

Mikey Campbell


13 Comments

Very interesting.

So this might replace touch ID as well as facial recognition? Which is it ... or both? I can't keep up with the rumors. ;)

A high fidelity sonar imaging system capable of discerning fingerprints and human appendages usefully and without false detections would require an incredible amount of signal processing for all but the most rudimentary level of functionality. This abstract concept has massive overkill potential for the perceived benefit, i.e., solution in search of a problem. Massive engineering challenge due to signal processing and energy requirements. On the other hand, I can see a much lower fidelity implementation used to help solve some very basic challenges like allowing touch screens to work effectively with gloved fingers and detecting very basic non-contact hand gestures. Likewise, using a sonar imaging system to augment or work in concert with other sensor systems like optical and capacitive may be useful.

If there was a requirement that patents be backed by working models, even of just the core claims, these far reaching abstract concept patents would have more credibility and perhaps we'd see real results in less than a decade or two. I'd love to see the patent office require that abstract concepts presented in patents be brought to market or implemented in a real product or working model within a reasonable time frame, say 5 years max, or else the patent is revoked. IMHO, the vagueness of abstract concept patents and the lack of required implementation is one of the root causes of so many of the patent related lawsuits we've been seeing for the past couple of decades. The patent owner ends up sitting on the concept and when someone actually builds something that vaguely resembles the concept they get sued for infringement. Doesn't make sense if one of the purposes of patents being enforced at the societal level is to incite innovation that benefits both the inventors and society at large.