Apple's first publicly issued academic paper, research focusing on computer vision systems published in December, recently won a Best Paper Award at the 2017 Conference on Computer Vision & Pattern Recognition, one of the most sought after prizes in the field.

Considered one of the most influential conferences in the field according to the h-index, a metric for scholarly works, CVPR in July selected Apple's paper as one of two Best Paper Awards.

According to AppleInsider reader Tom, who holds a PhD in machine learning and computer vision, the CVPR award is one of the most sought after in the field.

This year, the conference received a record 2,680 valid submissions, of which 2,620 were reviewed. Delegates whittled down that number to 783 papers, granting long oral presentations to 71 entrants. Apple's submission ultimately made its way to the top of the pile, an impressive feat considering it was the company's inaugural showing.

CVPR's second Best Paper Award went to Gao Huang, Zhuang Liu, Laurens van der Maaten and Kilian Q. Weinberger for their research on "Densely Connected Convolutional Networks." Research for the paper was conducted by Cornell University in collaboration with Tsinghua University and Facebook AI Research.

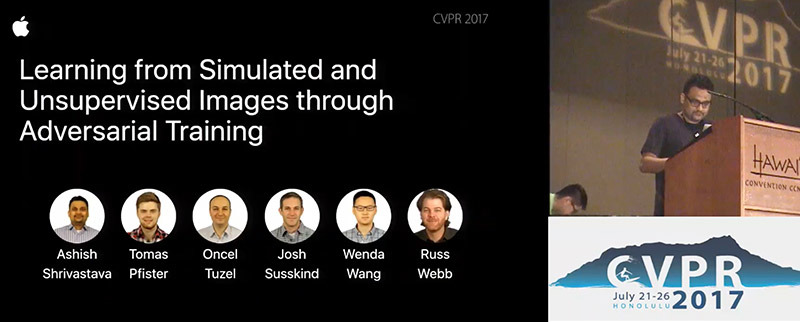

Titled "Learning from Simulated and Unsupervised Images through Adversarial Training," Apple's paper was penned by computer vision expert Ashish Shrivastava and a team of engineers including Tomas Pfister, Oncel Tuzel, Wenda Wang, Russ Webb and Apple Director of Artificial Intelligence Research Josh Susskind. Shrivastava presented the research to CVPR attendees on July 23.

As detailed when it saw publication in December, Apple's public research paper describes techniques of training computer vision algorithms to recognize objects using synthetic images.

According to Apple, training models based solely on real-world images are often less efficient than those leveraging synthetic data cause because computer generated images are usually labeled. For example, a synthetic image of an eye or hand is annotated as such, while real-world images depicting similar objects are unknown to the algorithm and thus need to be described by a human operator.

As noted by Apple, however, relying completely on simulated images might yield unsatisfactory results, as computer generated content is sometimes not realistic enough to provide an accurate learning set. To help bridge the gap, Apple proposes a system of refining a simulator's output through SimGAN, a take on "Simulated+Unsupervised learning." The technique combines unlabeled real image data with annotated synthetic images using Generative Adversarial Networks (GANs), or competing neural networks.

In its study, Apple applied SimGAN to the evaluation of gaze and hand pose estimation in static images. The company says it hopes to one day move S+U learning beyond to support video input.

Like other Silicon Valley tech companies, Apple is sinking significant capital into machine learning and computer vision technologies. Information gleaned from such endeavors will likely enhance consumer facing products like Siri and augmented reality apps built using ARKit. The company is also working on a variety of autonomous solutions, including self-driving car applications, that could make their way to market in the coming months or years.

"We're focusing on autonomous systems," Cook said in a June interview. "It's a core technology that we view as very important. We sort of see it as the mother of all AI projects."

Mikey Campbell

Mikey Campbell

-m.jpg)

Marko Zivkovic

Marko Zivkovic

Christine McKee

Christine McKee

Andrew Orr

Andrew Orr

Andrew O'Hara

Andrew O'Hara

William Gallagher

William Gallagher

Mike Wuerthele

Mike Wuerthele

Bon Adamson

Bon Adamson

-m.jpg)

24 Comments

Quite a compliment. Well done Apple.

Apple is soooo far behind in AI. DOOOMMMMEEEEDDDD!!!!

/s

But but but -- people online said Apple doesn't understand AI and are too far behind Facebook and friends!

Presumably patents had already been applied for this, so the paper presented wouldn't constitute the ceding of a competitive advantage.