The HomePod stands to be an audiophile's ideal speaker when it ships on Feb. 9, in no small part due to the considerable effort Apple has put into audio processing for the device. Audio expert Matt Hines of iZotope explains some of the technical hurdles Apple and other smart speaker producers have to overcome to provide a high-quality audio experience in a wide variety of environments.

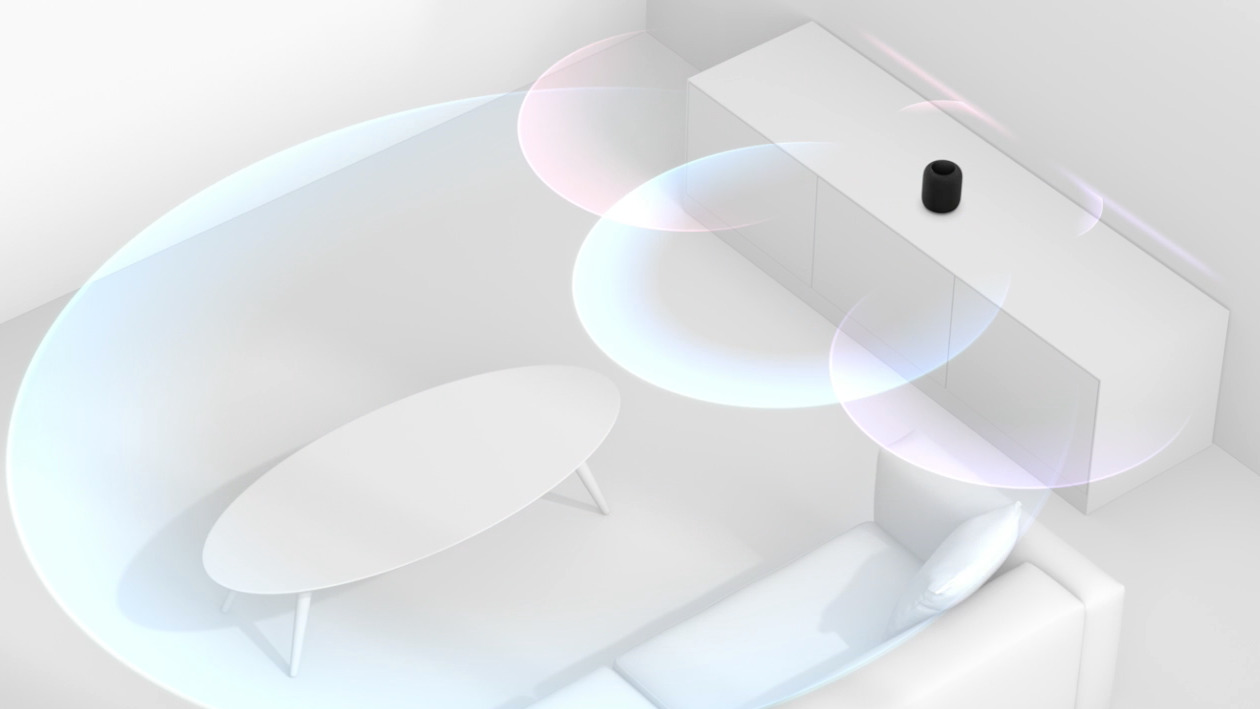

The first problem Apple and similar firms face when creating an intelligent speaker, like the HomePod, is to ensure there is an optimal audio experience for the user, regardless of where they are in the room. Hines, product manager of Spire by iZotope and guest on this week's AppleInsider Podcast, says the exact position of the user can dramatically affect the sound from a normal speaker, with the problem compounded by local acoustics.

Moving a speaker around a room alters the sound reflections in a dramatic way, Hines suggests, such as a loss of bass frequencies when moving towards the corner of a room. While bouncing around the room, the reflections can multiply the sound in different ways, potentially causing some resonant frequencies to become inconsistently louder, an effect that is particularly noticeable for longer wavelengths.
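The resonances described above can be roughly estimated for an idealized room. The following is a minimal sketch, assuming a rectangular room with rigid walls and a speed of sound of 343 m/s. The 5 m room length and the function names are hypothetical and do not come from Hines or Apple. It uses the textbook relation f = n·c / (2L) for standing waves along one room dimension, and a cosine pressure profile between the two walls.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 °C


def axial_mode_hz(order, length_m, c=SPEED_OF_SOUND_M_S):
    """Frequency of the axial standing wave of the given order along one room dimension."""
    if order < 1 or length_m <= 0:
        raise ValueError("order must be >= 1 and length_m must be positive")
    return order * c / (2.0 * length_m)


def relative_pressure(order, position_m, length_m):
    """Relative pressure amplitude (0..1) of that mode at a point between two rigid walls."""
    return abs(math.cos(order * math.pi * position_m / length_m))


if __name__ == "__main__":
    room_length_m = 5.0  # hypothetical room dimension
    for order in (1, 2, 3):
        freq = axial_mode_hz(order, room_length_m)
        at_wall = relative_pressure(order, 0.0, room_length_m)
        mid_room = relative_pressure(order, room_length_m / 2, room_length_m)
        print(f"mode {order}: {freq:.1f} Hz, wall={at_wall:.2f}, middle={mid_room:.2f}")
```

In this idealized model, the lowest mode is at full strength at the walls and vanishes in the middle of the room, which is the kind of position-dependent bass variation Hines describes. Real rooms, with furniture and absorbent surfaces, behave less cleanly.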

"Depending on where the ear is, you'll hear sudden, violent variations in frequency levels, which is why bass might disappear in the corner of a room," advises Hines. "It also helps explain why you'll argue with your family about whether the TV is too quiet or loud enough — and you could all be right — just sat in juxtaposed locations within a room."

Items located within the local environment can also change sound quality, with soft furnishings absorbing some noise while harder surfaces have a tendency to reflect it. This is why places relating to audio production or audio consumption, like a recording studio or a high-end home cinema room, carefully select the materials they place on their walls, floors, and ceilings to shape the sound to the user's needs.

In the case of Apple, it's using an array of six microphones and a digital signal processor (DSP) to understand the environment based on its acoustics, and to adapt the device's output to better suit its physical location and the room's audio profile. Given the multiple speakers and the microphone array, such a system could customize the output of each speaker so that a similar sound is heard through as much of the environment's space as possible.

If HomePod is running a real-time DSP that can alter the sound emitted from each of the device's seven tweeters, it can constantly change the profile even if the environment itself changes, such as the listener moving to a different location in the same area. The experience would then be consistent regardless of position, with Hines adding the consistency will make listening to music "more seamless and more immersive."

The DSP and this adaptive acoustic processing system will also help when the HomePod gains its stereo audio feature in a future update, one that will offer stereo audio split across two HomePods.

In a typical stereo audio setup, "adjusting the listening position even an inch will have a very material impact on the arrival time of the audio to your ear," Hines stresses. "The crosstalk between speakers is now different and the room reflections are too."
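To put a rough number on that sensitivity, here is a small sketch, assuming a hypothetical layout of two speakers 2 m apart with the listener 2 m in front of them, and a speed of sound of 343 m/s. None of these figures come from Hines. It computes how far apart in time the left and right channels arrive when the listener shifts one inch sideways.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 °C
INCH_M = 0.0254


def arrival_time_s(speaker_xy, listener_xy, c=SPEED_OF_SOUND_M_S):
    """Time for sound to travel in a straight line from a speaker to the listener."""
    dx = speaker_xy[0] - listener_xy[0]
    dy = speaker_xy[1] - listener_xy[1]
    return math.hypot(dx, dy) / c


def stereo_delay_s(left_xy, right_xy, listener_xy):
    """How much later the left channel arrives than the right (negative if earlier)."""
    return arrival_time_s(left_xy, listener_xy) - arrival_time_s(right_xy, listener_xy)


if __name__ == "__main__":
    left, right = (-1.0, 0.0), (1.0, 0.0)  # hypothetical speaker positions, in metres
    centred = (0.0, 2.0)
    shifted = (INCH_M, 2.0)                # one inch to the right
    print(f"centred: {stereo_delay_s(left, right, centred) * 1e6:.1f} microseconds")
    print(f"shifted: {stereo_delay_s(left, right, shifted) * 1e6:.1f} microseconds")
```

In this layout a one-inch shift moves the two channels from arriving together to arriving tens of microseconds apart, and that is before any change in room reflections is considered.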

This raises an issue for the HomePod if it plays straight stereo without any adaptations, as the audio will almost certainly be affected by the position of the user. "A true stereo setup for HomePod would be a bad user experience, which Apple aren't in the business of offering."

Using this audio adaptation, which Apple has attempted to patent, the HomePods are able to take each other into account, so the stereo experience will still be present and enjoyable for those near enough to them to hear it, while still keeping the audio listenable in other areas of the room.

The microphone array, DSP, and speaker collection also help the HomePod in other ways, aside from playing audio. As the HomePod has voice-enabled features, such as those used by Siri, the system also helps with its reception of speech from users.

The beamforming technology allows the HomePod to determine the position of the user relative to the device, and in turn adjust its microphone settings to better acquire audio from that direction. In effect, the audio feeds from all of the microphones in the array are analyzed for speech, with any extra noises picked up by the array used to remove extraneous sounds from the sound of the voice, making it clearer.
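Apple has not published how its beamformer works, so the following is only an illustrative sketch of the simplest textbook variant, a delay-and-sum beamformer, and not the HomePod's actual algorithm. It assumes six microphones evenly spaced on a circle, a far-field source, and a single test tone. The 7 cm radius, the 2 kHz tone, and all function names are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 °C


def arrival_delays(source_angle, radius_m, mic_count, c=SPEED_OF_SOUND_M_S):
    """Relative arrival time at each mic for a distant source at source_angle (radians)."""
    mic_angles = [2.0 * math.pi * m / mic_count for m in range(mic_count)]
    # Mics facing the source hear the wavefront earlier, hence the minus sign.
    return [-(radius_m / c) * math.cos(source_angle - phi) for phi in mic_angles]


def beam_gain(source_angle, steer_angle, freq_hz, radius_m=0.07, mic_count=6):
    """Output level (0..1) for a tone from source_angle when the array is steered to steer_angle."""
    actual = arrival_delays(source_angle, radius_m, mic_count)
    assumed = arrival_delays(steer_angle, radius_m, mic_count)
    # Undo the delays expected for the steering direction, then average the mics.
    total = sum(
        cmath.exp(-2j * math.pi * freq_hz * (a - s)) for a, s in zip(actual, assumed)
    )
    return abs(total) / mic_count


if __name__ == "__main__":
    talker = 0.0  # array steered toward the talker at 0 radians
    for degrees in (0, 60, 120, 180):
        gain = beam_gain(math.radians(degrees), talker, freq_hz=2000.0)
        print(f"source at {degrees:3d} degrees: gain {gain:.2f}")
```

A tone arriving from the steered direction adds up in phase across all six microphones, while the same tone from other directions partly cancels. More sophisticated designs adapt the per-microphone weights continuously rather than relying on fixed delays.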

"A beam-form mic array means that while there are multiple mics pointed in every direction, they can weight the signal received by each mic differently depending on a sort of correlation matrix," Hines explains, continuing that the processing "helps reject environmental surrounding ambience, and highlight only the more transient, desired speech material."

Hines illustrates the difference in audio quality via an example of typical speakerphone systems, which use a single microphone that degrades in audio quality if the user moves further away or turns to speak in a different direction. While using an array of microphones pointed in all directions would provide more than enough coverage of a room to pick up the user's voice wherever they are, it would also pick up unwanted sounds from around the room that would need processing to remove.

"This weighting is a constant computational process, so as you walk around a HomePod, those microphones are smart enough to know about it, and to ensure that you sound just as clear."

This system also enables acoustic echo cancellation, significantly reducing the potential for feedback, an issue commonly endured when a speaker is placed too close to a connected microphone, with the resulting audio loop usually causing a high-pitched whine. Hines notes HomePod is intelligent enough to recognize occasions where the sound it's making is being fed back to itself, such as a reflection from a wall or other nearby flat surface, allowing it to cancel that sound from the audio signal.
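One common textbook approach to echo cancellation is an adaptive filter that learns how the speaker's own output reaches the microphone and subtracts that estimate. The sketch below uses a normalized least-mean-squares (NLMS) filter. It is an assumption for illustration only, as the source does not say which algorithm the HomePod uses, and the room response, tap count, and step size are all hypothetical.

```python
import random


def simulate_echo(far_end, room_response):
    """Echo at the microphone: the speaker signal convolved with a room response."""
    return [
        sum(g * far_end[n - k] for k, g in enumerate(room_response) if n - k >= 0)
        for n in range(len(far_end))
    ]


def nlms_echo_cancel(far_end, mic, taps=8, step=0.5, eps=1e-8):
    """Subtract an adaptively estimated echo of far_end from mic; returns (cleaned, weights)."""
    weights = [0.0] * taps
    history = [0.0] * taps  # most recent speaker samples, newest first
    cleaned = []
    for x, d in zip(far_end, mic):
        history = [x] + history[:-1]
        estimate = sum(w * h for w, h in zip(weights, history))
        error = d - estimate  # what remains after removing the estimated echo
        power = sum(h * h for h in history) + eps
        weights = [w + step * error * h / power for w, h in zip(weights, history)]
        cleaned.append(error)
    return cleaned, weights


if __name__ == "__main__":
    rng = random.Random(0)
    speaker = [rng.uniform(-1.0, 1.0) for _ in range(2000)]  # stand-in for music
    room = [0.0, 0.6, 0.0, -0.3, 0.1]                        # hypothetical reflections
    mic = simulate_echo(speaker, room)
    cleaned, weights = nlms_echo_cancel(speaker, mic)
    before = sum(v * v for v in mic[-200:])
    after = sum(v * v for v in cleaned[-200:])
    print(f"echo energy before: {before:.3f}, after: {after:.3e}")
```

Because the device knows exactly what it is playing, it has a clean reference signal to adapt against. In a real product the microphone signal would also contain the user's voice and room noise, which makes the adaptation harder than in this noise-free simulation.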

The echo cancellation and beamforming technology will help with more than the ability to hand off calls from an iPhone to the HomePod. Providing as clean an audio feed as possible will help improve Siri's voice recognition accuracy, making it less likely to misinterpret a command or a query.

"Simply put, there is a ton of stuff happening automatically in order to offer you a smart speaker that sounds good and works well regardless of where you are in your home," Hines declares. "Elegant simplicity is the hardest thing to build by far."

Hines notes all of this audio processing, as well as other features like AirPlay 2, Siri, and HomeKit, is performed using the A8 processor, as used in the iPhone 6. Apple's need to use such a powerful chip to perform all of these functions and more, which Hines suggests users could see as doing "less" than the usual workload of an iPhone, is a "testament to how much is actually going on inside" the HomePod.

Malcolm Owen

88 Comments

Very nicely done article! Thank you.

I am extremely curious how the HomePod will respond to other speakers playing music. I’m planning on using an AirPlay receiver to pair (eventually) a couple of HomePods to a top-tier subwoofer and other high-end speakers. My guess is that the audio analysis and beamforming capabilities of the HomePod will pair up perfectly to offer some truly astounding audio quality and sound stages.

The HomePod is in some respects better than Stereo. The DSP respects the intent of the original stereo mix. For example, if it detects backing vocals mixed toward the left channel it will send these more toward the left tweeters. At the same time lead vocals will be beamed to the center of the room. This creates a sound stage intelligently from the stereo mix and it allows each of the 7 tweeters to be dedicated to reproducing a smaller number of sounds.

There is just one woofer in a HomePod but this fact does not completely destroy the stereo effect because the human ear and brain are not as good at detecting the direction of very low frequency sounds. Nevertheless, when two HomePods are able to work together they will do an even better job, especially if a track has a bass guitarist mixed to one side.

I can’t wait to hear it. I just listened to The Accidental Podcast which contained an awesome deep dive into the technical implementation of AirPlay 2. However, the guys on there seemed to base their expectations of the HomePod audio output on its size. This is kind of like thinking the camera in iPhone won’t be as good as a physically larger 1990s-era digital camera. Computational audio will enable HomePod to punch way above its size and it is a quantum leap forward compared to anything else in this price range.

Tell all that to Brian Tong at CNET. Man that dude is an Apple hater disguised as a self proclaimed fan. His shtick is saying he loved the Apple of the past and everything today is dog shit, while praising and loving all things google and Alexa and amazon. Hahah he’s entertaining but not very well versed in tech. Not like the guys on the Apple Insider podcast, for example.

Hurry up Apple and get that stereo firmware update to us so I have an excuse to purchase a second HomePod.