Apple's cloud-based Dictation feature, currently supported on the new iPad and as part of the broader Siri voice assistant feature of iPhone 4S, converts speech to text virtually anywhere.

It works by sending audio recordings of captured speech to Apple's servers, which respond with plain text. While it doesn't go as far as Siri, Dictation does cross-reference the names and assigned nicknames of your contacts to better understand what you are saying.
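As a rough illustration of the contact cross-referencing idea, the sketch below nudges transcribed words toward known contact names. It is a toy example only: Apple has not published how its servers use contact data, and the function name `bias_toward_contacts`, the `cutoff` value, and the fuzzy-matching approach are all assumptions made for illustration.

```python
import difflib


def bias_toward_contacts(words, contact_names, cutoff=0.8):
    """Replace words that closely resemble a contact name with that name.

    Hypothetical helper: a stand-in for whatever matching a real
    recognizer performs, not a description of Apple's service.
    """
    # Map lowercase forms back to the spelling stored in the address book.
    lookup = {name.lower(): name for name in contact_names}
    corrected = []
    for word in words:
        # Keep at most one candidate whose similarity ratio meets the cutoff.
        matches = difflib.get_close_matches(
            word.lower(), list(lookup), n=1, cutoff=cutoff
        )
        corrected.append(lookup[matches[0]] if matches else word)
    return corrected


if __name__ == "__main__":
    print(bias_toward_contacts(["call", "katelyn"], ["Kaitlyn", "Dad"]))
```

A real recognizer would more plausibly weight candidate names during decoding of the audio rather than patch the finished text, so treat this as a picture of the idea and nothing more.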

Similar to Siri or Dictation on the new iPad, Dictation on Macs running OS X Mountain Lion pops up a simple mic icon when activated, which listens until you click or press a key to finish.

Just as with Siri or Dictation on the new iPad, Dictation under Mountain Lion is quite fast and highly accurate, but it does require a network connection to function. If you don't have a network connection, the Dictation input icon will simply shake, indicating that it is not available.

Anything you say can be used to improve your dictation

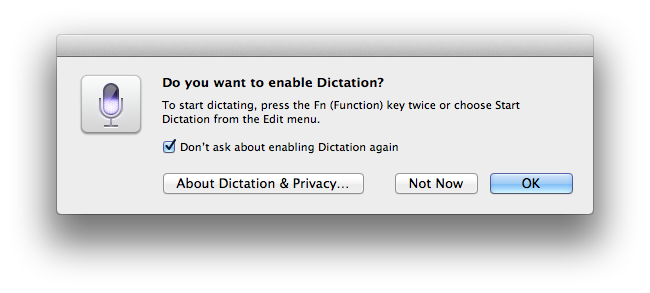

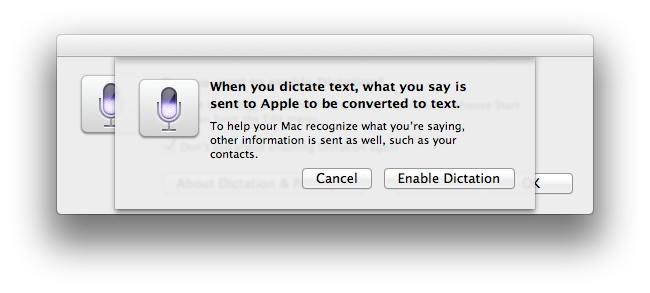

Apple appears to be exercising great caution in highlighting the privacy issues related to using Dictation. The service is turned off by default, and turning it on from System Preferences requires clicking through a notice that various types of local data, including Contacts, are sent to Apple's servers in order to recognize the speech you're trying to convert to text.

Privacy on parade

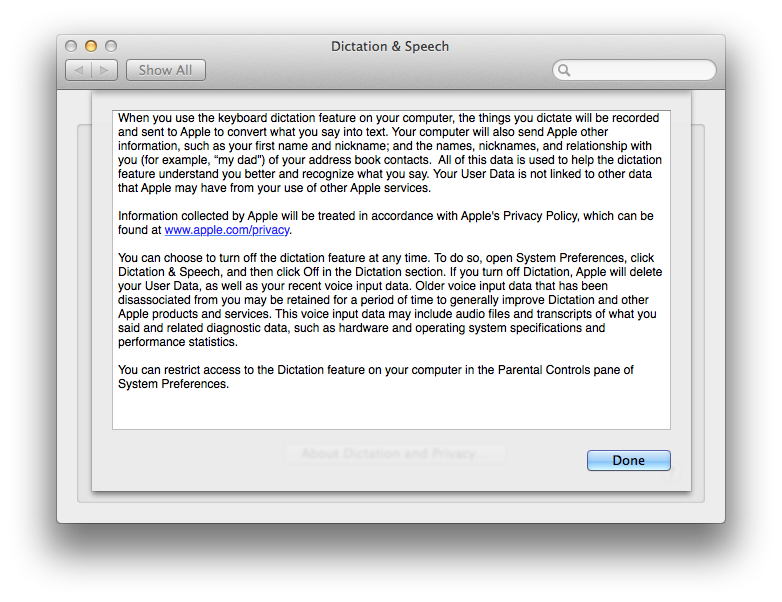

For an even longer discussion of what's involved, you can click the "About Dictation & Privacy" button, which presents the following explanation:

"When you use the keyboard dictation feature on your computer, the things you dictate will be recorded and sent to Apple to convert what you say into text. Your computer will also send Apple other information, such as your first name and nickname; and the names, nicknames, and relationship with you (for example, “my dadâ€) of your address book contacts. All of this data is used to help the dictation feature understand you better and recognize what you say. Your User Data is not linked to other data that Apple may have from your use of other Apple services.

"Information collected by Apple will be treated in accordance with Apple's Privacy Policy, which can be found at www.apple.com/privacy.

"You can choose to turn off the dictation feature at any time. To do so, open System Preferences, click Dictation & Speech, and then click Off in the Dictation section. If you turn off Dictation, Apple will delete your User Data, as well as your recent voice input data. Older voice input data that has been disassociated from you may be retained for a period of time to generally improve Dictation and other Apple products and services. This voice input data may include audio files and transcripts of what you said and related diagnostic data, such as hardware and operating system specifications and performance statistics.

"You can restrict access to the Dictation feature on your computer in the Parental Controls pane of System Preferences."

Unlike the virtual keyboard on iOS devices, your Mac has no ability to sprout an extra mic key just to initiate Dictation. However, you do have a little-used key that Apple has assigned by default as a conveniently accessible way to begin Dictation.

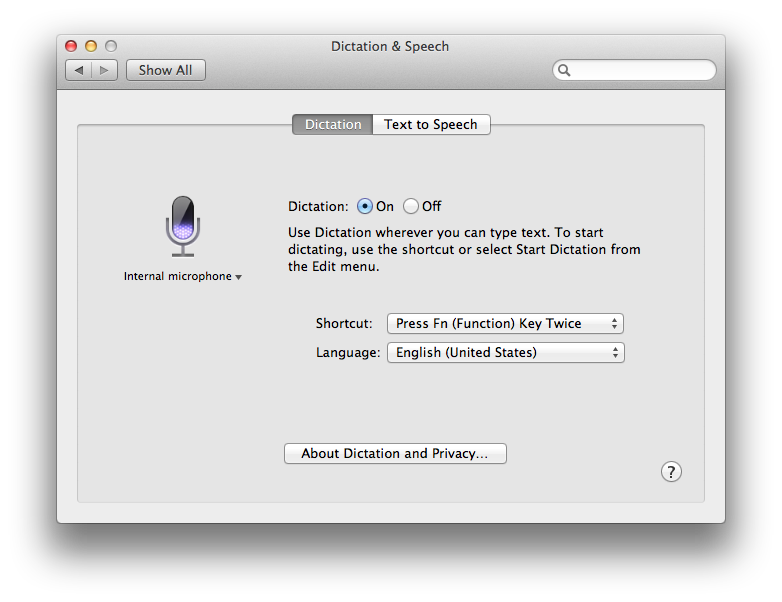

Once enabled, the new Dictation feature can be started by pressing the lower-left Function (fn) key twice. This brings up a microphone popup at the insertion point in the current text field, whether in a document, a Finder search field, within a web page, or any other standard region for entering text.

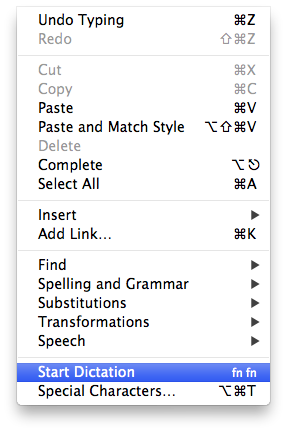

Alternatively, you can select Start Dictation from the Edit menu within the active app. The Edit menu in TextEdit shows the shortcut of pressing the Function key twice.

You can also assign either the right or left Command key (Apple's "propeller" key), or both, to serve as the double-tap signal to begin Dictation, or you can enter another key combination of your choosing to trigger it.
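For readers curious how a double-tap shortcut like the one described above can be recognized, here is a minimal sketch. It is not Apple's implementation: the function name `is_double_tap` and the half-second window (`max_gap`) are assumptions chosen for illustration.

```python
def is_double_tap(press_times, max_gap=0.5):
    """Return True if the two most recent key presses form a double tap.

    press_times: timestamps in seconds, oldest first, for presses of the
    trigger key (for example fn). max_gap is a hypothetical threshold.
    """
    if len(press_times) < 2:
        return False
    # A double tap is two presses no more than max_gap seconds apart.
    return (press_times[-1] - press_times[-2]) <= max_gap


if __name__ == "__main__":
    print(is_double_tap([10.0, 10.3]))
    print(is_double_tap([10.0, 11.0]))
```

A production version would also need to discard the pair if any other key was pressed in between, so that ordinary use of a modifier key does not trigger Dictation by accident.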

You can also set Dictation to use either the internal microphone or a plugged-in mic, or leave it at its default setting, which is automatic. Plug in an iPhone-style pair of headphones with an integrated mic, or connect a dedicated USB microphone or line-in mic, and Dictation will automatically begin using it as the most appropriate input device unless you specify otherwise.
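The automatic behavior described above amounts to a simple preference order: an explicit user choice wins, then any external microphone, then the built-in one. The sketch below models that order under stated assumptions; the `InputDevice` type, its fields, and `choose_input` are hypothetical names, and the actual selection logic in OS X is not documented in this article.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InputDevice:
    """Hypothetical description of an audio input."""
    name: str
    external: bool  # True for headset, USB, or line-in microphones


def choose_input(
    devices: Sequence[InputDevice], preferred: Optional[str] = None
) -> Optional[InputDevice]:
    """Pick an input device: user choice, then external, then first listed."""
    # 1. Honor an explicit user selection if that device is present.
    if preferred is not None:
        for device in devices:
            if device.name == preferred:
                return device
    # 2. Otherwise prefer the first external microphone.
    for device in devices:
        if device.external:
            return device
    # 3. Fall back to whatever is left (typically the internal mic).
    return devices[0] if devices else None


if __name__ == "__main__":
    mics = [InputDevice("Internal Microphone", False),
            InputDevice("USB Microphone", True)]
    print(choose_input(mics).name)
    print(choose_input(mics, preferred="Internal Microphone").name)
```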

Siri on the horizon?

The new iPad got the Dictation subset of Siri features when it arrived at the beginning of the year, but by the end of 2012, it will join iPhone 4S in getting the full Siri experience, thanks to iOS 6.

This suggests that Mountain Lion Macs will also eventually get an upgrade from basic Dictation to the full Siri feature set, although many of the features of Siri may seem more useful in a mobile device.

Apple is also working to improve upon Siri's bag of tricks for iOS users, having promised sports scores, movie information with reviews, expanded restaurant responses including table reservations, integration with Facebook and Twitter for posting updates, and the ability to launch apps by name.

Siri on desktop computers would likely benefit from a different set of features aimed more at voice control of the desktop, such as commands to invoke Mission Control or perform a Spotlight search.

Speech Recognition replaced with Dictation

Apple previously focused on voice control of the desktop environment, rather than accurate voice dictation, in the feature set currently presented in OS X Lion as "Speech Recognition."

Apple's feature set of "speakable items" that could be used to navigate menu bar items and switch between applications was first made part of the Mac system software back in 1993 on the Macintosh Quadra AV, part of an ambitious, pioneering effort to deliver advanced speech recognition under the program known as "PlainTalk."

That was almost 20 years ago, at a time when even the fastest desktop computers lacked the resources needed to rapidly and accurately decipher speech into text. Apple opted for a highly resource-efficient design built around commands to invoke tasks rather than turning natural speech into paragraphs of text.

In bringing iOS-style Dictation to the Mac, Apple has discontinued the seldom-used, rather outdated "Speakable Items" system, which was complex to configure, navigate and use. Dictation is, in contrast, incredibly simple. However, unlike the previous Speech Recognition system, Dictation relies on Apple's cloud services to work. This leaves open an opportunity for dedicated, specialized voice recognition systems that work locally and don't require a network link to function.

Text to Speech

In the other direction of turning text into synthesized speech, Mountain Lion retains the same default System Voice of Alex, which was first introduced in OS X 10.5 Leopard in late 2007. Alex replaced Vicki, the previous default voice that had introduced natural sounding speech in OS X 10.3 Panther in late 2003.

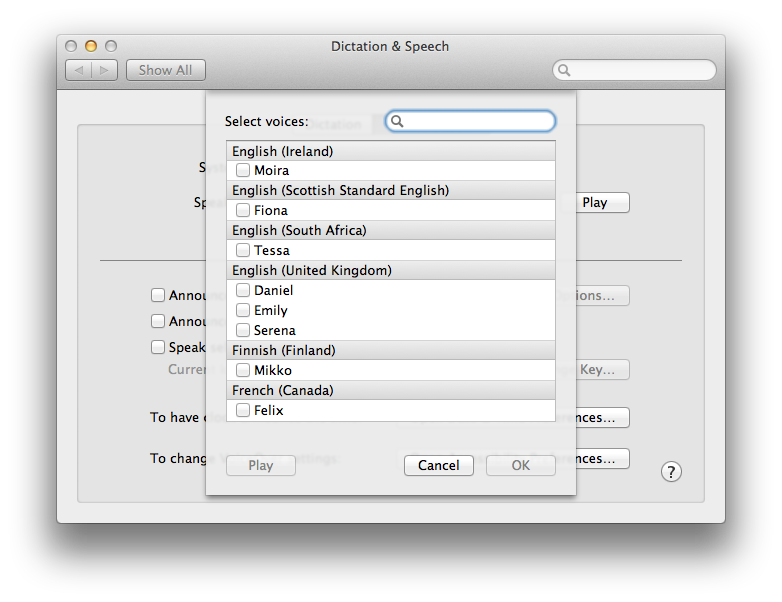

The current release of OS X Lion introduced a series of new, very high quality voices in both American English and other English accents, from British to Australian and Irish, as well as 21 other languages. AppleInsider first broke news of these new optional voices, which can be downloaded from Apple as desired from the System Voice/ Customize popup window.

Among the new voices are Tom and Jill, both very natural-sounding American English voices. As with Dictation, Text to Speech is turned off by default. It is invoked with the clumsier Option+Esc key sequence, or from the Speech menu hidden away in most apps' Edit menu. Ironically, one very useful task Siri on the Mac could provide is to allow users to convert selected text to high quality speech, using their voice.

Daniel Eran Dilger

62 Comments

This is a big feature for my wife, she is dyslexic and finds it far easier to type an email or document via speech. We tried DragonDictate but it was not nearly as accurate as the dictation feature built into the iPad.

DragonDictate is a Nuance product. The Nuance speech recognition engine is a learning system. The more you use it, the more accurate it gets. Since Apple's use of the engine is through their servers, it of course would be far more accurate after the millions and millions of translations it has performed since last October.

I am amazed at how much better it has become since its release; it almost always recognizes what I say.

Why recognition is done on Apple's servers instead of at home is beyond me.

The only thing I'm worried about (even tho i do use dictate on my iPad & iPhone & soon on my macs) how will this compete in the broader market, now that google has introduced offline dictation in Jelly Bean.

I hope that at some point we have the option to have an offline dictate, even if it isn't as accurate as being online, id like it to be there.

Will now Apple make itself incompatible with Dragon? I do NOT want to have the choice forced between "no voice recognition" and "Internet-based voice recognition". I want Dragon, with its ondisk voice database trained to my voice... besides, it wasn't exactly free, so I'd be annoyed to lose ability to use it.

Apple already has used that kind of annoying tactic. I just hope I'm needlessly scared.