The latest HomePod firmware leak suggests that Apple is planning to introduce new machine learning capabilities that will allow the iPhone's camera to recognize objects and types of scenes in real time, and adjust settings accordingly for the best possible photo.

For years, iOS has used artificial intelligence to recognize seemingly anything through the native Photos app. As detailed by AppleInsider earlier this year, this little-known feature identifies items with great specificity and surprising accuracy, from common scenic queries like "beaches" to something as specific as "avocados."

Those same machine learning capabilities may be coming in real time to the iPhone's camera, perhaps with the flagship "iPhone 8," based on new details unearthed by developer Guilherme Rambo. Specifically, the details reveal a new feature called "SmartCam" that will presumably adjust the settings of the camera based on a detected scene.

Apple and virtually every other camera maker on the market already employ similar capabilities in the form of face detection technology. But the details unearthed from Apple's own HomePod firmware suggest the new "SmartCam" capabilities will go well beyond that.

Types of scenes identified in the code include a photo of a baby, a pet, the sky, snow, sports, or a sunset. Others include fireworks, foliage, or a document.

The specificity of some of the options — such as a bright stage — suggests that the capabilities of Apple's AI could be akin to what the company already offers through the Photos app. In fact, baby, snow, sport, sunset, fireworks and document are already searchable categories within the Photos app, suggesting that Apple may be leveraging some of the same on-the-fly calculations already found in iOS to apply to pictures taken in real time.

Considering the so-called "SmartCam" feature was not announced by Apple at its Worldwide Developers Conference, it seems unlikely that it will be available in iOS 11 on legacy hardware. Instead, given that it remains a secret, it's possible that "SmartCam" could be exclusive to new handsets coming this year, referred to as the "iPhone 8" and "iPhone 7s" series.

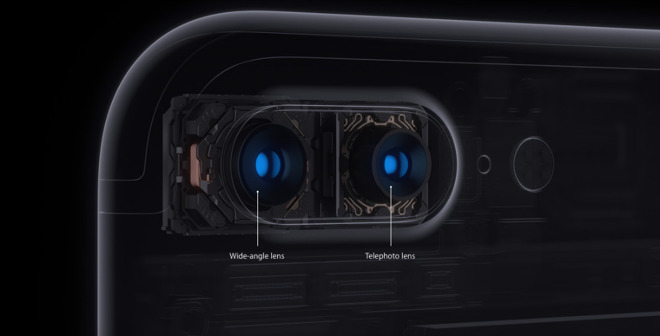

Apple's "iPhone 8" is expected to include a new vertical camera array, featuring two lenses akin to the iPhone 7 Plus. Apple is also expected to unveil a 5.5-inch "iPhone 7s Plus" with dual cameras in a horizontal arrangement, just like on the iPhone 7 Plus.

Using dual lenses allows for new capabilities such as the Portrait mode currently exclusive to iPhone 7 Plus, which blurs the background around a subject to simulate the kinds of shots that were previously only available with larger-format cameras. Apple's smaller 4.7-inch "iPhone 7s" is expected to continue to have just a single lens.

Even Apple's critics are known to heap praise on the company's camera technology. Earlier this week, former Android chief Vic Gundotra declared that the iPhone 7 Plus camera is years ahead of competing Android options, thanks to Apple's marriage of hardware and software developed in-house.

Neil Hughes

1 Comment

New Cameras + Machine Learning + AR = some incredible stuff coming our way soon.

Every time I think I have a good idea of the range of possibility with AR I see a new example that floors me.