After years of controversies over Consumer Reports' assessments of Apple products, AppleInsider paid a visit to the organization's headquarters for an inside look at the testing process.

Over the last few years, there's been something of a cold war between many Apple enthusiasts and Consumer Reports, the influential magazine that conducts tests and publishes rankings and assessments of products across a wide variety of categories. Some Apple fans see Consumer Reports as either unfair to Apple's offerings, or even biased in favor of Apple's competitors.

The first major tiff between Apple fans and Consumer Reports was the Antennagate brouhaha in 2010, which ultimately had a lot to do with a misunderstanding related to which information was and wasn't behind Consumer Reports' paywall.

There was a similar controversy over the 2016 MacBook Pro release. In its initial assessment of the computer, Consumer Reports denied a recommendation to a new MacBook Pro for the first time ever, citing a battery life issue. After a few weeks of back-and-forth, CR granted its recommendation.

Other Apple/CR controversies in recent years have included the iPhone X falling behind both the Galaxy S8 and iPhone 8 series last December, and the HomePod speaker ranking behind Google and Sonos offerings upon its initial review in February.

Following some often-contentious back-and-forth between Consumer Reports and AppleInsider, an invitation was extended to visit the Consumer Reports offices, tour their testing facilities, and speak with some of the organization's decision-makers. On May 17, we took them up on it, spending about two hours at Consumer Reports' headquarters in Yonkers, N.Y.

On the visit, we got some answers from Consumer Reports about their process, their standards, their relationship with Apple, and exactly what happened in those various controversies.

Touring the facilities

Although Consumer Reports' offices are located in a nondescript building in a drab industrial area just off the Saw Mill Parkway, about 20 miles north of New York City, their interiors are exceptionally sleek and modern, with a beautiful atrium and wide hallways. They're much more aesthetically pleasing than most magazine offices that I've visited in the past. And inside those offices is a dedicated area for product testing.

Consumer Reports tests audio products in a small room with a couch in the middle and a speaker setup directly in front of it. The speaker being tested is placed in the middle of a shelving unit, surrounded by reference speakers.

"We're trained to hear how it's supposed to sound," Elias Arias, Consumer Reports' chief speaker tester, said. "We train the panelists to discern characteristics of the sound."

The same sounds, including a particular jazz CD, are used for every test. Also, the tests are not conducted blindly; testers always know which speakers they are testing.

Audio tests are conducted by Arias, the project leader, and one additional tester. A third person is called in to break a tie.

Also part of the audio testing is an anechoic chamber, a completely soundproof room that is used for measuring frequencies. The chamber, technically, isn't even part of the Consumer Reports building — it has its own foundation, and even its own fire alarm.

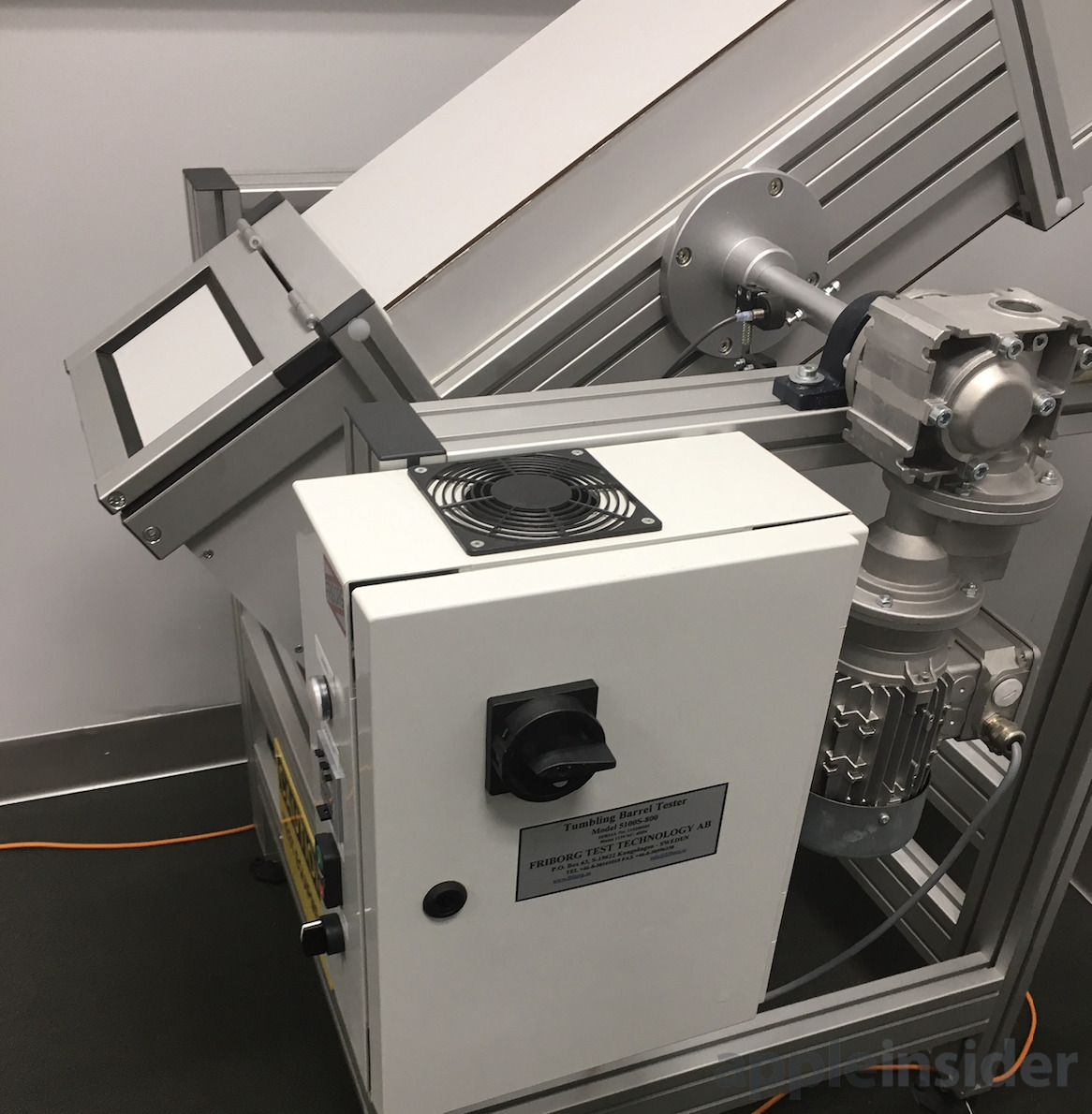

The phone-testing area consists of a device used for the drop and tumbling regimen, the same machine you may have seen in AppleInsider's past coverage. Other drop tests are performed in other locations in the facility, onto carpet and wood.

There's also a water immersion test cylinder that the company purchased from a SCUBA supply company.

Laptop testing was in progress during our visit, so we didn't get a great look at that part of the facility. At least some portion of the testing was literally in the dark: as they ushered me through, the lights were off, presumably to test what the screens looked like in a darkened room. Other aspects of the laptop testing include battery tests and other measures of laptop quality.

Theories of testing

In our meetings with Consumer Reports decision makers, we discussed how the organization conducts its testing. They had some things to say about their values when it comes to testing: It's scientific, analytical, and done according to the numbers.

They described how the testing process begins.

"We have market analysts that look at what products we should be testing. And they will look at what products are unique and what products are widely available in the market," Maria Rerecich, director of electronics testing team, said. "So they make a decision about products in that category we should test. We have shoppers that buy it anonymously, but we buy it in the store and buy it at the website, so we buy it when it's available to consumers. We rate products that we have purchased in regular retail channels. We bring those in, and we do our test."

In some cases, Rerecich added, Consumer Reports will arrange to have a product loaned to them for testing purposes, but they never accept free products permanently.

"Now we've already developed the test protocol, because we want to test every product in that category the same way, so we have defined protocols and procedures," Rerecich said. "So once we bring the product in we will use those protocols and procedures to test it in that way. And whatever test we do on it, we do on it, and then we roll up scores for each of the attributes we've tested, create ratings as a result and that's what the ratings become."

"We have to do comparative testing," Rerecich added. "Testing has to be equal. We can't just look at one product and go 'hey, it's good,' we just say, 'well compared to these other four, it's better for this, it's worse for that.'"

Who the testers are

All of the product testers are employees of Consumer Reports, with many of them on the electronics side having some background in electrical engineering or computer science, and sometimes the specific technology that they're testing. Mark Connelly, the organization's overall director of testing, has a P.E. degree, while Rerecich holds a master's in engineering from MIT and worked for nearly thirty years in the semiconductor industry, including as a chip designer.

"Our testers have all kinds of different experiences," Rerecich said. "The smartphone team knows about cellular bands, and they have a very strong grounding of cell phones and how those work. Just as the TV tester knows how TVs work, and they know from the technologies of 4K, and HD."

She added that while Consumer Reports will occasionally hire someone away from the industries whose products are being tested, it more often goes the other way, with testers being hired away by industry from Consumer Reports.

Manufacturer relationships

The team revealed that they do a great deal of outreach to manufacturers before, during and after the publication process.

Consumer Reports will loop in manufacturers during testing when they have questions, or "things don't add up," Connelly, the director of testing, said. And the communications with manufacturers are with all sorts of personnel: engineers, technical people, PR people and even lawyers. Consumer Reports also often keeps lines of communication open with manufacturers even when they're not testing current products, to give the manufacturers the opportunity to "explain new and upcoming products and technology."

The Consumer Reports official most responsible for this process is Jennifer Shecter, the senior director of content impact and outreach.

"We have manufacturers will come in and tell us what products are coming down the pike. And so that we know what their new product lines are so that we know what's happening so we can plan for that," Shecter said. "And then while we're testing the product, if we have questions we'll contact the manufacturer. And if we get results where we're going to call up the manufacturer in any sort of way, we will contact them beforehand, and get their comment and have a robust back and forth."

Testing for electronics products typically takes three to four weeks. However, Consumer Reports will sometimes run "First Look" features, sharing their first impressions of certain high-profile products, often published within a day of the organization receiving the product.

As for CR's overall mission, the officials say serving the consumer comes first. And this includes their policy outreach work, such as a meeting in March with Commissioner Elliot F. Kaye of the U.S. Consumer Product Safety Commission, which was attended by Shecter and others from Consumer Reports. They're also at work on The Digital Standard, a nascent effort to establish "a digital privacy and security standard to help guide the future design of consumer software, digital platforms and services, and Internet-connected products," many of them associated with the Internet of Things.

"We're a mission-focused organization, we're a nonprofit organization, and our mission is to serve our consumers," said Liam McCormack, Consumer Reports' vice president of research, testing and insights. "Clearly, we do that in particular through things like safety and other issues. But clearly we need to be a sustainable organization, so we need successful products that will appeal to Americans that they will pay for, and clearly we must serve those customers as well."

We had questions about some of the controversies of the past, as well as whether Consumer Reports has been historically fair to Apple. We'll tackle those answers in part two on Wednesday.

Stephen Silver


30 Comments

I like most of what they had to say about their practices, except this: "Also, the tests are not conducted blindly; testers always know which speakers they are testing." This just seems crazy to me for any sort of "non-biased" critical review of speakers. Obviously all features can't be conducted blindly (voice assistants, for example) but SURELY the sound quality can be. Disappointing.

Consumer Reports hates Apple. They have been completely wrong in every single one of their reviews.

Deja vu all over again. Not seeing the part where CR has figured out the metrics for a valid test of an active speaker with essentially 360 degree sound in the horizontal plane. If the test is treating an active speaker, like the HomePod, as a conventional two way speaker, CR is doing it wrong. Still, if my instincts are correct and competitors are working on similar active systems, it will only be a short time until the consumer and the market realize that these aren't conventional speakers, and figure out some better test regimes.

So AI shamed CR into telling everyone how they are testing.

In all honesty, with the wide range of testing CR does, it is extremely hard to be a subject matter expert on so many topics. I would put CR testing somewhere between user monkey testing (just randomly doing things to see if something goes wrong) and expert testing (where you have complete understanding of the technology and real-world uses and failures). I have lots of product testing experience, and one thing I have told people about this kind of testing: any test engineer can design a test which every product passes or fails; however, this teaches you nothing about the product's performance. The hardest thing to do is replicate real-world use cases and results. This takes years to understand, and the only way to do it is to have real-world experience and data from products in the field, which CR does not have; they only have anecdotal information.

Case in point: CR said they "train the panelists to discern characteristics of the sound." You cannot train people to do this; please refer to the whole Yanny/Laurel debate.

People's hearing is influenced by too many things, and what you hear is not always what other people hear. This is why engineers use test equipment to determine how a speaker performs in a specific environment. I had a friend who was an audiophile and loved his music and his equipment. He would go to great lengths to explain what he was hearing and why it was important, and I could not hear what he was hearing, or he could not explain it to me. But hook it up to test equipment and we both could see it in the data.

Their data is good at telling you whether you may or may not have an issue, and what your end experience may be, but it is not going to tell you if you have a good or bad product.