Apple has come up with a way it thinks could provide headphone and earphone users the optimal listening experience, using a variety of signal processing techniques to make the sound seem like it is being heard without the use of headphones at all.

Granted by the U.S. Patent and Trademark Office on Tuesday and initially filed on September 22, 2016, the patent for "Spatial headphone transparency" describes ways a pair of headphones could adjust the sound from an audio source, changing the way the user hears it.

Apple uses the term "acoustic transparency," which it also refers to as transparent hearing or a "hear through mode," in which a headset picks up ambient sound from the wearer's local environment and then, after processing, includes it in the audio feed played to the wearer. If performed correctly, this could make the user's listening experience "as if the headset was not being worn."

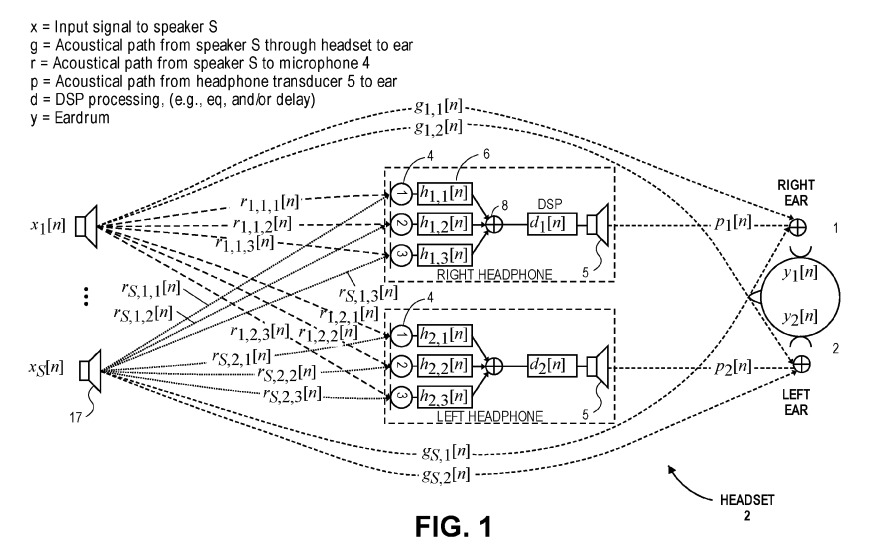

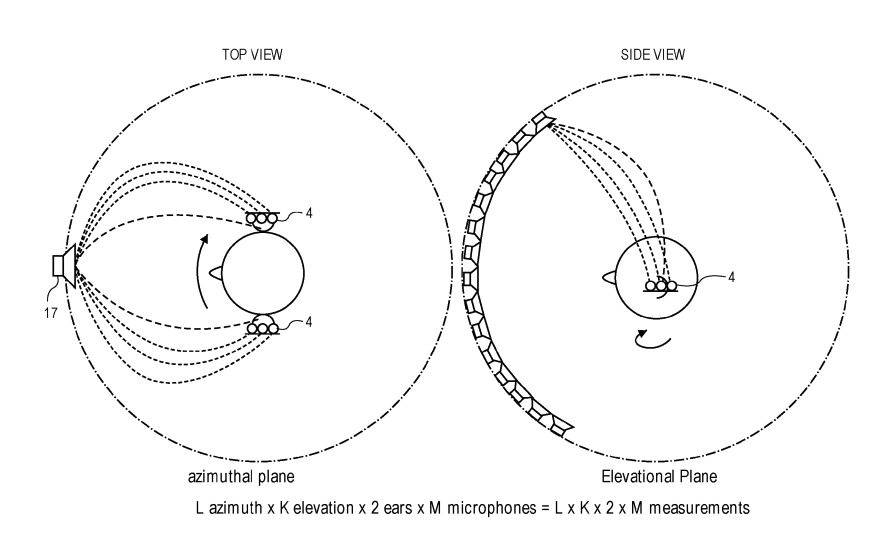

In one section, the patent suggests using microphones to pick up the sounds the user would have heard if they were not wearing headphones, then processing the left and right signals separately through an acoustic transparency filter before combining them with the audio the user is listening to. The composite audio is then played through the left and right speaker drivers.
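The signal path described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Apple's implementation: the patent coverage does not give filter coefficients, so each ear's transparency filter is modeled here as a generic FIR filter with hypothetical taps, and `fir_filter`, `hear_through_mix` and `ambient_gain` are names invented for this example.

```python
# Minimal sketch of a "hear-through" mix, ASSUMING each ear's transparency filter
# can be modeled as a simple FIR filter. Names, taps and gain are hypothetical.
from typing import List, Sequence, Tuple


def fir_filter(signal: Sequence[float], taps: Sequence[float]) -> List[float]:
    """Direct-form FIR filter: y[n] = sum_k taps[k] * x[n - k], starting from silence."""
    out: List[float] = []
    for n in range(len(signal)):
        acc = 0.0
        for k, h in enumerate(taps):
            if n - k >= 0:  # samples before the start of the signal are treated as zero
                acc += h * signal[n - k]
        out.append(acc)
    return out


def hear_through_mix(
    mic_left: Sequence[float],
    mic_right: Sequence[float],
    music_left: Sequence[float],
    music_right: Sequence[float],
    taps_left: Sequence[float],
    taps_right: Sequence[float],
    ambient_gain: float = 1.0,
) -> Tuple[List[float], List[float]]:
    """Filter each ear's microphone signal separately, then add it to that ear's
    playback audio to form the speaker-driver signal. All inputs are assumed to
    have the same length (zip would silently truncate otherwise)."""
    ambient_left = fir_filter(mic_left, taps_left)
    ambient_right = fir_filter(mic_right, taps_right)
    drive_left = [m + ambient_gain * a for m, a in zip(music_left, ambient_left)]
    drive_right = [m + ambient_gain * a for m, a in zip(music_right, ambient_right)]
    return drive_left, drive_right
```

Keeping a separate filter for each ear is what would let each side retain its own spatial cues; a real headset would run this continuously on short blocks of samples rather than on whole lists.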

The filter in question will attempt to preserve any spatial filtering effects that would occur naturally, such as the way the user's head, shoulders, and other anatomical features affect the timbre and other acoustic qualities of the ambient sound. The filter will also attempt to avoid coloring the speaker driver signal, such as by reducing resonances at higher frequencies, to make the effect more convincing.

In some embodiments, Apple also attempts to use the system to eliminate the effect of headphone-based music being heard "within the user's head," instead making it sound as if it is coming from speakers positioned directly above the user. This is thought to help reduce acoustic occlusion of ambient sounds, making it easier for the user to identify nearby noises without them clashing with the audio track.

Apple does have experience with audio processing that could potentially make such a system a reality. The adaptive audio function of the HomePod is capable of detecting the environment and optimizing its output to fill a room with sound, regardless of nearby obstacles.

Apple is also rumored to be working on a new headphone project separate from the Beats line, one that could provide noise-cancellation abilities. Thought to be a premium audio peripheral, the headphones are currently speculated to arrive no earlier than the holiday shopping period at the end of 2018.

Malcolm Owen


18 Comments

About 30 years ago Sanyo had a portable cassette player that had an excellent mic to incorporate ambient speech into the music you were listening to.

The music would continue playing, but at a reduced volume, and the speaker's voice came through crystal clear. You had to flick a switch manually, of course.

To a degree, this patent is the hi-tech digital version of the same idea.

This sounds a lot like Sonic Holography, which was patented by Bob Carver and found on the lineup of Carver preamps and receivers during the '80s and '90s. Essentially, Bob injected a portion of the left channel, 180 degrees out of phase, into the signal going to the right speaker, and simultaneously injected a portion of the right channel, 180 degrees out of phase, into the signal going to the left speaker.

The idea was that any listener on axis (i.e., between the two speakers) would hear the right channel as coming uniquely from the right speaker, because the left channel would "cancel" out. In other words, as we are basically predators, our right ear hears both speakers, so our brain "targets" the speaker as the source. By using wave cancellation, the right ear would theoretically hear only the right speaker, and the left ear only the left speaker, as long as you remained "somewhat" on axis between the speakers.
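The cross-feed described above can be sketched as follows. This is a simplified illustration of the general idea, not Carver's actual circuit: `crosstalk_cancel`, the injected fraction `amount`, and the optional `delay` (in samples) are hypothetical, and a practical design would also need to account for timing and frequency response in ways this sketch ignores.

```python
# Simplified cross-feed cancellation: each output channel receives an inverted,
# scaled (and optionally delayed) copy of the opposite channel.
# `amount` and `delay` are HYPOTHETICAL parameters, not Carver's published values.
from typing import List, Sequence, Tuple


def crosstalk_cancel(
    left: Sequence[float],
    right: Sequence[float],
    amount: float = 0.5,
    delay: int = 0,
) -> Tuple[List[float], List[float]]:
    """Return (out_left, out_right) where
    out_left[n]  = left[n]  - amount * right[n - delay]
    out_right[n] = right[n] - amount * left[n - delay]
    Samples before the start of the signal are treated as zero; inputs are
    assumed to be the same length."""
    out_left: List[float] = []
    out_right: List[float] = []
    for n in range(len(left)):
        past_right = right[n - delay] if n - delay >= 0 else 0.0
        past_left = left[n - delay] if n - delay >= 0 else 0.0
        out_left.append(left[n] - amount * past_right)  # inverted right-channel feed
        out_right.append(right[n] - amount * past_left)  # inverted left-channel feed
    return out_left, out_right
```

With `delay=0`, the difference `out_left - out_right` works out to `(1 + amount) * (left - right)` while the sum becomes `(1 - amount) * (left + right)`, so stereo difference information is emphasized relative to center-panned material. That widening is the intuition behind the broader sound stage described here.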

On a decent stereo system, the effect was quite impressive: the true "sound stage" was accurately reproduced, and you "heard" sounds coming from outside the physical speaker placement. Blindfolded and given a ping-pong ball gun, people would aim as if the speaker were 10+ feet to the right of the right channel. Using Sonic Holography and a set of omnidirectional speakers, you could "hear" a voice coming from a point about 3 feet to the right of the speaker and about 5 feet off the ground, as if the performers were in the room playing live.

So, I'm pretty excited to see where Apple takes this. It's been many years since Bob Carver shook up the audio world: first with his Magnetic Field amplifiers, then with Sonic Holography, then with an asymmetrical tuning system for his FM tuner, and with some other work involving vinyl records. Bob was quite the genius.

I don’t know, having the sound appear to come from above your head doesn’t seem much better than having it come from within one's head. But perceiving the music as coming from in front of you, like when you listen to normal stereo speakers... now that would be the holy grail, providing an accurate sound stage with natural perspective.

This could also be very useful for gaming applications.

Heck, maybe for some uses we can ditch the headphones altogether before too long:

https://www.businessinsider.com/noveto-focused-audio-technology-could-make-headphones-obsolete-2018-6

Between the new Bluetooth and Wi-Fi standards, focused audio tech, and now potential Apple audio changes, it seems like a bad time to make a big investment in expensive headphones or smart speakers. They could end up quaint and almost abandoned old-school tech within just a few years.