A new Apple patent describes a system for registering whether a user is or is not touching a real object in a virtual space, using depth measurements of the fingertip together with calculations based on camera angles.

Future augmented and virtual reality systems, and future updates to Apple's ARKit, could display virtual objects alongside real ones and allow users to manipulate both. The difficulty so far has been in judging when the user is actually touching such an object, as opposed to merely being very close to it.

Apple's newly granted patent, "Depth-based touch detection," US Patent No 10,572,072, details the problems inherent in relying solely on camera positions to calculate whether a touch has occurred.

"Using cameras for touch detection [does have] many advantages over methods that rely on sensors embedded in a surface," says the patent. It describes Face ID-like systems where "vision sensors [or] depth cameras" provide a measurement of distance between the lens and the touch surface.

"[However, one] approach requires a fixed depth camera setup and cannot be applied to dynamic scenes," it continues. Another approach identifies the user's finger, then marks surrounding pixels until enough have been recorded that the finger is judged to be touching the surface. "However... this approach... can be quite error prone."

These existing systems rely on what Apple calls "predefined thresholds," fixed cutoffs on one side of which a touch is assumed and on the other side of which it is not. "They also suffer from large hover distances (i.e., a touch may be indicated when the finger hovers 10 millimeters or less above the surface; thereby introducing a large number of false-positive touch detections)," the patent adds.
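To see why a fixed cutoff produces false positives, here is a minimal, hypothetical Python sketch of a threshold test. The function name and the 10 mm constant are illustrative assumptions (the number simply echoes the patent's hover example); this is not code from Apple or from the patent.

```python
# Hypothetical illustration of a "predefined threshold" touch test.
HOVER_THRESHOLD_MM = 10.0  # assumed cutoff, echoing the patent's 10 mm hover example


def is_touch_fixed_threshold(finger_depth_mm: float,
                             surface_depth_mm: float,
                             threshold_mm: float = HOVER_THRESHOLD_MM) -> bool:
    """Report a touch when the fingertip is within threshold_mm of the surface,
    measured along the camera's viewing direction (depth from the camera)."""
    gap_mm = surface_depth_mm - finger_depth_mm  # fingertip sits in front of the surface
    return gap_mm <= threshold_mm


# A fingertip hovering 8 mm above the surface is still reported as a touch.
print(is_touch_fixed_threshold(finger_depth_mm=492.0, surface_depth_mm=500.0))  # True
```

Any finger hovering inside the cutoff is indistinguishable from one actually in contact, which is the false-positive problem the patent describes.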

Rather than replacing these systems outright, Apple's patent adds depth detection. "[A] measure of the object's 'distance' is made relative to the surface and not the camera(s) thereby providing some measure of invariance with respect to camera pose," says the patent.
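The point about camera pose can be illustrated with basic geometry. In the hypothetical Python sketch below, the helper names and coordinates are made up, and modelling the tabletop as a flat plane is an assumption (the patent also covers non-planar surfaces); it is not the patent's actual method. Moving the camera rotates and translates both points and rotates the surface normal, but the fingertip's distance to the surface stays the same.

```python
import math

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def signed_distance_to_plane(point: Vec3, plane_point: Vec3, plane_normal: Vec3) -> float:
    """Signed distance from point to the plane through plane_point with normal plane_normal."""
    norm = math.sqrt(dot(plane_normal, plane_normal))
    return dot(sub(point, plane_point), plane_normal) / norm


def rotate_x(v: Vec3, angle: float) -> Vec3:
    """Rotate v about the x-axis by angle radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (v[0], c * v[1] - s * v[2], s * v[1] + c * v[2])


def to_new_camera(v: Vec3, angle: float, t: Vec3) -> Vec3:
    """Hypothetical second camera pose: rotate a point, then translate it."""
    r = rotate_x(v, angle)
    return (r[0] + t[0], r[1] + t[1], r[2] + t[2])


# Made-up values in metres, expressed in camera A's frame.
fingertip = (0.02, 0.01, 0.005)
table_point = (0.0, 0.0, 0.0)
table_normal = (0.0, 0.0, 1.0)

d_a = signed_distance_to_plane(fingertip, table_point, table_normal)

# Move the camera: points are rotated and translated, the normal only rotated.
angle, shift = math.radians(35.0), (0.1, -0.3, 0.6)
d_b = signed_distance_to_plane(
    to_new_camera(fingertip, angle, shift),
    to_new_camera(table_point, angle, shift),
    rotate_x(table_normal, angle),
)
print(round(d_a, 6), round(d_b, 6))  # 0.005 0.005
```

A depth value measured from the camera would change under the same move; the fingertip-to-surface distance does not.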

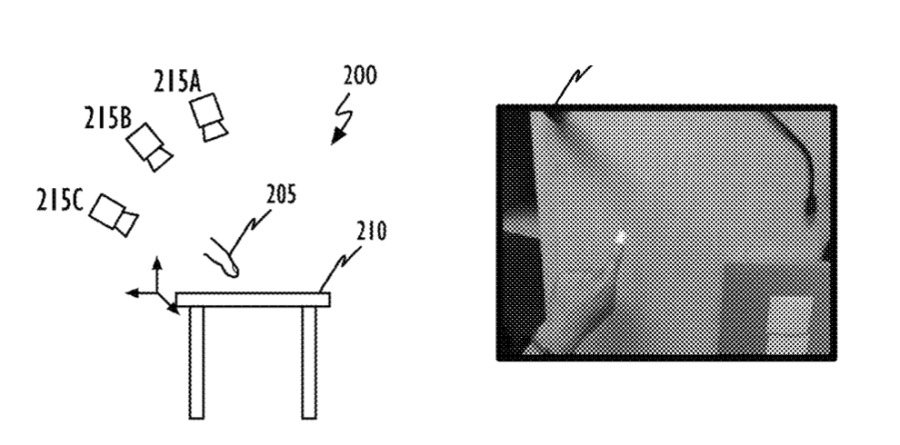

Detail from the patent showing a drawing of a multi-camera system and (right) a photo of a fingertip being detected

Apple's system would first identify an object such as "a finger, a stylus or some other optically opaque object." Next, it would identify the "planar or non-planar" surface.

Detection would continue as the user moves, giving the system a series of readings over time, which Apple refers to as a "temporal sequence of outputs."
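One common way to turn such a sequence of readings into stable touch decisions is hysteresis: a touch starts only when the fingertip comes very close to the surface and ends only once it has clearly moved away. The Python sketch below is a hypothetical illustration of that general technique; the function name and the 3 mm and 6 mm thresholds are assumptions, and the patent does not necessarily process its temporal sequence this way.

```python
from typing import Iterable


def touch_states(distances_mm: Iterable[float],
                 on_mm: float = 3.0,
                 off_mm: float = 6.0) -> list[bool]:
    """Convert per-frame fingertip-to-surface distances into per-frame touch
    decisions. Two thresholds (hysteresis) keep the state from flickering when
    noisy readings hover around a single cutoff. Thresholds are hypothetical."""
    touching = False
    states: list[bool] = []
    for d in distances_mm:
        if not touching and d <= on_mm:
            touching = True   # close enough to start a touch
        elif touching and d >= off_mm:
            touching = False  # far enough to end the touch
        states.append(touching)
    return states


frames = [12.0, 7.5, 4.0, 2.5, 3.5, 2.8, 5.0, 6.5, 9.0]
print(touch_states(frames))
# [False, False, False, True, True, True, True, False, False]
```

The reading of 3.5 mm does not end the touch, even though it is above the 3 mm "on" threshold, because the state only switches off at 6 mm or more.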

The purpose of the system is to detect touch on surfaces that have nothing built into them to register the contact. "Computer functionality can be improved by enabling such computing devices or systems to use arbitrary surfaces (e.g., a tabletop or other surface) from which to get input instead of conventional keyboards and/or pointer devices (e.g., a mouse or stylus)," says the patent.

"Computer system functionality can be further improved by eliminating the need for conventional input devices; giving a user the freedom to use the computer system in arbitrary environments," it continues.

The patent is credited to two inventors, Lejing Wang and Daniel Kurz. Between them, they hold around 60 patents, including many related ones such as "Method and system for determining a pose of camera," and "Augmented virtual display."

This patent follows many AR and VR patents from Apple, most recently one that uses fingertip and face mapping tools to help with touch and interaction.

William Gallagher

2 Comments

One might imagine a near future where houses are decorated with AR artwork and virtual home decorations, the latest large-screen TV is merely an AR object, and one's experience of the real world outside is enhanced with floating pin markers for locating cars or businesses, all viewable through Apple's all-day, battery-powered Glasses.

My (non-expert) reading of the patent is that it has very little to do with AR/VR and a lot to do with virtual keyboards and other input devices. There is nothing in the patent that mentions AR or VR. The only time "virtual" appears is in a reference to another article/patent about virtual keyboards.

Imagine being able to use your iPad (or iPhone) with a full keyboard, but without a physical keyboard. The iPad's sensors can tell when your fingers are touching the non-existent keys.