Future iPhones could use thermal or infrared imaging to supplement regular vision cameras, making it easier to use Apple AR in dark or busy environments.

Apple really wants us to get away from the idea of AR involving us covering our heads with giant goggles and standing on the spot as games move around us. Instead, it's been looking at less obtrusive headsets like "Apple Glass," and now it is also investigating how to solve the extra problems that movement brings.

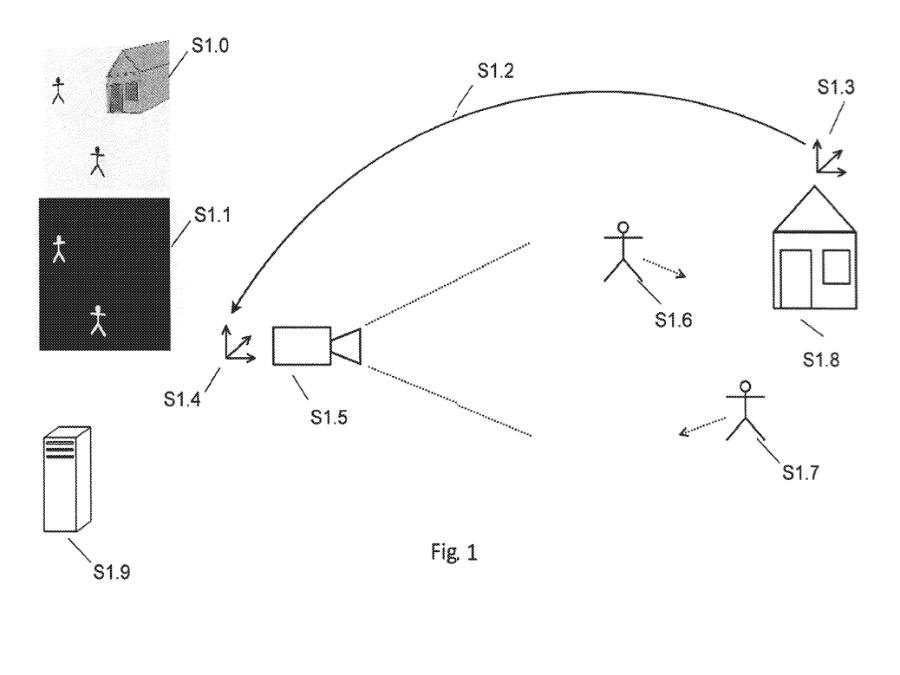

"Method and System for Determining at least one Property Related to at least Part of a Real Environment," is a newly-revealed patent application which at 22,000 words is only slightly longer than its title. While it does go into great and specific detail about multiple methods of achieving its aims, though, it is all focused on the same problem.

"Computer vision methods that involve analysis of images are often used, for example, in navigation, object recognition, 3D reconstruction, camera pose estimation, and Augmented Reality applications, to name a few," outlines the patent application.

"[However, when] a camera pose estimation, object recognition, object tracking, Simultaneous Localization and Tracking (SLAM) or Structure-from-Motion (SfM) algorithm is used in dynamic environments where at least one real object is moving, the accuracy of the algorithm is often reduced significantly," it says, "with frequent tracking failures, despite robust optimization techniques employed in the actual algorithms."

The clear, resulting problem is that AR can get things wrong when it's trying to position a virtual object within the real environment. Apple says that actually the problem lies a little deeper than that, and so the solution must as well.

"This is because various such computer vision algorithms assume a static environment and that the only moving object in the scene is the camera itself, which pose may be tracked," it continues. "This assumption is often broken, given that in many scenarios various moving objects could be present in the camera viewing frustum."

So any AR system will have all possible positioning details about itself (the headset, or the camera on the headset), but tracking everything else is difficult. And it's difficult for a combination of reasons, including the amount of data processing involved.

"There exist in the current state of the art many algorithms for detection and segmentation of dynamic (i.e. moving) objects in the scene," says Apple. "However, such approaches are usually computationally expensive and rely on motion segmentation and/or optical flow techniques."

With those techniques, Apple says, a "large number of frames is necessary to perform reliable moving object detection." Apple describes several other alternatives, but says that each involves "increased complexity and computational cost."

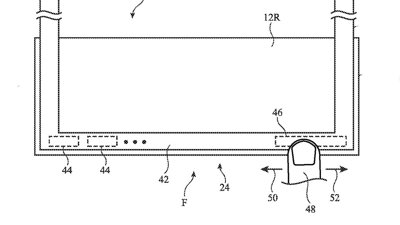

Apple's solution is to augment the AR visual cameras with ones that rely on thermal imaging or infrared. "[Thermal] properties of real objects in the scene could be used in order to improve robustness and accuracy of computer vision algorithms (e.g. vision based object tracking and recognition)," it says.

If the system knows the "thermal properties" of unrecognized objects, or what Apple calls "unreliable objects," then "a thermal imaging device could be utilized to detect... objects."
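As a rough illustration of the idea, and not the method claimed in the patent application, a tracker could discard visual feature points that land on pixels whose temperature suggests an "unreliable" object. The sketch below is hypothetical throughout: it assumes the thermal image is already aligned pixel-for-pixel with the regular camera image, and the function name `filter_reliable_features` and the 30 to 40 degrees Celsius band are illustrative choices, not values from Apple.

```python
from typing import List, Tuple

Point = Tuple[int, int]  # (row, col) pixel coordinates


def filter_reliable_features(
    features: List[Point],
    thermal: List[List[float]],
    low_c: float = 30.0,   # assumed lower bound of the "unreliable" band
    high_c: float = 40.0,  # assumed upper bound of the "unreliable" band
) -> List[Point]:
    """Keep only feature points whose thermal reading is outside the band.

    Assumes `thermal` holds temperatures in degrees Celsius and is
    registered to the same pixel grid as the visual camera image.
    """
    reliable = []
    for row, col in features:
        temp = thermal[row][col]
        if low_c <= temp <= high_c:
            # Likely a warm, possibly moving object: skip it for tracking.
            continue
        reliable.append((row, col))
    return reliable


# Toy 3x4 thermal image with a warm region in the middle.
thermal_image = [
    [21.0, 21.5, 22.0, 21.0],
    [21.0, 34.5, 35.0, 21.5],
    [20.5, 35.5, 36.0, 22.0],
]
features = [(0, 0), (1, 1), (2, 2), (0, 3), (2, 0)]

print(filter_reliable_features(features, thermal_image))
# prints [(0, 0), (0, 3), (2, 0)]
```

The points that remain would then be handed to whatever pose estimation or tracking algorithm is in use, which can go on assuming a static scene. A single thermal frame is enough for this check, in contrast to motion-based approaches that need many frames.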

While the patent application almost exclusively describes using these methods to improve accuracy in busy, moving environments, there is another element. The use of thermal imaging means that AR won't be as dependent on being used in perfectly lit situations.

The application is credited to Darko Stanimirovic and Daniel Kurz. The latter has a previous patent credit on a method for using AR to turn any surface into a display with touch controls.

William Gallagher
