A touch screen becomes impractical when that screen is next to your eye in something like "Apple Glass," so Apple is investigating how you could manipulate virtual controls in the real world.

A recently revealed patent application showed Apple proposing a way to use Apple AR to display information on what would appear, to anyone but the owner, as a blank screen. Now, in a separate application, it's looking at making any surface appear to "Apple Glass" wearers as a control panel, with buttons and a display.

"Method and device for detecting a touch between a first object and a second object," filed in 2016 but only this week revealed, points out that this way of presenting controls superimposed on real-world objects is going to be necessary.

"A natural way for humans to interact with (real) objects is to touch them with their hands," it says. "[Screens] that allow detecting and localizing touches on their surface are commonly known as touch screens and are nowadays common part of, e.g., smartphones and tablet computers."

"[However, a] current trend is that displays for AR are becoming smaller and/or they move closer to the retina of the user's eye," it continues. "This is for example the case for head-mounted displays, and makes using touch screens difficult or even infeasible."

Your "Apple Glass" might show you information, then, but you can't do a lot with it unless you take the glasses off and poke the lens with your finger. Since AR already maps virtual objects onto the real world around you, though, it could be extended to make it appear as if there were buttons and controls conveniently located where you could touch or tap them.

In that case, your eyes would see the virtual object because "Apple Glass" is showing it to you, but your fingers would touch whatever is really in that spot in the real world. If the AR can determine that you've touched what you think is a button, it can react as if you had actually tapped a control.

Unfortunately, that's harder than it sounds. Apple's privacy screen idea can work because the real-world object is something like an iPad. Even if that iPad is not really showing you anything at all on its screen, it can recognize where you tap.

Apple's new proposal involves recognizing such taps or touches on any surface at all, and doing so without either making the user wear fingertip sensors or relying on occlusion.

"The most common approach is to physically equip the object or the human body (e.g. fingertip) with a sensor capable of sensing touch," says the application. But it isn't impressed with that or electrical versions that make the body part of a circuit: "The limitation of such kinds of approaches is that they require modifications of the object or the human body."

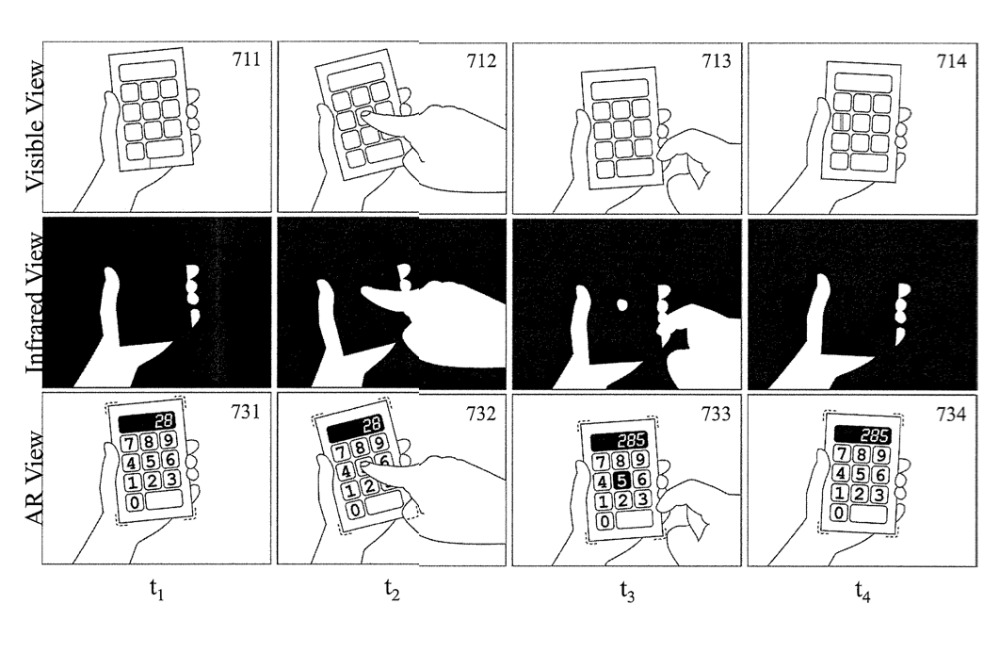

With occlusion, a camera could watch where a user's fingers tap. Especially in AR, that could well work because the system will have mapped the whole environment and know that, for instance, there is a tabletop surface under the user's fingers.

It could, therefore, map buttons onto that surface. However, the patent application points out, effectively, that they'd have to be pretty big buttons. The killer problem, according to Daniel Kurz, the credited inventor of Apple's patent application, is that occlusion cannot ever be precisely accurate.

"With virtual buttons it is impossible to trigger a button, e.g. No. 5 out of an array of adjacent buttons on a number pad, without triggering any other button before," it argues, "because the button No. 5 cannot be reached without occluding any of the surrounding buttons."

You could skirt around this by having even bigger buttons, or perhaps fewer of them, but even then, what such systems really detect is the occlusion. They detect whether a finger has passed in front of a "button," not whether that button has actually been pressed.
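The number pad problem can be illustrated with a toy model. This is only a sketch: the 3x3 layout, the assumption that the finger enters from the bottom edge and travels straight up, and the `occluded_buttons` helper are all illustrative choices, not anything described in the application.

```python
# Hypothetical 3x3 number pad; the layout is an assumption for illustration.
PAD = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]


def occluded_buttons(target):
    """Return the buttons a finger covers, in order, on its way to `target`.

    Assumed finger model: the finger enters from the bottom edge of the pad
    and moves straight up the column containing the target button.
    """
    for row_index, row in enumerate(PAD):
        if target in row:
            col = row.index(target)
            # Walk from the bottom row up to (and including) the target row.
            return [PAD[r][col] for r in range(len(PAD) - 1, row_index - 1, -1)]
    raise ValueError(f"no button {target} on the pad")


print(occluded_buttons(5))  # the finger passes over button 8 before reaching 5
```

An occlusion-only system would see button 8 covered first and has no way of knowing that 5 was the one the user meant to press.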

Apple's proposed solution is heat. "If two objects at different temperatures touch," says the application, "the area where they touch will change their temperature and then slowly converge back to the initial temperature as before the touch."

"Therefore, for pixels corresponding to a point in the environment where a touch recently occurred," it continues, "there reveals a slow but clearly measurable decrease or increase in temperature."

So whatever your finger touches, there is going to be a transfer of heat. It's minuscule, but it's definitely there. "Smooth changes in temperature can be indicative of a touch between two objects that recently happened at the sampled position," says the patent.
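As a rough sketch of that idea, the check below looks at a series of temperature readings for one spot and asks whether they started away from the surface's normal temperature and are drifting smoothly back toward it. The function name, the thresholds, and the sample values are all assumptions made for illustration; the application does not specify them.

```python
def looks_like_recent_touch(samples, baseline, min_delta=0.5, max_step=0.5):
    """Guess whether temperature samples at one point suggest a recent touch.

    samples:   temperatures (degrees C) measured over time at the same point
    baseline:  the surface's usual temperature at that point
    min_delta: assumed minimum initial deviation to count as a touch
    max_step:  assumed largest change between samples that still counts
               as "smooth" (an abrupt jump suggests occlusion instead)
    """
    if len(samples) < 3:
        return False
    deviations = [abs(t - baseline) for t in samples]
    if deviations[0] < min_delta:
        return False  # the spot never differed enough from the baseline
    steps = [b - a for a, b in zip(deviations, deviations[1:])]
    converging = all(step <= 0 for step in steps)       # heading back to baseline
    smooth = all(abs(step) <= max_step for step in steps)  # no sudden jumps
    return converging and smooth


touched = [24.0, 23.6, 23.3, 23.1, 23.0]  # warm spot cooling slowly
passed_over = [30.0, 30.0, 22.0, 22.0]    # hand in front of the camera, then gone
print(looks_like_recent_touch(touched, baseline=22.0))
print(looks_like_recent_touch(passed_over, baseline=22.0))
```

The second series shows why smoothness matters: a hand merely passing in front of the surface produces an abrupt change, whereas leftover heat from a real touch fades gradually.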

The application discusses different methods of detecting heat changes, such as through thermal imaging, but it is chiefly concerned with the precision that can be achieved.

"It can distinguish touches or occlusions caused by a human body (that happened on purpose) from touches or occlusions by anything else (that might happen by accident)," it says. "It is capable of precisely localizing a touch and can identify touch events after they occurred (i.e. even if no camera, computer or whatsoever was present at the time of touch)."

That last capability depends on the detection being performed pretty swiftly after the touch. For example, it could be that a user taps a surface and the thermal imaging happens when they take their finger away. The application includes an example photograph showing a recent fingertip tap on a surface.
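Localizing a touch after the fact could, in the simplest case, mean finding the spot in a thermal image that differs most from how the surface normally looks. The sketch below assumes the thermal image arrives as a grid of temperatures and that a reference grid of the untouched surface is available; `locate_touch` and its threshold are hypothetical, not taken from the application.

```python
def locate_touch(frame, baseline, min_delta=0.5):
    """Return (x, y) of the pixel that deviates most from the baseline frame.

    frame, baseline: equally sized grids (lists of rows) of temperatures.
    min_delta:       assumed minimum deviation to report a touch at all.
    Returns None when no pixel deviates by at least `min_delta`.
    """
    best_position = None
    best_delta = 0.0
    for y, (row, base_row) in enumerate(zip(frame, baseline)):
        for x, (temp, base) in enumerate(zip(row, base_row)):
            delta = abs(temp - base)
            if delta > best_delta:
                best_delta = delta
                best_position = (x, y)
    return best_position if best_delta >= min_delta else None


baseline = [[22.0] * 4 for _ in range(3)]
frame = [row[:] for row in baseline]
frame[1][2] = 23.4  # residual warmth where a fingertip was
frame[1][1] = 22.3  # slight spill-over into a neighboring pixel
print(locate_touch(frame, baseline))
```

A real system would presumably combine this with the temperature-over-time behavior the application describes, rather than relying on a single frame.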

Along with the related idea of displaying data solely to the "Apple Glass" wearer, this is all part of Apple's wide-ranging work on AR. It shows the company looking deeply, and for a long time given that this patent application was filed four years ago, into the practical applications of augmented reality rather than just the visual side.

Among Apple's many related patents in the field of AR, there is another by the same Daniel Kurz to do with accurate handling of real and virtual objects together.

William Gallagher

