Apple's AR endeavors originally included a version free of glasses or headsets that projected data onto real-world objects, including one concept where the user wore the projector itself.

People generally consider augmented reality to mean overlaying a digital image on a view of the real world, giving the illusion of an object in the world that isn't really there. In the vast majority of cases, this involves some sort of display mechanism that sits between the user's eyes and the real world, but in one particular case, there's no screen used at all.

In a patent granted to Apple by the US Patent and Trademark Office on Tuesday titled "Method of and system for projecting digital information on a real object in a real environment," Apple does away with screens, in favor of applying the digital image onto the real-world object itself.

Part of the patent description seems fairly simple, namely projecting an image onto a physical surface using some form of projector. However, to correctly map the digital image to the object, the system also uses other sensors to detect the environment and object.

The system captures an image of the real-world object it is to map the projection to, with the image data combined with information from a depth sensor to create a depth map of the item. This is used to calculate a spatial transformation between the virtual data and the real object, which can then be used by applications to plan out what pixels are to be projected where on the object.
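As a rough illustration of that mapping step, and not the method claimed in the patent, the sketch below assumes a simple pinhole model for both camera and projector plus a known rigid transform between them. Every name and number here (the intrinsics, the 10 cm offset, the function names) is hypothetical.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Turn a camera pixel (u, v) plus its depth reading into a 3D point in camera space."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def transform(point, rotation, translation):
    """Apply a rigid transform: 3x3 rotation (nested lists) followed by a translation."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point, fx, fy, cx, cy):
    """Project a 3D point in projector space onto the projector's pixel grid."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the projector")
    return (fx * x / z + cx, fy * y / z + cy)


def camera_pixel_to_projector_pixel(u, v, depth, cam, proj, rotation, translation):
    """Find which projector pixel lights the surface point seen at camera pixel (u, v)."""
    p_cam = backproject(u, v, depth, *cam)
    p_proj = transform(p_cam, rotation, translation)
    return project(p_proj, *proj)


# Assumed setup: identical intrinsics (fx, fy, cx, cy), no rotation,
# and the projector mounted 10 cm to the right of the camera.
CAM = (500.0, 500.0, 320.0, 240.0)
PROJ = (500.0, 500.0, 320.0, 240.0)
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
OFFSET = (-0.10, 0.0, 0.0)  # camera-to-projector translation, in metres

near = camera_pixel_to_projector_pixel(320, 240, 1.0, CAM, PROJ, IDENTITY, OFFSET)
far = camera_pixel_to_projector_pixel(320, 240, 2.0, CAM, PROJ, IDENTITY, OFFSET)
```

In this toy setup, the same camera pixel lands on different projector pixels at one metre and at two metres, which is why a per-pixel depth reading is needed before deciding what to project where.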

Apple proposes a camera system with a depth sensor alongside a projector for real-world screenless AR.

Apple doesn't mention exactly what kind of depth sensor is used but mentions the use of an "infrared light projector and an infrared light camera," which would be similar to the TrueDepth camera array's functionality in modern iPhones.

The depth mapping also includes estimating a 3D position of the object and where the digital information is to be applied on the object, in relation to the projector and the user. This would enable the generation of 3D effects on the overlaid image, which would ideally be calculated based on the perceived user's viewing position.

By generating subsequent images and depth maps, the system will be able to counter any shakes or movements of the projector, allowing the projected illusion to remain intact despite such changes.

While this would feasibly involve object recognition and tracking via 3D depth map changes, there are also suggestions of using visual markers in the environment to further assist object and movement tracking. Knowing the distance to the intended object makes it possible to tell users the object is too far away or too close, or even to change the brightness of the projection based on distance.
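The distance-based behavior could be sketched along these lines. This is only an illustration: the working range, the brightness rule, and the function name are assumptions, not figures from the patent. It relies on the fact that light from a spreading beam gets dimmer on the surface roughly with the square of the distance.

```python
def projection_feedback(distance_m, near_m=0.3, far_m=3.0):
    """Return (status, brightness) for a target distance_m metres away.

    brightness is a 0.0-1.0 drive level. The near_m and far_m limits are
    hypothetical placeholders for whatever range real hardware supports.
    """
    if distance_m < near_m:
        return ("too close", 0.0)
    if distance_m > far_m:
        return ("too far", 0.0)
    # Surface illuminance falls off roughly with distance squared, so the
    # drive level rises with distance squared, reaching full power at far_m.
    level = (distance_m / far_m) ** 2
    return ("ok", min(1.0, level))
```

With these assumed limits, a target halfway to the far limit would be lit at a quarter of full power, while anything outside the range would trigger a warning instead of a projection.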

The patent also mentions letting the user interact with a projection on an object's surface, such as a keyboard projected onto a surface.

While the idea of projecting light onto an object isn't new, with the idea having been used for artistic and commercial purposes for many years, Apple's patent largely deals with the need to calibrate the projection repeatedly, allowing the projection to be as accurate as possible.

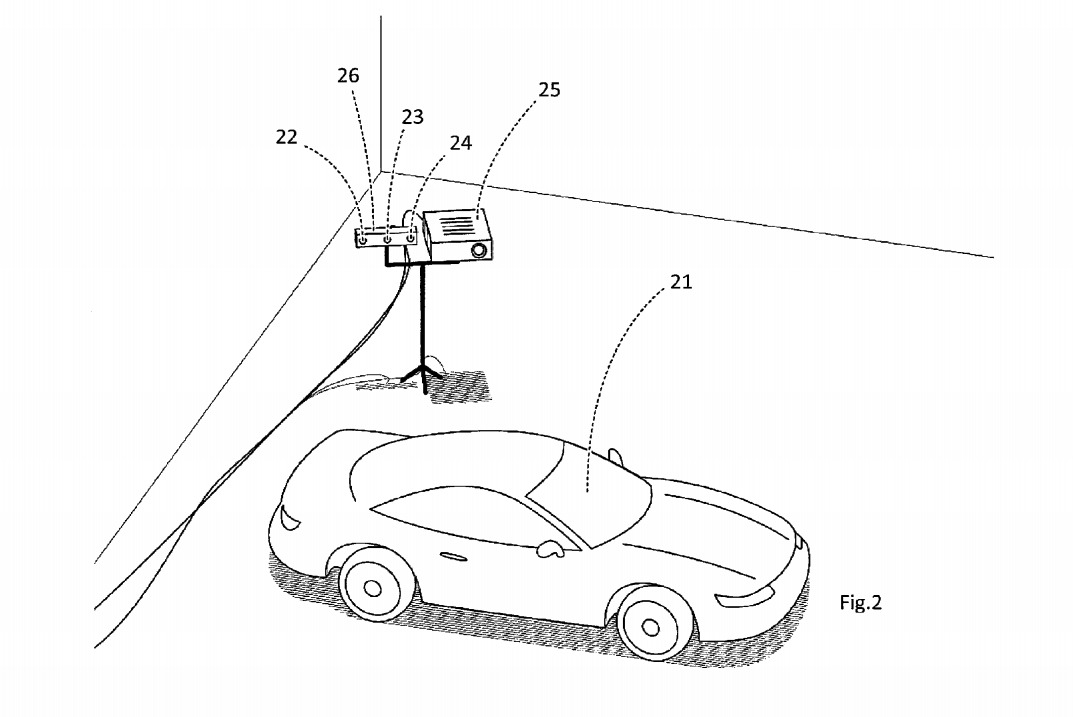

It is suggested this could include a projection of data within a car onto a surface, such as a speedometer on a windscreen, which would ideally need constant calibration to remain readable by the user.

The description also references other calibration systems for indoor scenes that are based on a "laser pointer rigidly coupled with a camera" in a robotic pan-tilt apparatus, though Apple reckons it is expensive and not ideally accurate due to the need for a "very precise hand-eye calibration of the pan-tilt camera."

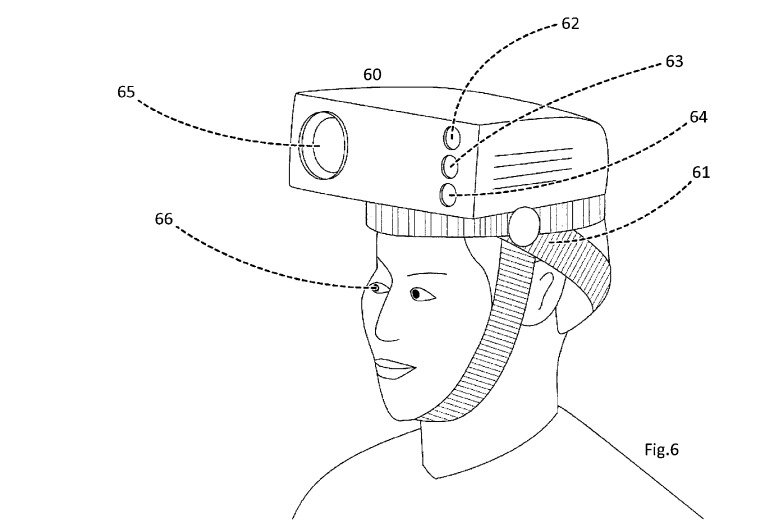

Apple suggests its system could be used while in motion. Drawings show a version where the projector and sensing hardware have a handle for manual movement without a fixed location, while another exaggerated version shows the same arrangement placed on top of a user's head, akin to a hat rather than a headset.

The patent lists its inventors as Peter Meier, Mohamed Selim Ben Himane, and Daniel Kurz. Unusually, the original filing for the patent was on December 28, 2012, meaning it took almost eight years to be granted by the USPTO, though it is likely to have been delayed by Patent Cooperation Treaty (PCT) filings made at the same time.

The existence of a patent filing indicates areas of interest for Apple's research and development teams, but doesn't guarantee the idea will appear in a future product or service.

The bulk of Apple's AR work has been in the more typical display-based AR, without surface projection. What's currently available is ARKit, Apple's framework for developers to use AR in iOS and iPadOS apps, viewable on an iPhone or iPad display.

Apple has also been rumored to be working on some form of smart glasses or an AR and VR headset, with speculation that Apple Glass will arrive sometime in 2021.

Malcolm Owen

