Rather than concentrating on any single hardware aspect of iPhone photography, Apple's engineers and managers aim to control every step of taking a photo.

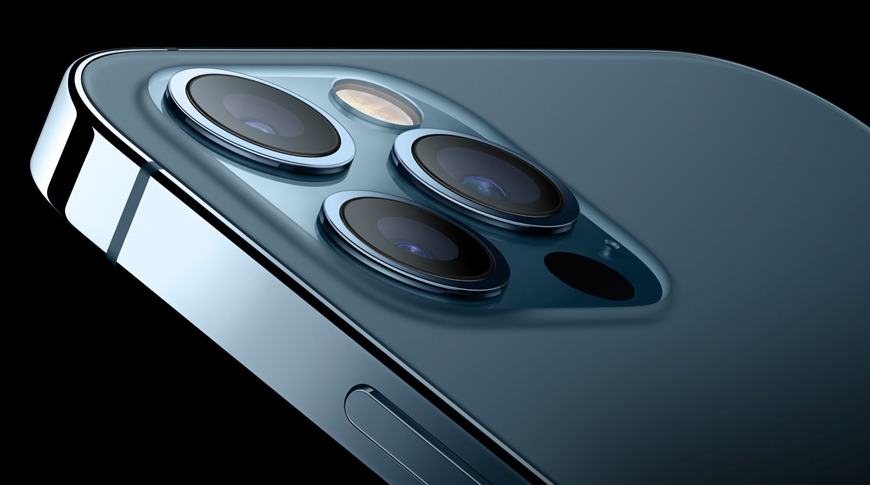

With the launch of the iPhone 12 Pro Max, Apple has introduced the largest camera sensor it has ever put in an iPhone. Yet rather than being something to "brag about," Apple says the sensor is part of a philosophy that sees camera designers working across every aspect, from hardware to software.

Speaking to photography site PetaPixel, Francesca Sweet, product line manager for the iPhone, and Jon McCormack, vice president of camera software engineering, emphasized that they work across the whole design in order to simplify taking photos.

"As photographers, we tend to have to think a lot about things like ISO, subject motion, et cetera," Jon McCormack said. "And Apple wants to take that away to allow people to stay in the moment, take a great photo, and get back to what they're doing."

"It's not as meaningful to us anymore to talk about one particular speed and feed of an image, or camera system," he continued. "We think about what the goal is, and the goal is not to have a bigger sensor that we can brag about."

"The goal is to ask how we can take more beautiful photos in more conditions that people are in," he said. "It was this thinking that brought about Deep Fusion, Night Mode, and temporal image signal processing."

Apple's overall aim, both McCormack and Sweet say, is to automatically "replicate as much as we can... what the photographer will [typically] do in post." So with machine learning, Apple's camera system breaks down an image into elements that it can then process.

"The background, foreground, eyes, lips, hair, skin, clothing, skies," lists McCormack. "We process all these independently like you would in [Adobe] Lightroom with a bunch of local adjustments. We adjust everything from exposure, contrast, and saturation, and combine them all together."

This isn't to deny the advantages of a bigger sensor, according to Sweet. "The new wide camera [of the iPhone 12 Pro Max], improved image fusion algorithms, make for lower noise and better detail."

"With the Pro Max we can extend that even further because the bigger sensor allows us to capture more light in less time, which makes for better motion freezing at night," she continued.

Nonetheless, both Sweet and McCormack believe it is vital that Apple designs and controls every element, from lens to software.

"We don't tend to think of a single axis like 'if we go and do this kind of thing to hardware' then a magical thing will happen," said McCormack. "Since we design everything from the lens to the GPU and CPU, we actually get to have many more places that we can do innovation."

The iPhone 12 Pro Max is now available for pre-order. It ships from November 13.

William Gallagher

53 Comments

That’s great, but I believe that most iPhone photographers, as opposed to iPhone snapshot shooters, would prefer the same sized sensors for each camera, as well as equal quality lenses. The wide already had higher quality because of a larger sensor and a better lens. Now, that disparity will get larger. I know that some will say that we take most of our pictures with that lens, and that’s why. Be that as it may, we don’t expect wide angle and tele lenses for our other cameras to be of lesser quality because we may not use them as much.

Maybe someday, Apple will have a 2.5 to 3 times zoom for the wide to tele, or a 2 times for the super wide to wide. If so, they could eliminate one camera, and have the room, at less expense, to give both remaining cameras the same large sensor, with IBIS for both.

A marketing exercise but completely understandable.

What they are saying is what most vertical manufacturers do (and have been doing for years).

Apple is slowly catching up with photography but the harsh truth of the matter is that phone cameras are great and have been for years now. That won't change any time soon. Where phone cameras have branched out over the last two years is in versatility and Apple missed several opportunities to add that. Strangely so.