Apple's augmented reality plans could involve creating a 3D model of a user's fingers for interacting with virtual touchscreens, while the use of infrared optical markers may make it easier for AR systems to adjust what is seen on an iPhone or iPad's screen.

Apple is believed to be working on some sort of virtual reality or augmented reality hardware, and is also thought to be working on Apple Glass AR smart glasses. While the products haven't been launched yet, Apple has laid the groundwork with extensive research and development in the field.

However, although AR has been around for some time, a number of problems still need to be solved before it can become a mainstream technology.

In a pair of patents granted to Apple by the US Patent and Trademark Office on Tuesday, Apple seeks to improve AR in two areas.

Device markers

One of the ways that AR can provide a benefit is in showing a user a virtual display practically anywhere within their field of vision. Such virtual displays could easily be superimposed onto walls or physical items, or even left to hang in mid-air.

The use of an AR headset may cause issues for users expecting to be able to use their smartphones and tablets at the same time, as there's no guarantee that the display will actually be viewable from within the headset.

There's also the possibility of an AR headset using a nearby mobile device as an interaction method, potentially replacing what is on the device's display with different graphics, or a more easily visible version. It could even be feasible for the display to be shown entirely within the AR headset with the device's screen turned off, while leaving touch capabilities and other functionality intact.

In the patent titled "Electronic devices with optical markers," Apple believes it could improve interoperability between smartphones and AR headsets by making it easier for AR systems to detect that a smartphone is nearby. In the patent, this simply involves adding visual markers that the headset can use.

The idea is that markers attached to the outside of the device could be read by cameras and other sensors on an AR headset. Seeing the markers could allow the AR system to work out the kind of device it is, its orientation, where the display is, and other factors.

These markers could provide positioning data, along with other information like a unique identifier, provided via a two-dimensional barcode like a QR code. The unique identifier could even be encoded by markers with a "spectral reflectance code."

While the markers could be visible to the user, Apple proposes they could instead be completely invisible. Potentially formed from photo-luminescent particles in a polymer layer, or with a retroreflective layer using transparent spheres, the markers could be made visible only via infrared light.
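As a rough illustration of how a headset might turn detected markers into a device position and orientation, here is a minimal Python sketch. It is not taken from the patent: the `Marker` type, the corner labels, and the assumption of exactly four corner markers seen in a single infrared camera image are all hypothetical, and the calculation only recovers the in-image center and roll angle rather than a full 3D pose.

```python
import math
from dataclasses import dataclass

# Hypothetical corner labels; the patent does not specify a marker layout.
CORNERS = {"top_left", "top_right", "bottom_left", "bottom_right"}


@dataclass
class Marker:
    corner: str  # which device corner this marker sits on (assumed label)
    x: float     # pixel column in the headset's infrared camera image
    y: float     # pixel row in the image (grows downward)


def estimate_pose(markers):
    """Return (center_x, center_y, roll_degrees) of the device in the image."""
    by_corner = {m.corner: m for m in markers}
    missing = CORNERS - by_corner.keys()
    if missing:
        raise ValueError(f"markers not detected: {sorted(missing)}")

    # The device center is approximated as the mean of the four corners.
    center_x = sum(by_corner[c].x for c in CORNERS) / 4
    center_y = sum(by_corner[c].y for c in CORNERS) / 4

    # Roll is the angle of the device's top edge relative to the image x-axis.
    top_left, top_right = by_corner["top_left"], by_corner["top_right"]
    roll = math.degrees(
        math.atan2(top_right.y - top_left.y, top_right.x - top_left.x)
    )
    return center_x, center_y, roll


upright = [
    Marker("top_left", 100, 100),
    Marker("top_right", 300, 100),
    Marker("bottom_left", 100, 500),
    Marker("bottom_right", 300, 500),
]
print(estimate_pose(upright))
```

A real system would more likely solve for a full six-degree-of-freedom pose from the known physical marker layout, but the same idea applies: known marker positions on the device give the headset fixed reference points to measure against.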

The patent lists its inventors as Christopher D. Prest, Marta M. Giachino, Matthew S. Rogers, and Que Anh S. Nguyen. It was originally filed on September 27, 2018.

3D finger modeling

The ability for an AR system to display screens on any surface may be useful in most cases, but not necessarily where interaction is expected. An AR system may intend for a virtual display applied to a wall to operate as a touchscreen, but as there are no sensors on the wall, the system cannot directly detect a touch.

It could be feasible to detect a touch of a virtual display on a wall using a glove or other input mechanism, but doing so without the glove is a tougher problem.

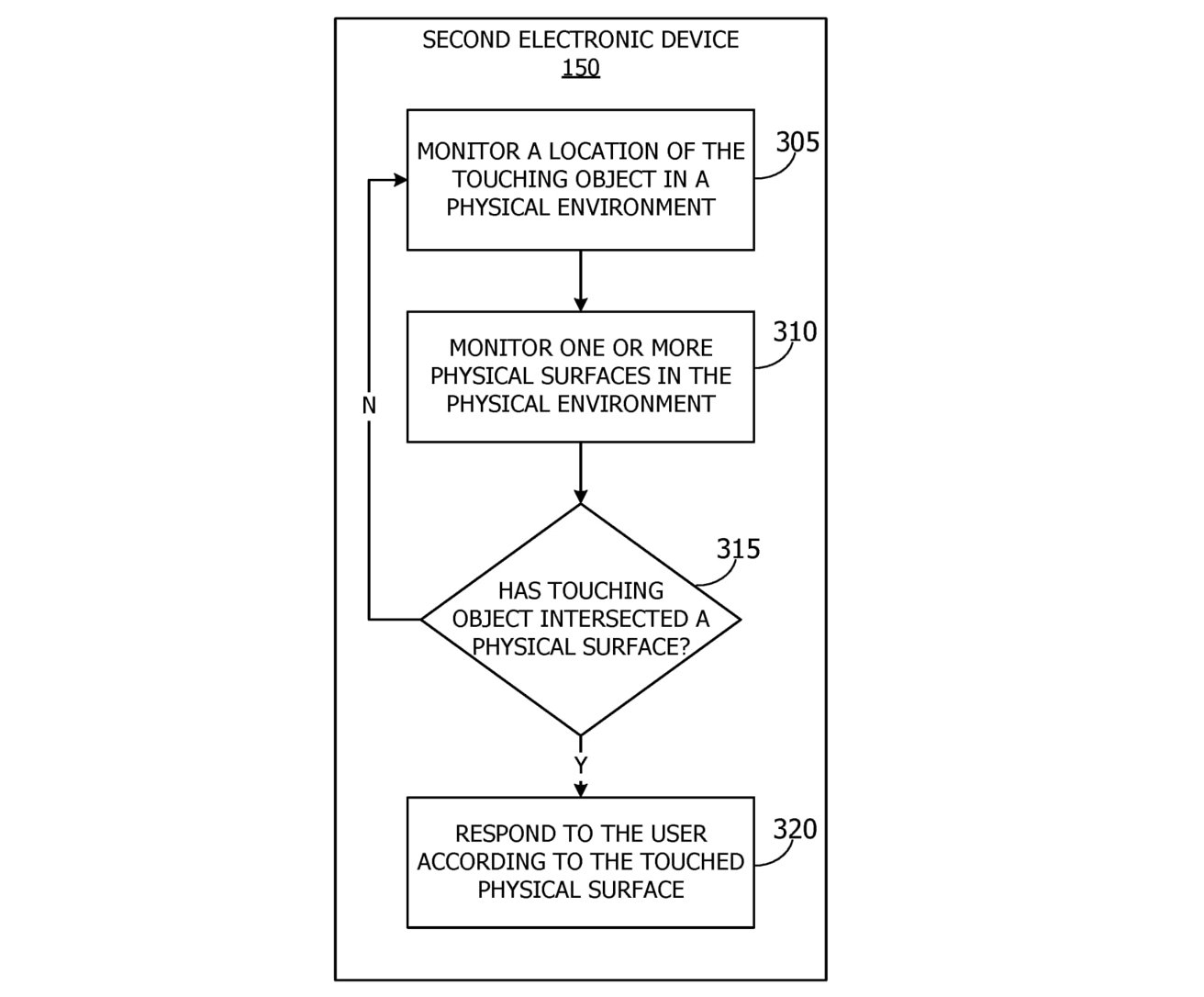

The second patent, titled "Generating a 3D model of a fingertip for visual touch detection," attempts to solve this issue.

As the name suggests, the patent revolves around a system creating a 3D model of a user's fingertip, finger, or hand. Once a model has been generated, the system can compare it against imaging sensor data in order to detect purposeful touches of virtual displays.

It is thought that the model can be generated by monitoring the user's hand when it is touching an actual touch-sensitive surface. Using multiple cameras or imaging sensors, the finger or hand could be captured from multiple angles at the time of touching an object.

This visual data could be used to form a 3D model of the finger at the point of a touch event. Subsequent touches of a touch-sensitive surface can result in more images being generated, which can refine the 3D model further.

Once the model has been generated, the system can then use the data to detect the user's finger or hand, including instances where it interacts with a physical surface that isn't touch-sensitive but is being used for AR purposes.
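The calibrate-then-detect loop described above can be sketched in a few lines of Python. This is a heavy simplification made purely for illustration, not the patent's method: the "model" here is reduced to a single number, the camera-estimated height of the fingertip above the surface at the moment a real touchscreen reports contact. The class name, the millimeter units, and the tolerance value are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class FingertipModel:
    """Toy stand-in for a 3D fingertip model (hypothetical, one parameter)."""

    samples: int = 0
    contact_height_mm: float = 0.0  # running mean of height seen at contact

    def refine(self, observed_height_mm: float) -> None:
        """Fold in one observation captured when a real touchscreen fired."""
        self.samples += 1
        # Incremental mean: each new touch event nudges the estimate.
        self.contact_height_mm += (
            observed_height_mm - self.contact_height_mm
        ) / self.samples

    def is_touching(self, observed_height_mm: float,
                    tolerance_mm: float = 1.0) -> bool:
        """Guess whether a finger is touching a surface that has no sensors."""
        if self.samples == 0:
            raise ValueError("model has not been calibrated yet")
        return observed_height_mm <= self.contact_height_mm + tolerance_mm


model = FingertipModel()
for height in (6.0, 8.0):   # two touches on an actual touch-sensitive surface
    model.refine(height)

print(model.contact_height_mm)
print(model.is_touching(7.5), model.is_touching(9.0))
```

The point of the sketch is the ordering: observations gathered while a touch-sensitive surface confirms contact are used to tune the model, and only afterwards is the model trusted on surfaces that cannot report touches themselves.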

The patent lists its inventors as Rohit Sethi and Lejing Wang, and was filed on September 24, 2019.

This is not the first time Apple has attempted to solve the touch-detection problem.

In July 2020, a patent application surfaced proposing the use of thermal imaging to detect places on a device where the user touched. Small amounts of heat transfer from a user's fingers would feasibly be picked up by the sensors, and in turn used to detect exactly where on a surface the user touched.
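To make the thermal idea concrete, the following Python sketch compares two temperature grids of a surface, one captured before and one after a suspected touch, and reports the cells that warmed up. The grid representation, the function name, and the 0.5 degree threshold are assumptions chosen for illustration, not details from the patent application.

```python
def find_touch_points(before, after, min_rise_c=0.5):
    """Return (row, column) cells whose temperature rose by at least min_rise_c.

    `before` and `after` are equally sized 2D lists of temperatures in
    degrees Celsius, as a hypothetical thermal camera might report them.
    """
    touched = []
    for r, (row_before, row_after) in enumerate(zip(before, after)):
        for c, (t_before, t_after) in enumerate(zip(row_before, row_after)):
            if t_after - t_before >= min_rise_c:
                touched.append((r, c))
    return touched


before = [[22.0, 22.0, 22.0],
          [22.0, 22.0, 22.0]]
after = [[22.0, 22.1, 22.0],
         [22.0, 23.0, 22.0]]

print(find_touch_points(before, after))
```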

Other ideas have largely revolved around gloves and controllers, including one from March 2016 where a hand-held controller had elements that extended over the fingers to detect finger movements, and even whether a user is touching a surface.

Malcolm Owen

1 Comment

I think it would be really cool if Apple could create a virtual AR keyboard which can be projected on any flat surface and the users can type on it with their fingers just like on a physical keyboard. Imagine doing your normal work anywhere without needing a laptop! This would be even more appealing on the "Apple Glass".