Apple employees are voicing concern over the company's new child safety features set to debut with iOS 15 this fall, with some saying the decision to roll out such tools could tarnish Apple's reputation as a bastion of user privacy.

Pushback against Apple's newly announced child safety measures now includes critics from its own ranks who are speaking out on the subject in internal Slack channels, reports Reuters.

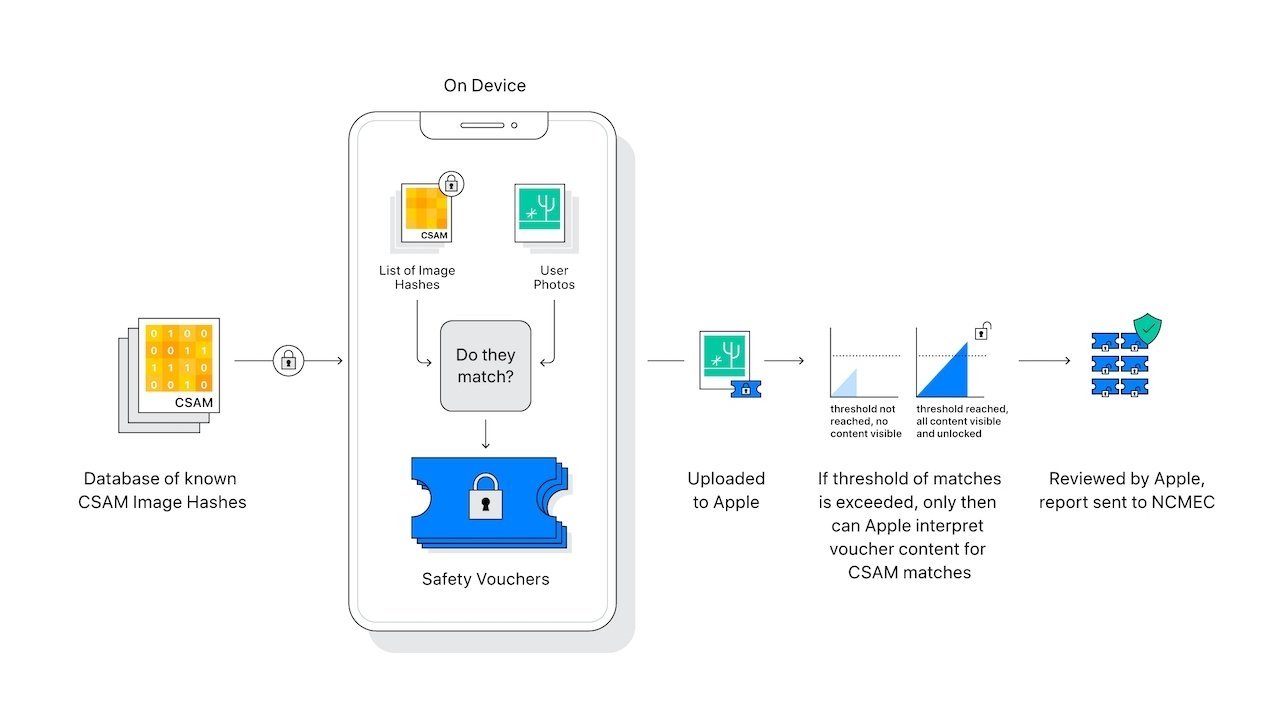

Announced last week, Apple's suite of child protection tools includes on-device processes designed to detect and report child sexual abuse material uploaded to iCloud Photos. Another tool protects children from sensitive images sent through Messages, while Siri and Search will be updated with resources to deal with potentially unsafe situations.

Since the unveiling of Apple's CSAM measures, employees have posted more than 800 messages to a Slack channel on the topic that has remained active for days, the report said. Those concerned about the upcoming rollout cite common worries pertaining to potential government exploitation, a theoretical possibility that Apple deemed highly unlikely in a new support document and statements to the media this week.

The pushback within Apple, at least as it pertains to the Slack threads, appears to be coming from employees who are not part of the company's lead security and privacy teams, the report said. Those working in the security field did not appear to be "major complainants" in the posts, according to Reuters sources, and some defended Apple's position by saying the new systems are a reasonable response to CSAM.

In a thread dedicated to the upcoming photo "scanning" feature (the tool matches image hashes against a hashed database of known CSAM), some workers have objected to the criticism, while others say Slack is not the forum for such discussions, the report said. Some employees expressed hope that the on-device tools will herald full end-to-end iCloud encryption.

Apple is facing down a cacophony of condemnation from critics and privacy advocates who say the child safety protocols raise a number of red flags. While some of the pushback can be written off as misinformation stemming from a basic misunderstanding of Apple's CSAM technology, other critics raise legitimate concerns about mission creep and violations of user privacy that the company did not initially address.

The Cupertino tech giant has attempted to douse the fire by addressing commonly cited concerns in a FAQ published this week. Company executives are also making the media rounds to explain what Apple views as a privacy-minded solution to a particularly odious problem. Despite the company's best efforts, however, controversy remains.

Apple's CSAM detection tools launch with iOS 15 this fall.

Mikey Campbell


66 Comments

Sadly, even with this new tool, Apple is still the best game in town as far as user privacy is concerned. I’m a big fan of the company and have been using their products since the very first computer I’ve owned (anyone remember the Apple IIgs?). However, if they continue in this trajectory, I may have to start researching other options.

The folks working in privacy and information security were probably told to dummy up.

So Apple scans our photo library for a hit on known child porn. Somebody at some point along the chain had to watch it and establish the library.