A whistleblower who leaked a selection of internal documents from Facebook has revealed her identity while criticizing the social network's track record, claiming "Facebook, over and over again, has shown it chooses profit over safety."

A cache of documents from within Facebook was leaked to the Wall Street Journal by a whistleblower, prompting an exhaustive probe into the social network. Following the publication of the first investigative reports based on the documents, the whistleblower has come forward in an interview, explaining more about the tech giant's workings and why she chose to release the data.

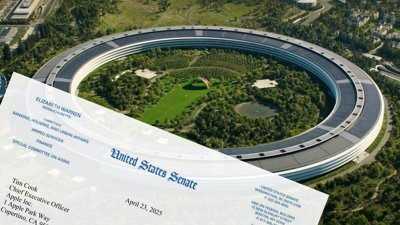

That data release led to a series of reports, including one that revealed Facebook allegedly knew that Instagram was bad for the wellbeing of teenagers. That report led to a hearing with the Senate Commerce Committee's consumer protection subcommittee on mental health in teenagers.

In an interview with 60 Minutes on Sunday, Frances Haugen doubled down on the document trove, which seemingly proves Facebook cared more about its algorithms than about dealing with hate speech and other toxic content.

Haugen was formerly a product manager at the company, working in its Civic Integrity Group, but departed after the group was dissolved. Before her departure, she copied tens of thousands of pages of internal research, which she claims demonstrates Facebook lies to the public about its progress against hate, violence, and misinformation.

"I knew what my future looked like if I continued to stay inside of Facebook, which is person after person after person has tackled this inside of Facebook and ground themselves into the ground," said Haugen of her decision to release the documents. "At some point in 2021, I realized Ok, I'm gonna have to do this in a systemic way, and I have to get out enough that no-one can question that this is real."

The data scientist, who had previously worked for Google and Pinterest, said, "I've seen a bunch of social networks and it was substantially worse at Facebook than anything I'd seen before."

The documents included studies performed internally by Facebook about its services, with one determining that Facebook had failed to act on hateful content. "We estimate that we may action as little as 3-5% of hate and about 6-tenths of 1% of [violence and incitement] on Facebook despite being the best in the world at it," the study reads.

Haugen tells of how she was assigned to Civic Integrity, which was meant to work on misinformation risks for elections, but following the 2020 U.S. election, it was dissolved.

"They basically said, 'Oh good, we made it through the election. There wasn't riots. We can get rid of Civic Integrity now.' Fast forward a couple of months, we got the insurrection," recounts Haugen. "And when they got rid of Civic Integrity, it was the moment where I was like I don't trust that they're willing to actually invest what needs to be invested to keep Facebook from being dangerous."

Because the algorithm picks the content to show to users based on engagement, Haugen says the system is now optimized for engagement or to gain a reaction. "But its own research is showing that content that is hateful, that is divisive, that is polarizing, it's easier to inspire people to anger than it is to other emotions."

"Yes, Facebook has realized that if they change the algorithm to be safer, people will spend less time on the site, they'll click on less ads, they'll make less money," she adds, before insisting that safety systems introduced to reduce misinformation for the 2020 election were temporary.

"As soon as the election was over, they turned them back off, or they changed the settings back to what they were before, to prioritize growth over safety," Haugen claims. "And that really feels like a betrayal of democracy to me."

Haugen did not release the documents only to the Wall Street Journal. In September, lawyers working on Haugen's behalf filed at least 8 complaints with the Securities and Exchange Commission. The filings rest on the theory that Facebook must not lie to investors or withhold material information, and the gap between its public claims about progress against hate speech and the reality may prompt closer examination by the regulator.

Malcolm Owen

