OpenAI has released GPT-4, an upgraded language model for ChatGPT that is more accurate — but still flawed.

ChatGPT is a chatbot that anyone can use to generate stories, articles, and other forms of text. The long-awaited GPT-4 update can accept images as inputs but still generates text for the output.

OpenAI calls it "the latest milestone in its effort in scaling up deep learning" in its Tuesday announcement and claims it has shown human-level performance on various professional and academic benchmarks. For example, it passed a simulated bar exam with a score around the top 10% of test takers.

The company rebuilt its deep learning stack over the past two years and worked with Microsoft Azure to design a supercomputer for its workloads. GPT-3.5 was the first test run of the new system.

While the new system is more accurate than its predecessor, it still makes factual errors. In one of the examples OpenAI provides, it referred to Elvis Presley as the son of an actor.

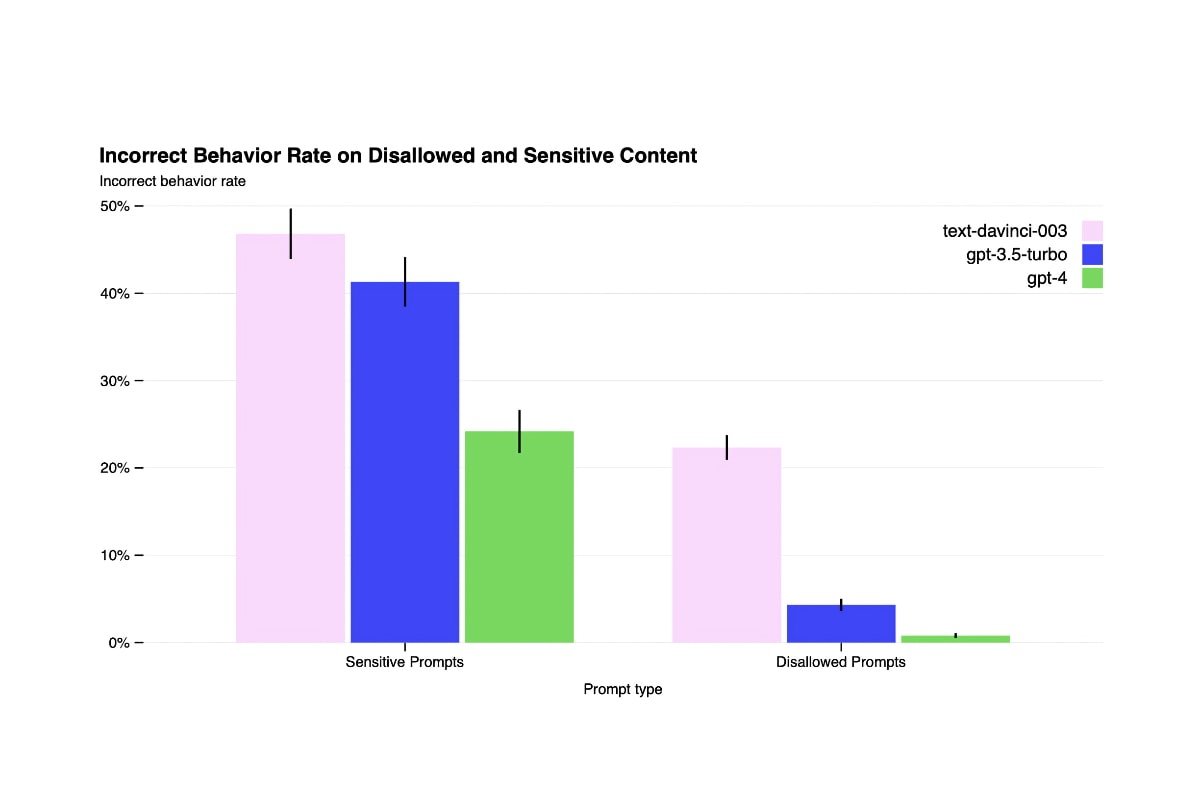

GPT-4 also lacks knowledge of events after September 2021 and can't learn from its experiences. However, OpenAI has improved many of GPT-4's safety properties.

The company decreased the model's tendency to respond to requests for disallowed content by 82% compared to GPT-3.5. It also responds to sensitive requests, such as those involving medical advice and self-harm, in accordance with the company's policies 29% more often.

"We look forward to GPT-4 becoming a valuable tool in improving people's lives by powering many applications," OpenAI wrote. "There's still a lot of work to do, and we look forward to improving this model through the collective efforts of the community building on top of, exploring, and contributing to the model."

OpenAI is releasing GPT-4's text input capability via ChatGPT and its API, with a waitlist. However, it's available today for paying users on the $20-per-month ChatGPT Plus subscription plan.

People can also experience ChatGPT and GPT-4 in Microsoft Bing and the Edge browser, an integration Microsoft announced in February.

Andrew Orr

3 Comments

Excellent synopsis, Marvin. I think that the term “Artificial Intelligence” too often conjures up dystopian imagery in a lot of people’s minds, as if AI is going to replace humans. I think a more accurate perspective is to compare AI to robotics, which has always conjured up similar kinds of dystopian imagery in people’s minds.

Robotics in practice is employed at many levels of granularity and functionality. All robots are constrained in what tasks they are optimized to perform. Some robotics applications perform complex tasks autonomously (once programmed to do so), some do somewhat minor but repetitive tasks at rates and levels of precision that are unsustainable by humans, and some work cooperatively alongside humans with very constrained protocols and well-defined hand-offs. But a general-purpose, universally functional, and massively adaptable robot that can learn to perform tasks on its own, much less self-replicate and attain mastery over humans, is still science fiction.

I think we’ll see AI being applied with similar degrees and levels of granularity and specialization that we see with robots, but across a much wider spectrum of functionality, because AI isn’t limited by the physical constraints and dynamics that automated machines must contend with. In general, I think we do need to find ways to employ AI at practical levels that offset some of our cognitive limitations, especially those involving capacity and speed, in much the same way that robots help us offset some of our physical limitations.

I’m not dissuaded at all about the potential of AI based on the current limitations and shortcomings of AI that we’ve seen demonstrated so far. These are largely dog & pony shows and have a lot of opportunities for exceeding the boundaries of determinism required for practical and pragmatic applications of AI. The practical and pragmatic applications of AI will be much less flashy and will largely go unnoticed.

One last thing. Widespread use of AI will require overrides, security, guards, and safety features in much the same way that robots incorporate similar requirements to prevent or minimize the robot’s ability to do harm to humans. People have been killed by robots, but mostly when the person bypasses or circumvents the robot’s safety features or guards. These latter requirements for safety, security, guards, etc., have not been openly discussed around the AI demonstrations that have been shown thus far. These missing pieces really need to be incorporated into the baseline, not added on after the fact.