Apple hopes to make real-time audio transcription and summarization available system-wide on many of its devices later this year, as the iPhone maker looks to harness AI to deliver efficiency boosts in several of its core applications, AppleInsider has learned.

People familiar with the matter have told us that Apple has been working on AI-powered summarization and greatly enhanced audio transcription for several of its next-gen operating systems. The new features are expected to significantly improve efficiency for users of its staple Notes, Voice Memos, and other apps.

Apple is currently testing the capabilities as feature additions to several app updates scheduled to arrive with the release of iOS 18 later in 2024. They're also expected to make their way to the corresponding apps in macOS 15 and iPadOS 18.

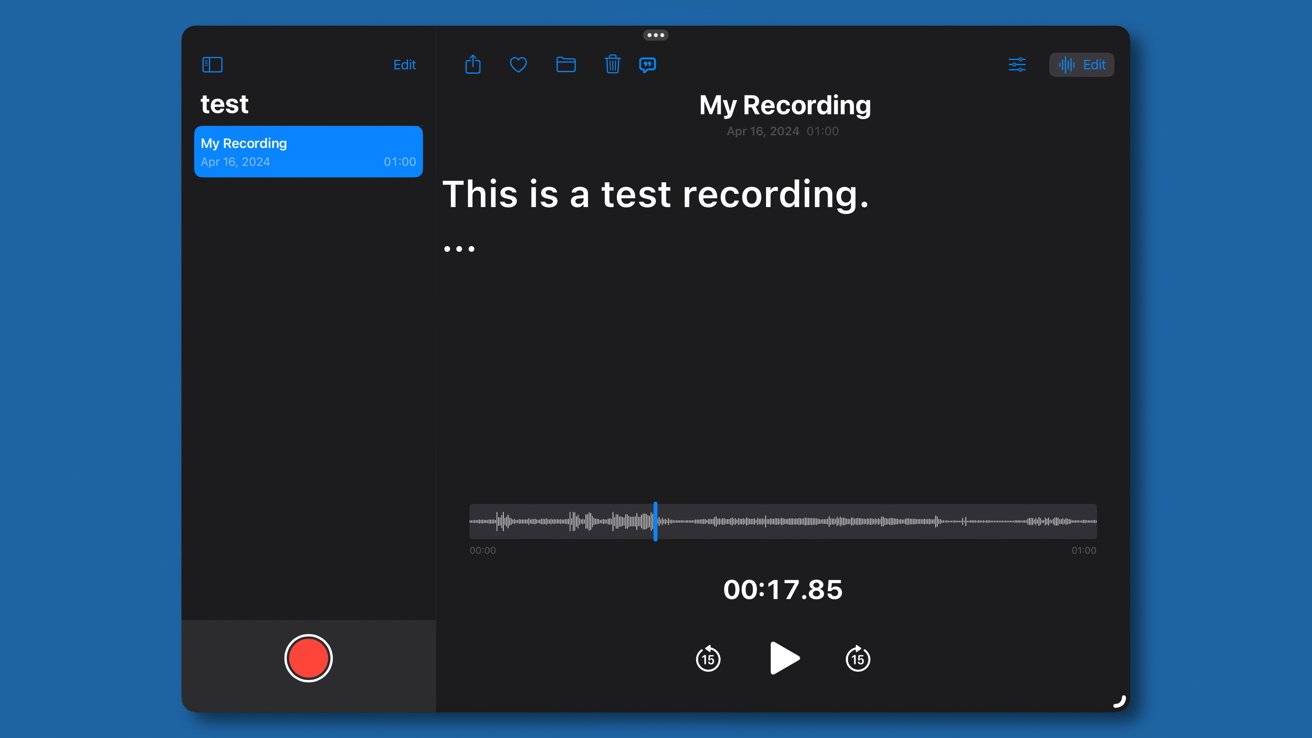

The default Voice Memos application that Apple includes across its device portfolio will be among the first to receive upgraded capabilities. Early versions of the app provide a running transcript of each audio recording, operating similarly to the company's recent Live Voicemail feature.

The transcriptions occupy the central area of the application window, replacing the larger graphical representation of recorded audio found in the existing version of the app.

Transcription is also being pulled into the next version of Notes. Pre-release versions of both apps feature a dedicated transcription button in the form of a speech bubble, according to those familiar with the software. Tapping the new speech bubble will display a transcription of audio recorded within the app.

The transcription tool will go hand-in-hand with — and provide new context to — upcoming audio recording features in Notes, which were first detailed by AppleInsider in April. Specifically, the update will add an option for AI-generated summarization of recorded audio that instantly provides a basic text summary of the key focal points and action items.

The AI summarization feature, coupled with the new in-app audio recording and real-time transcription options, is expected to make Apple's built-in Notes app a true powerhouse. The trio of features should benefit a wide array of practical applications, doing the heavy lifting of distilling large amounts of information into key focal points. For users, this translates to convenience and clarity at a glance.

Students would be able to easily record lectures and classes without relying on third-party tools. When recording from within the new Notes app, users can include a transcription and summary in a note, alongside other media such as images, links, and data structures like tables.

The features will also pay dividends for professionals who regularly attend conference calls, virtual business meetings, or seminars as part of their line of work. Such events often convey large amounts of information, such as statistics, detailed business plans, dates, and schedules, which Apple's AI technology will analyze and reorganize into properly structured summary briefs.

The same applies to classes or lectures at more advanced levels that frequently include an assortment of information, such as definitions, explanations of complex ideas or theoretical principles, illustrative examples, and much more.

Meanwhile, journalists would gain an extremely efficient way of transcribing and summarizing lengthy interviews. Creatives such as authors and screenwriters could easily record key ideas and review them later, without having to play back and listen to most of each recording just to isolate key data points.

Although Apple has gone to great lengths to ensure that its transcription and summarization features generate accurate results, mistakes are inevitable. Keeping the original audio alongside the transcript and AI-generated summary therefore ensures that none of the source information is lost in the transcription or summarization process.

Summarization is only part of a larger Apple AI effort

The new transcription and summarization features will be part of Apple's broader AI push this year. Similar summarization features are also expected to make their way to Safari 18 via Intelligent Browsing, and to the built-in Messages app through integration with Apple's on-device AI software.

The use cases for AI-powered summarization in Safari and Messages are quite different, however. Whereas Notes will let users summarize meetings, conference calls, and lectures, Safari will offer webpage summarization, and Messages will provide condensed versions of message contents.

Apple's AI software could also serve to protect its users' privacy, as certain AI features are expected to function entirely on-device. In the case of audio transcription and advanced AI summarization, however, server-side processing may be required for the time being.

By incorporating summarization and audio transcription into its system applications, the company looks to demonstrate some of the most compelling ways AI can tackle real-world scenarios. The goal of Apple's AI endeavors is to deliver features that help its customers be more efficient and successful in their daily tasks.

At the same time, the company is hoping to better position itself against the proliferation of competitive third-party applications now utilizing AI technology, several of which have seen healthy adoption rates as consumers weave them into their digital lives.

The Otter application, for example, is a recipient of Apple's Editors' Choice Award and offers functionality similar to the features discussed in this article. With it, users can record, transcribe, and summarize meetings via generative AI, all in one app.

Microsoft's OneNote also offers support for audio recording in the form of voice notes, serving as another potential rival for Apple's Notes and Voice Memos applications.

It's worth emphasizing, however, that not every feature Apple tests in pre-release builds makes it into the current release cycle. Apple has been known to cancel projects or delay features to subsequent operating system releases and apps at the last minute, so there are ultimately no guarantees on timing and availability.

That said, the new AI summarization and real-time transcription features still appear to be on track for an expected unveiling alongside Apple's next-generation operating systems at the company's Worldwide Developers Conference (WWDC) in June. They're expected to be joined by improved Calendar and Calculator apps, among others.

Marko Zivkovic

22 Comments

THIS is the sort of AI enhancement that is actually meaningful.

Sure, but we’ve had many of these tools for years already: economical, but not exactly innovative.

So I assume transcription is on-device and summarization is server-side? Anyway, I did a voice-to-text note when I got trained to work closings at my job, and it was great during the first couple of times because I didn’t have to double-check with a coworker and bother them. A summarized transcript would have been even better during that time.

macOS has had a decent Summarize feature for quite some time. Microsoft Office has also had background removal for photos for quite some time. All these AI features, while useful, are not really anything new.

On the surface, this would seem to support the rumor of Apple using Google's on-device Gemini GenAI. The Pixel 8 phones were the first smartphones to do this on-device, and it required an update that installed Gemini Nano to accomplish it.