Apple's fastest computers are the new iPad Pro and the M2 Ultra Mac Studio. Here's how, where, and when the iPad Pro can best Apple's speed-demon desktop.

Whenever Apple or any other tech company rolls out a new product, there is usually a rush to compare it against everything else. Running Apple Silicon chips and rival hardware through a series of standardized tests can demonstrate the performance of a chip, and how it compares to others.

While everyone can easily understand that the higher the score in a test, the better the chip performs, that's not the whole story. You also have to take into account that a benchmark may not just perform one test.

Benchmarks are generally presented in terms of single-core performance and multi-core performance. With two scores to take into account, as well as multiple core types, it's a slippery slope that could lead to confusion.

Here is what you need to know to understand what those tests mean.

Single core, multi-core, and threads

Decades ago, a processor would only perform one task at a time. There was no "core count" to worry about, with calculations and tasks performed one after another.

Over time, the industry started to include multiple cores on a chip. A core is an individual processing unit within a CPU.

With dual-core or quad-core chips, that meant each core could work on a different task independently. As a heavily simplified example, this means one core could be in use for running processes associated with a spreadsheet, while a second core could be used to run an image editing tool.

For a large number of discrete tasks, this can help chew through the pile of work, as each core can take another task from the list once it's completed the first one.
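As a rough sketch of that pile-of-work idea, the Python below hands each task to whichever core frees up first and reports when the last task finishes. The task lengths and the `finish_time` helper are made up for illustration, and every core is assumed to be identical.

```python
import heapq

def finish_time(task_durations, core_count):
    """Return when the last task finishes if each idle core takes the
    next task from the list. All cores are assumed to be identical."""
    if core_count < 1:
        raise ValueError("core_count must be at least 1")
    # Each heap entry is the time at which one core next becomes free.
    cores = [0.0] * core_count
    heapq.heapify(cores)
    for duration in task_durations:
        free_at = heapq.heappop(cores)  # the core that frees up soonest
        heapq.heappush(cores, free_at + duration)
    return max(cores)

# Hypothetical task lengths in seconds, not measurements.
tasks = [4, 3, 2, 2, 1, 1, 1, 2]

for core_count in (1, 2, 4):
    print(core_count, "core(s):", finish_time(tasks, core_count), "seconds")
```

In this toy model, going from one core to four does not make the work finish four times sooner, because the longest task still has to run on a single core.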

There are some chip technologies that can bolster the performance of a CPU, such as splitting each physical core into multiple virtual cores, known as "threads." This lets one core juggle more than one stream of work, which can improve performance further.

In terms of a pile of discrete tasks, multi-core chips can be helpful in chewing through a workload. However, for certain types of applications, multiple cores are required to perform processing of multiple tasks simultaneously.

This is especially useful when applications need the tasks to feed into other processes, or if the required tasks need to be processed in parallel.

These tend to be demanding applications, like creative tools, productivity suites, and some games. Many apps on an iPhone or iPad, by contrast, will only really work in a single-core way, even if other cores are available.

Performance and efficiency cores

Not only have processors evolved over the years to have multiple cores, but how those cores work has also changed.

The old-fashioned chips mentioned above would run as fast as they could. If there were more cores on the chip, they would be built to be identical, and would similarly run at full pelt when required.

This is referred to as symmetric multiprocessing (SMP), but many modern chips go down a different route. Asymmetric multiprocessing (AMP) processors can have multiple cores, but they are built differently.

Typically there are two different cores to consider: Performance cores (P cores) and Efficiency cores (E cores).

A processor can have different amounts of each core. For example, the M4 can have a nine-core configuration of three performance cores and six efficiency cores, or a ten-core version that brings the performance core count up to four.

Performance cores and efficiency cores are similar in that they can complete the same tasks and produce the same results. However, they are designed with different priorities.

A performance core is usually larger and runs at higher clock speeds. It can complete tasks quickly, but usually at a cost to energy resources and thermals.

Efficiency cores are typically smaller, and are designed to be as power-efficient as possible.

As a visualization, imagine you have to travel from one town to another. Driving a Ferrari will get you to your destination fast, but at a cost of fuel, while a minivan will be slow but economical.

Both cars will get you where you need to go, but speed and resource usage vary wildly.

Having two types of cores available on a chip offers multiple benefits. For example, background tasks can be allocated to efficiency cores, saving the performance ones for tougher workloads.

Doing this can also reduce energy usage over time, which is essential for mobile devices like an iPhone or iPad.
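The trade-off can be put into numbers with a small Python sketch. The speed and power figures below are invented purely to show the shape of the comparison, and are not Apple's specifications. The `Core` class and `run` function are likewise hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Core:
    name: str
    units_per_second: float  # how much work the core completes each second
    watts: float             # power drawn while the core is busy

def run(core, work_units):
    """Return (seconds, joules) for one core to finish the given work."""
    seconds = work_units / core.units_per_second
    joules = core.watts * seconds  # energy = power x time
    return seconds, joules

# Made-up figures for illustration only.
performance = Core("performance", units_per_second=10, watts=5)
efficiency = Core("efficiency", units_per_second=4, watts=1)

for core in (performance, efficiency):
    seconds, joules = run(core, work_units=100)
    print(f"{core.name}: {seconds:.0f} s, {joules:.0f} J")
```

With these assumed numbers, the efficiency core takes two and a half times as long but uses half the energy, which is the Ferrari and minivan comparison in miniature.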

Benchmarking and interpreting results

Benchmarking refers to using defined processes or tools to run performance checks against a chip or a piece of hardware.

This can take the form of scoring how long certain tasks take to complete. In some cases, there are benchmark suites that perform a battery of tests, and offer up an overall score.

One of the most widely used testing tools is Geekbench, with its single-core and multi-core tests often cited in reviews.

As the name implies, the single-core test has Geekbench setting tasks that are limited to one single core. This typically is a task given to a performance core, rather than an efficiency core.

Multi-core tests require the use of all available cores, regardless of whether they are performance or efficiency cores.

In interpreting the results, a single-core score could be fairly similar across a family of chips.

For example, within each M-series generation, the performance cores are essentially identical in construction, so you will get similar scores for single-core tests. That is regardless of whether you're using an Ultra chip or one of the lower tiers.

You will find a lot more variation in multi-core testing since all cores are being used for the test. You'll often find that chips with higher core counts fare better in multi-core testing.

However, you also have to bear in mind that the result depends on the types of cores available, not just the number of them. A chip with two performance cores and eight efficiency cores will fare worse in a multi-core test than one with six performance and four efficiency cores.
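A back-of-the-envelope Python model shows why the mix matters even when the total core count is the same. It assumes, purely for illustration, that an efficiency core does half the work of a performance core and that performance scales perfectly with core count, which real benchmarks do not. The `relative_throughput` function and the 0.5 ratio are hypothetical.

```python
def relative_throughput(p_cores, e_cores, e_core_ratio=0.5):
    """Estimate multi-core throughput in 'performance core equivalents'.

    e_core_ratio is an assumed figure for how much work an efficiency
    core does compared with a performance core. It is not a measured value.
    """
    return p_cores * 1.0 + e_cores * e_core_ratio

# Two ten-core chips with different splits of core types.
print("2P + 8E:", relative_throughput(2, 8))
print("6P + 4E:", relative_throughput(6, 4))
```

Whatever ratio you pick below 1.0, the chip with more performance cores comes out ahead in this model, even though both chips have ten cores.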

To the average smartphone user, the single-core performance is the most important of the two. Since it is quite hard to create programs that can benefit from multiple cores running together, most everyday apps will only really run in a single-core way.

M4 iPad Pro tops the single-core pile

Apple's introduction of a new iPad Pro brings with it a new chip, and a surprising situation when looking at benchmarks.

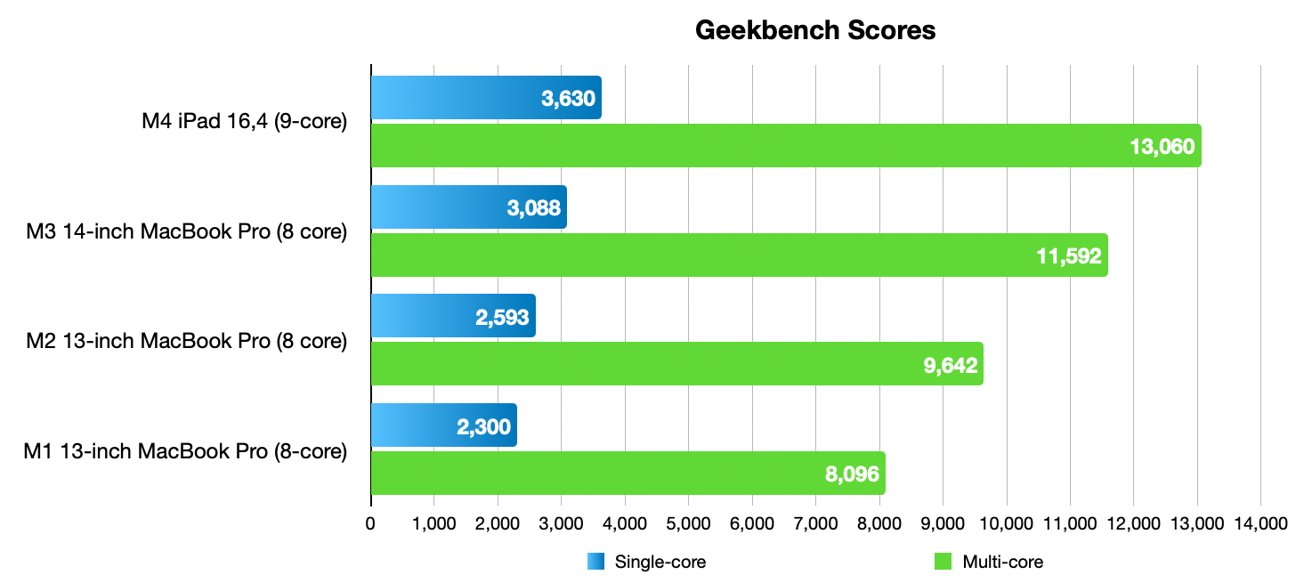

Looking at the single-core scores in the Geekbench browser, the top Mac is the 16-inch MacBook Pro with an M3 Max using 14 CPU cores. It scored 3,131 points according to the results.

The nine-core version of the M4 iPad Pro scored 3,630 in its own test.

At first glance, this does seem like the M4 in the iPad Pro is Apple's top self-designed chip. However, that's only if you look at it from single-core performance alone, as it's a bit different when looking at multi-core performance.

The aforementioned M4 iPad Pro reaches a very good 13,060 for multi-core testing. Looking at the iPhone and iPad listings, it's much faster than its nearest competitor, an M2 iPad Pro scoring 9,646.

But, on the Mac list, the M4's multi-core score is far behind a lot of Macs. The top of the list is the M2 Ultra in the Mac Studio, which has 24 CPU cores and scored 21,329.

Going down the chart, the Macs beating the new iPad Pro's M4 all have more cores. Simply put, the more cores available, the higher the multi-core score can get.
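To put those Geekbench figures in proportion, here is a short Python sketch using only the scores quoted above. The `percent_lead` helper is a made-up name. The per-core figure is a crude average that ignores the difference between performance and efficiency cores, so treat it as a rough guide only.

```python
def percent_lead(score, baseline):
    """Return how far score is ahead of baseline, as a percentage."""
    return (score - baseline) / baseline * 100

# Scores as quoted in this article.
m4_single, m3_max_single = 3630, 3131
m4_multi, m2_ipad_multi, m2_ultra_multi = 13060, 9646, 21329

print(f"Single-core, M4 vs M3 Max: {percent_lead(m4_single, m3_max_single):+.1f}%")
print(f"Multi-core, M4 vs M2 iPad Pro: {percent_lead(m4_multi, m2_ipad_multi):+.1f}%")
print(f"Multi-core, M4 vs M2 Ultra: {percent_lead(m4_multi, m2_ultra_multi):+.1f}%")

# Crude average: multi-core score divided by CPU core count
# (nine cores for this M4, 24 CPU cores for the M2 Ultra).
print(f"Multi-core points per core, M4: {m4_multi / 9:.0f}")
print(f"Multi-core points per core, M2 Ultra: {m2_ultra_multi / 24:.0f}")
```

The M4 gets more out of each core on average, but the M2 Ultra simply has far more cores to throw at the test.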

While not the top-performing multi-core chip, it's worth bearing in mind that we're talking about the M4, which should be the base model of that chip family. There will almost certainly be M4 Pro and M4 Max chips on the way, which could also have higher core counts.

If Apple brings out an M4 Ultra, and it seems likely that it will, it should give the M4 family a considerable boost in the multi-core rankings in the future.

And then, that Mac will be at the top of the speed heap in every regard.

Power in practice

As far as benchmarks go, they are a good method for determining the peak performance of a computer or a mobile device. Knowing that your new shiny MacBook Pro can perform at a high level is comforting.

Knowing the true performance before purchasing is also useful. Equal core counts do not equal similar performance, especially if the split of performance and efficiency cores is too different.

Battery is not a factor in performance benchmarks, since they are run based on there being an abundance of power available. In reality, power consumption is a big issue, especially for mobile computing or for hardware like the iPhone and iPad.

This is where the differences in core usage come into play. You could imagine that an iPhone would err towards using the efficiency cores to be more economical in its battery usage.

Without these differences in cores, it's easy to imagine that a smartphone could burn through battery power at a much faster rate. Efficiency cores are there to ensure that you can use your iPhone for as long as possible.

Performance cores can certainly be useful in gaming, for example. Efficiency cores will at least mean you can check your email long into the night.

Malcolm Owen


![Dual-core and quad-core chips help revolutionize the PC industry [Pexels/Sergei Starostin]](https://photos5.appleinsider.com/gallery/59732-122118-chipsSergeiStarostin-xl.jpg)

![The performance cores in the M4 are physically larger than the efficiency cores [Apple]](https://photos5.appleinsider.com/gallery/59732-122117-Apple-M4-chip-new-CPU-240507-xl.jpg)


6 Comments

In reality the M4 is faster by roughly the same amount as the M3 was over the M2.

Nothing to see here, the M4 is a brand new chip, so it's bound to be faster, which still doesn't make the iPad better than the M3 MacBook Pro's

Sheer speed is one thing. Usability another.

I love my iPad Pro, but it'll never match the usability and flexibility of my MacBook Pro.

There’s an obsession with core performance. Multicore performance in particular is almost pointless for most people since there are relatively few apps that are designed to execute across multiple cores. For most people single core performance , RAM and memory bandwidth are the limiting factors that determine the speed experience people will observe. Apple is famous for focusing their product announcements on CPU performance, yet configuring systems with slow SSD’s or only 8 GB of RAM or slow memory bandwidth. All Macs should come with at least 16 GB of RAM and dual SSD’s. The marginal costs aren’t that much, but the performance improvements are significant. But Apple likes to gain lots of profit from low balling a base configuration that is performance constrained, requiring an owner to pay exorbitant upgrade charges to get more RAM or storage.

Heterogenous computing is inevitable. It just makes no sense to keep making cores with same speed/power when a lot of tasks only need specific repeated instructions that can be done in less time with better efficiency with smaller cores.