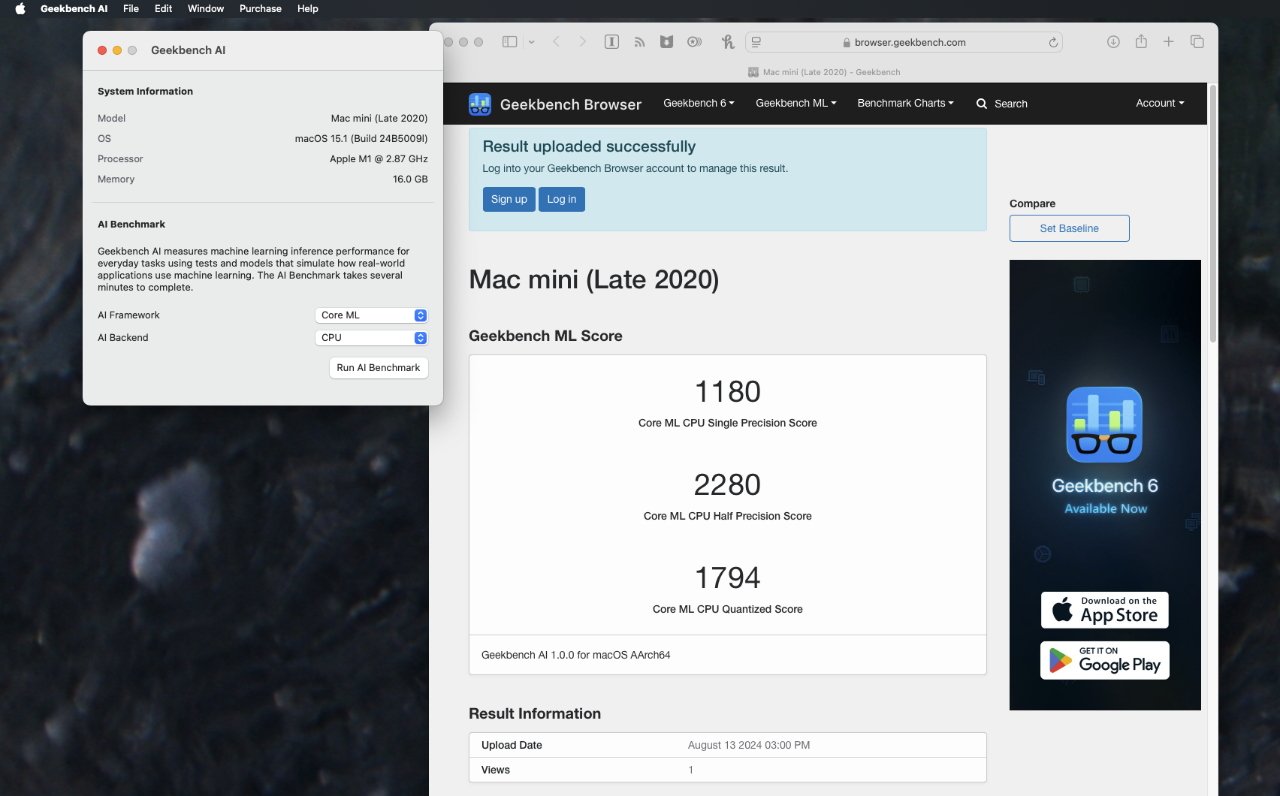

Following months of beta testing, the newly renamed Geekbench AI 1.0 is now available, aiming to give users comparable measurements of artificial intelligence performance across iOS, macOS, and other platforms.

Originally released in beta form under the name Geekbench ML (for Machine Learning) in December 2023, the tool allowed for comparisons between the Mac and the iPhone. With the rename to Geekbench AI, its makers claim to have radically increased the app's ability to usefully measure and gauge performance.

"With the 1.0 release, we think Geekbench AI has reached a level of dependability and reliability that allows developers to confidently integrate it into their workflows," wrote the company in a blog post, "[and] many big names like Samsung and Nvidia are already using it."

The regular Geekbench has long provided separate scores for the single-core and multi-core performance of devices. Geekbench AI instead measures three different types of workload.

"Geekbench AI presents its summary for a range of workload tests accomplished with single-precision data, half-precision data, and quantized data," continues the company, "covering a variety used by developers in terms of both precision and purpose in AI systems."
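For readers unfamiliar with the three data types named in that quote, the following is a rough Python sketch of how the same values lose detail as they move from single precision to half precision to 8-bit quantized integers. It is an illustration only and not how Geekbench AI itself is implemented. The function names, the sample weights, and the simple symmetric scaling scheme are all assumptions made for the example.

```python
import struct

def to_single(x: float) -> float:
    """Round-trip a value through 32-bit (single-precision) storage."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def to_half(x: float) -> float:
    """Round-trip a value through 16-bit (half-precision) storage."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale.

    This is a simple symmetric scheme chosen for illustration; real
    frameworks offer several quantization strategies.
    """
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the quantized integers."""
    return [q * scale for q in quantized]

# Hypothetical model weights, used only to show the rounding error.
weights = [0.1, -0.7312, 0.25, 1.5]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

for original, approx in zip(weights, restored):
    print(
        f"original={original!r} "
        f"single={to_single(original)!r} "
        f"half={to_half(original)!r} "
        f"int8={approx!r}"
    )
```

Smaller formats trade accuracy for speed and memory, which is why a benchmark may report them separately: hardware that is fast on quantized data is not necessarily fast on single-precision data.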

The firm stresses that the intention is to produce comparable measurements that reflect real-world use of AI — as wide-ranging as that is.

Geekbench AI 1.0 is available direct from the developer. As with previous Geekbench releases, the tool is free for most users, and it runs on macOS, iOS, Android and Windows.

There is a paid version called Geekbench AI Pro. It allows developers to keep their scores private instead of automatically uploading to a public site.

William Gallagher


5 Comments

Scrolling through the entries so far, it seems that the Neural Engine works great for the quantized version of the benchmark and so-so for half precision. But it seems Apple hardware in general is kind of lame for the single precision version (with the best hardware being the GPU in the M3 Max).

Happy to be corrected, but I take this to mean that Apple hardware is good for on-device inference but crappy for model training.

I wonder how much of the issue is Core ML needing more optimization versus Apple needing beefier GPUs...