Apple Vision Pro's eye-tracking technology offers a new way to type, but researchers have shown it can be exploited to steal sensitive information. Here's what you need to know to protect your data.

New technologies always come with new vulnerabilities. One such vulnerability, GAZEploit, exposes users to potential privacy breaches on Apple Vision Pro FaceTime calls.

GAZEploit, developed by researchers from the University of Florida, CertiK Skyfall Team, and Texas Tech University, uses eye-tracking data in virtual reality to guess what a user is typing.

When users don a virtual or mixed reality device, like the Apple Vision Pro, they can type by looking at keys on a virtual keyboard. Instead of pressing physical buttons, the device tracks eye movements to determine the selected letters or numbers.

The virtual keyboard is where GAZEploit comes in. It analyzes the data from eye movements and guesses what the user is typing.

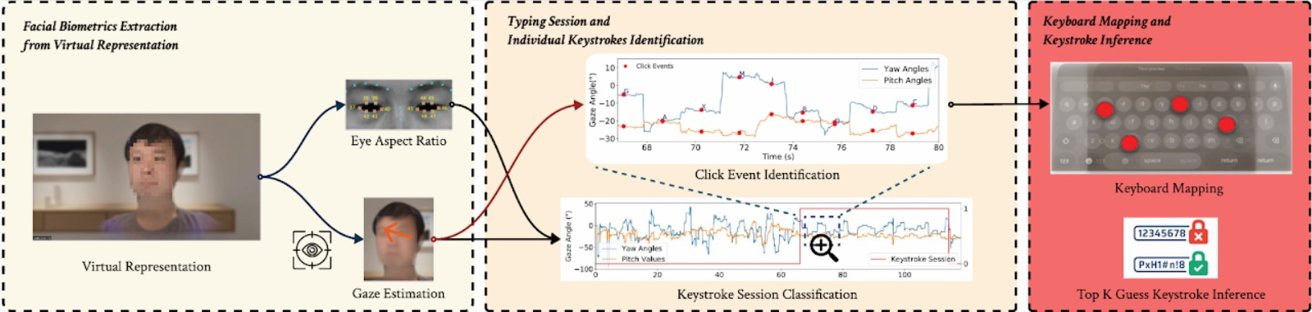

GAZEploit works by recording the eye movements of the user's virtual avatar. It focuses on the eye aspect ratio (EAR), which measures how wide a person's eyes are open, and eye gaze estimation, which tracks exactly where they're looking on the screen.
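To make the EAR idea concrete, here is a minimal sketch using the six-landmark formulation common in blink-detection work. The article does not say which exact formula GAZEploit uses, so the landmark ordering and the function name `eye_aspect_ratio` are assumptions for illustration only.

```python
import math

def eye_aspect_ratio(landmarks):
    """Return the eye aspect ratio for one eye.

    `landmarks` is an assumed ordering of six (x, y) points:
    p1 and p4 are the eye corners, p2 and p3 sit on the upper lid,
    p6 and p5 sit directly below them on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = landmarks
    vertical = math.dist(p2, p6) + math.dist(p3, p5)   # lid-to-lid openings
    horizontal = math.dist(p1, p4)                     # corner-to-corner width
    if horizontal == 0:
        raise ValueError("eye corners coincide; cannot compute EAR")
    return vertical / (2.0 * horizontal)

# Hypothetical landmark sets for an open and a fully closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 0), (1, 0)]
```

A wide-open eye gives a larger ratio, and the value drops toward zero as the lids close, which is what makes the measure useful for spotting blinks.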

By analyzing these factors, hackers can determine when the user is typing and even pinpoint the specific keys they're selecting.

When users type in VR, their eyes move in a particular way and they blink less often. GAZEploit detects this and uses a type of machine learning model called a recurrent neural network (RNN) to analyze these eye patterns.
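The blink-rate signal described above can be illustrated with a simple threshold heuristic over a series of EAR values. This is not the RNN the researchers trained. It is only a rough sketch of the kind of feature such a model might pick up on, and the threshold, minimum frame count, and function names are all assumed values chosen for illustration.

```python
def count_blinks(ear_series, closed_threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive low-EAR frames."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks

def blinks_per_minute(ear_series, fps):
    """Blink rate for a clip sampled at `fps` frames per second."""
    if not ear_series or fps <= 0:
        raise ValueError("need a non-empty series and a positive frame rate")
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series) / minutes

# Hypothetical EAR values: two blinks, plus one single-frame dip that is ignored.
sample = [0.3, 0.3, 0.1, 0.1, 0.3, 0.1, 0.3, 0.1, 0.1, 0.1]
```

Comparing the blink rate of a video segment against a user's usual rate is one plausible way to flag a likely typing session before looking at gaze direction.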

The researchers trained the RNN with data from 30 different people and got it to accurately identify typing sessions 98% of the time.

Guessing the right keystrokes

Once a typing session is identified, GAZEploit predicts the keystrokes by analyzing rapid eye movements, called saccades, followed by pauses, or fixations, when the eyes settle on a key. The attack matches these eye movements to the layout of a virtual keyboard, figuring out the letters or numbers being typed.
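The saccade-and-fixation step can be sketched as follows: group consecutive gaze samples that barely move into fixations, drop the fast jumps between them, and map each fixation to the nearest key. This is a simplified stand-in for the researchers' method, not a reproduction of it. The key coordinates in `KEY_CENTERS`, the thresholds, and all function names are hypothetical.

```python
import math

# Hypothetical key centres in arbitrary keyboard units (not Apple's real layout).
KEY_CENTERS = {
    "q": (0.0, 0.0), "w": (1.0, 0.0), "e": (2.0, 0.0),
    "a": (0.25, 1.0), "s": (1.25, 1.0),
}

def centroid(points):
    """Average position of a list of (x, y) points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def find_fixations(gaze, max_step=0.15, min_len=3):
    """Return centroids of runs where frame-to-frame movement stays small.

    A jump larger than `max_step` is treated as a saccade and ends the run;
    runs shorter than `min_len` samples are discarded as noise.
    """
    fixations, current = [], []
    for point in gaze:
        if current and math.dist(point, current[-1]) > max_step:
            if len(current) >= min_len:
                fixations.append(centroid(current))
            current = []
        current.append(point)
    if len(current) >= min_len:
        fixations.append(centroid(current))
    return fixations

def nearest_key(point, key_centers=KEY_CENTERS):
    """Pick the key whose centre is closest to the fixation point."""
    return min(key_centers, key=lambda k: math.dist(point, key_centers[k]))

def guess_keys(gaze):
    return [nearest_key(f) for f in find_fixations(gaze)]

# Hypothetical gaze trace: dwell near q, jump, dwell near w, jump, dwell near s.
trace = [
    (0.0, 0.0), (0.02, 0.01), (0.01, -0.01),
    (0.6, 0.1),
    (1.0, 0.0), (1.02, 0.01), (0.99, -0.02),
    (1.3, 1.0), (1.26, 1.02), (1.25, 0.98),
]
```

Calling `guess_keys(trace)` on this toy trace yields `["q", "w", "s"]`. A real attack also has to estimate where the keyboard sits in the user's view, which this sketch takes as given.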

GAZEploit can accurately identify the selected keys by calculating the gaze's stability during fixations. In their tests, the researchers reported 85.9% accuracy in predicting individual keystrokes and nearly perfect 96.8% recall in recognizing typing activity.

Since the attack can be carried out remotely, attackers only need access to video footage of the avatar to analyze eye movements and infer what is being typed.

Remote access means that even in everyday scenarios such as virtual meetings, video calls, or live streaming, personal information like passwords or sensitive messages could be compromised without the user's knowledge.

How to protect yourself from GAZEploit

To protect against potential attacks like GAZEploit, users should take several precautions. First, they should avoid entering sensitive information, such as passwords or personal data, using eye-tracking methods in virtual reality (VR) environments.

Instead, it's safer to use physical keyboards or other secure input methods. Keeping software updated is also crucial, as Apple often releases security patches to fix vulnerabilities.

Finally, adjusting privacy settings on VR/MR devices to limit or disable eye-tracking when not needed can further reduce exposure to risks.

Andrew Orr

7 Comments

The method makes sense, but where is GAZEploit running? Is it on your Vision Pro (and shouldn't privacy settings limit access to the avatar?) or is it running on another device you are communicating with?

Apple already fixed this. visionOS 1.3 turns off the Persona when typing.

Since Apple already manipulates one’s gaze (at least on iOS) so that it appears as though one is looking right at one’s FaceTime caller, why not just fix the pupils (or otherwise naturally animate them) while one has a virtual keyboard active?