For developers, threading is an important issue that impacts game performance. Here's how task scheduling works in Apple Silicon games.

Games are among the most compute-intensive workloads on modern computers, placing heavy demands on both the CPU and the GPU. Hundreds or thousands of jobs may have to be processed every frame.

In order to make your game run on Apple Silicon as efficiently as possible, you'll need to optimize your code. Maximum efficiency is the name of the game here.

Apple Silicon introduced built-in GPUs and on-package RAM for fast access and performance. The Apple Fabric, part of the M1 through M3 architecture, connects the CPU, GPU, and unified memory, so data doesn't have to be copied between separate memory stores, which improves performance.

Cores

Each Apple Silicon CPU includes efficiency cores (E-cores) and performance cores (P-cores). E-cores are designed to run in an extremely low-power mode, while P-cores are made to execute code as quickly as possible.

Threads, the individual paths of code execution, are placed on both types of cores automatically by the OS scheduler. Developers control when threads run or don't run, and can put them to sleep or wake them.

At runtime, several software layers can interact with one or more CPU cores to orchestrate program execution.

These include:

- The XNU kernel and scheduler

- The Mach microkernel core

- The execution scheduler

- The POSIX (Portable Operating System Interface) UNIX layer

- Grand Central Dispatch, or GCD (Apple-specific threading technology based on blocks)

- NSObject

- The application layer

NSObject is the root class of most Objective-C class hierarchies. It comes from NeXTSTEP, the operating system Apple acquired when it bought NeXT, Steve Jobs' second company, in 1997.

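GCD works by submitting blocks (self-contained sections of code, similar to closures) to dispatch queues. GCD runs each block on a thread it manages, and when a block finishes, it can use a callback or closure to complete its work and deliver a result.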

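POSIX includes pthreads (POSIX threads), which are independent paths of code execution. Apple's NSThread class is a multithreading class built on top of a pthread, with some additional scheduling information such as thread priority. You can use NSThread to run code on its own thread, which the scheduler then places on a CPU core. Its similarly named cousin NSTask, despite the name, launches a separate process rather than scheduling a thread.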
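A minimal sketch of the pthreads layer in C, using only the standard POSIX calls pthread_create and pthread_join: two threads each sum half of a range, and the caller waits for both before combining the results. The SumJob type and the function names are hypothetical, chosen for illustration; NSThread and GCD are not shown.

```c
#include <pthread.h>

/* Hypothetical job description: sums the integers in [begin, end). */
typedef struct {
    long begin;
    long end;
    long result;
} SumJob;

/* Thread entry point; pthreads require the void *(*)(void *) signature. */
static void *sum_range(void *arg) {
    SumJob *job = (SumJob *)arg;
    long total = 0;
    for (long i = job->begin; i < job->end; i++) {
        total += i;
    }
    job->result = total;  /* safe to read after pthread_join returns */
    return NULL;
}

/* Splits [0, n) across two pthreads, waits for both, and combines results.
   Returns -1 if a thread could not be created. */
long sum_on_two_threads(long n) {
    pthread_t threads[2];
    SumJob jobs[2] = {
        {0, n / 2, 0},
        {n / 2, n, 0},
    };
    int created = 0;
    for (int i = 0; i < 2; i++) {
        if (pthread_create(&threads[i], NULL, sum_range, &jobs[i]) != 0) {
            break;
        }
        created++;
    }
    for (int i = 0; i < created; i++) {
        pthread_join(threads[i], NULL);  /* blocks until thread i finishes */
    }
    if (created < 2) {
        return -1;
    }
    return jobs[0].result + jobs[1].result;
}
```

Each pthread_create call asks the OS to schedule a new thread, which is exactly the kind of overhead the rest of this article tries to minimize.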

All of these layers work in concert to provide software execution for the operating system and apps.

Guidelines

When developing your game, there are several things you will want to keep in mind to achieve maximum performance.

First, your overall design goal should be to lighten the workload placed on the CPU cores and GPUs. The code that runs the fastest is the code that never has to be executed.

Reducing the amount of code that runs, and scheduling the remaining work efficiently, is of paramount importance for keeping your game running smoothly.

Apple has several recommendations you can follow for maximum CPU efficiency. These guidelines also apply to Intel-based Macs.

Idle time and scheduling

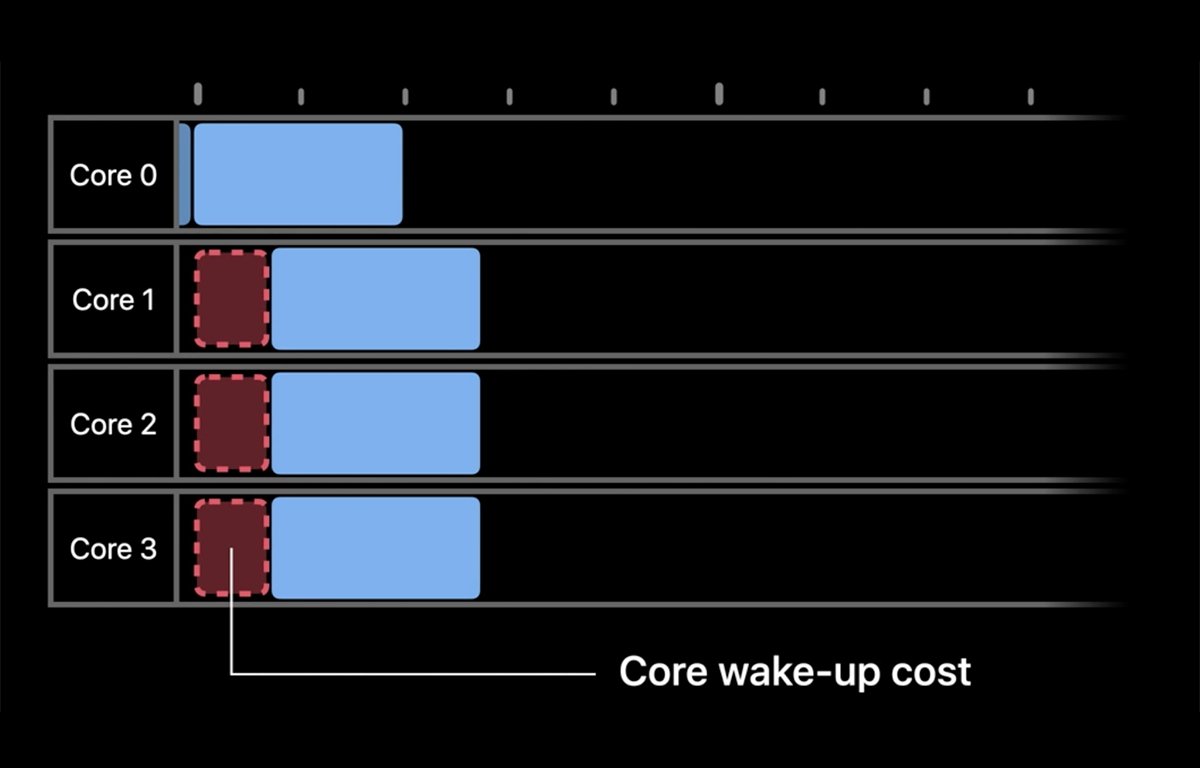

First, when a specific GPU core is not being used, it goes idle. When it is awakened for use, there is a small bit of wake-up time, which is a small cost. Apple shows it like this:

Second, there is the cost of scheduling. When a core wakes up, the OS scheduler takes a small amount of time to decide which core to run a task on, schedule the code on that core, and begin execution.

Semaphores or thread signaling also have to be set up and synchronized, which takes a small amount of time.

Third, there is some synchronization latency as the scheduler figures out which cores are already executing tasks and which are available for new tasks.

All of these setup costs impact how your game performs. Over millions of iterations during execution, these small costs can add up and affect overall performance.
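To see how quickly these costs add up, here is a back-of-the-envelope calculation using purely hypothetical numbers, not Apple measurements: a game running at 60 frames per second (about 16.7 ms per frame) schedules 2,000 jobs per frame, and each job pays an assumed combined wake-up, scheduling, and synchronization cost of 2 µs.

```latex
\begin{aligned}
t_{\text{overhead}} &= N_{\text{jobs}} \times c_{\text{job}} = 2000 \times 2\,\mu\text{s} = 4\,\text{ms} \\
\frac{t_{\text{overhead}}}{t_{\text{frame}}} &= \frac{4\,\text{ms}}{16.7\,\text{ms}} \approx 24\%
\end{aligned}
```

Under these assumptions, roughly a quarter of every frame would go to overhead rather than game work, which is why reducing the number of separately scheduled jobs matters.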

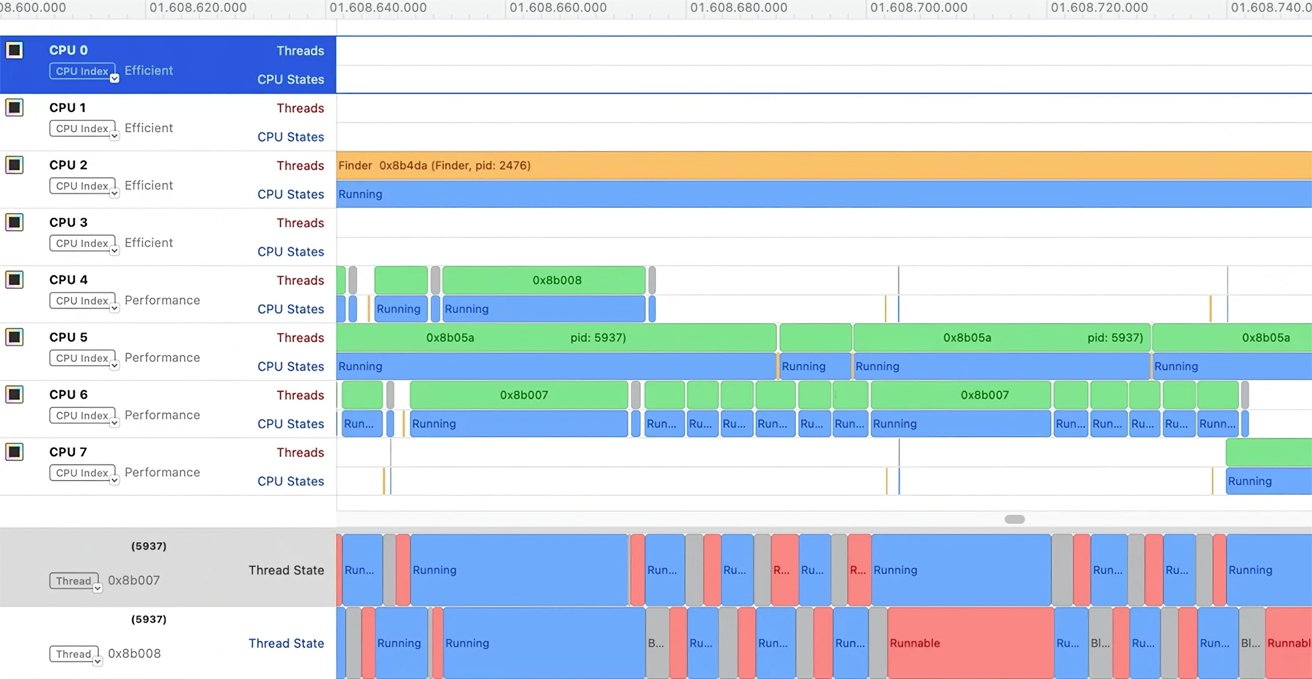

You can use the Apple Instruments app to discover and track how these costs affect runtime performance. Apple shows an example of a running game in Instruments like this:

In this example, a start/wait thread pattern emerges on the same CPU core. These tasks could have run in parallel on multiple cores for better performance.

This loss of parallelism is caused by extremely short job execution times, which in some cases are nearly as short as the time it takes to wake a single CPU core. If each job ran a little longer, the work could have been picked up by other cores, and execution as a whole would have finished faster.

To solve this problem, Apple recommends using the correct job scheduling granularity: group extremely small jobs into larger ones so that each job's execution time comfortably exceeds the core wake-up and scheduling overhead instead of approaching it.
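The granularity rule can be made concrete with a little arithmetic. The helper below is a hypothetical sketch, not an Apple formula: given an assumed per-job execution time and an assumed per-batch overhead (wake-up plus scheduling plus synchronization), it returns the smallest number of tiny jobs to group so that overhead stays at or below a chosen percentage of each batch's total time. It solves overhead / (n × job + overhead) ≤ p / 100 for n using integer math.

```c
#include <stdint.h>

/*
 * Hypothetical helper: smallest batch size n such that
 *   overhead_ns / (n * job_ns + overhead_ns) <= max_overhead_pct / 100
 * which rearranges to
 *   n >= overhead_ns * (100 - max_overhead_pct) / (max_overhead_pct * job_ns)
 */
uint64_t min_jobs_per_batch(uint64_t job_ns, uint64_t overhead_ns,
                            uint64_t max_overhead_pct) {
    if (job_ns == 0 || max_overhead_pct == 0 || max_overhead_pct >= 100) {
        return 1;  /* invalid or degenerate inputs: no batching */
    }
    uint64_t num = overhead_ns * (100 - max_overhead_pct);
    uint64_t den = max_overhead_pct * job_ns;
    uint64_t n = (num + den - 1) / den;  /* integer ceiling division */
    return n == 0 ? 1 : n;               /* at least one job per batch */
}
```

For example, with hypothetical 1 µs jobs, 10 µs of overhead, and a 10% target, min_jobs_per_batch(1000, 10000, 10) returns 90.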

There is always a small scheduling cost whenever a thread runs. Running several tiny tasks back to back in one thread removes some of that overhead, because it reduces the total number of times threads have to be scheduled.
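A sketch of running several tiny tasks back to back in one thread. The task, the batch size, and the function names are hypothetical. Each call to run_batch stands for one job a scheduler would have to start; here the batches run serially on the calling thread so the example stays self-contained, and the return value shows how many scheduling events remain after batching.

```c
#include <stddef.h>

/* Hypothetical tiny task: doubles one element. */
static void tiny_task(float *data, size_t i) {
    data[i] *= 2.0f;
}

/* One batch = one scheduling event: runs tasks [begin, end) back to back
   on the calling thread. */
static void run_batch(float *data, size_t begin, size_t end) {
    for (size_t i = begin; i < end; i++) {
        tiny_task(data, i);
    }
}

/* Splits count tasks into batches of batch_size and returns the number of
   batches, i.e. how many jobs a scheduler would have to start. In a real
   engine each batch would be handed to a worker thread. */
size_t run_in_batches(float *data, size_t count, size_t batch_size) {
    size_t batches = 0;
    if (batch_size == 0) {
        batch_size = 1;
    }
    for (size_t begin = 0; begin < count; begin += batch_size) {
        size_t end = begin + batch_size < count ? begin + batch_size : count;
        run_batch(data, begin, end);
        batches++;
    }
    return batches;
}
```

With 1,000 tasks and a batch size of 90, only 12 jobs need to be scheduled instead of 1,000.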

Next, get as many jobs ready as possible before scheduling them for execution. If threads are woken before their work is ready, some of them will run, but others may be moved off-core while they wait for jobs to be scheduled.

When threads are moved off-core, they block. In general, signaling and waiting on threads can reduce performance.

Waking and pausing threads repeatedly can be a performance problem.
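The advice to get jobs ready before scheduling them can be sketched with a pthread mutex and condition variable. In this hypothetical FrameQueue, worker threads sleep until the producer has filled the queue for the whole frame, and the producer then wakes them all with a single pthread_cond_broadcast rather than signaling once per job. The job payload (squaring an integer) is a stand-in for real work, and claiming one job per lock acquisition keeps the sketch short; a real engine would hand out batches.

```c
#include <pthread.h>
#include <stddef.h>

#define MAX_JOBS 256
#define NUM_WORKERS 4

/* Hypothetical per-frame job queue. The producer fills it completely and
   only then publishes it, so workers wake once and find work waiting. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t ready;
    int jobs[MAX_JOBS];
    size_t count;   /* number of jobs in the queue */
    size_t next;    /* index of the next unclaimed job */
    int published;  /* set once every job for the frame is in place */
    long total;     /* combined result of all processed jobs */
} FrameQueue;

static void *worker(void *arg) {
    FrameQueue *q = arg;
    pthread_mutex_lock(&q->lock);
    while (!q->published) {
        pthread_cond_wait(&q->ready, &q->lock);  /* sleep until published */
    }
    while (q->next < q->count) {
        int value = q->jobs[q->next++];          /* claim a job under the lock */
        pthread_mutex_unlock(&q->lock);
        long result = (long)value * value;       /* stand-in for real work */
        pthread_mutex_lock(&q->lock);
        q->total += result;
    }
    pthread_mutex_unlock(&q->lock);
    return NULL;
}

/* Prepares all n jobs first, then wakes every worker with one broadcast. */
long run_frame(int n) {
    FrameQueue q = {.count = 0, .next = 0, .published = 0, .total = 0};
    pthread_mutex_init(&q.lock, NULL);
    pthread_cond_init(&q.ready, NULL);

    pthread_t threads[NUM_WORKERS];
    int started = 0;
    while (started < NUM_WORKERS &&
           pthread_create(&threads[started], NULL, worker, &q) == 0) {
        started++;
    }

    pthread_mutex_lock(&q.lock);
    for (int i = 0; i < n && i < MAX_JOBS; i++) {
        q.jobs[q.count++] = i;                   /* get every job ready... */
    }
    q.published = 1;
    pthread_cond_broadcast(&q.ready);            /* ...then wake workers once */
    pthread_mutex_unlock(&q.lock);

    for (int i = 0; i < started; i++) {
        pthread_join(threads[i], NULL);
    }
    pthread_mutex_destroy(&q.lock);
    pthread_cond_destroy(&q.ready);
    return q.total;
}
```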

Parallelize nested for loops

When parallelizing nested for loops, split the work at the outer loop. This gives each job a coarser granularity and leaves the inner loops to run uninterrupted, which can improve overall performance.

This also makes better use of the CPU caches and reduces the number of thread synchronization points.

Job pools and the kernel

Apple also recommends using job pools to leverage worker threads for better performance.

A worker thread is, in general, a background thread that performs work on behalf of another thread, usually called a task thread, or on behalf of a higher-level part of an app or of the OS itself.

Worker threads can be created by different parts of the software stack. Some worker threads can be put to sleep so they are not actively running or scheduled to run.

In a job pool, idle worker threads steal jobs from other worker threads. Every thread wake-up has a scheduling cost, but stealing a job happens in user space, which makes starting a job much cheaper than going through the OS kernel, where the scheduler runs.

This avoids the kernel's scheduling overhead for each individual job.

The OS kernel is the core of the OS, where most background and low-level work takes place. User space is where most app and game code actually runs, including worker threads.

Using job stealing in user space skips the kernel scheduling overhead, improving performance. Remember - the fastest piece of code possible is the piece of code that never has to run.
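A simplified job-stealing pool in C11, under stated assumptions. Each worker starts with its own range of job indices and claims them with atomic_fetch_add; when its range runs out, it steals the remaining indices from other workers' ranges with the same atomic operation. Claiming or stealing a job is a user-space atomic instruction, so the kernel is not involved once the workers are running. This is a deliberately small stand-in, not the lock-free work-stealing deque (such as the Chase-Lev deque) a production engine would typically use, and the worker count, job count, and names are hypothetical.

```c
#include <pthread.h>
#include <stdatomic.h>

#define WORKERS 4
#define JOBS 1000

/* Each worker starts with a contiguous range of job indices. Owners and
   thieves both claim indices with an atomic fetch-add on `next`, so every
   index below `end` is handed out exactly once, without entering the kernel. */
typedef struct {
    atomic_int next;
    int end;
} JobRange;

static JobRange ranges[WORKERS];
static atomic_int run_count[JOBS];  /* how many times each job has run */

static void run_job(int index) {
    atomic_fetch_add(&run_count[index], 1);  /* stand-in for real work */
}

/* Claims one job from a range; returns -1 once that range is exhausted. */
static int claim(JobRange *range) {
    int index = atomic_fetch_add(&range->next, 1);
    return index < range->end ? index : -1;
}

static void *worker(void *arg) {
    int self = (int)(long)arg;
    int job;
    while ((job = claim(&ranges[self])) >= 0) {  /* drain own range first */
        run_job(job);
    }
    for (int k = 1; k < WORKERS; k++) {           /* then steal from others */
        JobRange *victim = &ranges[(self + k) % WORKERS];
        while ((job = claim(victim)) >= 0) {
            run_job(job);
        }
    }
    return NULL;
}

void run_job_pool(void) {
    pthread_t threads[WORKERS];
    int launched[WORKERS] = {0};
    int per_worker = JOBS / WORKERS;
    for (int w = 0; w < WORKERS; w++) {
        atomic_store(&ranges[w].next, w * per_worker);
        ranges[w].end = (w == WORKERS - 1) ? JOBS : (w + 1) * per_worker;
    }
    for (int j = 0; j < JOBS; j++) {
        atomic_store(&run_count[j], 0);
    }
    for (long w = 0; w < WORKERS; w++) {
        launched[w] = pthread_create(&threads[w], NULL, worker, (void *)w) == 0;
    }
    for (int w = 0; w < WORKERS; w++) {
        if (launched[w]) {
            pthread_join(threads[w], NULL);
        }
    }
}

/* Returns 1 if every job ran exactly once. */
int all_jobs_ran_once(void) {
    for (int j = 0; j < JOBS; j++) {
        if (atomic_load(&run_count[j]) != 1) {
            return 0;
        }
    }
    return 1;
}
```

Because idle workers steal from every other range, the pool still finishes all jobs even if one worker falls behind or fails to launch.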

Avoid signaling and waiting

When you reuse existing jobs and threads instead of creating new ones, for example by reusing a thread or task pointer, the work runs on an already active thread on an active core. This also reduces job scheduling overhead.

Also, wake worker threads only when needed, and only when enough work is ready to justify waking a thread to run it.
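A sketch of waking a worker only when enough work is ready, using a hypothetical WorkGate built on a pthread mutex and condition variable. gate_submit adds jobs but signals only once the pending count reaches a threshold; gate_take is the worker side, which sleeps until that happens. The threshold value and all names are assumptions, and a real engine would also need a way to flush leftover jobs at the end of a frame, which this sketch omits.

```c
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical pending-work gate: a sleeping worker is woken only when at
   least `threshold` jobs are waiting. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t has_work;
    int pending;
    int threshold;
    int wakeups;  /* counts signals sent, for illustration */
} WorkGate;

void gate_init(WorkGate *g, int threshold) {
    pthread_mutex_init(&g->lock, NULL);
    pthread_cond_init(&g->has_work, NULL);
    g->pending = 0;
    g->threshold = threshold;
    g->wakeups = 0;
}

/* Adds jobs; signals only once enough work has accumulated.
   Returns true if a wake-up was sent. */
bool gate_submit(WorkGate *g, int jobs) {
    pthread_mutex_lock(&g->lock);
    g->pending += jobs;
    bool wake = g->pending >= g->threshold;
    if (wake) {
        g->wakeups++;
        pthread_cond_signal(&g->has_work);
    }
    pthread_mutex_unlock(&g->lock);
    return wake;
}

/* Worker side: sleeps until the threshold is reached, then takes all
   pending jobs at once. */
int gate_take(WorkGate *g) {
    pthread_mutex_lock(&g->lock);
    while (g->pending < g->threshold) {
        pthread_cond_wait(&g->has_work, &g->lock);
    }
    int taken = g->pending;
    g->pending = 0;
    pthread_mutex_unlock(&g->lock);
    return taken;
}
```

With a threshold of 4, submitting 3 jobs sends no wake-up; the fourth job triggers a single signal, and the worker takes all 4 jobs in one go.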

CPU cycles

Next, you'll want to optimize CPU cycles so none are wasted at runtime.

To do this, first avoid anything that keeps threads from getting onto P-cores or pushes useful work onto E-cores, which run slower to save power and battery life.

One way to do this is to avoid busy-wait loops, which monopolize a CPU core. If the scheduler has to wait too long on one busy core, it may move the task to another core, even an E-core if that is the only one available.
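Busy-waiting usually looks like while (!ready) {}, which keeps a core fully occupied while doing nothing useful. The sketch below shows the blocking alternative with standard pthreads: the waiting thread sleeps in pthread_cond_wait, and the core goes back to the scheduler until the producer signals. The Handoff type and function names are hypothetical. On Apple platforms, a GCD dispatch semaphore (dispatch_semaphore_wait and dispatch_semaphore_signal) is another blocking option, not shown here.

```c
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical one-shot handoff between a producer and a waiting thread. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t cond;
    bool ready;
    int value;
} Handoff;

static void *producer(void *arg) {
    Handoff *h = arg;
    int result = 6 * 7;  /* stand-in for real work */
    pthread_mutex_lock(&h->lock);
    h->value = result;
    h->ready = true;
    pthread_cond_signal(&h->cond);  /* wake the waiter instead of letting it spin */
    pthread_mutex_unlock(&h->lock);
    return NULL;
}

/* Blocks (without spinning) until the producer delivers its result.
   Returns -1 if the producer thread could not be created. */
int wait_for_result(void) {
    Handoff h = {.ready = false, .value = 0};
    pthread_mutex_init(&h.lock, NULL);
    pthread_cond_init(&h.cond, NULL);
    pthread_t t;
    if (pthread_create(&t, NULL, producer, &h) != 0) {
        pthread_mutex_destroy(&h.lock);
        pthread_cond_destroy(&h.cond);
        return -1;
    }

    pthread_mutex_lock(&h.lock);
    while (!h.ready) {  /* loop guards against spurious wake-ups */
        pthread_cond_wait(&h.cond, &h.lock);
    }
    int value = h.value;
    pthread_mutex_unlock(&h.lock);

    pthread_join(t, NULL);
    pthread_mutex_destroy(&h.lock);
    pthread_cond_destroy(&h.cond);
    return value;
}
```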

The yield and setpri() scheduling calls control when a thread yields to other tasks and at what priority threads run.

Using yield on Apple platforms effectively tells a core to yield to any other thread running on the system. This loosely defined behavior can create performance bottlenecks which are difficult to track down at run time in Instruments.

yield behavior varies across platforms and operating systems and can cause long execution delays of up to 10ms. Avoid using yield or setpri() whenever possible, since doing so can temporarily leave a CPU core doing no useful work.

Also, avoid using sleep(0): on Apple platforms it has no meaning and is a no-op.

Scale thread counts

In general, you want to use the right number of threads for the number of CPU cores. Running too many threads on devices with low core counts can slow down performance.

Too many threads cause frequent context switches, which are expensive.

Too few threads cause the opposite problem: too few opportunities to run work in parallel on multiple cores.

Always query the CPU design at game launch time to see what kind of CPU environment you're running in and how many cores are available.

Your thread pool should always be scaled to the CPU core count, not to the total number of tasks.

Even if your game design requires a large number of worker threads for a given task, it will never run efficiently if there are too many threads and too few cores to run them on simultaneously.
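One way to express this rule is a small sizing helper. The policy below is an assumption for illustration, not Apple's guidance verbatim: the pool never exceeds the core count (minus any cores you choose to reserve, for example for a main or render thread), and the task count can only shrink the pool, never grow it.

```c
/* Hypothetical sizing rule: workers = cores - reserved, at least 1, and
   capped by the number of tasks. Task count never increases the pool. */
int worker_pool_size(int core_count, int reserved_cores, int task_count) {
    int workers = core_count - reserved_cores;
    if (workers < 1) {
        workers = 1;  /* always keep at least one worker */
    }
    if (task_count < workers) {
        workers = task_count > 0 ? task_count : 1;  /* no idle extra workers */
    }
    return workers;
}
```

For example, worker_pool_size(8, 2, 1000) gives 6 workers no matter how many tasks are queued.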

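You can query an iOS or macOS device using the UNIX sysctlbyname function. The hw.nperflevels parameter returns the number of performance levels (core types) the CPU has, such as two on a chip with both P-cores and E-cores. Parameters such as hw.perflevel0.logicalcpu and hw.perflevel1.logicalcpu then return the number of cores at each level, with perflevel0 being the highest-performance cores.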
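A sketch of querying the core layout at launch with sysctlbyname. On Apple platforms it reads hw.nperflevels and hw.perflevel0.logicalcpu; elsewhere, or if those calls fail, it falls back to sysconf(_SC_NPROCESSORS_ONLN) and treats all online cores as a single level, so the code also builds on non-Apple systems. The function name and the fallback behavior are assumptions for illustration.

```c
#include <unistd.h>
#if defined(__APPLE__)
#include <sys/types.h>
#include <sys/sysctl.h>
#endif

/* Returns the number of performance levels (core types) and writes the
   logical core count of the highest-performance level (perflevel0) to
   *top_level_cores. Falls back to one level covering all online cores
   when the Apple-specific sysctls are unavailable. */
int query_core_layout(int *top_level_cores) {
    int levels = 1;
    int cores = (int)sysconf(_SC_NPROCESSORS_ONLN);
#if defined(__APPLE__)
    int value = 0;
    size_t size = sizeof(value);
    if (sysctlbyname("hw.nperflevels", &value, &size, NULL, 0) == 0 && value > 0) {
        levels = value;
        size = sizeof(value);
        if (sysctlbyname("hw.perflevel0.logicalcpu", &value, &size, NULL, 0) == 0 &&
            value > 0) {
            cores = value;
        }
    }
#endif
    if (cores < 1) {
        cores = 1;  /* sysconf can return -1 on error */
    }
    *top_level_cores = cores;
    return levels;
}
```

The resulting counts can then be used to size a worker pool once, at game launch.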

Use Instruments

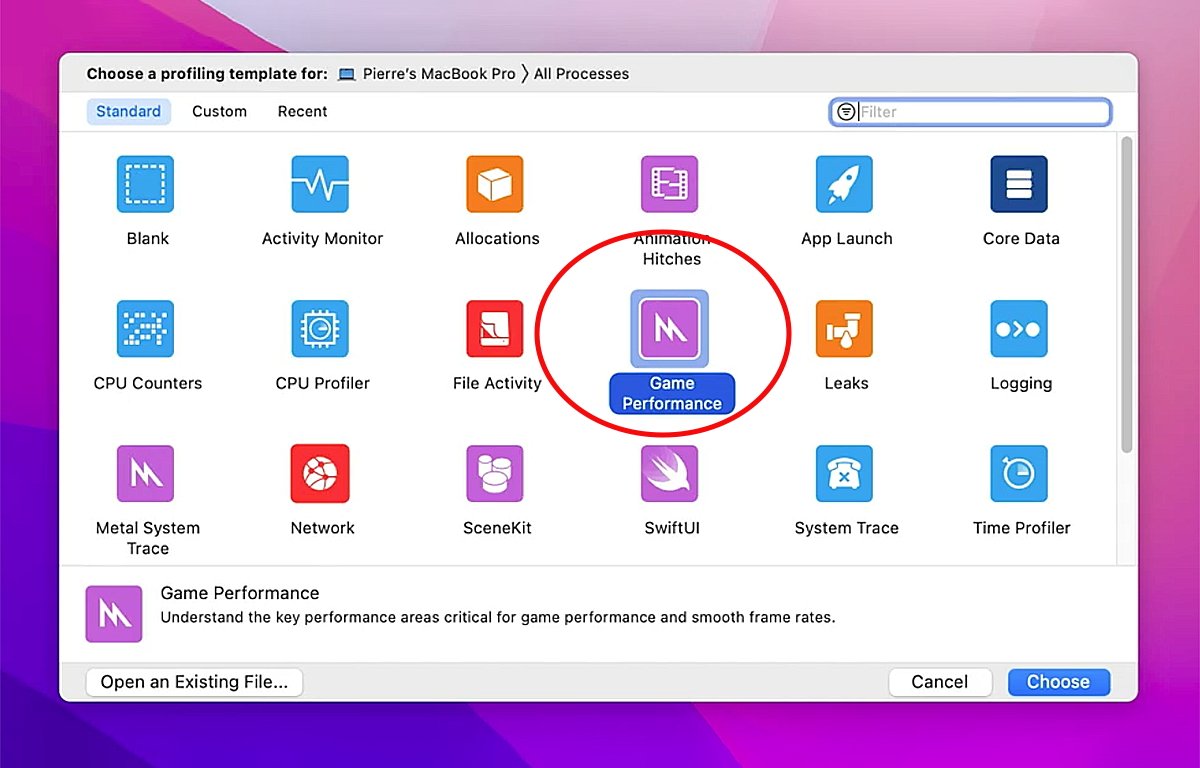

In Apple's Instruments app, there is a Game Performance template that you can use to see and measure game performance at runtime.

There is also a Thread State Trace (TST) feature in Instruments, which traces thread execution and wait states. You can use TST to track down which threads go idle and for how long.

Summary

Game optimization is a very complex topic and we've barely touched on a few techniques you can use to maximize app performance. There is much more to learn - be prepared to spend several days mastering the topic.

In many cases, you'll learn best from trial and error by using Instruments to track how your code is behaving and modify it where any performance bottlenecks appear.

Overall, the key points to keep in mind for game job scheduling on multi-core Apple systems are:

- Keep the overall workload as small as possible

- Group tiny tasks together so they run in a single thread

- Reduce thread overhead, scheduling, and synchronization as much as possible

- Avoid core idle/wake cycles

- Avoid thread context switches

- Use job pooling

- Only wake threads when needed

- Avoid using sleep(0) and yield when possible

- Use semaphores for thread signaling

- Scale thread counts to CPU core counts

- Use Instruments

Apple also has a WWDC video entitled "Tune CPU job scheduling for Apple silicon games," which discusses most of the topics above, and much more.

By paying attention to the scheduling specifics of your game code, you can wring as much performance as possible out of your Apple Silicon games.

Chip Loder
