A pair of patents awarded to Apple on Tuesday illustrates research running the gamut of mobile tech, with one invention detailing an advanced mapping system with customizable and shareable image data, and another covering ways to convert audio data into haptic vibrations for the hearing impaired.

On the personal transportation front, Apple's U.S. Patent No. 9,080,877 for "Customizing destination images while reaching towards a desired task," as published by the U.S. Patent and Trademark Office, hints at a future Maps platform capable of sharing personalized routing information with images, video, audio and more.

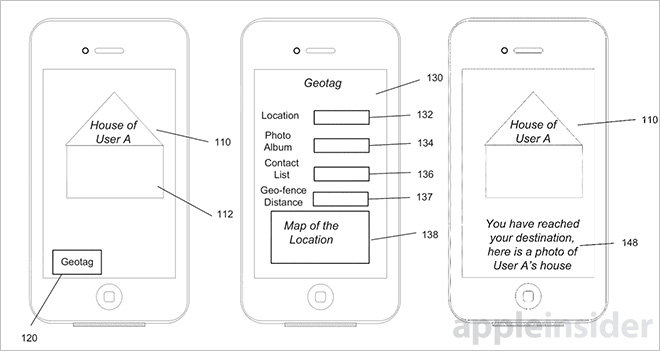

Apple argues that current turn-by-turn navigation systems lack tools that help users easily recognize a destination, especially first-time visitors, because imagery provided by third-party mapping services might be inadequate. For example, a navigation system might cause undue confusion by displaying outdated street-level imagery of a destination, by offering only a top-down bird's-eye view or by providing a UI composed solely of vector maps.

Apple proposes a means for one user to associate pictures, video and audio with a specific location, then send a media file through the cloud to another user navigating to that destination. When the second user enters a geofenced area surrounding the destination, the shared images are pushed to their device and automatically displayed in a mapping app. In some cases, the mapping app provides a 360-degree view of the user's immediate surroundings.

The document outlines various user interface schemes for associating media assets captured by a user's iPhone with real-world locations, including automatically assigning GPS coordinates, manually entering text tags, selecting point-of-interest information from a central database and more. When sharing, users can specify when, how and where the data is displayed on the recipient's device.
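The patent summary does not describe how the geofence check is implemented. As a rough illustration only, the trigger could be a great-circle distance test between the navigating user's position and the coordinates attached to each shared asset. The `SharedMedia` type, its fields and the `media_to_display` helper below are hypothetical names chosen for this sketch, not Apple's API.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, in meters


@dataclass
class SharedMedia:
    """A media asset a sender has pinned to a destination (hypothetical type)."""
    uri: str
    lat: float
    lon: float
    radius_m: float  # geofence radius the sender chose around the destination


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp guards against rounding pushing `a` just above 1 for antipodal points.
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))


def media_to_display(items: list[SharedMedia], lat: float, lon: float) -> list[SharedMedia]:
    """Return the assets whose geofence contains the navigating user's position."""
    return [m for m in items if haversine_m(lat, lon, m.lat, m.lon) <= m.radius_m]


# Usage: a photo pinned to a storefront with a 150 m geofence (illustrative values).
photo = SharedMedia("storefront.jpg", 37.3349, -122.0090, 150.0)
print([m.uri for m in media_to_display([photo], 37.3355, -122.0090)])  # ['storefront.jpg']
```

A real mapping app would more likely register the region with the platform's location services than poll distances itself; the explicit check simply makes the "inside the geofence" condition visible.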

Apple's mapping patent was first filed in February 2013 and credits Swapnil R. Dave, Anthony L. Larson and Devrim Varoglu as its inventors.

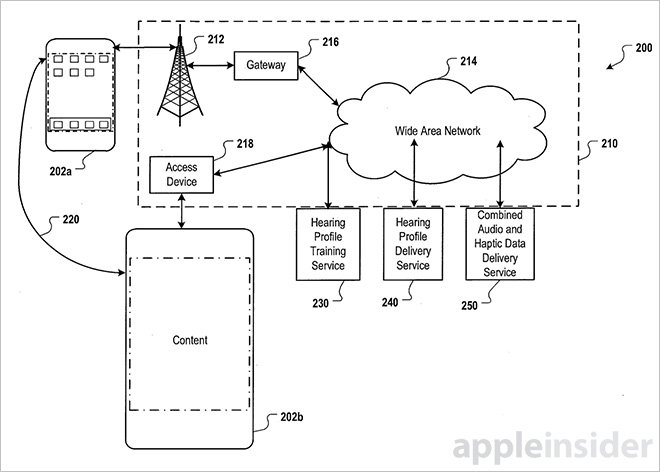

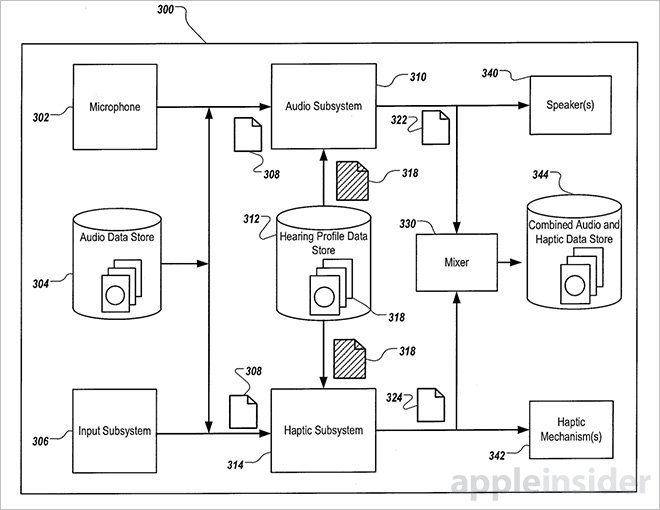

A second granted invention, U.S. Patent No. 9,083,821, aptly titled "Converting audio to haptic feedback in an electronic device," describes a method by which a computing device translates audio data, or a portion of that incoming signal, into vibratory feedback.

Specifically, Apple's invention splits an audio signal into high and low frequencies, converts one portion of the range into haptic data, shifts a second portion to a different frequency range and plays both back to the user. This particular embodiment is aimed at users whose hearing impairment leaves them partially deaf to select frequency ranges. For example, an elderly user unable to hear extreme frequencies might set a hearing profile that automatically converts high tones into haptic vibrations and shifts low tones up into an audible midrange, so they can still enjoy a dynamic musical recording.
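To make the hearing-profile idea concrete, here is a minimal sketch that routes individual tones rather than a raw waveform, which is a deliberate simplification. It assumes a profile is just an audible band in hertz, and it assumes low tones are raised by whole octaves (doubling the frequency keeps the same musical note); the patent as described here only says a portion is shifted "to a different frequency range." `HearingProfile` and `route_tone` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class HearingProfile:
    """Hypothetical per-user profile: the band (in Hz) the listener can still hear."""
    low_hz: float
    high_hz: float


def route_tone(freq_hz: float, profile: HearingProfile) -> tuple[str, float]:
    """Return ("audio", playback_hz) or ("haptic", source_hz) for one tone."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    if freq_hz > profile.high_hz:
        # Too high to hear: send it to the vibration motor instead.
        return ("haptic", freq_hz)
    shifted = freq_hz
    while shifted < profile.low_hz:
        # Too low to hear: raise by whole octaves so the note name is preserved.
        shifted *= 2
    if shifted > profile.high_hz:
        # The audible band is narrower than an octave; fall back to haptics.
        return ("haptic", freq_hz)
    return ("audio", shifted)


profile = HearingProfile(low_hz=250.0, high_hz=4000.0)
for tone in (100.0, 1000.0, 8000.0):
    print(tone, route_tone(tone, profile))
# 100.0 ('audio', 400.0)
# 1000.0 ('audio', 1000.0)
# 8000.0 ('haptic', 8000.0)
```

A production system would first separate the bands of a continuous signal with filters before applying rules like these; the sketch only shows the per-band decision a hearing profile might encode.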

Other embodiments allow for real-time processing of audio captured by a device's microphone. In such cases, a user sets listening preferences that convert certain frequencies, for example those associated with movie sound effects, into a vibration pattern. Apple notes that this augmented listening experience also applies to users without hearing impairments, while those with impairments might benefit from shifting dialogue down into an audible range.

Finally, an interesting application assigns unique haptic patterns to real-world events detected by a mobile device, such as fire alarms, oncoming traffic, car horns, screams, phone rings and more. The device scans ambient noise for distinguishable events, compares the audio against a sound database and outputs haptic feedback based on the results. Such accessibility aids could be lifesaving for some users.
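The "compare against a sound database" step could look something like the nearest-neighbor lookup below. This is a sketch under loud assumptions: the three-number feature vectors stand in for whatever audio fingerprint a real system would compute, cosine similarity with a fixed threshold is a technique swapped in for illustration, and `SOUND_DB`, `match_event` and the pulse timings are all hypothetical.

```python
import math

# Hypothetical sound database: event -> (reference feature vector, haptic pattern).
# Feature values and on/off pulse timings (ms) are purely illustrative.
SOUND_DB = {
    "fire_alarm": ([0.9, 0.1, 0.8], [200, 100, 200, 100, 200]),
    "car_horn": ([0.2, 0.9, 0.3], [600, 200, 600]),
    "phone_ring": ([0.5, 0.5, 0.1], [100, 100, 100]),
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_event(features, db=SOUND_DB, threshold=0.95):
    """Return (event, haptic_pattern) for the closest match, or None if nothing is close enough."""
    best_name, best_score = None, -1.0
    for name, (reference, _pattern) in db.items():
        score = cosine_similarity(features, reference)
        if score > best_score:
            best_name, best_score = name, score
    if best_name is None or best_score < threshold:
        return None  # unknown sound: stay silent rather than buzz on a guess
    return best_name, db[best_name][1]


print(match_event([0.85, 0.15, 0.75]))  # ('fire_alarm', [200, 100, 200, 100, 200])
print(match_event([0.0, 0.0, 0.0]))     # None
```

The threshold trades missed alerts against false alarms; for safety-critical sounds such as fire alarms, a real design would likely tune it per event rather than use one global value.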

Other embodiments include systems for handling musical instruments and recorded audio.

Apple's audio-to-haptic conversion patent was first filed in September 2011 and credits Gregory F. Hughes as its inventor.

Mikey Campbell


4 Comments

Apple Maps needs to run on Windows, and once a route on the desktop is set through your favorite POI, it should provide a "send" feature to push it from the desktop to the iPhone. This way, you can just get in the car and follow that route all along. Of course, Maps would suggest an alternate route in case of traffic or an accident. But once past that detour, you should be back on the original "sent" route.

The haptic vibration use discussed in the patent makes it clearer why the haptic engine hardware would be included in the iPhone/iPad/iPod touch(?) devices. IMHO, the firm press did not replace or extend the long-press gesture enough to warrant including the haptic engine hardware in the iPhone just to display context menus.

Helping the hearing impaired is a great use case that embraces and extends Apple's accessibility support. Another use case would be supporting the visually impaired by translating text into braille-like vibrations.

SOS could make a MAJOR comeback on the Apple Watch and iPhone!

But I thought Apple didn't innovate.

They don't. All those features are already available on other platforms.