Apple patents hover-sensing multitouch display

After the release of 3D Touch force-sensing input on iPhone 6s, Apple continues its push for the perfect input technology, most recently winning a patent that uses inline proximity sensors to detect non-contact hover gestures.

Granted by the U.S. Patent and Trademark Office on Tuesday, Apple's U.S. Patent No. 9,250,734 for "Proximity and multi-touch sensor detection and demodulation" details methods by which photodiodes, or other proximity sensing hardware, work in tandem with traditional multitouch displays to essentially extend the user interaction area beyond the screen. In some ways the invention is similar in scope to 3D Touch, but it measures input in the opposite direction along the z-axis relative to an iPhone's screen.

Non-contact user interfaces have long been studied as a means of user input, though recent work in the area has concentrated on long-range implementations that rely on cameras and other specialized optical systems. The 3D motion capture technology Apple acquired with its 2013 purchase of PrimeSense, an Israeli firm that worked on first-generation Xbox Kinect hardware, is a good example of a modern large-scale UI deployment.

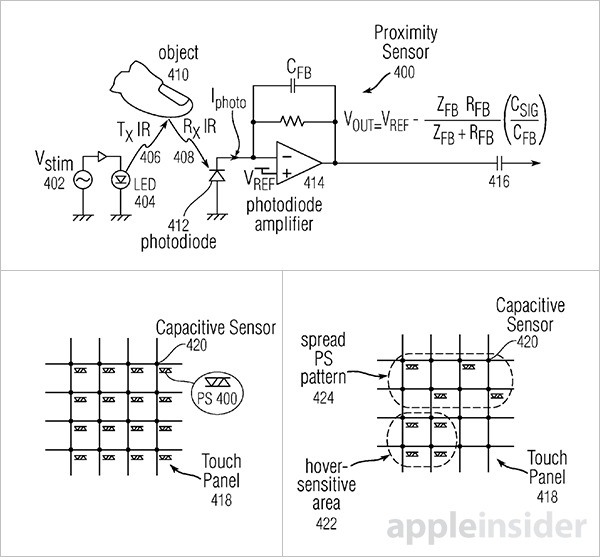

With today's patent, Apple proposes a more intimate solution optimized for use in iPhone, MacBook and other portable devices. Like current iOS devices, the invention includes in part a capacitive sensing element disposed throughout an LCD display with multiple piggybacked proximity sensors. This hybrid solution provides a more complete "image" of touch inputs by delivering hover gesture detection.

The document suggests use of infrared LEDs and photodiodes, much like the infrared proximity modules iPhone uses for head detection. Light generated by the LED bounces off a user's finger and is captured by the photodiode, which changes current output as a function of received light.
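The relationship between reflected light and photodiode output can be illustrated with a toy model. This is a minimal sketch, not the method claimed in the patent: the inverse-square falloff, the function names and every constant below are assumptions chosen only to show current rising as a finger approaches.

```python
def photodiode_current(distance_mm, led_power=1.0, reflectivity=0.5,
                       responsivity=0.6, dark_current=0.01):
    """Toy model of photodiode output (arbitrary units).

    Assumption: light reflected off a finger falls off with the square of
    its distance, and the photodiode adds a small constant dark current.
    """
    if distance_mm <= 0:
        raise ValueError("distance_mm must be positive")
    received_light = led_power * reflectivity / (distance_mm ** 2)
    return dark_current + responsivity * received_light


def is_hovering(current, threshold=0.02):
    """Report a hover when the current exceeds a hypothetical threshold."""
    return current > threshold


# A finger at 5 mm reflects more light than one at 10 mm.
near = photodiode_current(5)
far = photodiode_current(10)
print(near > far, is_hovering(near), is_hovering(far))
```

In a real sensor the threshold would have to account for ambient light and surface reflectivity, which this sketch ignores.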

With multiple sensor arrays, installed on a per-pixel basis, in groups or in rows, the system can detect a finger, palm or other object hovering just above a display surface. Further, these photodiodes would be connected to the same analog channels as nearby physical touch sensors, thereby saving space and power.

With detected motion translated to a GUI, users can "push" virtual buttons, trigger functions without touching a display, toggle power to certain hardware components and more. With multiple photodiodes installed, Apple would also be able to do away with the IR proximity sensor currently located next to iPhone's ear speaker.
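How combined touch and proximity readings might map to GUI events can be sketched roughly as follows. The `InputState` type, the `classify` and `gui_response` functions, the normalized signal ranges and the thresholds are all hypothetical; the patent does not specify this logic.

```python
from enum import Enum


class InputState(Enum):
    NONE = 0
    HOVER = 1
    TOUCH = 2


def classify(touch_signal, proximity_signal,
             touch_threshold=0.5, hover_threshold=0.2):
    """Classify one sensor location from normalized readings (0.0 to 1.0).

    Assumption: a capacitive touch reading takes priority over a
    proximity reading taken at the same location.
    """
    if touch_signal >= touch_threshold:
        return InputState.TOUCH
    if proximity_signal >= hover_threshold:
        return InputState.HOVER
    return InputState.NONE


def gui_response(state, button_name):
    """Map an input state to an illustrative GUI action string."""
    if state is InputState.TOUCH:
        return f"press {button_name}"
    if state is InputState.HOVER:
        return f"hover-push {button_name}"
    return "idle"


# A finger hovering over a "play" button, with no physical contact.
print(gui_response(classify(0.0, 0.3), "play"))
```

Giving touch priority is one possible design choice; a shipping system could just as easily treat hover and touch as separate, simultaneous channels.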

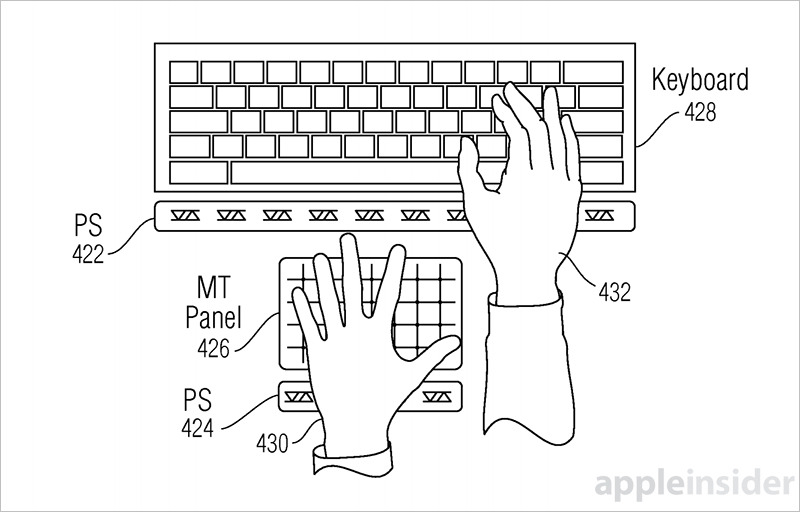

Apple outlines numerous alternative embodiments in its patent, including proximity sensor placement, ideal component types and sample GUI responses. A separate embodiment describes a MacBook implementation in which ancillary hover-sensing displays augment the typing and trackpad experience.

Enticing as it is, the technology is unlikely to make its way into a shipping device anytime soon. Apple is still looking for unique 3D Touch integrations for its first-party apps, while many third-party developers have yet to take advantage of the pressure-sensing iPhone 6s feature. Introducing yet another input method would muddy the waters.

Apple's hover-sensing display patent was first filed in March 2015 and credits Steven P. Hotelling and Christoph H. Krah as its inventors.

Mikey Campbell
