It's easy to forget that the empires built by Steve Jobs, Bill Gates, and their peers were raised on foundations laid more than a century ago. On the day Apple's co-founder would have turned 61, let's take a moment to appreciate the pioneers who came before.

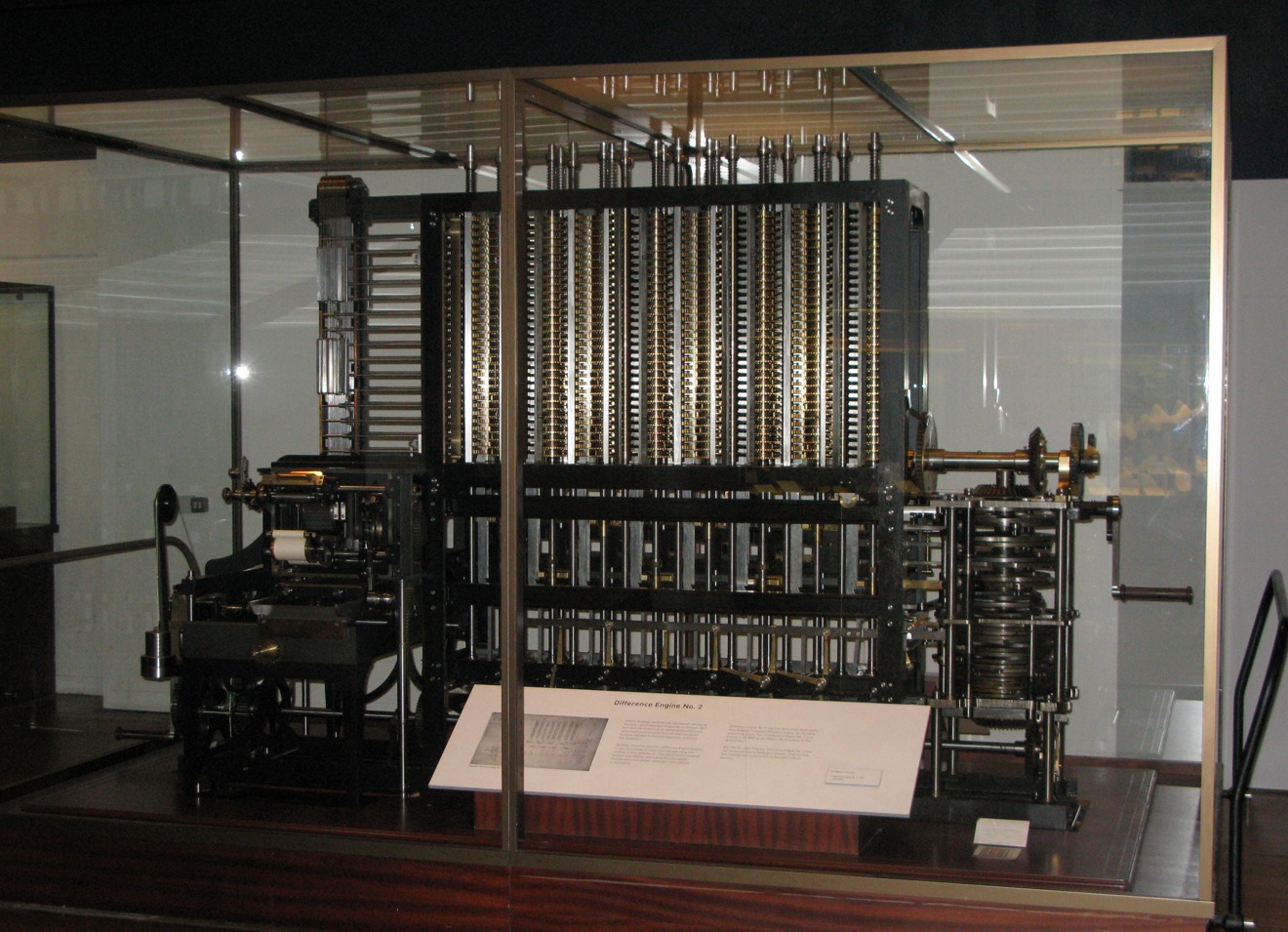

The modern concept of a programmable computer was created by British engineer Charles Babbage in the first half of the 1800s. Babbage first envisioned what he called a "difference engine," a concept he later refined into an "analytical engine" that could handle arithmetic, branching, and loops.

The analytical engine is the great-great-great-great-grandfather of the machines we think of today as computers. Everything from a Mac to an iPhone can trace its history to the analytical engine.

Around the same time that Babbage developed the analytical engine, the world found its first programmer: Ada Lovelace, who corresponded with Babbage and is widely credited with publishing the first algorithm intended to be carried out by a machine.

Mechanical computing plodded along for the next several decades, as engineers and mathematicians like Herman Hollerith (whose company would later merge with others to become IBM), Bertrand Russell, and Raytheon co-founder Vannevar Bush developed many foundational principles of modern computer science, until things began to get interesting in the 1930s.

Bill Hewlett and David Packard helped create Silicon Valley and stoke the imagination of a young Steve Jobs.

The mid-to-late 1930s saw the partnership between Bill Hewlett and David Packard (through which a young Steve Jobs would later find his calling) bear fruit, along with the nearly simultaneous invention of the relay-based computer by George Stibitz at Bell Labs and German engineer Konrad Zuse.

World War II brought the next breakthroughs, with the later-ostracized Alan Turing leading the development of the Bombe to break German Enigma machine codes. This led more-or-less directly to the development of Colossus, also by British intelligence services, which is widely credited as the first programmable electronic digital computer.

Computers leapt further into the digital age with ENIAC at the University of Pennsylvania. Developed by John Mauchly and J. Presper Eckert, ENIAC was essentially the first version of what we know as a computer today.

Hardware continued to evolve, but it took Douglas Engelbart's 1968 Mother of All Demos to show what a computer could really do.

During that 90-minute presentation, Engelbart showed off the components that would form the foundation for the next 50 years of computing. Application windows, the mouse, hypertext, word processors — all of it can be traced back to TMoAD.

From there, the path is well-trodden: Jobs, Gates, and their deputies — Steve Wozniak, Andy Hertzfeld, Paul Allen, Rod Holt, Bill Fernandez, and others — forged ahead and created the computing world that we know now.

This isn't to say that the contributions of Jobs and Gates should be discounted; they were the right men in the right place at the right time, ready to push humanity down the path toward personal computing. Without them, the world would undoubtedly be a different place today.

Unfortunately, the inescapable march of history means that those who came before are often forgotten. Jobs himself was fond of quoting programming pioneer Alan Kay, using Kay's insightful remark that "people who are really serious about software should make their own hardware" to frame Apple's ever-growing vertical integration.

In that spirit, let's take Jobs's birthday to remember those who made it possible for him to make his own dent in the universe.

Sam Oliver

22 Comments

> In that spirit, let's take Jobs's birthday to remember those who made it possible for him to make his own dent in the universe.

> On the day Apple's co-founder would have turned 61, let's take a moment to appreciate the pioneers who came before.

Why? It's HIS birthday we're celebrating, not anyone else's. It's about Steve qua Steve.

We can save the pat on the back for everyone else, and those who came before, when it's APPLE'S birthday.

Thanks for a very good framing article. You're right that those early pioneers are often forgotten and their ground-floor contributions to today's computing devices not nearly as well known and appreciated as they should be.

Why does an Apple fan site go to such lengths to minimize the significance of Apple and the work they do, but will promote rivals like Google, Microsoft, and Samsung as if they can do no wrong, rarely pointing out their weaknesses? There must have been a change in ownership or management over the last few years because this is not the AppleInsider page I signed up for. I'm sure this will be deleted and my profile blocked, but at this point I don't care.

Why not write an article about how most successful tech companies today exist because of Steve and Apple's vision, including Google, Microsoft, Samsung, etc., as they have taken Apple's vision and tech and in many cases gained access by being a non-competing partner to learn before they became a competitor?

Very nice summary! It does glide over the theoretical foundations of computing a bit. Alan Turing didn't just make a useful machine that sped up breaking the Enigma machine. He also laid the foundational theory of the programmable finite-state automaton that became known as a 'Turing Machine.' I'd also note the contributions of Alonzo Church, Kurt Gödel, John von Neumann, Noam Chomsky, Andrey Markov and others.

You mention Doug Engelbart's famous demo, but Ivan Sutherland and David Evans's pioneering work in computer graphics was equally influential in the development of modern human-computer interaction.

The software that powers our modern interfaces wouldn't be possible without the likes of Edsger Dijkstra ("goto considered harmful"), Niklaus Wirth (Algol, Pascal), Donald Knuth ("The Art of Computer Programming", TeX and more) and many, many others.

This could go on all day -- we truly are standing on the shoulders of giants.