In what's considered the definitive overview of Apple's attempts to patent its multi-touch interface, a deluge of newly public filings shows Apple considering technology that hasn't yet been used in the iPhone or the Mac, including sensing the difference between body parts, explaining gestures through activities, and even responding differently to input from fingernails.

Many of the filings also credit the inventions to either John Elias or Wayne Westerman, the two founders of FingerWorks. The company was one of the pioneers of multi-touch input and was absorbed into Apple in early 2005 along with Elias and Westerman.

While some of these patents relate to the basic operation of Apple's multi-touch devices as users already know them, others reveal directions that Cupertino, Calif.-based Apple has never taken with its current touch hardware.

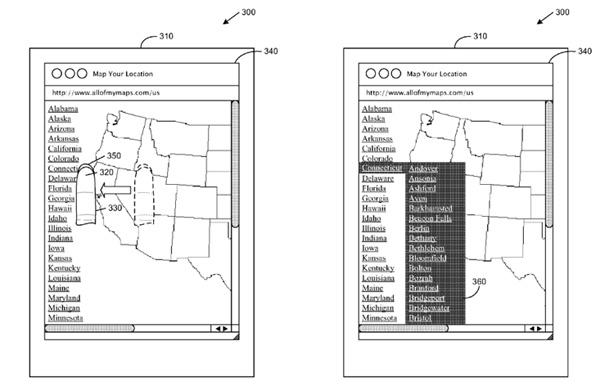

In some cases, this includes advances in what the touch panel itself can recognize. One filing for input discrimination would compare the pixel values in images captured by the touchscreen and intelligently recognize different parts of the body. A device could change its behavior depending not just on its proximity to a user's face (by imaging the ear) but on nearly any event: an iPhone could automatically wake itself on a finger clasp that takes the device out of a pocket, or ignore its owner's accidental palm presses.
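As a rough illustration of the idea, and not the method in Apple's filing, the sketch below treats the touch panel's output as a small grid of pixel values, groups neighboring active pixels into patches, and labels each patch by its size so that a palm can be ignored. The thresholds and the function names (`find_patches`, `classify_patch`, `accepted_touches`) are hypothetical, chosen only for this example.

```python
from collections import deque

# Hypothetical values for illustration; the filing gives no such numbers here.
TOUCH_THRESHOLD = 50   # minimum pixel value counted as contact
PALM_MIN_AREA = 6      # patches with at least this many pixels count as a palm


def find_patches(image):
    """Group adjacent above-threshold pixels into patches (4-connected flood fill)."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    patches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] < TOUCH_THRESHOLD:
                continue
            patch, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                patch.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] >= TOUCH_THRESHOLD):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            patches.append(patch)
    return patches


def classify_patch(patch):
    """Label a patch by contact area alone (a deliberately crude assumption)."""
    return "palm" if len(patch) >= PALM_MIN_AREA else "finger"


def accepted_touches(image):
    """Return only the patches classified as fingers, ignoring palm presses."""
    return [p for p in find_patches(image) if classify_patch(p) == "finger"]
```

A real system would presumably weigh more than area, such as patch shape or signal strength, but the same structure applies: segment the image first, then decide how to treat each patch.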

A further approach, described as a multi-event system, would also let Apple add context-sensitive menus, guides, and other information by detecting whether input comes from skin or an inanimate source. A user could tap items with the flesh of their finger for normal input but flip over to their fingernail and apply pressure for a separate menu, according to Apple.
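The behavior described above amounts to routing one tap to different actions depending on what touched the screen. The following is a minimal sketch under stated assumptions: the `Touch` fields, the `PRESS_THRESHOLD` value, and the `dispatch` function are all hypothetical and are not taken from Apple's filing.

```python
from dataclasses import dataclass

# Hypothetical cutoff: how hard a non-skin contact must press to count as input.
PRESS_THRESHOLD = 0.3


@dataclass
class Touch:
    target: str          # name of the on-screen item under the contact
    skin_detected: bool  # assumed sensor flag: True for flesh, False for a nail
    pressure: float      # assumed normalized pressure from 0.0 to 1.0


def dispatch(touch):
    """Route a touch to a normal selection or a separate context menu."""
    if touch.skin_detected:
        return f"select:{touch.target}"
    if touch.pressure >= PRESS_THRESHOLD:
        return f"menu:{touch.target}"
    # A light non-skin contact is treated as accidental in this sketch.
    return "ignored"
```

Under these assumptions, `dispatch(Touch("photo", True, 0.1))` selects the photo, while the same tap with `skin_detected=False` and a pressure of 0.5 opens its menu instead.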

Opening a menu using a fingernail.

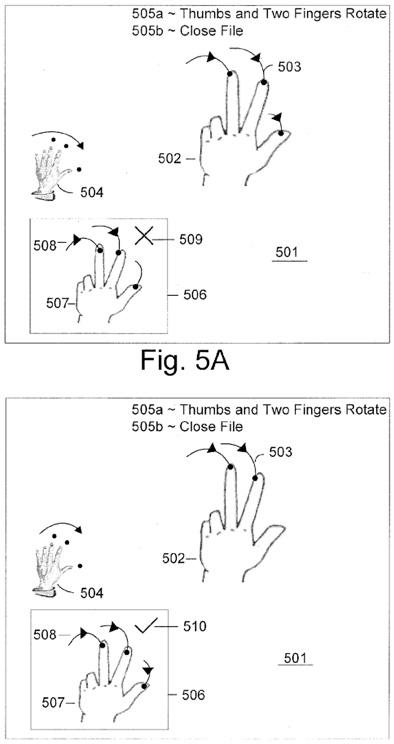

The electronics firm has also guarded against the possibility that multi-touch gestures may become too complex to be immediately intuitive. Instead of just providing video demos, as users see today for multi-touch trackpads on some MacBooks, a gesture learning system could guide the user through mimicking pinches, rotations, and swipes to understand how they work. Aids could include color trails that follow the fingers or the outline of a hand that performs the correct version of a gesture at the same time.

As in a test, the system could actively provide audiovisual or text feedback until the user performs the action correctly.

The patent filings reveal a more complex vision of multi-touch in Apple products than first thought and show that even the late stages of iPhone development produced inventions that never reached the finished product. However, it's unclear whether this is simply a snapshot of Apple's discovery process in January of last year or whether any of the described technology is ultimately part of a roadmap for future computers, media players, or phones.

All of Apple's directly touch-related patent filings revealed today are available below.

Projection scan multi-touch sensor array

Multi-Touch Input Discrimination

Error compensation for multi-touch surfaces

Double-sided touch-sensitive panel with shield and drive combined layer

Periodic sensor panel baseline adjustment

Double-sided touch sensitive panel and flex circuit bonding

Advanced frequency calibration

Master/slave mode for sensor processing devices

Full scale calibration measurement for multi-touch surfaces

Minimizing mismatch during compensation

Multi-touch surface stackup arrangement

Proximity and multi-touch sensor detection and demodulation

Noise detection in multi-touch sensors

Far-field input identification

Simultaneous sensing arrangement

Peripheral pixel noise reduction

Irregular input identification

Noise reduction within an electronic device using automatic frequency modulation

Aidan Malley

17 Comments

http://digg.com/apple/New_Apple_touc...ngernail_input

PLEAAASSEEE. I love this phone but pleaasssee give us copy and paste.

PLEAAASSEEE. I love this phone but pleaasssee give us copy and paste.

Cut, copy, and paste.

I firmly believe cut-copy-paste is coming, even though it doesn't seem ready to me right now. Apple has established the equivalent of right-button context menus on the iPhone: a touch-and-hold. That's how you save an image from the iPhone's browser, for instance.

I bet the same mechanism will be used for the clipboard (and maybe Undo/Redo too). Hopefully sooner rather than later. We'll have a ton of apps soon.... so give us a quick way to share data between them on the fly!

As for phones that can sense "body parts" other than fingertips... I think I'll pass

Cut, copy, and paste.

Can we get 'cut' on Mac OS X first?