Apple has publicly touted a significant new feature in OS X 10.9 Mavericks designed to maximize RAM, storage and CPU use while also boosting power efficiency: Compressed Memory.

More resources, fewer drawbacks

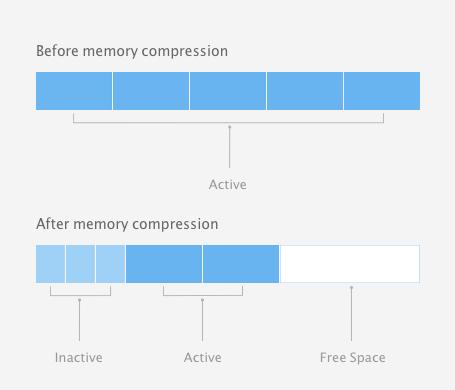

The new Compressed Memory feature sounds absolutely utopian: the operating system applies data compression to the least important content held in memory to free up more available RAM. And it does so with such efficiency that the CPU and storage devices work less, saving battery as well.

The new feature fits particularly well into Apple's design direction for its mobile products like the MacBook Air, which aims to deliver a long battery life via SSD storage (as opposed to a mechanical hard drive), but which also does not offer any aftermarket RAM expansion potential.

Apple's current crop of MacBook Air models now provides a minimum of 4GB RAM, with an at-purchase, $100 option to install 8GB. However, earlier models capable of running OS X Mavericks were sold into 2011 with only a paltry 2GB.

To get the most use out of such limited RAM resources, Apple will be using dynamic Memory Compression to automatically shrink the footprint of content that has been loaded into RAM but is not immediately needed.

OS X has always used Virtual Memory to serve a similar purpose; with Virtual Memory, the OS "pages" less important content to disk (the hard drive or SSD), then loads it back into active memory when needed. However, this requires significant CPU and disk overhead. The closer users come to running out of available memory, the more paging to disk swap storage the system has to do.

It turns out that the operating system can compress memory on the fly more efficiently than it can page that memory to disk, reducing the need for active paging under Virtual Memory. That lets the CPU and drive power down more often, reducing battery consumption.

Super speedy, super efficient compression

"The more memory your Mac has at its disposal, the faster it works," Apple notes on its OS X Mavericks advanced technology page.

"But when you have multiple apps running, your Mac uses more memory. With OS X Mavericks, Compressed Memory allows your Mac to free up memory space when you need it most. As your Mac approaches maximum memory capacity, OS X automatically compresses data from inactive apps, making more memory available."

Apple also notes in a Technology Overview brief that "Compressed Memory automatically compresses the least recently used items in memory, compacting them to about half their original size. When these items are needed again, they can be instantly uncompressed."
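The behavior Apple describes, compressing the least recently used items and uncompressing them on demand, can be sketched as a toy model. This is only an illustration under stated assumptions: the class name `ToyCompressedMemory`, the 4096-byte page size, and the use of Python's `zlib` as a stand-in compressor are all hypothetical choices, not Apple's implementation (which uses WKdm inside the kernel).

```python
import zlib
from collections import OrderedDict

PAGE_SIZE = 4096  # bytes; an assumed page size, for illustration only


class ToyCompressedMemory:
    """Keeps at most `max_active` pages uncompressed; older pages get compressed."""

    def __init__(self, max_active):
        self.max_active = max_active
        self.active = OrderedDict()  # page_id -> raw bytes, least recent first
        self.compressed = {}         # page_id -> zlib-compressed bytes

    def write(self, page_id, data):
        self.compressed.pop(page_id, None)
        self.active[page_id] = data
        self.active.move_to_end(page_id)  # mark as most recently used
        self._compress_least_recently_used()

    def read(self, page_id):
        if page_id in self.compressed:
            # Decompress on demand and promote back into the active set.
            self.active[page_id] = zlib.decompress(self.compressed.pop(page_id))
        self.active.move_to_end(page_id)
        data = self.active[page_id]
        self._compress_least_recently_used()
        return data

    def _compress_least_recently_used(self):
        # Compress the oldest pages until the active set fits its budget.
        while len(self.active) > self.max_active:
            page_id, data = self.active.popitem(last=False)
            self.compressed[page_id] = zlib.compress(data, 1)

    def footprint(self):
        """Total bytes held, counting compressed pages at their packed size."""
        return (sum(len(p) for p in self.active.values())
                + sum(len(p) for p in self.compressed.values()))
```

Writing four pages into a store with `max_active=2` leaves the two oldest pages compressed; reading one of them brings it back at full size and pushes another page into the compressed set. How much the footprint shrinks depends entirely on how compressible the page contents are.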

As a result, there's more free memory available to the system, which "improves total system bandwidth and responsiveness, allowing your Mac to handle large amounts of data more efficiently."

Apple is using WKdm compression, which is so efficient at packing data that the compression and decompression cycle is "faster than reading and writing to disk."

The new old technology of data compression

Memory and storage compression in general isn't new at all. In the 1980s, tools such as DiskDoubler allowed users to compress files on disk on the fly, as opposed to packing files into archives (which dates back to the beginning of computing). RAM Doubler did the same thing for memory, a technique that was essentially replaced by Virtual Memory in the late 90s.

Over time, the benefits of compressing files were largely outweighed by the overhead involved, particularly as storage grew cheaper and more plentiful and new techniques were built into the operating system. But the recent move toward mobile computing and the use of relatively expensive solid state storage (and often idle, but very fast CPU cores) have made compression popular again.

Beginning in OS X 10.6 Snow Leopard, Apple quietly added HFS+ Compression as a feature for saving disk space in system files. The benefits of this were limited by the fact that previous versions of OS X couldn't recognize these compressed files, and so the compression was not applied to files outside of the system.

Windows also uses file compression in NTFS, as does Linux's Btrfs, but in general these incur a performance penalty, making the primary benefit increased disk space at the cost of performance.

Compressing the contents of volatile system memory, rather than disk storage, has remained even more experimental. It is active by default in many virtualization products, such as VMware's ESX. And it's also been studied for use in general computing.

Under Linux, the Compcache project similarly uses the LZO algorithm to compress memory that would otherwise be expensively paged to disk. The benefits here too, however, were not always worth the overhead involved, or the additional complication introduced when doing things such as waking from hibernation.

Modern solutions to address new issues

Today, however, the combination of speedy, often idle CPU cores, large data sets, more expensive SSD storage and the efficiency requirements of mobile computing has made memory compression a strong answer to a variety of issues, provided the compression technologies used fit the kinds of tasks being performed.

Research by Matthew Simpson of Clemson University and Dr. Rajeev Barua and Surupa Biswas of the University of Maryland examined the use of various types of memory compression a decade ago, particularly in regard to embedded systems, where memory is likely to be more scarce.

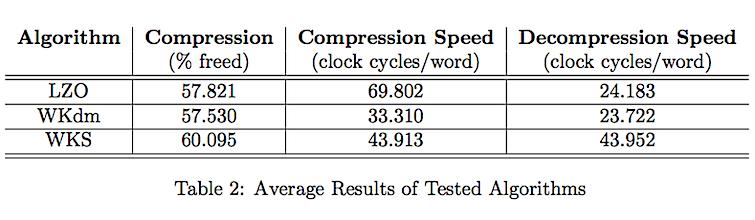

At issue then were the best compression techniques to use. The research noted that "dictionary-based algorithms tend to have slow compression speeds and fast decompression speeds while statistical algorithms tend to be equally fast during compression and decompression."

The WKdm compression Apple is now using (a hybrid of dictionary and statistical compression techniques) was found in their research to provide effective compression while also delivering the fastest, and therefore most efficient, compression and decompression speeds.
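To give a feel for the dictionary side of such a scheme, here is a heavily simplified sketch in the spirit of WKdm as it is commonly described: 32-bit words are compared against a small direct-mapped dictionary of recently seen words, and each word is classified as zero, an exact match, a partial match on its high bits, or a miss. The hash in `_slot`, the token tuples, and the bit costs in `estimate_bits` are assumptions made for illustration; this is not Apple's code and it skips the bit-packing a real implementation would do.

```python
import struct

DICT_SIZE = 16                  # small direct-mapped dictionary
LOW_BITS = 10                   # low bits stored verbatim on a partial match
LOW_MASK = (1 << LOW_BITS) - 1


def _slot(word):
    # Hypothetical hash: index the dictionary by the bits above the low 10.
    return (word >> LOW_BITS) % DICT_SIZE


def encode(page):
    """Classify each 32-bit word of `page` (length must be a multiple of 4)."""
    words = struct.unpack("<%dI" % (len(page) // 4), page)
    dictionary = [0] * DICT_SIZE
    tokens = []
    for word in words:
        slot = _slot(word)
        entry = dictionary[slot]
        if word == 0:
            tokens.append(("ZERO",))
        elif word == entry:
            tokens.append(("EXACT", slot))
        elif (word >> LOW_BITS) == (entry >> LOW_BITS):
            tokens.append(("PARTIAL", slot, word & LOW_MASK))
            dictionary[slot] = word
        else:
            tokens.append(("MISS", word))
            dictionary[slot] = word
    return tokens


def decode(tokens):
    """Rebuild the page by replaying the same dictionary updates."""
    dictionary = [0] * DICT_SIZE
    words = []
    for token in tokens:
        kind = token[0]
        if kind == "ZERO":
            word = 0
        elif kind == "EXACT":
            word = dictionary[token[1]]
        elif kind == "PARTIAL":
            slot, low = token[1], token[2]
            word = (dictionary[slot] & ~LOW_MASK) | low
            dictionary[slot] = word
        else:  # MISS carries the full word
            word = token[1]
            dictionary[_slot(word)] = word
        words.append(word)
    return struct.pack("<%dI" % len(words), *words)


def estimate_bits(tokens):
    """Rough size estimate: 2 tag bits plus an assumed payload per token kind."""
    payload = {"ZERO": 0, "EXACT": 4, "PARTIAL": 4 + LOW_BITS, "MISS": 32}
    return sum(2 + payload[t[0]] for t in tokens)
```

Pages full of zeros, repeated values, or pointers into the same region compress well under this kind of scheme, because most words cost only a few bits; pages of random data mostly produce misses and end up slightly larger than the original.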

Supercharged Virtual Memory

Apple's new Compressed Memory feature also works on top of Virtual Memory, making it even more efficient.

"If your Mac needs to swap files on disk [via Virtual Memory]," Apple explains, "compressed objects are stored in full-size segments, which improves read/write efficiency and reduces wear and tear on SSD and flash drives."

Apple states that Compressed Memory "reduces the size of items in memory that haven’t been used recently by more than 50 percent," adding that "Compressed Memory is incredibly fast, compressing or decompressing a page of memory in just a few millionths of a second."
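Readers curious about what per-page figures look like on their own hardware can measure a general-purpose compressor as a rough point of comparison. The sketch below times Python's `zlib` on a synthetic 4096-byte page; the page size, the sample contents, and the helper names `make_sample_page` and `measure` are assumptions for illustration, and `zlib` is not WKdm, so the numbers say nothing direct about Apple's claims.

```python
import time
import zlib

PAGE_SIZE = 4096  # bytes; assumed page size


def make_sample_page():
    # Synthetic, highly repetitive content padded with zeros to one page.
    record = b"name=example;value=0001;"
    body = record * (PAGE_SIZE // len(record))
    return body.ljust(PAGE_SIZE, b"\x00")


def measure(page, rounds=1000):
    """Return (compression ratio, compress microseconds, decompress microseconds)."""
    start = time.perf_counter()
    for _ in range(rounds):
        packed = zlib.compress(page, 1)  # level 1 favors speed over ratio
    compress_us = (time.perf_counter() - start) / rounds * 1e6

    start = time.perf_counter()
    for _ in range(rounds):
        restored = zlib.decompress(packed)
    decompress_us = (time.perf_counter() - start) / rounds * 1e6

    if restored != page:
        raise ValueError("round trip did not reproduce the original page")
    return len(packed) / len(page), compress_us, decompress_us


if __name__ == "__main__":
    ratio, c_us, d_us = measure(make_sample_page())
    print("ratio %.3f, compress %.1f us, decompress %.1f us" % (ratio, c_us, d_us))
```

Results vary widely with the machine and, above all, with the page contents, which is why a single headline ratio should be read as an average over typical workloads.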

Compressed Memory can also take advantage of parallel execution on multiple cores "unlike traditional virtual memory," therefore "achieving lightning-fast performance for both reclaiming unused memory and accessing seldom-used objects in memory."
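Because each page can be compressed independently of the others, the work spreads naturally across cores. A minimal sketch of that idea, using a thread pool and `zlib` as a stand-in compressor, might look like the following; the function names and the worker count are hypothetical, and whether threads actually yield a speedup for pages this small depends on the runtime and the hardware.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def _compress_one(page):
    # Each page is compressed on its own, so no state is shared between tasks.
    return zlib.compress(page, 1)


def compress_pages_parallel(pages, workers=4):
    """Compress a list of pages using a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so results line up with `pages`.
        return list(pool.map(_compress_one, pages))


def decompress_pages(packed_pages):
    """Restore pages one by one."""
    return [zlib.decompress(p) for p in packed_pages]
```

The key property is the independence of pages: no page needs another page's compressor state, which is what makes handing them to separate cores straightforward.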

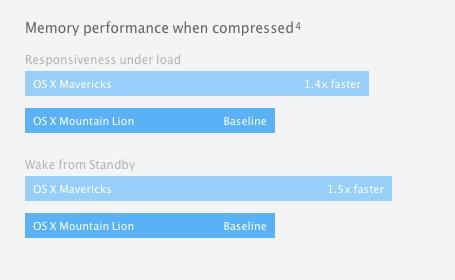

By improving the way Virtual Memory works, it's less necessary for the system to "waste time continually transferring data back and forth between memory and storage." As a result, Apple says Memory Compression improves both general responsiveness under load and wake-from-standby times, as depicted above.

The (4) footnote Apple references in the graphic notes that "testing conducted by Apple in May 2013 and June 2013 using production 1.8GHz Intel Core i5-based 13-inch MacBook Air systems with 256GB of flash storage, 4GB of RAM, and prerelease versions of OS X v10.9 and OS X v10.8.4. Performance will vary based on system configuration, application workload, and other factors."

Daniel Eran Dilger


31 Comments

That's a pretty neat feature indeed, I'm still hitting a wall on my MBP 17" late 2010 with 8GB Ram (maxed out). But it helps to have SSD, too bad Purge command no longer works with Mavericks.

This feature just reminded me of Quarterdeck's QEMM, which had a feature called MagnaRAM that did the exact same thing: it compressed memory to avoid physical paging. This idea is not new, but with the amount of waste all those XML structures have while in memory, the gains will be worth the CPU effort. Patents for these might be 20 or 30 years old.

That's a pretty neat feature indeed, I'm still hitting a wall on my MBP 17" late 2010 with 8GB Ram (maxed out). But it helps to have SSD, too bad Purge command no longer works with Mavericks.

Read and see if you qualify with 10.7.5+ to upgrade to 16GB RAM with the updated firmware: http://www.everymac.com/systems/apple/macbook_pro/macbook-pro-unibody-faq/macbook-pro-13-15-17-mid-2009-how-to-upgrade-ram-memory.html

The details are half-way down the article.

Looks like this is just going to compress suspended applications - note that other OS changes make apps suspend by default now when not in the foreground. When suspended for a while, rather than paging, it's going to compress the memory that is effectively idle, the idea being that compressed memory is faster to decompress than page out and page back in from disk, even an SSD disk.

Given the randomness of memory, the 50% compression stats must have some basis in testing a broad range of apps, but some apps will likely be incompressible and others more compressible. That's the nature of compression.

Nice concept. Will be interesting to see real life impact.

I can't pretend to understand the details of this so I have a question for those more informed than me.

If this reduces the number of page in/out to the disk, is it likely to impact the life of the hard disk or SSD?

If this is an idiotic question I apologise in advance for my ignorance.