Apple on Tuesday was awarded an interesting patent describing a graphical user interface that compensates for the inadvertent input errors users may make when interacting with a touchscreen while moving.

The U.S. Patent and Trademark Office granted Apple U.S. Patent No. 8,631,358 for a "Variable device graphical user interface," which outlines a system where user movement is compensated for via software adjustments.

While not a particularly in-depth patent, the invention looks to solve a common problem many iPhone users face when using the device on the move. With its relatively small screen and touch-only interface, Apple's smartphone can be difficult to use when a user is in motion.

For example, the bobbing motion caused by walking could lead to errant screen touches. Running or other activities may exaggerate the problem to a point where interacting with an iPhone is next to impossible.

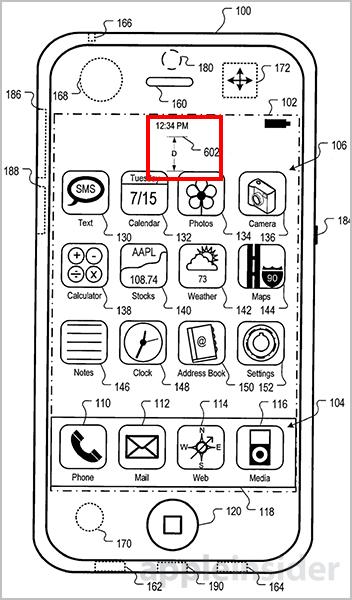

To compensate, one or more sensors can detect device motion or patterns of motion, which then trigger dynamic UI changes such as enlarging a virtual button's touch area or shifting visual assets around the screen. Apple cites accelerometers, gyroscopes and other on-board sensors as suitable, most of which are already built into current iPhone models.

Changes in metrics such as device acceleration and orientation can be used to interpret the motion of a device. More importantly, patterns can be detected and matched to a database of predetermined criteria. For example, if the device senses bobbing, it can be determined that the user is walking. An oscillating motion may denote running, while minute bounces could signal that a user is riding in a car.
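The patent does not publish its matching criteria, but the idea of matching a motion pattern against a table of predetermined criteria can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Apple's method: it assumes raw accelerometer samples in g, summarizes them by amplitude (the standard deviation of acceleration magnitude) and a zero-crossing frequency estimate, and checks the result against a hypothetical `MOTION_CRITERIA` table whose thresholds are invented for illustration.

```python
import math
from statistics import mean, pstdev

# Hypothetical criteria table: (label, min_amp, max_amp, min_hz, max_hz).
# Amplitude is the standard deviation of acceleration magnitude in g;
# frequency is in Hz. These thresholds are illustrative, not from the patent.
MOTION_CRITERIA = [
    ("vehicle", 0.01, 0.10, 3.0, 30.0),  # small, rapid bounces
    ("walking", 0.10, 0.40, 1.0, 2.5),   # moderate bobbing at step cadence
    ("running", 0.40, 3.00, 2.0, 4.0),   # large oscillation at a faster cadence
]


def dominant_frequency(signal, sample_rate_hz):
    """Estimate oscillation frequency from sign changes of the mean-removed signal."""
    avg = mean(signal)
    centered = [s - avg for s in signal]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0))
    duration_s = len(signal) / sample_rate_hz
    return crossings / (2 * duration_s)  # two zero crossings per cycle


def classify_motion(samples, sample_rate_hz):
    """Map (x, y, z) accelerometer samples in g to a motion label."""
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    amplitude = pstdev(magnitudes)
    if amplitude < 0.01:
        return "still"
    freq = dominant_frequency(magnitudes, sample_rate_hz)
    # First matching row of the criteria table wins.
    for label, min_amp, max_amp, min_hz, max_hz in MOTION_CRITERIA:
        if min_amp <= amplitude < max_amp and min_hz <= freq <= max_hz:
            return label
    return "unknown"
```

Fed four seconds of synthetic 50 Hz data, a 0.3 g vertical swing at 1.8 Hz classifies as walking, a 1 g swing at 2.8 Hz as running, and a 0.05 g swing at 8 Hz as riding in a vehicle. A real implementation would more likely use spectral analysis or a trained model than a hand-written table.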

In each of the above scenarios, the detected pattern of motion is mapped to one or more GUI adjustments. In some embodiments, the user interface changes depend on both the type and the magnitude of the motion. Depending on which criteria the motion meets, a corresponding response is applied to the GUI.
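Mapping a detected motion type to a set of GUI adjustments, scaled by the magnitude of the motion, might look like the sketch below. `GUIAdjustment`, `BASE_RESPONSES` and `adjustments_for` are hypothetical names, and the response values are invented, since the patent describes the mapping only in general terms. Each response is blended linearly from "no change" toward its full strength as the normalized magnitude approaches 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GUIAdjustment:
    scale: float          # multiplier for element and touch-area size
    stabilize: bool       # whether to counter-shift content against motion
    touch_slop_pt: float  # extra touch tolerance around targets, in points


# Hypothetical response table; values are illustrative only.
BASE_RESPONSES = {
    "still":   GUIAdjustment(scale=1.0, stabilize=False, touch_slop_pt=0.0),
    "vehicle": GUIAdjustment(scale=1.1, stabilize=True, touch_slop_pt=4.0),
    "walking": GUIAdjustment(scale=1.25, stabilize=True, touch_slop_pt=6.0),
    "running": GUIAdjustment(scale=1.5, stabilize=True, touch_slop_pt=10.0),
}


def adjustments_for(motion_type, magnitude, max_magnitude=1.0):
    """Scale the base response for motion_type by magnitude, clamped to [0, 1]."""
    base = BASE_RESPONSES.get(motion_type, BASE_RESPONSES["still"])
    t = max(0.0, min(magnitude / max_magnitude, 1.0))
    return GUIAdjustment(
        scale=1.0 + (base.scale - 1.0) * t,
        stabilize=base.stabilize and t > 0.0,
        touch_slop_pt=base.touch_slop_pt * t,
    )
```

Unknown motion types fall back to the "still" response, so an unrecognized pattern leaves the interface unchanged.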

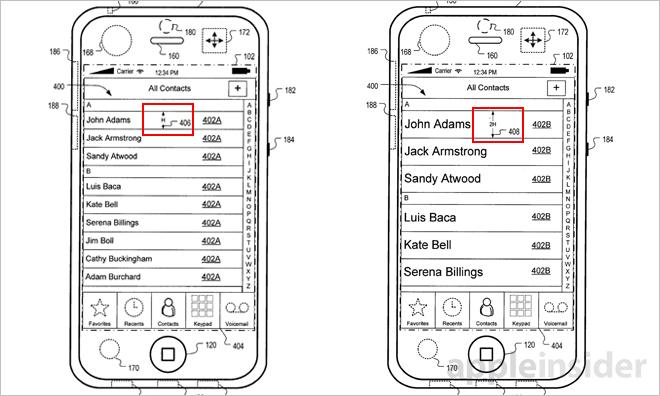

According to some implementations, the system resizes UI elements and their touch areas to compensate for a given motion. For example, the rows of a contact list may be enlarged by increasing the height of each entry. The touch area is resized along with it, giving the user a bigger target to hit while moving.
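Enlarging rows together with their touch areas can be illustrated with a simple vertical list layout and hit test. This is a minimal sketch assuming rows are stacked downward from y = 0 in points; `Row`, `layout_rows` and `hit_test` are illustrative names, not iOS APIs.

```python
from dataclasses import dataclass


@dataclass
class Row:
    label: str
    top: float     # y origin of the row, in points
    height: float  # row height, which is also its touch-area height


def layout_rows(labels, base_height, scale):
    """Stack rows vertically, enlarging each row (and its touch area) by scale."""
    rows, y = [], 0.0
    for label in labels:
        h = base_height * scale
        rows.append(Row(label, y, h))
        y += h
    return rows


def hit_test(rows, touch_y):
    """Return the label of the row whose touch area contains touch_y, or None."""
    for row in rows:
        if row.top <= touch_y < row.top + row.height:
            return row.label
    return None


contacts = ["Alice", "Bob", "Carol"]
normal = layout_rows(contacts, 44.0, 1.0)
moving = layout_rows(contacts, 44.0, 1.5)
```

With a 1.5x scale, a touch at y = 50 lands on the first row instead of the second, because each row now offers a 66-point target instead of a 44-point one.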

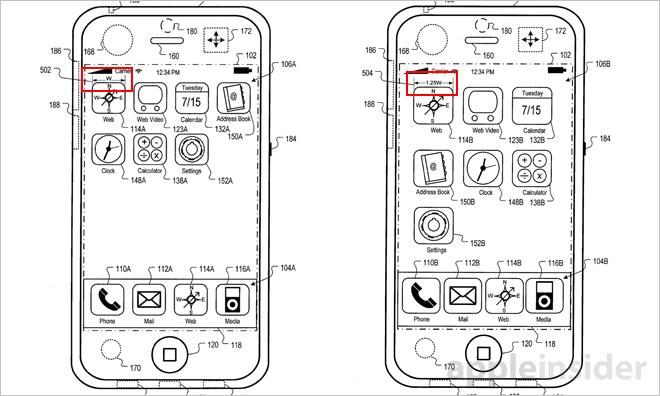

Another embodiment calls for graphical assets to be dynamically mapped to different positions on a screen in relation to device motion. In this case, a row of contacts may be shifted vertically or horizontally in a direction opposite to the detected motion, thereby simulating a stabilized display. Touch sensitivity can also be customized to help with accuracy.
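The counter-shift can be sketched as a content offset that tracks the negative of the device's displacement, clamped so content never drifts far off screen and smoothed to reduce jitter. The sketch assumes an upstream estimate of device displacement in points per frame (deriving that from raw sensor data is out of scope here); `stabilizing_offsets`, `max_offset_pt` and `smoothing` are hypothetical, and the function would be applied separately to the horizontal and vertical axes.

```python
def stabilizing_offsets(displacements_pt, max_offset_pt=20.0, smoothing=0.5):
    """Return per-frame content offsets that move opposite to device displacement.

    displacements_pt: assumed per-frame device displacement estimates, in points.
    smoothing: exponential smoothing factor in (0, 1]; 1.0 disables smoothing.
    """
    offsets, current = [], 0.0
    for d in displacements_pt:
        # Move opposite to the device, but never beyond +/- max_offset_pt.
        target = max(-max_offset_pt, min(-d, max_offset_pt))
        # Ease toward the target instead of jumping to it.
        current += smoothing * (target - current)
        offsets.append(current)
    return offsets
```

The clamp is a design choice: large jolts are only partly compensated, which keeps content on screen at the cost of imperfect stabilization.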

In yet another embodiment, the UI can be skewed to compensate for the angle at which the device is held. Further, a "fisheye" effect can be applied to certain elements, drawing focus to more important assets while shrinking others.
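One simple way to produce a fisheye-style list is to weight each row by its distance from a focus row and divide a fixed total height according to those weights. This is a generic degree-of-interest sketch, not the formula used in the patent; `fisheye_heights` and its `distortion` parameter are assumptions.

```python
def fisheye_heights(n_rows, focus_index, total_height, distortion=1.0):
    """Split total_height across rows so rows near focus_index grow and distant rows shrink.

    distortion = 0 gives equal heights; larger values exaggerate the effect.
    """
    weights = [1.0 / (1.0 + distortion * abs(i - focus_index)) for i in range(n_rows)]
    total_weight = sum(weights)
    return [total_height * w / total_weight for w in weights]
```

Because the heights are normalized, the list still fits the same screen area: emphasizing one row necessarily takes space from the others.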

Finally, the system is able to "learn" particular characteristics of device motion and how a user's touch accuracy relates to that movement. This data can be stored and later retrieved to predict where the user will touch during a particular pattern of motion. The GUI can be remapped based on these predictions.
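The learning step can be approximated by recording, for each motion pattern, how far touches land from the element the user meant to hit, then using the average miss to predict where future touches will land. The sketch assumes the intended target is known after the fact (for example, the control the user ends up activating); `TouchOffsetModel` and its methods are hypothetical.

```python
from collections import defaultdict


class TouchOffsetModel:
    """Learns the average miss (touch point minus intended target) per motion pattern."""

    def __init__(self):
        self._sum = defaultdict(lambda: [0.0, 0.0])  # summed (dx, dy) per pattern
        self._count = defaultdict(int)               # samples recorded per pattern

    def record(self, pattern, touch, intended):
        """Store one observed touch and the target it was meant for."""
        s = self._sum[pattern]
        s[0] += touch[0] - intended[0]
        s[1] += touch[1] - intended[1]
        self._count[pattern] += 1

    def predicted_offset(self, pattern):
        """Average (dx, dy) miss for this pattern, or (0, 0) with no history."""
        n = self._count[pattern]
        if n == 0:
            return (0.0, 0.0)
        s = self._sum[pattern]
        return (s[0] / n, s[1] / n)

    def predicted_touch(self, pattern, target):
        """Where a touch aimed at target is expected to land under this pattern."""
        dx, dy = self.predicted_offset(pattern)
        return (target[0] + dx, target[1] + dy)
```

A GUI could then center an element's touch area on `predicted_touch(...)` rather than on the element itself while that pattern of motion persists.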

As with most Apple patents, this invention may never be deployed in a consumer device. It is worth noting, however, that the company is looking to expand the iPhone's potential as an activity monitor, as evidenced by the iPhone 5s' M7 motion coprocessor.

Apple's variable device GUI patent was first filed in 2007 and credits John O. Louch as its inventor.

Mikey Campbell

Malcolm Owen

William Gallagher

Christine McKee

8 Comments

Sounds like stuff one would want on an "iBand" or "iWatch" to me.

Of course actual real-world use case scenarios would suggest otherwise: https://24.media.tumblr.com/tumblr_mbs77cDMT81qzdn5qo1_500.gif

Yeah, I’m sure that image is proof of anything whatsoever.

Oh man, I had this idea years ago, but I thought about it mostly to stabilize text when you're reading in a bouncing vehicle. I hope they do it though--it would be fun to see.

That's not a bad idea. Develop it and make a reader app.