Two new Apple patent applications published Thursday shed light on the company's research into bringing three-dimensional imaging to mobile devices, as well as enabling more immersive ways to interact with laptop computers.

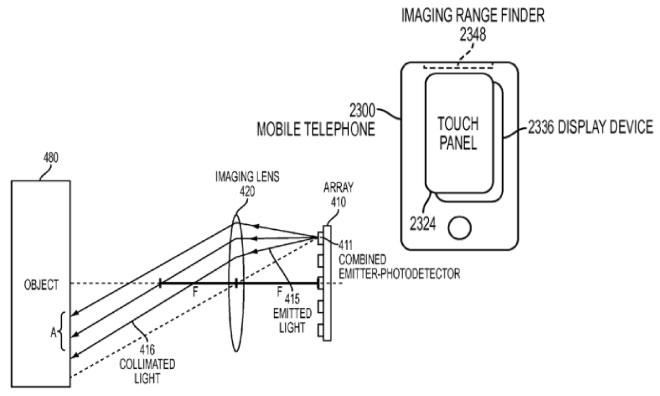

The first application, dubbed "Imaging Range Finding Device and Method," details a system similar to Microsoft's Kinect motion sensor. An array of light emitters and photodetectors is situated behind an optical lens, which is used to direct light toward a target object.

Light from the device that bounces back through the lens — as well as light emitted by the object itself — can be analyzed to determine the object's size and location. The addition of one or more moveable lenses increases the system's accuracy, according to the filing, and saves power while enabling use of the system in adverse environments such as rooms filled with smoke or fog.

Apple envisions a wide variety of uses for the range finder:

- to scan and map interior space;

- for 3D object scanning and pattern matching;

- as a navigation aid for the visually impaired to detect landmarks, stairs, low tolerances, and the like;

- as a communication aid for the deaf to recognize and interpret sign language for a hearing user;

- for automatic foreground/background segmentation;

- for real-time motion capture and avatar generation;

- for photo editing;

- for night vision;

- to see through opaque or cloudy environments, such as fog, smoke, or haze;

- for computational imaging, such as to change focus and illumination after acquiring images and video;

- for autofocus and flash metering;

- for same-space detection of another device;

- for two-way communication;

- for secure file transfers;

- to locate people or objects in a room;

- and to capture remote sounds.

The system is similar to technology developed by Israeli outfit PrimeSense, which Apple acquired last year for $360 million. Prior to the Apple sale, PrimeSense was working on a 3D sensor called the Capri 1.25, which was small enough to be integrated into a mobile device.

Apple credits Matthes Emanuel Last of Davis, Calif., for the invention of U.S. Patent Application No. 61698375.

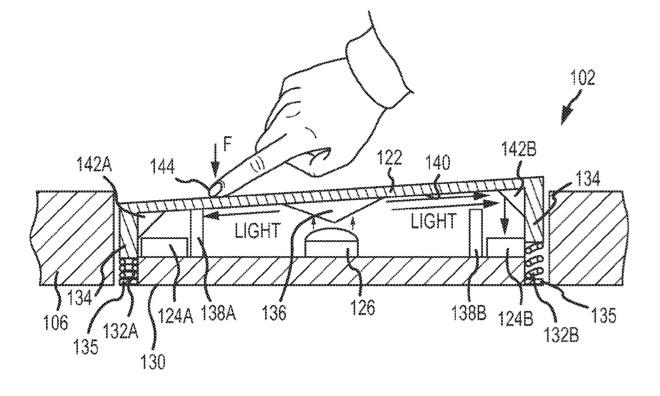

The second application, "Optical Sensing Mechanisms for Input Devices," reveals a new type of trackpad whose position is determined by reflected light. The trackpad would be allowed to move freely along multiple planes in a manner that could best be described as similar to a large, flat joystick.

An optical sensing mechanism would detect the trackpad's vertical, lateral, and angular displacement as well as the velocity, force, acceleration, and pressure of movements. Those parameters would be used to detect gestures and initiate actions based on different forces, velocities, or pressures.

Users could, for instance, instruct the operating system to prioritize one task over others by clicking an icon "harder." They could also define different profiles that would allow the same gesture to perform multiple actions depending on the force with which the gesture was executed.
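
One way to picture such a profile is as a lookup from measured force to action. The sketch below is purely illustrative and is not drawn from the filing: the `ForceProfile` class, the normalized 0-to-1 force scale, the threshold values, and the action names are all assumptions.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class ForceProfile:
    """Hypothetical profile: one gesture, several actions selected by force."""
    gesture: str
    thresholds: tuple  # ascending force boundaries (assumed normalized 0..1)
    actions: tuple     # one more entry than thresholds

    def action_for(self, force: float) -> str:
        # bisect_right finds which force band the reading falls into.
        return self.actions[bisect_right(self.thresholds, force)]


# Assumed example: the same click does more the harder it is pressed.
click = ForceProfile(
    gesture="click",
    thresholds=(0.3, 0.7),
    actions=("select", "open", "open_with_priority"),
)

for force in (0.2, 0.5, 0.9):
    print(force, click.action_for(force))
```

Under these assumed thresholds, a light click selects an icon, a firmer one opens it, and the hardest press opens it with priority.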

Additionally, combining the various input parameters could eliminate the need for multiple independent control surfaces, such as buttons on a gamepad:

> Consider, for example, a user playing an auto racing simulation. A track pad, as described herein, may be used to control and/or coordinate several aspects of the game, generating simultaneous inputs. Lateral motion of the track pad may steer the vehicle. Force exerted on the track pad (downward force, for example) may control acceleration. Finger motion on a capacitive-sensing (or other touch-sensing) surface may control the view of the user as rendered on an associated display. Some embodiments may even further refine coordinated inputs. Taking the example above, downward force at a first edge or area of the track pad may control acceleration while downward force at a second edge or area may control braking.
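
The racing example in the filing amounts to turning one trackpad reading into several simultaneous game inputs. A minimal sketch of that idea follows. The `TrackpadSample` fields, their value ranges, and the rule that splits the pad into two halves are assumptions made for illustration, not details from the application.

```python
from dataclasses import dataclass


@dataclass
class TrackpadSample:
    """Hypothetical single reading from the movable trackpad."""
    lateral: float    # pad displacement, -1.0 (left) to 1.0 (right)
    force: float      # downward force, 0.0 to 1.0
    press_x: float    # where the force is applied, 0.0 (first edge) to 1.0 (second edge)
    finger_dx: float  # finger motion on the touch surface


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def to_game_inputs(sample: TrackpadSample) -> dict:
    """Map one reading to steering, throttle, brake, and camera inputs."""
    pressure = clamp(sample.force, 0.0, 1.0)
    # Assumed split: force on the first half accelerates, on the second half brakes.
    on_first_edge = sample.press_x < 0.5
    return {
        "steer": clamp(sample.lateral, -1.0, 1.0),
        "throttle": pressure if on_first_edge else 0.0,
        "brake": 0.0 if on_first_edge else pressure,
        "camera_pan": sample.finger_dx,
    }


sample = TrackpadSample(lateral=0.4, force=0.8, press_x=0.2, finger_dx=-0.1)
print(to_game_inputs(sample))
```

Here a single sample steers right, applies throttle, and pans the camera at once, which is the kind of coordination that would otherwise take several separate controls.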

Apple credits Joel S. Armstrong-Muntner of San Mateo, Calif., for the invention of U.S. Patent Application No. 61700767.

Shane Cole

12 Comments

The patent applications will continue!

Seems like a new era in interfaces is on its way. Fingers will interact with light in 3D instead of capacitance in 2D. Precision in pointing and manipulating screen objects should increase considerably.

A new concept for online dating. Imagine the possibilities?

I hope Apple won't add any features that make us look like ecstatic kids flapping their arms about who have discovered a new trick.

Actually I never could understand the benefit of gestures (unless you want to look ridiculous). I couldn't understand why you couldn't just flick the screen to browse through images, rather than waving your hands about. I also couldn't understand why you have to perform a ritual dance in front of a TV to change a channel or change volume, when all that is required is a lazy touch of a button on the remote controller.

I really don't understand gesture (I really ask this honestly). Can someone kindly ruminate on this?

Replying to crysisftw: Here's one rumination: We're talking about fingers over a sensor here, not arm waving. Buttons have the same disadvantage as they did for phones: hard to learn, locked-in, fiddle-y, non-reprogrammable. Also, they're two-dimensional, a minefield of error opportunities because they're spread out in a field. This gesture-based sensor technology promises a programmable language based on multidimensional imaginary tools, instead of hardware buttons.