Documents discovered on Thursday show Apple is hard at work on tech to take iPhone picture quality to new levels, specifically a "super-resolution" imaging engine that uses optical image stabilization to capture multiple samples that are then stitched together to form an incredibly high-density photo.

With the iPhone already one of the world's most popular digital consumer cameras, Apple is looking to build on its lead in the sector with new technology that significantly boosts picture resolution without the need for more megapixels.

According to a patent application published by the U.S. Patent and Trademark Office on Thursday covering "Super-resolution based on optical image stabilization," Apple is testing out alternative uses for existing OIS tech, a markedly different path from the one taken by rival smartphone makers.

In very basic terms, the invention uses an optical image stabilization (OIS) system to take a batch of photos in rapid succession, each at a slightly offset angle. The resulting samples are fed into an image processing engine that creates a patchwork super-resolution image.

Traditional OIS systems use inertial or positioning sensors to detect camera movement like shaking from an unsteady hand. Actuators attached to a camera's imaging module (CCD, CMOS or equivalent sensor), or in some cases lens elements, then shift the component along an equal and opposite vector to compensate for the unwanted motion.
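The "equal and opposite" correction can be illustrated with a minimal sketch. It is not taken from Apple's filing: the function names, the small-tilt lens model and the 4.0 mm focal length are all assumptions chosen for illustration.

```python
import math

def image_shift_um(tilt_rad, focal_length_mm):
    """Approximate image displacement on the sensor, in microns, for a camera tilt.

    Uses the simple relation shift = f * tan(theta); a real lens model is more involved.
    """
    return focal_length_mm * 1000.0 * math.tan(tilt_rad)

def compensation_um(tilt_x_rad, tilt_y_rad, focal_length_mm=4.0):
    """Equal-and-opposite actuator offset (x, y) in microns.

    The 4.0 mm default focal length is an assumed value, not a figure from the filing.
    """
    return (-image_shift_um(tilt_x_rad, focal_length_mm),
            -image_shift_um(tilt_y_rad, focal_length_mm))

# Hypothetical shake reading from an inertial sensor, in radians.
shake = (0.001, -0.002)
correction = compensation_um(*shake)
```

Adding `correction` to the shake-induced displacement yields zero on both axes, which is the whole job of a conventional OIS loop.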

Physical modes of stabilization usually produce higher quality images compared to software-based solutions. Whereas digital stabilization techniques compensate for shake by pulling from pixels outside of an image's border or running a photo through complex matching algorithms, OIS physically moves camera components.
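For comparison, the border-pixel flavor of digital stabilization can be sketched as a crop window that slides inside a slightly larger captured frame. This is a simplified illustration under assumed names and integer pixel shifts, not any vendor's actual algorithm.

```python
def stabilized_crop(frame, out_h, out_w, shift_y, shift_x):
    """Return an out_h x out_w crop that follows the scene as it shifts in the frame.

    frame is a list of pixel rows captured with a spare margin around the output area.
    shift_y and shift_x are the measured scene displacement in whole pixels; they are
    clamped to the available margin, which is why large shakes cannot be fully corrected.
    """
    margin_y = (len(frame) - out_h) // 2
    margin_x = (len(frame[0]) - out_w) // 2
    offset_y = margin_y + max(-margin_y, min(margin_y, shift_y))
    offset_x = margin_x + max(-margin_x, min(margin_x, shift_x))
    return [row[offset_x:offset_x + out_w]
            for row in frame[offset_y:offset_y + out_h]]

# A 6x6 capture with a one-pixel margin around a 4x4 output.
frame = [[r * 10 + c for c in range(6)] for r in range(6)]
steady = stabilized_crop(frame, 4, 4, 0, 0)
shaken = stabilized_crop(frame, 4, 4, 1, -1)
```

The margin pixels are spent on stabilization rather than on the final picture, one reason physical stabilization tends to preserve more image quality.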

Apple's filing takes the traditional OIS system and combines it with advanced image processing techniques to create what it calls "super-resolution" imaging.

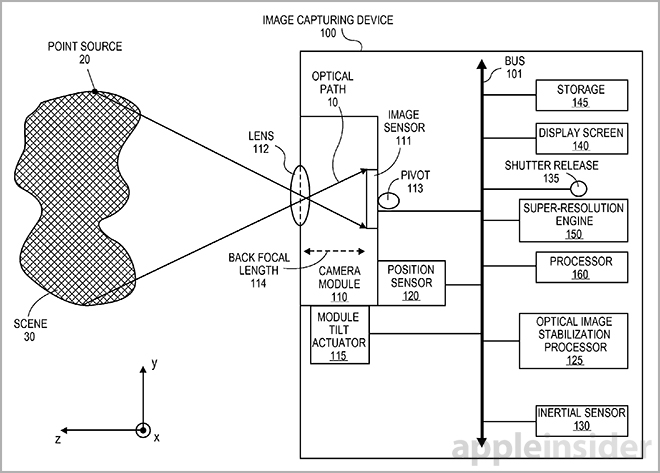

According to one embodiment, the system comprises a camera, an actuator for OIS positioning, a positioning sensor, an inertial sensor, an OIS processor and a super-resolution engine. A central processor, such as the iPhone 5s' A7 system-on-chip, governs the mechanism and ferries data between different components.

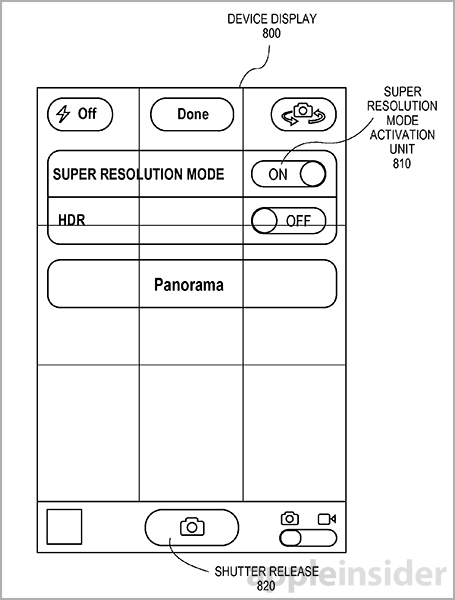

In practice, a user may be presented with a super-resolution option in a basic camera app. When the shutter is activated, either by a physical or on-screen button, the system fires off a burst of shots much like iOS 7's burst mode as supported by the iPhone 5s.

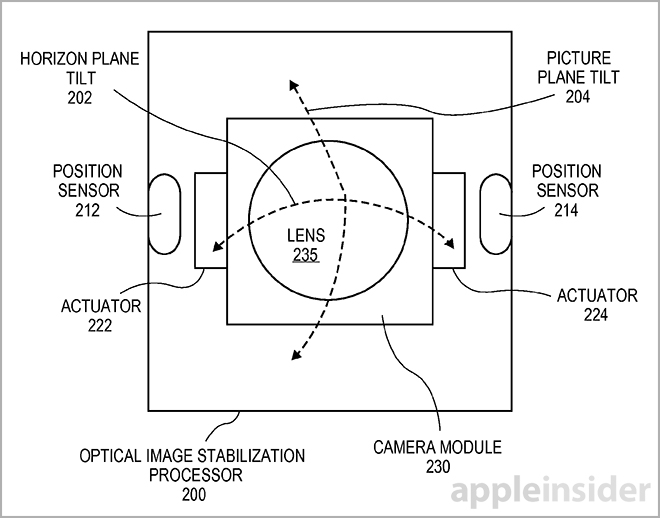

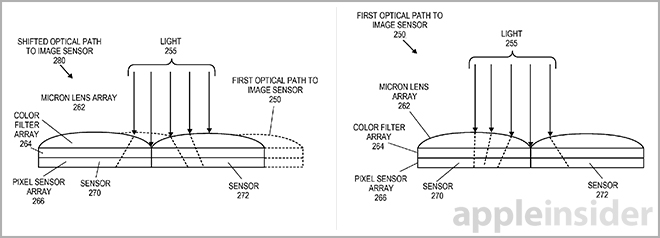

While taking the successive image samples, a highly precise actuator tilts the camera module in sub-pixel shifts along the optical path, across the horizon plane or picture plane. In some embodiments, multiple actuators can be dedicated to shifting both pitch and yaw simultaneously. Alternatively, the OIS system can translate lens elements above the imaging module.

Because the OIS processor is calibrated to control the actuator in known sub-pixel shifts, the resulting samples can be interpolated and remapped to a high resolution grid. The process is supported by a positioning sensor that can indicate tilt angle, further enhancing accuracy.

Considered a low-resolution sample, each successive shot is transferred to a super-resolution engine that combines all photo data to create a densely sampled image. Certain embodiments allow for the lower resolution samples to be projected onto a high-resolution grid, while others call for interpolation onto a sub-pixel grid.
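The idea of projecting low-resolution samples onto a high-resolution grid can be sketched with a basic "shift-and-add" scheme. This is an illustrative stand-in, not the method described in the filing: it assumes ideal point sampling, shifts of exactly one high-resolution pixel, and no blur or noise, and all function names are hypothetical.

```python
def sample_frame(scene, scale, dy, dx):
    """Simulate one low-res capture: every `scale`-th pixel of the scene, starting at (dy, dx)."""
    return [row[dx::scale] for row in scene[dy::scale]]

def shift_and_add(frames, scale):
    """Place each low-res sample at its known position on a grid `scale` times denser.

    frames is a list of (dy, dx, frame) tuples, with dy and dx given in high-res pixels.
    Cells that receive several samples are averaged; cells that receive none stay None.
    """
    lr_h, lr_w = len(frames[0][2]), len(frames[0][2][0])
    hr_h, hr_w = lr_h * scale, lr_w * scale
    total = [[0.0] * hr_w for _ in range(hr_h)]
    count = [[0] * hr_w for _ in range(hr_h)]
    for dy, dx, frame in frames:
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                total[y * scale + dy][x * scale + dx] += value
                count[y * scale + dy][x * scale + dx] += 1
    return [[total[y][x] / count[y][x] if count[y][x] else None
             for x in range(hr_w)] for y in range(hr_h)]

# A 4x4 "scene" captured as four 2x2 frames, each offset by half a low-res pixel.
scene = [[r * 4 + c for c in range(4)] for r in range(4)]
scale = 2
frames = [(dy, dx, sample_frame(scene, scale, dy, dx))
          for dy in range(scale) for dx in range(scale)]
restored = shift_and_add(frames, scale)
```

In this idealized case the four 2x2 captures fill every cell of the 4x4 grid, so `restored` matches `scene`. Real sensors integrate light over each pixel's area, so practical systems need interpolation and deblurring on top of this placement step.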

Finally, the super-resolution engine can apply additional techniques like gamma correction, anti-aliasing and other color processing methods to form a final image. As an added bonus, the OIS system can also be tasked with actual stabilization duties while the super-resolution mechanism is operating.
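Of the finishing steps mentioned, gamma correction is the simplest to show. The sketch below uses a plain power-law curve with an assumed gamma of 2.2 on 8-bit values; the filing does not specify a curve, and the function names are illustrative.

```python
def gamma_encode(value, gamma=2.2, max_value=255):
    """Apply power-law gamma encoding to one linear pixel value.

    gamma=2.2 is a common display value assumed here, not one taken from the filing.
    """
    normalized = value / max_value
    return round(max_value * normalized ** (1.0 / gamma))

def gamma_encode_image(image, gamma=2.2):
    """Gamma-encode every pixel of a grayscale image given as a list of rows."""
    return [[gamma_encode(v, gamma) for v in row] for row in image]

linear = [[0, 64], [128, 255]]
encoded = gamma_encode_image(linear)
```

Black and white are left unchanged while midtones are lifted, which is the usual effect of encoding linear sensor data for display.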

The remainder of Apple's filing offers greater detail on system calibration, alternative methods of final image construction and key optical thresholds required for accurate operation. Also discussed are filters, sensor structures like microlenses and specifications related to light and color handling.

It is unknown whether Apple will implement its super-resolution system in a near-future iPhone, but recent rumors claim the company will forgo physical stabilization on the next-gen handset in favor of a digital solution. As OIS systems require additional hardware, the resulting camera arrays are bulky compared to a regular sensor module with software-based stabilization.

Apple's OIS-based super-resolution patent application was first filed in 2012 and credits Richard L. Baer and Damien J. Thivent as its inventors.

Mikey Campbell

88 Comments

Wow, that's the biggest link I've ever seen. So it extends on the 28 megapixel panorama shots.

Yes please, implement this Apple! That, together with a f/1.8.

> hill60: Wow, that's the biggest link I've ever seen.

That happens every now and then while Huddle converts the article to the forum. Here's the actual link: http://appft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&u=%2Fnetahtml%2FPTO%2Fsearch-adv.html&r=2&p=1&f=G&l=50&d=PG01&S1=(348%2F208.5.CCLS.+AND+20140508.PD.)&OS=ccl/348/208.5+and+pd/5/8/2014&RS=(CCL/348/208.5+AND+PD/20140508)

> So it extends on the 28 megapixel panorama shots.

Can you elaborate on that? As I understand it, the patent is on OIS.

It's funny/fascinating/strange/ironic how the term embodiment as used by the US Patent office is a forerunner of the concept of use cases.

OIS is always a welcome addition

Seeing is believing...