Starting with next week's launch of iOS 9, users who enable "Hey Siri" will be prompted to speak a series of commands, which helps the intelligent personal assistant better understand a person's unique voice.

After the golden master of iOS 9 is installed, "Hey Siri" functionality is turned off. If users open the Settings app, go to General and then Siri, and turn "Hey Siri" back on, their device will begin a setup process.

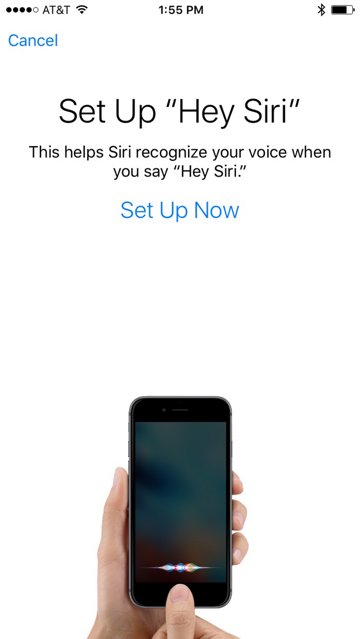

Apple informs users that the new setup will help Siri to recognize their voice when using the "Hey Siri" command.

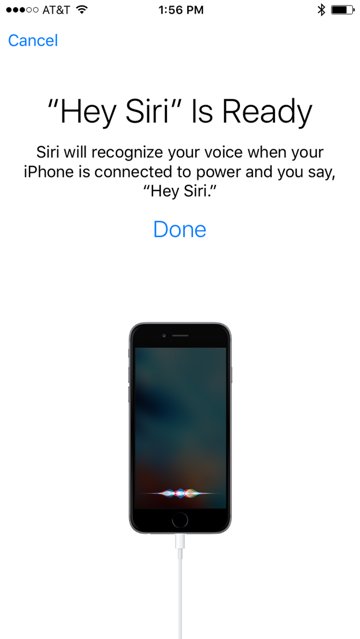

For current devices, "Hey Siri" requires the iPhone or iPad to be plugged into power. But with the forthcoming iPhone 6s series, as well as the iPad Pro, "Hey Siri" will always be listening, thanks to the M9 coprocessor built into the A9 chip.

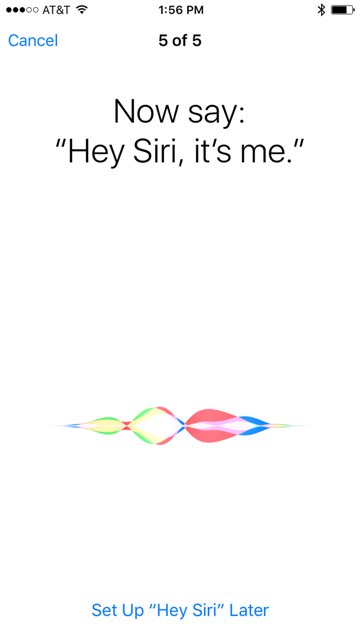

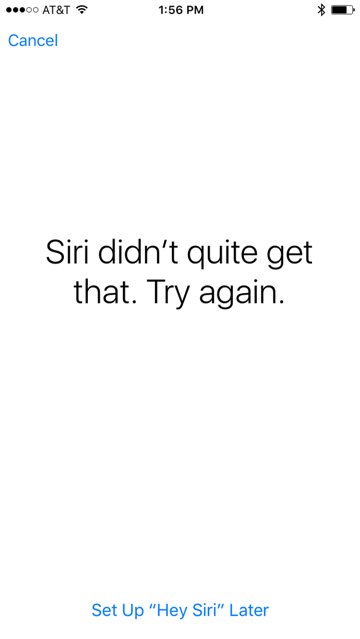

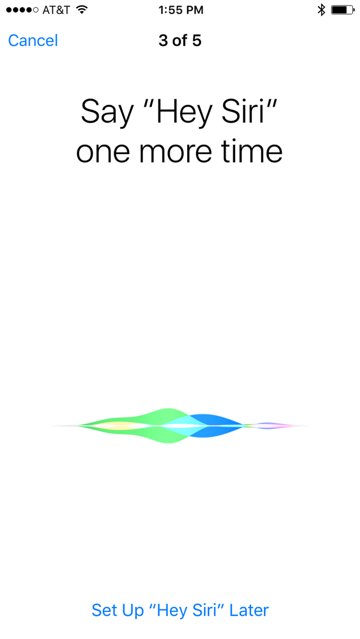

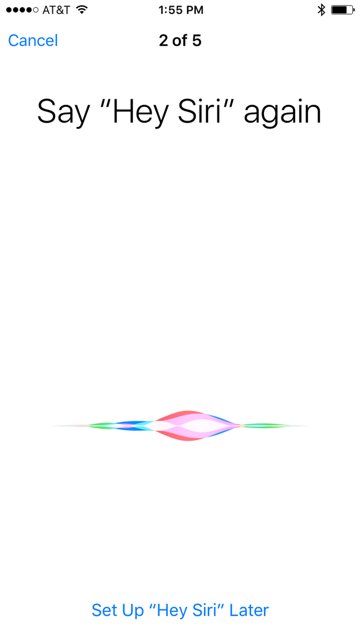

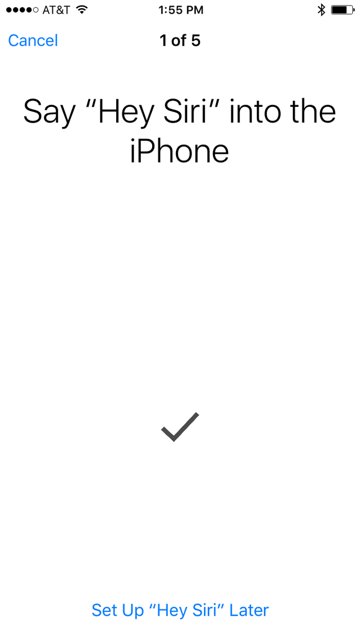

Setting up "Hey Siri" is a simple, five-step process in which users speak a number of commands. If the iPhone or iPad does not properly hear the user, they are instructed to speak again.

Users say the words "Hey Siri" three times, then "Hey Siri, how's the weather today?" followed by "Hey Siri, it's me." Once this is completed, iOS 9 informs the user that "Hey Siri" is ready to use.

Previously, in iOS 8, "Hey Siri" was enabled without a setup process. On occasion, the voice-initiated function would not work properly and would take multiple tries. Presumably Apple's new setup process will address some of those issues from iOS 8.

Users will be able to test out the new and improved "Hey Siri" when iOS 9 launches to the public next Wednesday, Sept. 16. Always-on Siri support will debut in the iPhone 6s and iPhone 6s Plus when they launch on Sept. 25, while support for the iPad Pro will arrive when the jumbo-sized tablet debuts in November.

AppleInsider Staff


39 Comments

That's a disappointment, I had thought the necessity of training voice recognition systems was a thing of the past.

I kept trying to do this by asking variations of "Setup 'Hey Siri'." I would have expected this to work via Siri without having to tunnel into Settings.

[quote name="ascii" url="/t/188193/with-ios-9-hey-siri-gains-a-new-setup-process-tailored-to-your-voice#post_2774957"]That's a disappointment, I had thought the necessity of training voice recognition systems was a thing of the past.[/quote] This is the future if you want your digital personal assistant to respond only to your voice. I'd like Apple to take this even further with better training for people with different accents or even speech impediments so the system knows your speech pattern for every phoneme.

[quote name="SolipsismY" url="/t/188193/with-ios-9-hey-siri-gains-a-new-setup-process-tailored-to-your-voice#post_2774959"] This is the future if you want your digital personal assistant to respond only to your voice. I'd like Apple to take this even further with better training for people with different accents or even speech impediments so the system knows your speech pattern for every phoneme.[/quote] I[B] think[/B] some systems are doing that now. A few months back I had to have some very unexpected "alterations" to my mouth which negatively impacted my clarity of speech. For quite some time afterward, Google misunderstood what I said somewhat frequently. In the past few weeks it's back to 90% or more accuracy. Could be me or could be Google learning my new speech patterns. I suspect it's Google.

The iPhone 6S and 6S Plus have extra hardware to support this feature without draining the battery: Cirrus Logic's new smart audio codec with embedded voice-processing software. In addition, the 6S and 6S Plus have a fourth microphone, so if you lay your phone down or it's in a purse, etc., it is less likely to have all of its mics blocked. Earlier models do not have the latest Cirrus Logic hardware and require the device to be plugged in to avoid draining the battery.