The 3D laser scanning module that may make an appearance on the "iPhone 8" raises a number of questions, and a new report attempts to shed some light on the subject, including what model of iPhone it might appear on this year.

According to Rod Hall from investment firm J.P. Morgan, the sensor expected to be used in this year's "iPhone 8" is aimed at a practical face scanning utility, and not solely or specifically at augmented reality applications.

Apple's Touch ID sensor has a potential error rate of 1 in 50,000. Hall believes that, even in its earliest implementation, a laser-based 3D sensor, as opposed to an infrared one, will be far more accurate, bolstering the already very secure Touch ID.
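To put the 1 in 50,000 figure in perspective, here is a minimal sketch of how a per-attempt false-match rate compounds over several tries. It assumes each attempt is independent and uses a five-attempt limit purely as an illustrative assumption; neither detail comes from Hall's report, and the function name is hypothetical.

```python
def false_match_probability(per_attempt_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries is falsely accepted."""
    return 1.0 - (1.0 - per_attempt_rate) ** attempts


# Per-attempt rate quoted in the article for Touch ID.
TOUCH_ID_RATE = 1 / 50_000

# The five-attempt case is an illustrative assumption, not a figure from the report.
for attempts in (1, 5):
    p = false_match_probability(TOUCH_ID_RATE, attempts)
    print(f"{attempts} attempt(s): roughly 1 in {round(1 / p):,}")
```

A sensor with a lower per-attempt rate shrinks the cumulative figure proportionally, which is the kind of improvement Hall expects from a 3D scan.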

Basics of the technology

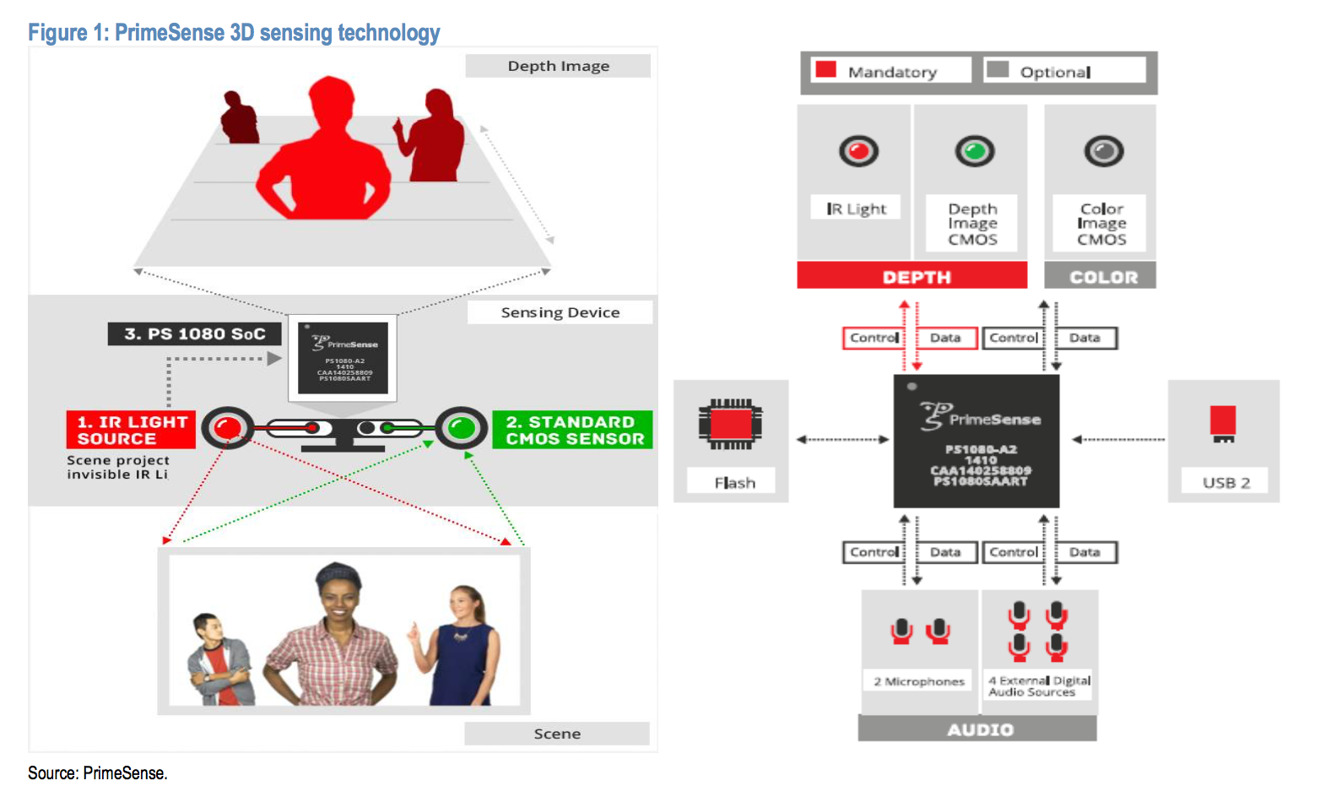

A 3D sensor needs a few key features: a light emitter in the form of an LED or laser diode, a light filter for a noise-free scan, and a speedy image sensor coupled with a powerful signal processor. Each aspect poses implementation difficulties, which Hall believes Apple and partner PrimeSense have resolved in a relatively inexpensive package that should add not much more than 3 percent to the build cost of an iPhone.

At first glance, the technology described resembles a miniaturized version of a LIDAR mapper or rangefinder.

At present, at macro scale, LIDAR is used in many physical sciences, and heavily used by the military in ordnance guidance suites. Resolution and accuracy depend greatly on a combination of factors, not the least of which is the integration of the laser and the sensor, which Apple's acquisition of PrimeSense appears intended to address.

Adding weight to the LIDAR comparison, a presentation in December by machine learning head Russ Salakhutdinov covered Apple's artificial intelligence research in "volumetric detection of LIDAR," which could be utilized in an iPhone, as well as in a larger platform.

Obviously, an iPhone won't have the power necessary to blanket a large geographical area with a laser for mapping, nor the processing power to deal with the data. However, a smaller volume such as that occupied by a user is easily covered by a very low power emitter.
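As a rough illustration of the rangefinder comparison, the sketch below assumes a pulsed time-of-flight design, in which distance is half the round-trip travel time of the light multiplied by the speed of light. The report does not confirm that Apple's module works this way, and the 0.3 meter face distance is a hypothetical value chosen only for the example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to the target, given the time a light pulse takes to go out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Time a light pulse needs to reach a target at `distance_m` and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Hypothetical distance between the phone and the user's face.
face_distance_m = 0.3
round_trip_s = round_trip_for_distance(face_distance_m)
print(f"Round trip at {face_distance_m} m: {round_trip_s * 1e9:.2f} ns")
```

At arm's length the round trip takes only a couple of nanoseconds, which suggests why a speedy image sensor and a powerful signal processor are listed among the key requirements.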

Miniaturized hardware

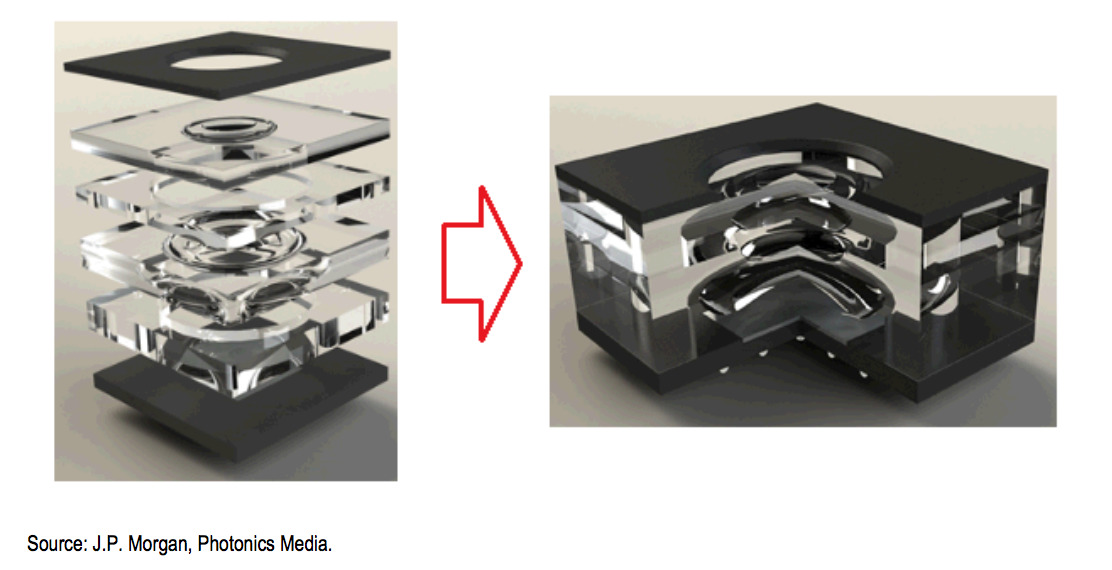

Any 3D laser scanning apparatus in a smartphone would likely be introduced in a wafer-level "stacked" optical column, rather than a conventional barrel lens.

While more complex, the stack occupies less volume in the phone, and is arguably more resistant to damage. However, optical diffraction can be a problem, as can lower yield rates in production, potentially driving the cost up or limiting the supply of stack-equipped devices reaching retail.

The technology has uses beyond facial recognition. A potential API release, which is unlikely early in the hardware's life, could open up other uses such as augmented reality and virtual reality headsets, clothing sizing, accurate distance measurements for home improvement, scanning for 3D printing, appliance and HomeKit integration, and other applications that need precise volumetric scans or range-finding.

Outside Cupertino

Given Apple's ownership of PrimeSense intellectual property, the company stands to gain from other industries' implementation of the technology as well.

"Creating small, inexpensive, 3D scanning modules has interesting application far beyond smartphones. Eventually we believe these sensors are likely to appear on auto-driving platforms as well as in many other use cases," writes Hall. "Maybe a fridge that can tell what types of food you have stored in it isn't as far off as you thought."

While the "iPhone 8" is expected to be laden with all of Apple's newest technologies, including an OLED wrap-around display and a fingerprint sensor embedded in the screen glass, among others, the 3D sensing technology may not be limited to just the high end. Hall believes that there is "more unit volume" for production of the sensor sandwich, opening up the possibility that it will appear on the "iPhone 7s" series, in addition to the expected $1,000+ high-end "iPhone 8."

Apple purchased Israel-based PrimeSense in late 2013, for around $360 million. PrimeSense created sensors, specialized silicon and middleware for motion sensing and 3D scanning applications, and was heavily involved in the development of Microsoft's Kinect sensor technology before the deal.

Mike Wuerthele

14 Comments

Might this also have enhanced camera applications?

Many years ago someone created a meme called "the Apple product cycle" which was really about the Apple rumours cycle. It went through the early rumour stage fuelled by obscure publications in Asia, the grand product release, the line ups on launch day, shortages, delays in shipping dates, and snarky commentary from media about how real soon the x killer will be produced by a competitor and thus Apple is doomed, etc. We are clearly in that early stage where every theoretical and wondrous feature gets added to the yet to be revealed product. The point of the story was that not every rumour gets added to the wondrous new product, and the rumour addicts get disappointed, to much wailing and gnashing of teeth.

This is cool, but what I really want in my next iPhone is this:

https://en.m.wikipedia.org/wiki/Mosquito_laser