The U.S. Patent and Trademark Office on Tuesday granted Apple a patent for acoustic fingerprint imaging technology accurate enough to replace existing capacitive Touch ID readers, bolstering rumors that the iPhone home button is not long for this world.

Apple's U.S. Patent No. 9,747,488 for an "Active sensing element for acoustic imaging systems" describes a method of gathering biometric data, specifically a fingerprint, through ultrasonic transmission and detection.

Collected fingerprint images, once processed, can be applied to user authentication in much the same way as Touch ID. Unlike Apple's current fingerprint reading hardware, however, acoustic imaging technology does not require optical access to an evaluation target, meaning ultrasonic transducers can be placed beneath operating components like a display.

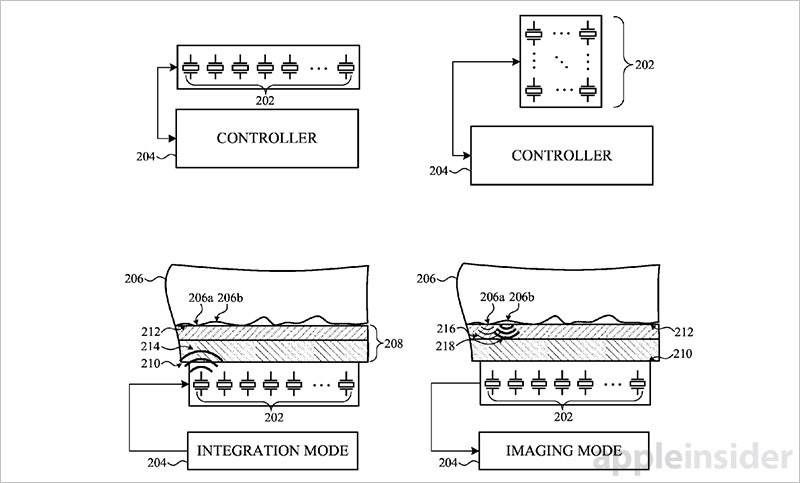

In practice, the acoustic imaging system described incorporates an array of piezoelectric transducers arranged in a pattern near the bottom surface of a given substrate. Configured to collect image data of an object engaging a top surface of the substrate, like a finger or stylus, these components send out an acoustic pulse toward the top substrate surface.

A portion of the acoustic pulse is reflected off the top substrate back toward the transducer array, where the returning acoustic waves can be parsed to determine the image of a target. More specifically, the pulse is reflected back as a function of whatever object is touching the top substrate.

For example, a finger introduces an acoustic impedance mismatch, otherwise known as an acoustic boundary, between itself and the top surface of a substrate. A fingerprint's ridges and valleys present different acoustic boundaries, soft tissue-substrate and air gap-substrate, respectively, and therefore produce distinct acoustic output to be detected by the sensing elements.
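The difference between the two boundaries can be sketched with the standard normal-incidence reflection formula, R = (Z2 - Z1) / (Z2 + Z1), where Z1 and Z2 are the acoustic impedances on either side of the boundary. The impedance figures below are rough textbook values assumed for illustration (a glass substrate, soft tissue and air); they are not taken from the patent, and the function name is hypothetical.

```python
def reflection_coefficient(z_substrate, z_load):
    """Pressure reflection coefficient for a wave travelling through the
    substrate and hitting the boundary with whatever touches its top surface
    (normal incidence, lossless media)."""
    return (z_load - z_substrate) / (z_load + z_substrate)


# Approximate acoustic impedances in MRayl. These are ASSUMED illustrative
# values, not figures from Apple's patent.
Z_GLASS = 13.0     # substrate
Z_TISSUE = 1.6     # fingerprint ridge in contact with the substrate
Z_AIR = 0.0004     # air gap under a fingerprint valley

r_ridge = reflection_coefficient(Z_GLASS, Z_TISSUE)
r_valley = reflection_coefficient(Z_GLASS, Z_AIR)

# The fraction of acoustic energy reflected is the square of the coefficient.
energy_ridge = r_ridge ** 2
energy_valley = r_valley ** 2

print(f"ridge:  R = {r_ridge:.3f}, reflected energy = {energy_ridge:.1%}")
print(f"valley: R = {r_valley:.3f}, reflected energy = {energy_valley:.1%}")
```

Under these assumptions a valley reflects nearly all of the pulse, while a ridge lets a noticeable share pass into the finger, which is the contrast the sensing elements would pick up.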

Once collected at the piezoelectric transducer array, reflected acoustic pulses are turned into electrical signals and analyzed. In some embodiments, electrical signals correspond to a single pixel of a larger sub-image. Applied to the example above, pixels corresponding to fingerprint ridges may be lighter than those assigned to valleys.
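As a rough illustration of the signal-to-pixel step, the sketch below maps a small grid of reflected amplitudes to 8-bit grayscale values, with weaker reflections (ridges) rendered lighter, as in the example above. The function name, the normalization and the sample amplitudes are all assumptions made for illustration; the patent's actual processing is not described at this level of detail.

```python
def amplitudes_to_pixels(amplitudes):
    """Map a 2D grid of reflected-signal amplitudes to 0-255 grayscale.

    Hypothetical mapping: the weakest reflection in the grid becomes white
    (255) and the strongest becomes black (0).
    """
    lo = min(min(row) for row in amplitudes)
    hi = max(max(row) for row in amplitudes)
    span = hi - lo
    if span == 0:
        # Uniform input carries no contrast, so return a flat image.
        return [[0 for _ in row] for row in amplitudes]
    return [[round(255 * (hi - a) / span) for a in row] for row in amplitudes]


# ASSUMED sample data: 0.78 stands in for a ridge, 1.00 for a valley.
sample = [
    [0.78, 1.00, 0.78],
    [1.00, 0.78, 1.00],
]
pixels = amplitudes_to_pixels(sample)
print(pixels)
```

Each value in the returned grid plays the role of one pixel in a sub-image; a real system would then stitch sub-images together and pass the result to a matching algorithm.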

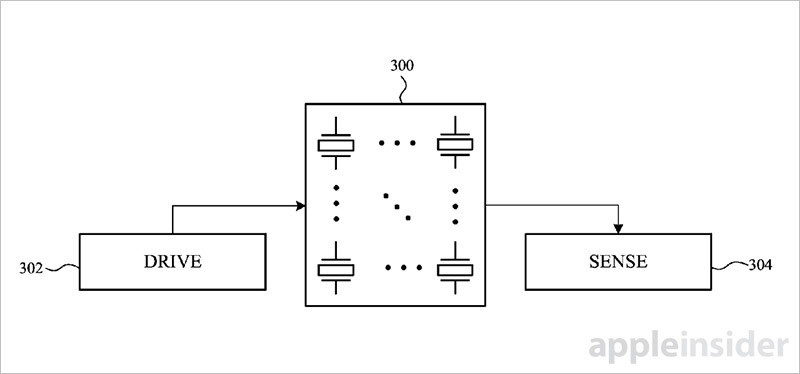

In the patent, Apple notes that traditional acoustic imaging systems suffer from a number of limitations. For example, driving piezoelectric elements can require substantially higher voltages than sensing with those same components. Apple estimates piezoelectric elements are driven by high-voltage circuits ranging from 0 to 100 volts, while sensing is performed by low-voltage circuits running at 0 to 3.3 volts.

Additionally, because piezoelectric parts are commonly made from high-capacitance materials, driving these elements at high voltages can result in damaging current spikes.
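The current-spike concern follows from the basic capacitor relation i = C · dV/dt: the larger the voltage step applied to a capacitive element in a given time, the larger the current. The sketch below compares a 100-volt drive step with a 3.3-volt step. The capacitance and rise time are assumed round numbers chosen for illustration, not values from the patent, and the function name is hypothetical.

```python
def peak_current(capacitance_f, voltage_step_v, rise_time_s):
    """Approximate current needed to charge a capacitance through a linear
    voltage ramp, using i = C * dV/dt."""
    return capacitance_f * voltage_step_v / rise_time_s


# ASSUMED illustrative values, not taken from the patent.
CAPACITANCE_F = 1e-9   # 1 nF transducer element
RISE_TIME_S = 10e-9    # 10 ns edge

drive_current = peak_current(CAPACITANCE_F, 100.0, RISE_TIME_S)
low_voltage_current = peak_current(CAPACITANCE_F, 3.3, RISE_TIME_S)

print(f"100 V step: {drive_current:.2f} A")
print(f"3.3 V step: {low_voltage_current:.2f} A")
print(f"ratio: {drive_current / low_voltage_current:.1f}x")
```

With the same element and edge rate, the current scales directly with the voltage step, which is why circuitry sized for 3.3-volt sensing cannot simply be reused to drive the element.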

To solve these and other potentially fatal architectural flaws, Apple proposes a system of integrated transducer controllers capable of independently operating both drive and sense modes. In some cases, sense/drive chips dedicate one or more sense and drive circuits to an individual transducer. This configuration allows the system granular control over a unit's drive signal, voltage bias and ground reference, effectively turning the transducer package into an "active sensor."

The remainder of today's patent discusses specifics like modes of operation, voltage tolerances, alternative embodiments and other details.

Whether Apple intends to implement the acoustic imaging technology in a shipping product is unclear, though rumors earlier this year suggested the company was looking to debut a sub-screen fingerprint reader in the forthcoming "iPhone 8" handset. An embedded solution would replace the years-old home button, affording space for a full-face OLED display. More recently, however, insiders have claimed the company scrapped plans to integrate Touch ID into the next-generation smartphone altogether.

Instead of — or alongside — fingerprint authentication, Apple is widely expected to unveil advanced facial recognition technology powered by new depth-sensing camera hardware. Lines of code unearthed in recently leaked HomePod firmware suggest the so-called "FaceDetect" feature will inform a range of functions, from user authentication to payments.

Apple's acoustic imaging patent was first filed in August 2015 and credits Mohammad Yeke Yazdandoost, Giovanni Gozzini and Jean-Marie Bussat as its inventors.

Mikey Campbell

25 Comments

Is it way better than Qualcomm's Ultrasonic Biometric Fingerprint Scanner?

I wonder if its wet-finger authentication is any better than Touch ID's?

Could this be it? Is this the Touch ID solution for the new phone? Was this purposely held or granted later so it would be closer to an actual reveal? I love Touch ID but the physical button needs to go. It would be great if they developed a new way of doing it that put them years ahead of the competition.

Here's to hoping.