Home entertainment devices like the HomePod could be improved by analyzing the local environment and a user's actions, an Apple concept suggests, with monitoring of a room potentially able to improve gesture-based control of audio, as well as contextual responses like turning down the volume if the room is empty.

Granted by the U.S. Patent and Trademark Office on Thursday, the patent for "Multi media computing or entertainment system for responding to user presence and activity" discusses how a HomePod or another computing device could take advantage of real-time three-dimensional sensor data in a variety of ways.

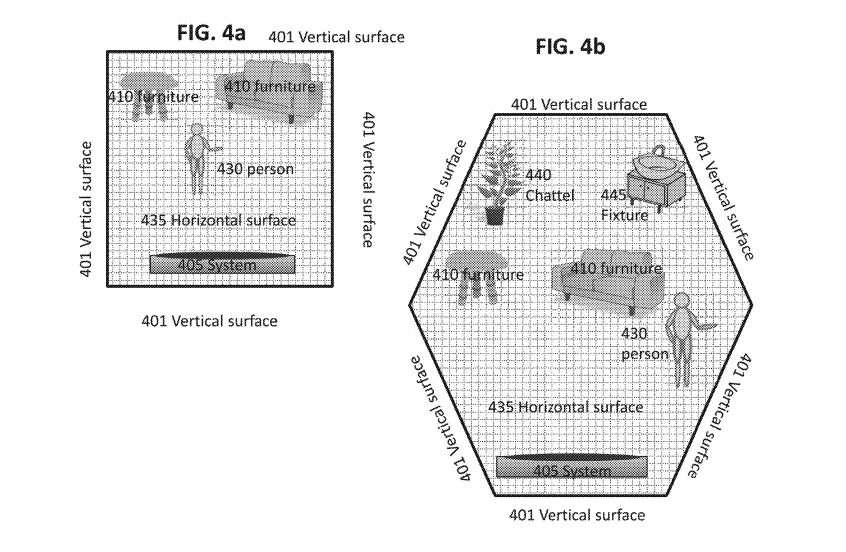

The sensor data could be used to create a depth map of the room, updated over time to cope with changes such as moved or new furniture. This would give the device knowledge of the room's layout, along with a baseline that future room scans can be compared against. In theory, comparing live data against this blank slate would let the system work out where users are.
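The article doesn't describe how that comparison works. A common technique for this kind of job is depth-based background subtraction, sketched below in Python purely as an illustration, not as Apple's method. It assumes each depth frame arrives as a grid of distances in metres, with 0.0 marking a missing reading; the function names, the 0.15 m threshold and the blending rate `alpha` are hypothetical choices.

```python
from typing import List

DepthMap = List[List[float]]  # metres per pixel; 0.0 marks a missing reading
Mask = List[List[bool]]


def foreground_mask(background: DepthMap, frame: DepthMap,
                    threshold: float = 0.15) -> Mask:
    """Flag pixels that are noticeably closer to the sensor than the stored room."""
    return [
        [fr > 0.0 and (bg - fr) > threshold for bg, fr in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def update_background(background: DepthMap, frame: DepthMap,
                      exclude: Mask, alpha: float = 0.05) -> DepthMap:
    """Blend a live frame into the stored room model with an exponential moving average.

    Pixels in `exclude` (ideally only those attributed to people) and missing
    readings keep their old value, so users don't fade into the background,
    while a moved chair is gradually absorbed over many frames.
    """
    updated = []
    for bg_row, fr_row, ex_row in zip(background, frame, exclude):
        updated.append([
            bg if (ex or fr <= 0.0) else (1.0 - alpha) * bg + alpha * fr
            for bg, fr, ex in zip(bg_row, fr_row, ex_row)
        ])
    return updated
```

Passing the raw foreground mask as `exclude` would stop newly placed furniture from ever being absorbed, so a real system would also need some way to tell people apart from objects; that step is left out of this sketch.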

Once a user is detected, the depth map data could be used to locate their hands, narrowing the overall mapped area down to just the relevant section. The system could then refine those earlier results to detect the hand more accurately, as well as the arm and the rest of the user's body.
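Apple's description, as reported, doesn't specify a segmentation method. One simple heuristic, shown here only as an illustration, assumes the hand is the part of the user closest to the device: start at the nearest foreground pixel and grow a connected region of pixels that sit within a narrow depth band of it. `segment_hand` and the 0.10 m band are hypothetical, and the input mask is assumed to come from a background-subtraction step.

```python
from collections import deque
from typing import List, Optional, Set, Tuple

Pixel = Tuple[int, int]


def segment_hand(depth: List[List[float]], mask: List[List[bool]],
                 band: float = 0.10) -> Set[Pixel]:
    """Return the connected foreground region around the pixel nearest the sensor.

    Heuristic (an assumption, not the patent's method): seed at the nearest
    foreground pixel, then flood-fill through 4-connected neighbors whose
    depth is within `band` metres of the seed.
    """
    seed: Optional[Pixel] = None
    for r, row in enumerate(mask):
        for c, is_fg in enumerate(row):
            if is_fg and (seed is None or depth[r][c] < depth[seed[0]][seed[1]]):
                seed = (r, c)
    if seed is None:
        return set()  # nobody in view

    limit = depth[seed[0]][seed[1]] + band
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < len(mask) and 0 <= nc < len(mask[nr])
                    and (nr, nc) not in region and mask[nr][nc]
                    and depth[nr][nc] <= limit):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region
```

This only makes the first coarse cut; a fuller system would refine the region further to pick out individual fingers, the arm and the rest of the body.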

According to Apple, current gesture recognition systems can fail to detect individual sections of a hand, such as fingers and their orientation, making recognition challenging in many cases. The suggested system would theoretically be able to detect finger positions, making it more suitable for gesture recognition.

Notably, the patent also suggests the proposed system could use the context of a situation to decide what action to take, including actions that don't strictly match a user's literal request. One example given is an older user asking for a higher volume, which the system could interpret as a request to emphasize speech, amplifying the voices in the media instead of increasing the average volume.
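The difference between those two responses can be shown with a simple mixing sketch. It assumes, hypothetically, that the media arrives with a separate dialogue stem (such as the center channel of a surround mix) and that something upstream has already decided whether the listener would benefit from clearer speech, represented here by a `prefers_speech` flag. Gains are in decibels, converted to linear amplitude as 10^(dB/20); all names are illustrative.

```python
from typing import List


def db_to_gain(db: float) -> float:
    """Convert a decibel change to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def mix(dialogue: List[float], background: List[float],
        dialogue_db: float = 0.0, master_db: float = 0.0) -> List[float]:
    """Sum two sample streams, with an extra gain applied to the dialogue stem."""
    d_gain = db_to_gain(dialogue_db + master_db)
    b_gain = db_to_gain(master_db)
    return [d * d_gain + b * b_gain for d, b in zip(dialogue, background)]


def respond_to_louder_request(dialogue: List[float], background: List[float],
                              prefers_speech: bool, step_db: float = 6.0) -> List[float]:
    """Answer "louder" either by lifting only speech or by raising everything."""
    if prefers_speech:
        return mix(dialogue, background, dialogue_db=step_db)  # voices up, music unchanged
    return mix(dialogue, background, master_db=step_db)        # whole mix up
```

A real mixer would also need to guard against clipping after a boost, which this sketch omits.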

The patent also notes that, in some embodiments, the system could respond to a user's intent or desire that "may or may not be expressly directed at a system." Examples include pausing music playback if the user is observed leaving the room, or adjusting the volume to compensate if they return to a part of the environment with low acoustic reflectance.
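Behavior like this boils down to a small set of rules. The sketch below is an illustration, not Apple's logic: it assumes an upstream sensor pipeline reports how many people are in the room and, when someone is present, a reflectance score from 0.0 (absorbent) to 1.0 (reflective) for the spot where they are. `PlayerState`, `react_to_presence`, the 0.3 cutoff and the 3 dB boost are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class PlayerState:
    playing: bool
    volume_db: float
    paused_for_absence: bool = False  # set when playback was auto-paused because the room emptied


def react_to_presence(state: PlayerState, occupants: int,
                      zone_reflectance: Optional[float],
                      base_volume_db: float = 0.0,
                      low_reflectance: float = 0.3,
                      compensation_db: float = 3.0) -> PlayerState:
    """Hypothetical rules: pause when the room empties, resume and compensate on return."""
    if occupants == 0:
        # Nobody is observed in the room: pause, remembering why.
        if state.playing:
            return replace(state, playing=False, paused_for_absence=True)
        return state

    # Someone is present; only resume playback that was paused for absence.
    if state.paused_for_absence:
        state = replace(state, playing=True, paused_for_absence=False)

    # Boost output if the listener stands in a low-reflectance spot.
    if zone_reflectance is not None:
        boost = compensation_db if zone_reflectance < low_reflectance else 0.0
        state = replace(state, volume_db=base_volume_db + boost)
    return state
```

Tracking why playback stopped means music the user paused deliberately stays paused when they walk back in.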

Apple files a large number of patent applications with the USPTO on a regular basis, and the discovery of a filing isn't a guarantee that Apple will use the described concepts in a future consumer device.

One Apple product, the HomePod, already performs some analysis of a room, automatically adjusting its output based on feedback from its microphone array to create room-filling sound. While this makes it adaptive, able to adjust to a new environment when it is moved to a different location, it does so using sound alone, and the device cannot recognize hand gestures at all.

One potential way to add such capabilities would be to fit a HomePod-like device with the technology used in the iPhone X's TrueDepth camera, which produces a depth map of the user's face for Face ID authentication. It can also track parts of the user's face for functions such as Animoji and some mask-based camera filters.

Malcolm Owen

5 Comments

Turning down the music when the room is empty? I suppose that makes sense, but I could make a case for turning the volume up so you can hear it in the next room.

Love both my HomePods, but I would settle for Siri hearing me when the music is playing... 20% of the time she just ignores me.

Surprised this wasn't in version 1.

With Face ID and PrimeSense, I'm hoping to see this in the next HomePod and especially (PLEASE!) Apple TV!!

Good luck to anyone doing their yoga in the same room.