Apple is exploring how to teach people to control robots and devices with code. A patent application describes a Swift Playgrounds-style system that guides users through assembling a kit of components, then teaches them how to write a program that uses the assembled hardware.

The patent application, titled "Adaptive assembly guidance system" and published by the United States Patent and Trademark Office on Thursday, notes that coding lessons already have users enter code that manipulates a virtual item in a virtual world, such as moving a character. It is a small step further to allow the same coding lessons to control a physical object, such as a robotic device.

Robotic toys are already used for educational purposes with Swift Playgrounds, such as products from Sphero, but there is also the possibility of controlling construction toys such as Lego with the educational tool. The patent application appears to be aimed at this latter category.

According to the application, the computing device used to teach coding would have some form of imaging sensor onboard, used to detect and identify physical items in an area that could be controlled by the software. The items in question could have a visual identifier on the casing to help with recognition.

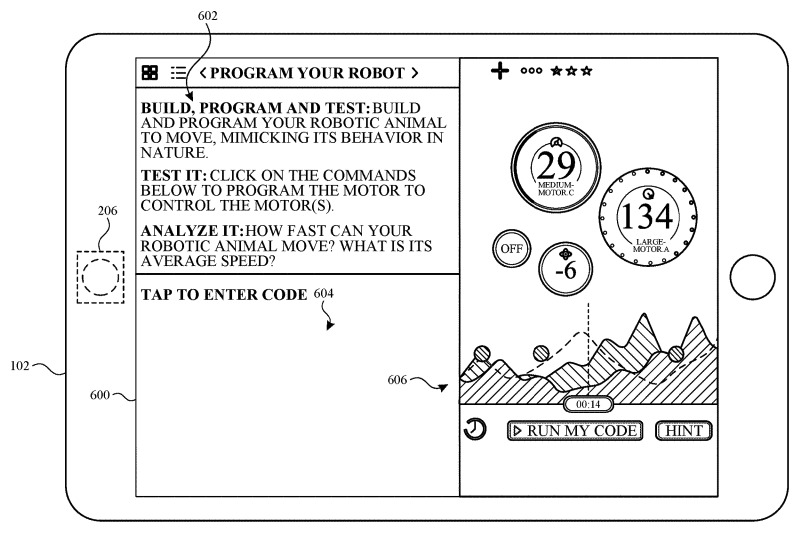

Once detected and identified, the coding application could load a library of functions that could be used by the learner to perform various actions on the physical object. An appropriate coding lesson would become available, teaching the user to enter in specific commands that would be relayed to the item and then performed.
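The detect-then-load flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the identifier string, the command format, and every name here (`FUNCTION_LIBRARIES`, `load_library`, `PhysicalItem`, `run_lesson_command`) are hypothetical and do not come from the patent application or from Swift Playgrounds.

```python
# Hypothetical registry mapping a recognized visual identifier to the
# functions a lesson would expose for that item. Each function builds a
# command string; the format is invented for this sketch.
FUNCTION_LIBRARIES = {
    "rolling-robot": {
        "move_forward": lambda distance_cm: f"MOVE {distance_cm}",
        "turn": lambda degrees: f"TURN {degrees}",
    },
}


def load_library(identifier):
    """Return the function library for a recognized item, or None."""
    return FUNCTION_LIBRARIES.get(identifier)


class PhysicalItem:
    """Stand-in for a connected device; it only records relayed commands."""

    def __init__(self, identifier):
        self.identifier = identifier
        self.received = []

    def relay(self, command):
        self.received.append(command)


def run_lesson_command(item, library, name, *args):
    """Build the command the learner typed and relay it to the item."""
    command = library[name](*args)
    item.relay(command)
    return command


robot = PhysicalItem("rolling-robot")
library = load_library(robot.identifier)
run_lesson_command(robot, library, "move_forward", 20)
run_lesson_command(robot, library, "turn", 90)
```

An item the camera cannot match to any known identifier simply gets no library in this sketch, so no lesson commands can be issued for it.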

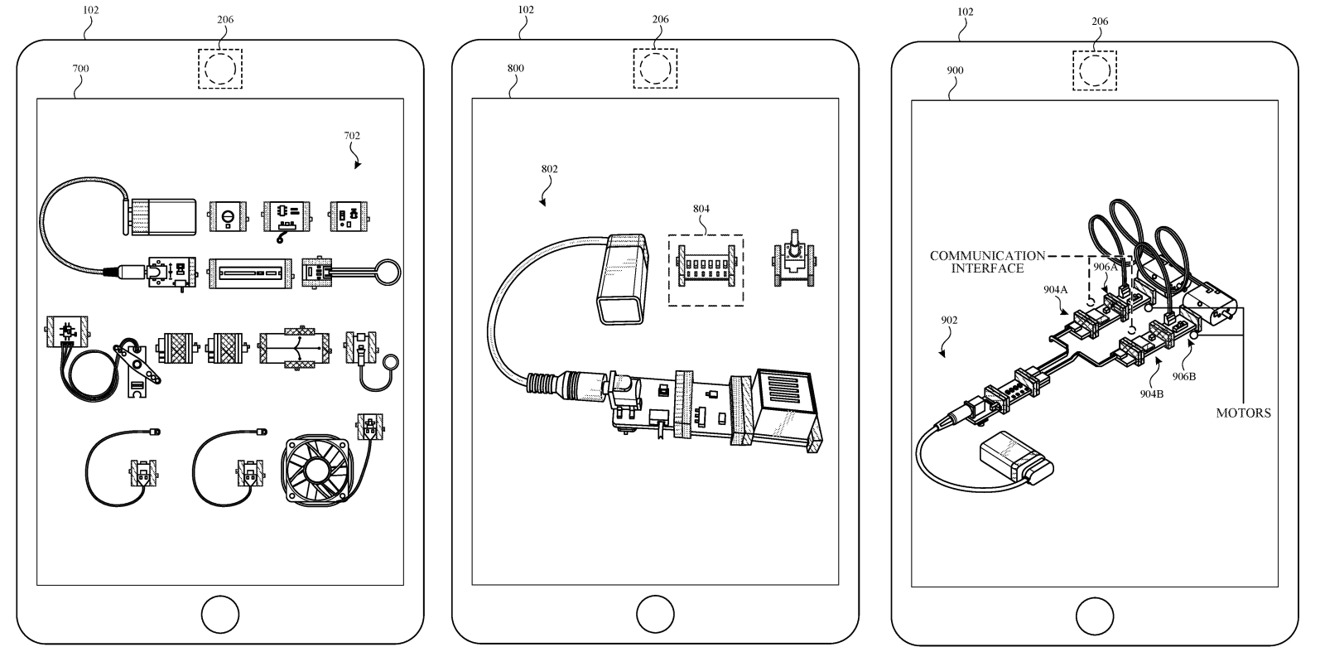

The recognition system would be especially useful for a second element, which uses kits like the Lego Mindstorms EV3 set named in the application, or a littleBits kit. These kits typically include multiple components that can be assembled in a variety of configurations, and have the communication capabilities required to receive broadcast instructions.

Here, the identification system would recognize individual components by various means, including color, shape, and other visual cues. The presence of the items would load the connected lessons in the application and, if necessary, re-establish a data connection if one previously existed.

In a multi-part set, the app may provide guidance on assembling the kit in a particular configuration, identifying where particular parts connect to each other, then continue with the coding lesson once construction is complete. If a kit is already assembled into the intended configuration, the construction stage is skipped.
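One way to picture the guidance step is as a comparison between the intended configuration and the connections the camera has already seen. The sketch below assumes a configuration can be modeled as an ordered list of part-to-part connections; that representation, the part names, and the `remaining_assembly_steps` helper are all hypothetical and are not taken from the application.

```python
def remaining_assembly_steps(target_connections, detected_connections):
    """Return the target connections that have not been made yet.

    Each connection is a pair of part names. Pair order is ignored, so
    ("hub", "motor") and ("motor", "hub") count as the same connection.
    """
    made = {frozenset(pair) for pair in detected_connections}
    return [pair for pair in target_connections if frozenset(pair) not in made]


# Hypothetical vehicle configuration, in the order the app would teach it.
vehicle = [("hub", "motor"), ("motor", "axle"), ("axle", "wheel")]

# A partly built kit: only the motor has been attached to the hub.
steps = remaining_assembly_steps(vehicle, [("motor", "hub")])

# A fully built kit yields no steps, so construction can be skipped.
skip_construction = not remaining_assembly_steps(vehicle, vehicle)
```

An empty result corresponds to the case where the kit is already in the intended configuration and the lesson can go straight to coding.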

The system would also be able to detect if specific required elements are missing, such as a sensor or a motor to drive a vehicle's wheel. In that case, the libraries and coding lessons that depend on those elements may be made unavailable until the missing parts are supplied.
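The gating described above amounts to a subset check: a lesson is offered only when every part it needs has been detected. The following is a minimal sketch, assuming each lesson declares a set of required parts; the lesson names, part names, and helper functions are hypothetical.

```python
# Hypothetical lessons and the parts each one needs.
LESSON_REQUIREMENTS = {
    "drive_forward": {"hub", "motor", "wheel"},
    "avoid_obstacles": {"hub", "motor", "wheel", "distance_sensor"},
}


def available_lessons(detected_parts):
    """Names of lessons whose required parts were all detected."""
    detected = set(detected_parts)
    return sorted(
        name
        for name, required in LESSON_REQUIREMENTS.items()
        if required <= detected  # subset test
    )


def missing_parts(lesson, detected_parts):
    """Parts the learner still needs before the lesson can unlock."""
    return sorted(LESSON_REQUIREMENTS[lesson] - set(detected_parts))


on_table = ["hub", "motor", "wheel"]
unlocked = available_lessons(on_table)
still_needed = missing_parts("avoid_obstacles", on_table)
```

Reporting the missing parts, rather than only hiding the lesson, would let the app tell the learner what to add.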

A patent application filing is generally not a guarantee that the described concepts will make their way into a future Apple product or service. In this case, however, enough of the elements are already in place that it could in fact become a reality.

Swift Playgrounds is already capable of controlling external hardware from an iPad, and has supported the aforementioned Lego Mindstorms kit since mid-2017, so half the battle is already won.

It could also be argued that the visual recognition element is partly there, as Apple already performs some computer vision tasks with its iOS device cameras, which can be made to recognize symbols and items of specific shapes and colors. It then becomes a case of changing Swift Playgrounds to include the recognition system, and working closely with producers of the kits and devices to include markers on their products to aid recognition.

Malcolm Owen

1 Comment

Kinda cool and with a lot of educational upside - especially if the software side can start to expose some basic concepts around behavioral modeling and digital twinning. This looks like it could be the basis of a fun toy for aspiring programmers, creators, and builders. At its most basic it is a 21st century version of the plastic/wood model kits some of us built as young kids (or may still build as old kids), but without the trees of molded plastic parts attached to sprue, teeny font parts identification and assembly diagrams, and of course the nearly unlimited opportunities to glue one's fingers together.