Sebastiaan de With, the developer behind popular manual photography app Halide, has been putting the iPhone XS camera system through its paces. In a comprehensive look into Apple's latest shooters, de With provides an overview of computational photography, and explains how noise reduction technology might generate selfie photos that appear artificially enhanced.

In the extensive deep dive, de With outlines why the new iPhone's images look so vastly different to those taken by the iPhone X, even though much of the hardware was carried over from last year. Notably, the developer offers an explanation for an alleged skin-smoothing effect that made headlines over the weekend.

Computational photography is the future

Apple long ago realized that iPhone's future is not necessarily in its hardware. That is why the company generally shies away from publicizing "tech specs" such as processor speed or RAM, and instead focuses on real-world performance.

The same holds true for the camera. Instead of simply cramming more pixels into its cameras each year, Apple focuses on improving existing components and, importantly, the software that drives them. As noted by de With, pure physics is quickly becoming the main obstacle to how far Apple can take its pocket-sized camera platform. To push the art further, the company is increasingly reliant on its software-making chops.

It is this software that has made such a substantial difference for iPhone XS.

SVP of worldwide marketing Phil Schiller during last month's iPhone unveiling discussed the lengths to which the Apple-designed image signal processor (ISP) goes when processing a photo.

With iPhone XS, the camera app starts taking photos as soon as it is opened, before the user even presses the shutter button. Once a photo is snapped, the handset gathers a series of separate images, from underexposed and overexposed frames to those captured at fast shutter speeds. An image selection process then chooses the best candidate frames and combines them to create a photo with very high dynamic range while retaining detail. The process clearly has some unintended consequences.
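The merge step described above can be illustrated with a simplified exposure-fusion sketch. This is not Apple's pipeline, whose details are not public. It assumes a generic "well-exposedness" weighting, where each pixel from each bracketed frame is weighted by how close it sits to mid-gray. The function names, the `target` and `sigma` parameters, and the sample pixel values are all hypothetical.

```python
import math

def well_exposedness(value, target=0.5, sigma=0.2):
    """Weight near 1 for mid-tones, near 0 for crushed shadows or clipped highlights."""
    return math.exp(-((value - target) ** 2) / (2 * sigma ** 2))

def fuse_exposures(frames):
    """Blend same-sized frames (lists of 0..1 brightness values) pixel by pixel."""
    fused = []
    for pixel_values in zip(*frames):
        weights = [well_exposedness(v) for v in pixel_values]
        total = sum(weights)  # always > 0 because exp() never returns 0 here
        fused.append(sum(w * v for w, v in zip(weights, pixel_values)) / total)
    return fused

# Three hypothetical frames of the same 3-pixel scene: shadow, mid-tone, highlight.
under = [0.02, 0.10, 0.45]
normal = [0.10, 0.40, 0.90]
over = [0.35, 0.85, 1.00]

fused = fuse_exposures([under, normal, over])
print([round(v, 3) for v in fused])
```

In this toy case the shadow pixel is drawn mostly from the overexposed frame and the highlight pixel mostly from the underexposed one, which is the basic idea behind recovering detail at both ends of the range.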

Bring the noise

To back up all that computational photography, the iPhone XS' camera app needs to take a lot of photos, very fast.

In service of this goal, the new camera favors a quicker shutter speed and higher ISO. When the shutter speed is increased, less light is captured by the sensor. To compensate for the decrease in light, the camera app increases the ISO, which decides how sensitive the sensor is to light. So less light comes in, but the camera is more sensitive to light and is able to output a properly exposed image.
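The trade-off between shutter speed and ISO can be worked through with simple arithmetic. The sketch below assumes a fixed aperture and treats image brightness as proportional to shutter time multiplied by ISO. The specific values (1/30 s, 1/120 s, ISO 100) are illustrative and are not measurements from an iPhone.

```python
import math

def iso_for_equivalent_exposure(base_shutter_s, base_iso, new_shutter_s):
    """ISO needed to keep brightness constant when shutter time changes (aperture fixed)."""
    return base_iso * (base_shutter_s / new_shutter_s)

# Hypothetical example: go from 1/30 s to a four-times-faster 1/120 s shutter.
base_shutter = 1 / 30
fast_shutter = 1 / 120

new_iso = iso_for_equivalent_exposure(base_shutter, 100, fast_shutter)
stops = math.log2(base_shutter / fast_shutter)  # light lost, in photographic stops

print(f"ISO needed: {new_iso:.0f}, light lost: {stops:.1f} stops")
```

Cutting the exposure time to a quarter loses two stops of light, so the ISO has to rise by the same factor of four to compensate.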

Sample RAW photos from iPhone X (left top and bottom) and iPhone XS RAW. | Source: Sebastiaan de With

However, as the ISO increases, more noise appears in the photo. That noise needs to be removed somehow, which is where Apple's Smart HDR and computational photography come into play.

More noise reduction leads to a slightly more smooth-looking image. This is part of the issue seen in the selfie-smoothing "problem" people have been reporting.
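To see why noise reduction and smoothness go together, here is a minimal sketch using a plain box blur on a one-dimensional row of pixels. A box blur is a stand-in chosen for simplicity; it is an assumption for illustration and not the noise reduction Apple uses. The noise level and signal values are made up.

```python
import random
import statistics

def box_blur(signal, radius=1):
    """Average each sample with its neighbours (window shrinks at the borders)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out

def max_step(signal):
    """Largest jump between neighbouring samples, a rough proxy for edge crispness."""
    return max(abs(b - a) for a, b in zip(signal, signal[1:]))

# A flat gray patch with simulated high-ISO noise (seeded for repeatability).
rng = random.Random(0)
flat_noisy = [0.5 + rng.gauss(0, 0.05) for _ in range(1000)]
flat_smooth = box_blur(flat_noisy, radius=2)

# A clean hard edge, such as the boundary of a skin pore or an eyelash.
edge = [0.0] * 4 + [1.0] * 4
edge_smooth = box_blur(edge, radius=1)

print("noise before/after:", statistics.pstdev(flat_noisy), statistics.pstdev(flat_smooth))
print("edge step before/after:", max_step(edge), max_step(edge_smooth))
```

The same averaging that suppresses the noise also spreads the hard edge across several pixels, which is why heavily denoised skin can look smoothed.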

The second reason has to do with contrast.

Less local contrast

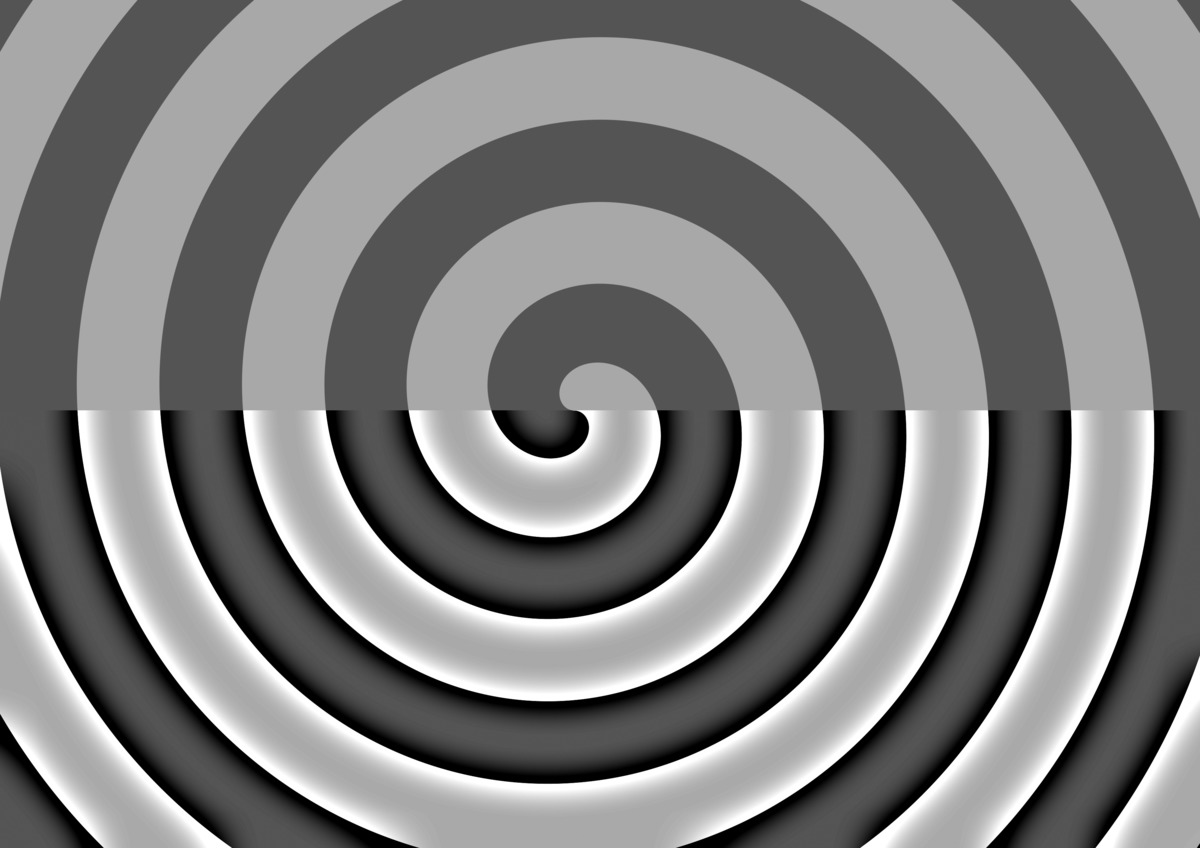

Local contrast is what most people recognize as sharpness in a photo.

Source: Wikipedia

"Put simply, a dark or light outline adjacent to a contrasting light or a dark shape. That local contrast is what makes things look sharp," de With says.

When a sharpening effect is applied to a photo, no details are actually being added, but instead the light and dark edges are boosted, creating more contrast and thus the illusion that the image is now sharper.
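The sharpening effect described above can be sketched as a basic unsharp mask on a one-dimensional row of pixels: blur the row, then add back the difference between the original and the blur. This is a textbook technique used here for illustration, not a description of any particular app's filter. The `amount` parameter and pixel values are hypothetical.

```python
def box_blur(signal, radius=1):
    """Average each sample with its neighbours (window shrinks at the borders)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out

def unsharp_mask(signal, amount=1.0, radius=1):
    """Boost the difference between each sample and its blurred neighbourhood."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]

# A soft edge from dark gray (0.2) to light gray (0.8).
edge = [0.2] * 4 + [0.8] * 4
sharpened = unsharp_mask(edge)

print([round(v, 2) for v in sharpened])
```

Flat areas are left unchanged, while the pixel on the dark side of the edge gets darker and the pixel on the light side gets lighter. No new detail is created; only the contrast across the edge increases.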

Smart HDR on the iPhone XS and XS Max does a far better job at exposing an image, which decreases the local contrast and results in an image looking smoother than it otherwise would.

Cameras for the masses

The biggest issues with this method of photography arise when shooting RAW. De With hypothesizes that the iPhone XS still prioritizes shorter exposure times and higher ISO to get the best Smart HDR photo, even when shooting RAW, which gets no benefit from Smart HDR. This leaves overexposed and sometimes blown-out photos, with details clipped and unrecoverable.

RAW shots don't get noise reduction applied either, and with the added noise from the higher ISO, this makes for extremely poor-looking images. This means that when shooting RAW, you must shoot in manual mode and purposefully underexpose the image.

Apple's apparent camera app decisions affect third-party apps — such as Halide — which is why de With says they have been working on an upcoming feature called Smart RAW. It uses a bit of their own computational magic to get more detail out of RAW photos while reducing noise. This new feature will be included in a forthcoming update to Halide.

While a lot of the analysis sounds critical, issues experienced at the hands of Apple's algorithms are so far outliers. Most selfies are taken in very unflattering light, and without the changes Apple has made, they would be poorly exposed and full of noise. Apple has likely erred on the side of caution by over-removing the noise and creating too-smooth images, but this can be pulled back.

More importantly, Apple has the ability to tinker with its firmware to solve the problems through subsequent updates if it so chooses.

As we saw in our recent iPhone XS vs X photo comparison, the iPhone XS and XS Max with Smart HDR have significantly improved photo-taking capabilities, and the vast majority of users are already seeing the benefits.

Andrew O'Hara

22 Comments

The iPhone camera photos just seem to be becoming more and more fake in my opinion. Reducing noise is one thing, but colours aren't even realistic either.

This video demonstrates the issue perfectly

https://youtu.be/Q3GGdtn9poo

Be interesting to see what DxOMark makes of the new iPhones, as the premium Android phones have outshone the iPhone camera for a while now, with the iPhone X in 8th place.

https://www.dxomark.com/category/mobile-reviews/

A better headline would be

Photography expert explains iPhone XS 'beautygate,'

I don't see how this explanation debunks the problem

I have the X Max and it takes beautiful photos. It's incredible to have a camera like that in a smartphone. The photos look the way the eye sees. Natural. On a few other smartphones, like the Galaxy, you can see what a filter is. The photos look fake, with a lot of disturbance in the background, especially if you view them on a big screen like a TV. That does not happen with the iPhone. Tremendously high quality and true-to-life photos.