While Touch ID isn't being offered as a biometric authentication method in current-generation iPhones and the iPad Pro range, Apple is still considering the possibility of adding fingerprint recognition to its mobile devices, by using acoustic imaging on the display.

Face ID has been a successful replacement for Touch ID, boasting fewer false positives than the fingerprint-based system while remaining fast to use. Face ID has brought benefits of its own, but Touch ID retains some advantages, such as in situations where it isn't prudent to use Face ID, or when unlocking the iPhone while it is still in the user's pocket.

Even so, Touch ID has a major problem: the fingerprint reader must either occupy a section of the front of the device, reducing the potential screen size, or be mounted on the back, a design choice Apple has yet to make. Based on a recently granted patent, Apple has come up with a way to perform Touch ID-style fingerprint reading without sacrificing the appearance of the iPhone itself.

The patent, titled "Acoustic pulse coding for imaging of input devices," describes how sound could be applied to a surface to detect how another object comes into contact with it. In short, this could enable a fingerprint to be read when it is pressed onto a display.

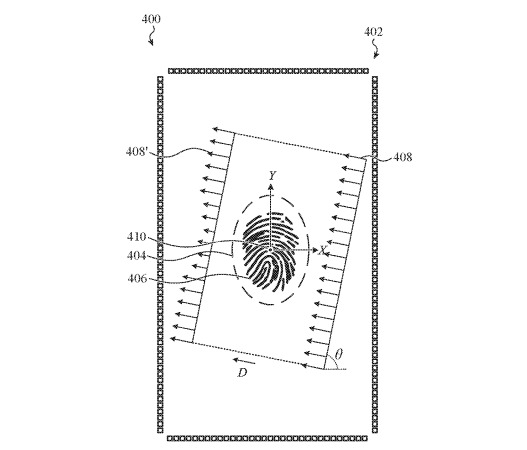

According to the patent, an array of acoustic transducers is positioned in contact with the surface and can transmit a coded impulse signal in response to a touch input. An image resolver receives the reflection data from multiple coded signals and generates an image of the input.

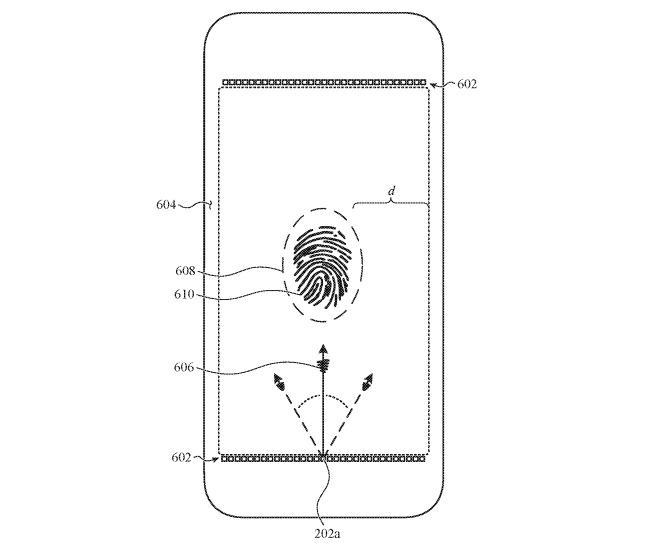

In effect, sound impulses are sent out and come into contact with the ridges of the user's fingerprint. The ridges interrupt the impulses' transit and reflect them, and these reflections are then interpreted into a fingerprint image that can be analyzed.
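The general idea of recognizing a coded impulse in its reflection can be illustrated with a toy example. The sketch below is not Apple's method and is not taken from the patent: it assumes a 13-chip Barker code as the coded impulse, simulates a single attenuated and noisy echo, and recovers the echo's arrival time by sliding the known code across the received trace (a matched filter). The function names, the code choice, and all numeric values are hypothetical.

```python
import random

# Assumption: a 13-chip Barker code stands in for the "coded impulse".
# The patent, as described in the article, does not name a specific code.
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]


def simulate_echo(code, delay, length, attenuation=0.5, noise=0.0, seed=0):
    """Build a received trace: an attenuated copy of the code starting at
    sample `delay`, optionally buried in Gaussian noise."""
    rng = random.Random(seed)
    trace = [rng.gauss(0.0, noise) if noise else 0.0 for _ in range(length)]
    for i, chip in enumerate(code):
        trace[delay + i] += attenuation * chip
    return trace


def correlate(trace, code):
    """Slide the code over the trace and score the match at each lag."""
    lags = len(trace) - len(code) + 1
    return [
        sum(trace[lag + i] * chip for i, chip in enumerate(code))
        for lag in range(lags)
    ]


def estimate_delay(trace, code):
    """Return the lag at which the code matches the trace best."""
    scores = correlate(trace, code)
    return max(range(len(scores)), key=lambda lag: scores[lag])


# Hypothetical reflection arriving 20 samples after transmission.
trace = simulate_echo(BARKER_13, delay=20, length=64, noise=0.1)
print(estimate_delay(trace, BARKER_13))
```

A real imaging system would have to combine many such measurements from many transducers to build up a picture of the ridges; this sketch only shows why a coded signal is easier to pick out of a noisy reflection than a plain pulse.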

Such a technique would provide a number of benefits over existing fingerprint reading technology, including thinner components, as the transducers can be placed at the edge and away from other components. There is also no need for electrodes to be placed on the display, which reduces the complexity of display production and, as Apple suggests it requires less processing power, could also speed up the authentication check itself.

As it is based on sound transmittance, it could also feasibly allow for fingerprint reading to be performed anywhere on the display of the device, not just one specific point.

One version involves transducers surrounding the entire display area, potentially allowing for a more accurate scan by pinging from multiple sides.

Apple files numerous patent applications on a weekly basis, and while they do indicate areas of interest for the company, there is no guarantee the ideas disclosed will appear in a future product or service.

This is not the only time Apple has looked at alternative ways to perform fingerprint-based biometrics via the display without a dedicated sensor like Touch ID's Home button. In February 2017, a filing for an "Acoustic imaging system architecture" suggested a similar method, using acoustic pulses and waves from transducers to perform reflection-based analysis of objects in contact with the surface.

In August 2017, Apple was granted a patent for a sub-display fingerprint recognition technology that also used acoustic imaging. It too used transducers to transmit pulses and analyzed the reflections, but with a system of integrated transducer controllers capable of operating in both drive and sense modes, in order to solve architectural flaws relating to high voltage requirements.

Malcolm Owen

30 Comments

Being able to read a print anywhere on the screen would make under-screen readers highly useful, and far superior to existing systems.

The obvious benefit is not requiring you to place your finger in a specific spot, which might work great for some people but not others based on size of their hands or how they hold their devices. Now you can use your device the way you like.

Another benefit would be automatic signing into Apps. If you hold your finger on an icon for a half second it not only launches the App but also reads your print at the same time for authorization. This would make it work like FaceID does now - launch the App and it authorizes/opens seamlessly.

The final one would be for security freaks. You could require two fingers to press the display to authorize certain functions (like turning off Find My iPhone or erasing a device). This would be much harder to spoof than a single print.

Face ID works so much better for me than Touch ID ever did. I sure hope they don't plan on ditching Face ID for Touch ID.

I thought I would prefer Touch ID, but once adjusting, the only place I really miss it is with Apple Pay...there are some times it’s just awkward to use Face ID with your phone on the payment terminal... in practically every other situation, Face ID has seemed to be quicker and easier. I guess when I’m trying to discreetly peek at my phone it could be useful as well, but even then, I can usually get my face enough in view for Face ID to work.