Adventurous iPhone users wanting to use their devices to take photographs underwater may enjoy better results in the future, as Apple is exploring ways to automatically adjust an image when the smartphone is immersed in water to offer the best picture possible.

Underwater photography isn't a new concept. Both professional and amateur photographers have long used it to produce imaginative shots and to show off things that simply cannot be seen above water, like fish swimming around a coral reef. As technology has progressed, the number of water-resistant devices has increased, making it easier for practically anyone to try taking a photograph while submerged.

Despite the ease of taking shots underwater, cameras are designed on the assumption that conditions are ideal, such as open air with bright light, so the results from within water can be disappointing. The lower amount of light and reduced visibility, as well as differences in light color, may make for an image that falls far below the user's expectations, with flaws that Apple suggests could take the form of an "undesired greenish color cast."

In a patent application published by the US Patent and Trademark Office on Thursday titled "Submersible Electronic Devices with Imaging Capabilities," Apple proposes a system that can automatically detect when a photograph is being taken underwater and have the device make a set of changes to the image to improve its overall appearance.

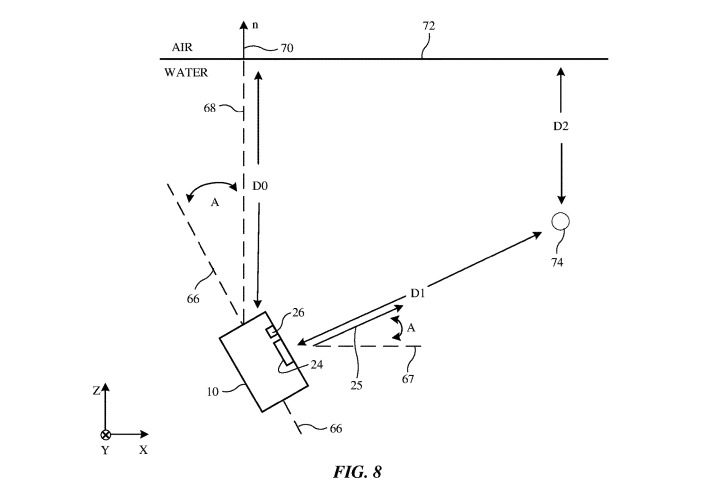

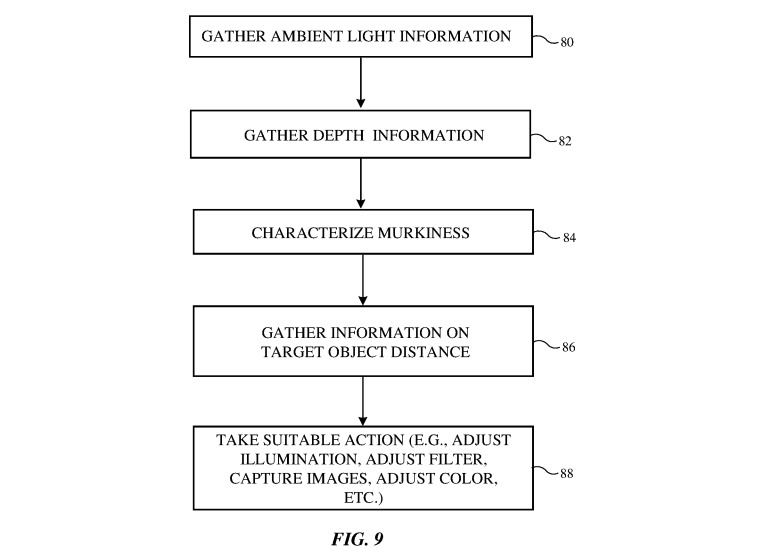

Once the device detects the user is underwater, an assortment of sensors is employed to determine what needs to be changed in an image. These can include a color ambient light sensor, which could measure ambient light spectra above and under water to determine how much light is being absorbed by the water, affecting the shot.

Other sensors, including depth, distance, pressure, and orientation sensors, can also be used to determine other factors, like how murky the water is, how far below the surface the device is, and how far away the subject is from the lens. A light detector could also measure backscattered light to gauge the water's murkiness.

Taking all of this data into account, the system could make adjustments to the image, including changing the color to compensate for light absorbed or reflected by the water's surface and for the color of the water itself in natural locations, and enhancing the subject in cases where murky water makes it harder to see.
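As a rough illustration of the color adjustment idea, the sketch below compares ambient light readings taken above and under water to estimate how much of each color channel the water has absorbed, then boosts the weaker channels to reduce a color cast. This is only a minimal sketch under stated assumptions: the patent application does not publish an algorithm, and the function names, the three-channel RGB readings, and the `max_gain` cap are all hypothetical.

```python
def channel_gains(above, below, max_gain=4.0):
    """Estimate per-channel (R, G, B) gains from two ambient light readings.

    `above` and `below` are hypothetical sensor readings taken above and
    under water. `max_gain` is an assumed cap that limits noise amplification.
    """
    # Fraction of each channel's light that survives the trip through the water.
    transmission = [b / a if a > 0 else 1.0 for a, b in zip(above, below)]
    # Use the best-preserved channel as the reference, so only the relative
    # color loss is corrected, not the overall drop in brightness.
    reference = max(transmission)
    gains = []
    for t in transmission:
        gain = reference / t if t > 0 else max_gain
        gains.append(min(gain, max_gain))
    return gains


def correct_pixel(pixel, gains):
    """Apply the gains to one 8-bit RGB pixel, clamping each channel to 255."""
    return tuple(min(255, round(c * g)) for c, g in zip(pixel, gains))


# Example: red is absorbed far more strongly than green or blue.
gains = channel_gains(above=(100, 100, 100), below=(20, 80, 60))
corrected = correct_pixel((30, 120, 90), gains)
```

A real system would presumably also weigh depth, subject distance, and murkiness, which this sketch ignores.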

A selection of measuring points the proposed system could take into account in order to optimally filter the image when underwater

Apple files numerous patent applications with the USPTO on a weekly basis, and though there is no guarantee the ideas posed will appear in future Apple products, the filings reveal areas of interest for the company.

In the case of this patent application, the array of sensors offered on an iPhone has the potential to perform many of the functions it describes. Depending on Apple's implementation, it is entirely plausible for an underwater-mitigating image filtering system to be offered as part of a software update for iPhone models with water resistance ratings.

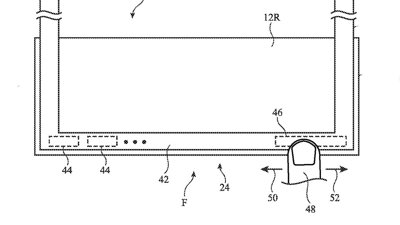

Elements such as distance detection, which today would rely on computational photography or the dual-lens camera system on some models, may be improved by the addition of a depth mapping-capable sensor on the rear. Current rumors suggest the 2019 iPhones could use a triple-camera system with a depth mapping-capable imaging sensor, one that could potentially be used for augmented reality applications and for improved Portrait photography.

Malcolm Owen



10 Comments

What about warranty claims for water damage? This should be the first problem to be solved, before enabling advanced photography under water.

Aquaman is going to absolutely LOVE this.