Self-driving vehicles in Apple's "Project Titan" could reduce the amount of processing required to recognize objects and understand the layout of the road ahead. They would do this with a "confidence" algorithm that lets a car's computer gather just enough sensor data to do the job.

Current efforts to produce self-driving vehicle systems typically rely on vast amounts of processing. Data collected from the wide array of sensors attached to a vehicle can provide a full view of the road. However, it is computationally expensive not only to make sense of that data, but also to identify objects of interest that warrant closer attention and to detect changes in the road layout.

One way to solve the problem is simply to put more processing power into the vehicle's self-driving system. This is an expensive route, however, because of the additional hardware required and the increased power consumption. Another way is for the driving system to be selective about where it spends its resources, deliberately using fewer processing cycles in some areas and saving the remainder for more important elements.

Apple's "Depth perception sensor data processing" patent, published by the US Patent and Trademark Office on Tuesday, describes how a system could perform selective processing on sensor data.

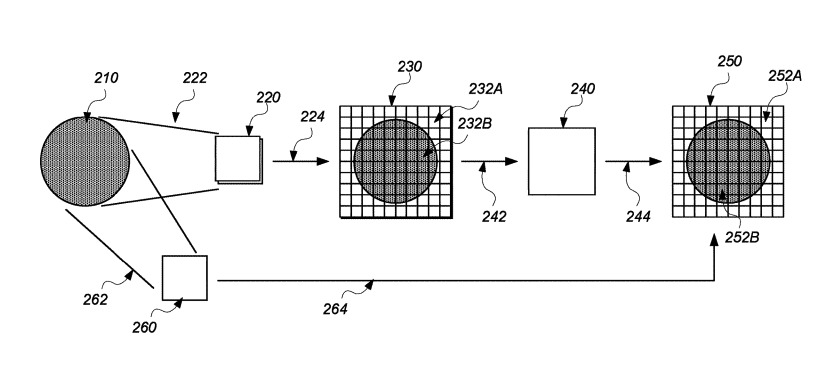

An illustration of a vehicle gaining passive sensor data, then comparing it with other sensor data for accuracy.

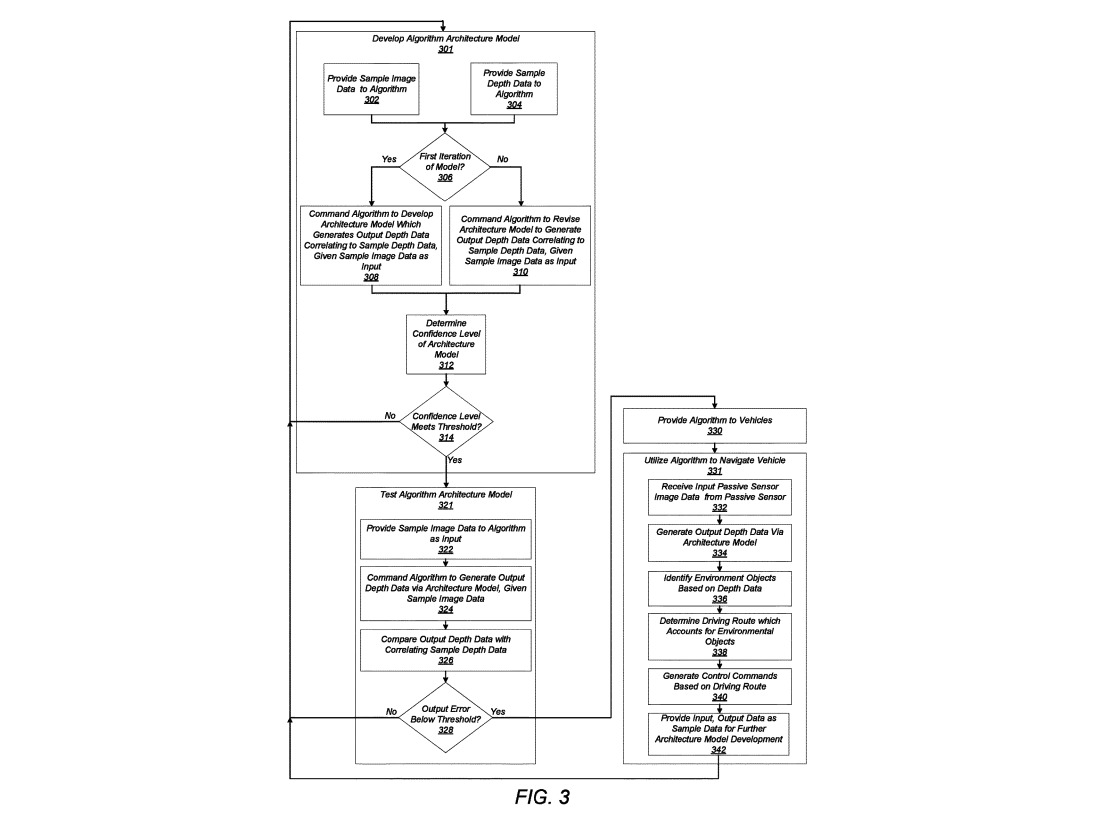

According to the filing, a sensor data-processing system receives data from sensors on the vehicle and generates a depth data representation of the environment. The system can use one or more passive sensor devices, such as cameras, to generate an initial model of the entire environment. This model consists of both image data and depth data, and it gives the system a basic understanding of what is in the immediate area.

One or more active sensor devices then generate a second set of data, which is compared with the model. These devices are more intensive hardware, such as LiDAR. The model is then adjusted iteratively using additional active sensor data. This continues until the "confidence" that the model is accurate is high enough for it to be passed to other parts of the self-driving system.

The patent bases the confidence level on the "magnitude of revisions to the algorithm per iteration of adjustment." In short, the system keeps adjusting the model with sensor data until the adjustments become negligible.
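The filing, as reported here, does not include code, so the following is only a minimal sketch of such a refinement loop under stated assumptions. The model is a flat list of per-cell depths (the hypothetical `DepthMap`). Each active-sensor pass returns `(cell index, depth)` pairs, and each pass nudges the sampled cells part of the way toward the active reading by a `blend` factor. The "magnitude of revisions" is taken to be the mean absolute change made in one pass. The function names, the blending rule, and the `tolerance` value are all illustrative assumptions, not details from Apple's patent.

```python
from typing import Callable, List, Tuple

# Hypothetical representation: one depth value (metres) per cell of the scene.
DepthMap = List[float]


def refine_depth_model(
    initial_model: DepthMap,
    read_active_sensor: Callable[[int], List[Tuple[int, float]]],
    blend: float = 0.5,        # assumed: fraction of each discrepancy applied per pass
    tolerance: float = 0.01,   # assumed: "negligible" mean revision, in metres
    max_iterations: int = 50,
) -> Tuple[DepthMap, int, float]:
    """Adjust a passive-sensor depth model with active-sensor samples.

    Stops once the mean absolute revision made in one pass falls below
    `tolerance` (further adjustments are negligible), when the active sensor
    returns no samples, or after `max_iterations` passes. Returns the refined
    model, the number of passes applied, and the size of the last revision.
    """
    model = list(initial_model)  # copy so the passive estimate is left untouched
    last_revision = float("inf")
    passes = 0
    for iteration in range(1, max_iterations + 1):
        samples = read_active_sensor(iteration)
        if not samples:
            break  # no new active data to refine with
        total_change = 0.0
        for index, measured_depth in samples:
            # Move the sampled cell part of the way toward the active reading.
            delta = blend * (measured_depth - model[index])
            model[index] += delta
            total_change += abs(delta)
        passes = iteration
        # Confidence proxy: how much this pass revised the model overall.
        last_revision = total_change / len(model)
        if last_revision < tolerance:
            break
    return model, passes, last_revision


# Usage with simulated data: a rough camera estimate refined by a fake LiDAR.
true_depth = [10.0, 12.0, 8.0, 15.0]
camera_estimate = [9.0, 13.0, 8.5, 14.0]


def fake_lidar(_iteration: int) -> List[Tuple[int, float]]:
    # Simulated active sensor that reports the exact depth of every cell.
    return list(enumerate(true_depth))


model, passes, last_revision = refine_depth_model(camera_estimate, fake_lidar)
print(passes, round(last_revision, 4))  # 7 0.0068
```

The simulated LiDAR reports exact depths and `blend` is 0.5, so each pass halves the remaining error. The loop therefore stops after seven passes, once the mean revision falls below 0.01. A cap such as `max_iterations` keeps the loop bounded if noisy readings never let the revisions settle.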

This approach could save resources and reduce costs for self-driving systems, and it could also improve performance. By building a model of the road more quickly, the system can begin recognizing objects and road elements earlier. That could make the system more responsive and potentially safer.

Apple suggests the system could also use a machine learning or deep learning algorithm to improve the model refinement and object recognition processes. The filing also suggests that the sensor systems of multiple vehicles could be combined. This would widen the area of the environment that can be monitored and extend each vehicle's effective "vision."

Apple files numerous patents and applications with the USPTO every week, and there is no guarantee that the concepts will make their way into commercial products or services. However, the filings do indicate areas of interest for Apple's research and development efforts.

"Project Titan" is the name given for Apple's self-driving and automotive efforts, with it largely centering around vehicle-based computer vision and transit. Initially the project was thought to revolve around an Apple-branded car, but over the years it has changed focus towards self-driving vehicle systems, something currently being tested by the company via a fleet of vehicles in California.

Another sensor patent covered the possibility of using LiDAR and proximity sensors to automatically capture points of interest for drivers, such as photographs of a location or a scan of an environment. This could be useful for travelers who want images of things at the side of the road. It could equally be used to capture the scene of an accident, which may be of interest to insurance companies or law enforcement.

Apple has also considered how sensors underneath a vehicle could monitor the ground's speed and angle relative to the vehicle's motion. These readings could alert a self-driving system that the vehicle may be skidding instead of moving in its intended direction.
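The article does not say how the skid decision would be made. One plausible reading, sketched below purely as an assumption, uses a hypothetical downward-facing sensor that reports the vehicle's velocity over the ground in the vehicle's own frame. The check compares that velocity's direction with the direction the vehicle intends to travel, and its magnitude with the speed the vehicle believes it is moving at (for example, from wheel odometry, which is also an assumption). The `GroundMotion` type, the function names, and every threshold are illustrative. None of them come from Apple's filing.

```python
import math
from dataclasses import dataclass


@dataclass
class GroundMotion:
    """Hypothetical downward-facing sensor reading, in the vehicle's frame."""
    forward_mps: float  # speed over the ground along the vehicle's long axis
    lateral_mps: float  # sideways speed over the ground (positive = to the left)


def angle_difference_deg(a: float, b: float) -> float:
    """Smallest signed difference a - b in degrees, wrapped to [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def may_be_skidding(
    reading: GroundMotion,
    intended_direction_deg: float,     # 0 = straight ahead, in the vehicle frame
    expected_speed_mps: float,         # assumed: e.g. from wheel odometry
    max_angle_deg: float = 5.0,        # assumed tolerance on travel direction
    max_speed_mismatch: float = 0.15,  # assumed tolerance on relative speed error
    min_speed_mps: float = 1.0,        # below this, direction estimates are too noisy
) -> bool:
    """Flag a possible skid when measured ground motion disagrees with intent."""
    ground_speed = math.hypot(reading.forward_mps, reading.lateral_mps)
    if ground_speed < min_speed_mps and expected_speed_mps < min_speed_mps:
        return False  # effectively stationary: nothing meaningful to compare

    # Direction the vehicle is actually travelling over the ground, relative to its nose.
    actual_direction_deg = math.degrees(
        math.atan2(reading.lateral_mps, reading.forward_mps)
    )
    angle_error = abs(angle_difference_deg(actual_direction_deg, intended_direction_deg))

    # Relative disagreement between measured and expected speed.
    speed_mismatch = abs(expected_speed_mps - ground_speed) / max(
        ground_speed, expected_speed_mps
    )
    return angle_error > max_angle_deg or speed_mismatch > max_speed_mismatch


# Usage with made-up readings:
print(may_be_skidding(GroundMotion(20.0, 0.2), 0.0, 20.0))  # False: tracking as intended
print(may_be_skidding(GroundMotion(20.0, 4.0), 0.0, 20.0))  # True: sliding sideways
print(may_be_skidding(GroundMotion(2.0, 0.0), 0.0, 10.0))   # True: wheels spinning
```

Checking both angle and speed covers two different failure modes. A sideways slide shows up as a direction error. Wheelspin or locked wheels show up as a speed mismatch.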

Other related patents include gesture controls to move vehicles, augmented reality to display an obscured road ahead on the windscreen, and inter-vehicle communication with other self-driving systems. Another covers the ability to summon and pay for a self-driving taxi using an iPhone or a similar mobile device.

Malcolm Owen

4 Comments

Impressive: Apple's upcoming business model as a patent holder. Others do the hard(ware) work to monetize Apple's R&D, and Apple reaps part of the benefits in the form of royalties.

The confidence algorithm seems similar to how a human constantly processes external data while driving, focusing on and trying to perceive the relevant data. There can be periods of information overload that overwhelm a human, and accidents occur.

For example, when preparing to leave the highway, a driver is looking for the exit sign and judging the curve of the exit ramp. At that moment a person often copes badly with another car cutting into their lane at the ramp, just before or slightly after the lines separate. This is especially true if the cut-in is sudden, comes without a turn signal, and leaves only a narrow gap between the two cars.

It is obviously a reckless and stupid move by the driver cutting in. But if an Apple car lacks plenty of sensors constantly analyzing the scene, such an unexpected cut-in could result in an accident. Even with a fully automated self-driving car, injuries could still happen (let alone legal challenges).

Does "obvious" (or "open door") ring a bell?

(By the way, LiDAR is a complete dead end.)