Progress in facial recognition is being driven by multiple photograph databases whose subjects were unaware their images were being used, a report claims. While many companies and researchers are collecting and sharing the images and the resulting data, Apple is not one of the sources.

The constant push to improve facial recognition has led researchers to collect vast databases of images, each consisting of faces drawn from a variety of sources. Driven by the need for more data for neural networks to learn from, teams are also reportedly sharing these databases with one another to create even bigger ones.

According to the New York Times, some of the images in the databases are scraped from publicly available sources, ranging from social media posts to dating sites like OkCupid. In some cases, cameras in public locations, including restaurants and college campuses, are allegedly used to acquire facial data.

The number of such databases is unknown, but image archives for facial recognition training have been compiled by organizations such as Microsoft and Stanford University. As for their size, two of the databases were said to contain more than 10 million and more than 2 million images respectively.

For many of the images, the people appearing in them are unlikely to know they are being used for facial recognition training or other purposes. Even those who do know how their images are used are unlikely to be aware that the images are being shared with other organizations.

Major tech companies like Facebook and Google are thought to have created the biggest data sets of human faces, but research papers indicate that these firms do not distribute the data to others. However, the report claims that smaller entities are more willing to share their data with others, including both private companies and governments.

The sharing of the data, along with revelations such as Immigration and Customs Enforcement officials using facial recognition on motorist photos to identify undocumented immigrants in the United States, has drawn criticism from people concerned about the privacy implications for those featured in the images.

In one example, a 2014 project by Stanford researchers called "Brainwash" used a camera to take more than 10,000 images over three days in a cafe of the same name. The researchers then shared the Brainwash data with others, including academics in China associated with the National University of Defense Technology and the surveillance technology firm Megvii.

In Microsoft's case, it created "MS Celeb," a database of over 10 million images of more than 100,000 people. The subjects were largely celebrities and public figures, but also included privacy and security activists. Again, the database was found to have been distributed internationally.

Both Stanford and Microsoft removed their databases from distribution following complaints from individuals about potential breaches of privacy.

"You come to see that these practices are intrusive, and you realize that these companies are not respectful of privacy," said Liz O'Sullivan, an activist who left the New York-based AI startup Clarifai over its methods. "The more ubiquitous facial recognition becomes, the more exposed we are to being part of the process."

Clarifai started using images from OkCupid because one of the site's founders had invested in the startup, and it also obtained photographs through a deal with a social media company. The firm created a service that could identify the age, sex, and race of faces. It was also reportedly working on a tool to collect images from the website "Insecam," which streams footage from insecure surveillance cameras in public and private spaces, but the effort was shut down following employee protests.

According to founder and CEO Matt Zeiler, the firm intended to sell its facial recognition systems to governments, the military, and law enforcement officials under the right circumstances, and didn't see any reason to restrict technology sales to specific countries.

While the databases used for these projects drew on publicly sourced images and images gathered by other firms, none of the images came from Apple.

The company does perform artificial intelligence and machine learning research, and it has been publishing some of that research since 2017, after years of actively preventing such sharing.

It is unclear what kind of data sets Apple uses for its research, but they won't have been sourced from its customers. Apple is a staunch supporter of privacy, and it designs its products and services to minimize the amount of user data provided to Apple.

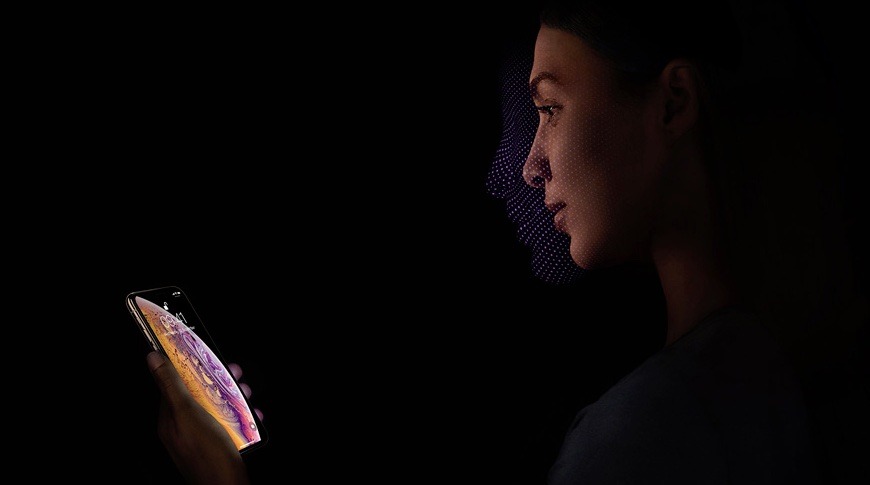

For example, Face ID does use facial recognition to unlock an iPhone, but the technology runs an algorithm on a depth map of the user's face and compares the result with that of a previously scanned face. At no point is an accurate map of the user's face stored on the iPhone, only the algorithmic results of a scan.

It would also be difficult for Apple to justify collecting facial data for its own research purposes, given how prominently it advertises its privacy and security credentials.

Malcolm Owen

11 Comments

http://www.face-rec.org/databases/

...and a related story

https://www.nbcnews.com/tech/internet/facial-recognition-s-dirty-little-secret-millions-online-photos-scraped-n981921

https://www.ibm.com/blogs/research/2019/01/diversity-in-faces/

It has become obvious that stalking and privacy laws need to be vastly and internationally expanded to stop this predatory behaviour, or at least to require active user opt-in and aggressive transparency about where and how face data is collected, how it is used, and to whom it is sold.

Proud of Apple for not being part of the problem.

What is not explained, as far as I can see, is what benefit the AI researchers obtain from the databases of faces. Yes, they can train the programs to recognize that there is, indeed, a face in the picture but it doesn’t appear that they have other data to match against it. They don’t know whose faces they are. What am I missing? What are the privacy problems that people are seeing?

Is any of this relevant in any number of situations? Will Apple data be some of the most verifiable (and valuable) on the planet because we 'trust' them? Is this the stuff of boiled frogs and Trojan horses? https://moneymaven.io/mishtalk/economics/micro-assassination-drones-fit-in-your-hand-IcoMKId1qUeR4hKnf11x9w/

Research in facial recognition is like machine vision research in "bin picking" many decades earlier: a challenge, with many useful applications. You need raw, real-world data rather than old yearbook pictures. This time, however, the potential for abuse should already have lawmakers looking at regulating databases, expiration, sharing, and so on. This has been developing since the early 2000s. Republicans dismantled the US Congressional Office of Technology Assessment around that time. Very shortsighted.