Steve Jobs launched iPhone as "a phone, a widescreen iPod and a breakthrough Internet device," but over the last decade, one of the primary features driving its adoption among new buyers and upgrades among existing users has been its camera. Here's a look at where Apple stands in mobile imaging technology, and at what's expected for iPhone 11 in September.

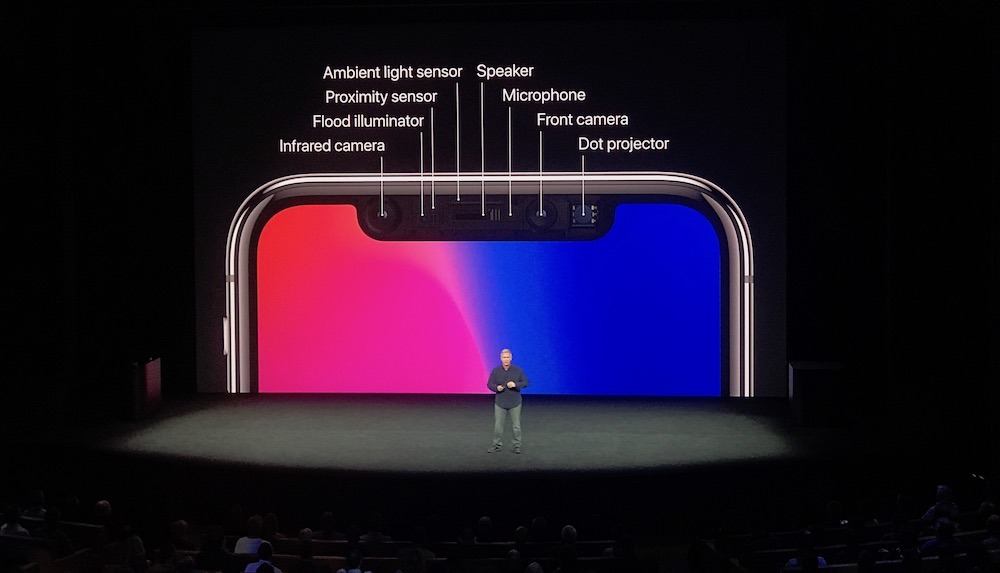

Apple's next iPhone— which appears set for unveiling within the next two weeks— is slated to deliver a new wide-angle lens and rear camera depth sensors capable of performing the same kind of advanced Augmented Reality and Visual Inertial Odometry that iPhone X introduced on its front-facing TrueDepth sensor.

Google and some of its Android partners previously experimented with such an arrangement in Project Tango, but gave up after it became obvious that Apple's front-facing approach was a much smarter and more useful application of the technology.

Now that Apple has fully exploited its front-facing TrueDepth to deliver Face ID, Portrait Lighting, Animoji, and Memoji in Messages and FaceTime, as well as advanced support for ARKit features to its third-party developers, the company is positioned to expand outward.

That could explain why Apple acquired Danish startup Spektral, which appears to be the source of the human occlusion technology added to ARKit 3 this summer. The technology enables users to take an image or video and replace the background around a subject in real time. It also enables users to realistically fit into, and in front of, the synthetic graphical elements making up an AR scene.

In addition to motion capture and multiple face tracking, Apple is also adding ARKit 3 support for mixing images from the front and back cameras to enable a user to, for example, interact with AR content layered on the rear camera view using their facial expressions. This also unlocks a series of new capabilities that pave the road toward functional AR-enhanced glasses. And of course, Apple also rolled out new RealityKit tools for macOS Catalina and iOS to help build AR worlds to explore.

These sophisticated uses of the camera, motion sensors, and custom silicon (including Apple's custom Graphics Processing Unit, Image Signal Processor and other specialized computational engines) go far beyond the notion of the iPhone camera as merely a device to take photographs and videos.

In addition to expanding cameras beyond photography, Apple is building the tools to create Augmented Reality worlds

The evolving iPhone camera

The idea that an iPhone could compete with a dedicated point-and-shoot camera or even handheld camcorders was commonly scoffed at several years ago. However, mobile sensors, lens assemblies, and computational imaging silicon have advanced dramatically over the past several years, largely driven by high-end sales of iPhones.

While dedicated cameras and video action cams can certainly claim various technical leads over the mobile camera and tiny lenses on an iPhone, the increasingly "good enough" nature of Apple's built-in cameras has disrupted the markets for secondary cameras for several years now.

Apple has also uniquely paired its camera advances with effortless iOS features for capturing, editing and sharing photos, videos, panoramas, and most recently Portraits. It has also exposed mobile photography to the network benefits of its vast iOS app platform, launching applications like Instagram as well as its own iCloud- and Machine Learning-driven Photos and the effortless AirDrop.

Camera competition

When iPhone first appeared, it couldn't even capture videos and its still camera was outmatched in various respects by existing camera phone makers. The technical leads other smartphones held didn't stop buyers from upgrading, however.

Since then, other phone makers— notably Nokia— have rolled out ultra-high resolution sensors and advanced optics. Many experimented with 3D image capture and other novelties. But these approaches failed to catch on because they introduced more complexity, greater expense and other tradeoffs that were more significant than the benefits they delivered.

Apple's ability to attract nearly all of the world's sales of premium-priced phones above $600— and the vast scale of its high-end iPhone business— has enabled the company to continue to drive the state of the art in mobile imaging, at a price users will pay.

While pundits keep talking about the iPhone as a "fading" product category, the reality is that nobody comes close to selling 200 million smartphones every year at an average price well above $650.

Huawei and Samsung both sell large numbers of phones, but these hit an average unit price well below $300. That means they lack Apple's vast economies of scale in rolling out premium-class technology at an effective cost. It is iPhone's high-end volume that enables Apple to make long term, profitable bets on the future of imaging spanning custom silicon to advanced research in areas from Machine Learning to Augmented Reality. All of this has resulted in features like Portraits, Face ID, and Animoji that have caught rivals flatfooted.

Samsung, Huawei, and even Google have invested tremendous amounts into their imaging sensor silicon and related technologies, and in some cases have offered unique features, such as the low-light capture modes on Google's Pixel and Huawei's Honor phones. However, they haven't cracked open a valuable enough set of camera features to justify selling similar volumes of high-end phones.

You might not get this impression from reading reviews applauding the "night mode" features on various Android phones, often hailed as a big and important feature that iPhones lack.

What's often left out is the fact that the processing needed to deliver these low light images requires that users hold their phone still for around six seconds, and then often ultimately results in a fake-looking picture anyway, as noted by Joshua Swingle, writing up a comparison of the Huawei P30 Pro and iPhone XS Max for Phone Arena.

Many Android fan sites instead just carefully take images of their junk drawer in dim lighting using a night mode Android and compare these against an iPhone. Google itself did this in its recent ad campaign for Pixel. But the reality is that most people are not trying to take photos of a still life in the dark.

iPhone realness

In contrast, Apple seems to have a better awareness of what people want to capture: flattering images of themselves and their friends and family. At nearly every one of its iPhone launch events, Apple has presented new technologies capable of capturing better pictures of people, rather than just still images in the dark.

That's included the warmer, more realistic True Tone flash on iPhone 7, as well as iPhone 7 Plus Portrait images that not only blur out the background (a trick Google later copied computationally for Pixel) but also use a zoom lens to capture a more flattering face shot.

Apple has also long optimized for realistic imagery in its image processing. Android licensees have paid much less attention to accuracy. Google got reviewers to fawn over its Pixel cameras, but they take pictures that are yellow and often artificial-looking, and Pixel displays have been atrocious. Samsung has similarly long pushed its OLED screen technology, but without much concern for color accuracy.

And nearly all Androids compensate for lower quality displays and limited camera processing by simply exaggerating image saturation, giving the impression they are delivering "rich color" when in reality they are just masking cheaper components and making it more difficult to edit, correct or enhance photos afterward.

There are some high-end Androids with camera and display specifications competitive with Apple's iPhones. However, as noted earlier, these models are not selling in large volumes. Samsung has historically sold about half of the world's Androids, yet only about a tenth of its phones were its higher-end Galaxy S models.

That product mix has been disastrous for the Samsung IM business unit, recently forcing it to trend downward to focus on the middle tier with cheaper Galaxy A series models. That kills its ability to showcase the best technology it has. And that's why it has often reserved its best components for Apple, knowing that the iPhone maker can not only design high-end devices, but reliably sell them in high volumes.

Similarly Sony, which makes Apple's camera sensors, also builds its smartphones with advanced cameras, but only sells relatively small volumes of these devices. Without Apple's business, neither Sony nor Samsung could justify driving the development of high-end mobile displays and cameras as fast as they currently are.

The luxury of high end

Even with a drop from peak iPhone shipments caused by delayed upgrades in China, Apple continues to drive massive scale in high-end phone sales. And rather than scaling downward in quality and price the way Samsung has, Apple continues to design luxury-class devices that sell to large audiences of mainstream buyers.

The media narrative that regular buyers wouldn't be attracted to $1,000 iPhones was pretty clearly an embarrassing assumption based on feelings, not facts. Over a two-year lifespan, iPhone X costs its buyers about $1.40 per day, making it one of the easiest-to-afford luxuries even for buyers on a budget.
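The per-day figure is simple division, and a minimal sketch makes the assumptions explicit. The `daily_cost` helper below is hypothetical, and it assumes the round $1,000 price spread evenly over 730 days, ignoring sales tax, resale value and carrier financing.

```python
def daily_cost(price_usd: float, years: float, days_per_year: int = 365) -> float:
    """Spread a purchase price evenly over the period the device is in use."""
    return price_usd / (years * days_per_year)


# Assumption: the round $1,000 figure cited in the article, kept for two years.
per_day = daily_cost(1000.0, 2)
print(f"${per_day:.2f} per day")
```

Under those assumptions the result is roughly $1.37 per day, which rounds to the "about $1.40" cited here.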

In reality, Apple's rapid pace of updates and high-end advancements has created a high-quality installed base of users, driven at the high end by affluent buyers who effectively subsidize the second-hand market with good quality phones.

And because iPhones stay in use and are supported by Apple for years, the installed base of iPhones is growing even if unit sales aren't. That's important to peripheral sales of other products, including Apple Watch and AirPods, as well as Services ranging from Apple Music to the upcoming Apple Arcade and TV+.

Nobody is being forced to buy high-end iPhones or subscribe to any services from Apple or its third-party partners. But the economic activity Apple's ecosystems are generating is creating a lush environment that benefits all iPhone users, even those content with using a model a couple of years old.

That's all good news for anyone interested in taking great photos and capturing high-quality video, or in exploring the future of computational photography and the worlds of AR and Virtual Reality— places where privacy will become increasingly important.

And within the next few weeks, we should see even more about where Apple plans to take digital imaging with its latest generation.

Daniel Eran Dilger

39 Comments

A few big problems with this argument: just because Apple's cameras are a big selling feature on the iPhone doesn't mean that A) those locked into the Apple ecosystem would leave for a better camera, B) having a great camera will automatically draw lots of sales (that's more a product of marketing, as evidenced by Samsung selling a ton with a great camera while Google, with arguably the best camera, isn't selling much), or C) Apple even still has a "mobile imaging lead" as the headline claims. iPhones take the best video, Pixels take the best photos, but none of that even matters once your social network of choice compresses the hell out of the file.

While I’d like to believe this is all true, I find some of it in error, or just a stretch.

Tango was abandoned for several reasons. One was that the technology was complex. Another was that it was expensive. The last was that it was, technologically, too early.

A problem with Android is that the average selling price of the phones, worldwide, is about $230. That doesn’t allow much room for expensive technologies. Overall, while Apple has just a small share of the market, which has been shrinking, it has an outsized portion of the high end market. For example, while Apple will sell about 200 million high end phones in the next 12 months, Samsung will sell about 30 million. Huawei will sell fewer, and other manufacturers, even fewer. Google sells about 3 million phones, as a high estimate.

Because of that, it’s difficult to develop a high end technology that will need to be applied to a large number of devices for third party developers to take part. Even Apple can’t force that. An example is 3D Touch, which Apple itself never applied terribly strategically, and which third party developers, for the most part, ignored.

So Google gave it up.

As far as Apple’s efforts in photography go, there are other cameras that outdo Apple’s work in individual areas, but not overall. Computer sites, or channels run by people who are not photographically savvy, note the few areas in which Google and even Samsung and Huawei outdo Apple, but fail to note that overall, Apple’s cameras are better in reliability and consistency. But go to sites and channels run by professional photographers, and it’s rare to find one who uses something other than an iPhone, or who has anything good, overall, to say about the alternatives. Take a thousand pictures with phones, and iPhones will return a much higher percentage of good shots than any other.

And yes, I’m familiar with night modes. And yes, I know that Apple doesn’t have one yet. And while they excel at getting a photo out of trash, the results really aren’t good photos. Low resolution and other problems abound. But getting the photo has excited those who use the feature, and so the other problems have been ignored. Is that why Apple hasn’t come out with a competitive mode yet? Very likely. Generally, Apple’s cameras have better normal low light quality, but that doesn’t come across in a low resolution comparison on the web. Maybe this year, we’ll finally get an improved version from Apple.

But my concern is that we need substantially better sensors to begin with. It’s not impossible. But current sensors are already getting most photons to image, so conventional sensors can’t just leap to a much better level, particularly in the tiny sizes we already have. We need something new. There is the Foveon sensor, introduced shortly after digital photography got going. I was given one of the early model cameras. Low resolution when compared to a Bayer sensor, but sharper per pixel. I had high hopes for that, but it fizzled. Sigma, the lens maker, bought the technology some years ago, and has made advances in the resolution, and put them into some rather idiosyncratic cameras with some pretty awful software. They’ve gone nowhere. While the technology allows for high per pixel sharpness, color quality is compromised. So that’s not the answer. I have some ideas, but who knows what Apple, Canon and Sony are working on?

I’m just hoping that Apple isn’t going to come out with more of the same this year. The third camera is nice, but none I've seen so far have advanced the state of the art in any way.