Users who have trouble holding an iPhone steady could get help from the smartphone itself when viewing images and other content, with the device compensating for hand movements to keep what is being viewed steady and in one place regardless of the motion.

While the iPhone and iPad can be used to view videos, to read text, or to look at photographs or other images, some users have trouble doing so without the aid of a stand. The elderly as well as injured or disabled people may not have the capability to hold the device still enough to enjoy the content, with the smartphone or tablet moving due to hand shakes or other physical ailments.

This can be solved with the use of the aforementioned stand, but that doesn't help in settings where putting the device down is impractical. For example, this could be in the middle of a shop, with an iPhone used to display a shopping list.

There is also the everyday usage to consider for most users, such as looking at a device screen while walking, or reading from an iPad in a car on a bumpy road.

In the patent application published by the US Patent and Trademark Office on Thursday titled "Dynamic Image Stabilization Using Motion Sensors," Apple aims to solve the problem by measuring the movements and using that data to keep the image as still as possible for the user.

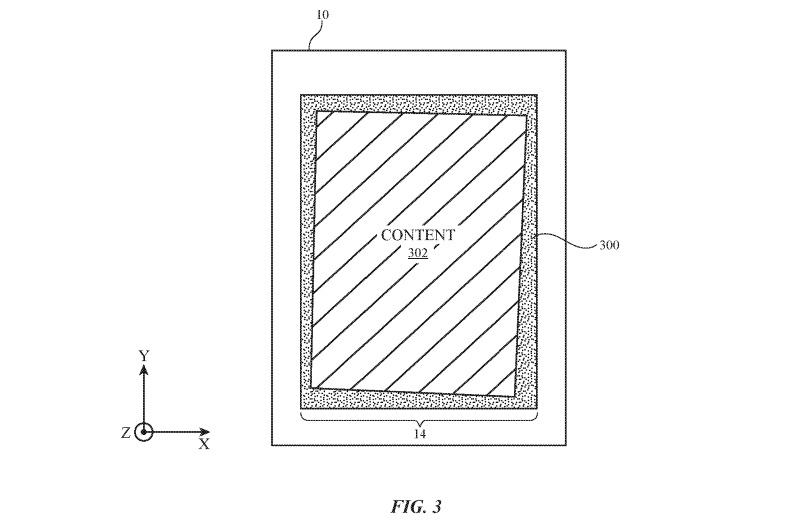

In the proposal, the mobile device uses dynamic image stabilization circuitry to compensate for sudden movements, to keep the content aligned with the user's gaze. Motion sensors are used to detect the level of displacement of the device during such shakes or jolts.

The system then uses these movements to work out how far the device has moved and in which direction, and shifts the on-screen content with the intention of keeping it roughly where the user was looking before the movement took place. Subsequent movements would force similar calculations, and similar shifts.
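As a rough illustration of that step, the following Python sketch shifts the content by the opposite of the device's measured displacement and accumulates the shift across successive movements. It is not taken from the patent application: the `Offset` type, the `compensate` function, and the assumption that displacement has already been converted into on-screen points are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offset:
    """Hypothetical on-screen content offset, in points, relative to center."""
    x: float = 0.0
    y: float = 0.0


def compensate(offset: Offset, device_dx: float, device_dy: float) -> Offset:
    """Shift the content opposite to the device's displacement.

    Assumes the motion-sensor reading has already been converted to
    on-screen points; that conversion is outside this sketch.
    """
    return Offset(offset.x - device_dx, offset.y - device_dy)


# Two successive jolts: each one triggers the same calculation and shift.
offset = Offset()
offset = compensate(offset, 4.0, -2.0)
offset = compensate(offset, 1.0, 3.0)
print(offset)  # Offset(x=-5.0, y=-1.0)
```

If the device moves right and down, the content moves left and up by the same amount, so it stays roughly where the user was looking.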

A short time after the unwanted movements occur, the system can then reset, by gradually drifting the content back to the center of the display, ready for another set of movements if required.
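One way such a gradual reset might work is an exponential decay of the content offset toward zero. The sketch below assumes that approach. The application as described here does not say how the drift is computed, and the `drift_toward_center` function and its `time_constant` parameter are hypothetical.

```python
import math


def drift_toward_center(offset, dt, time_constant=0.5):
    """Decay an (x, y) content offset toward the display center.

    dt is the time since the last update, in seconds. time_constant is an
    assumed tuning value: the larger it is, the slower the content drifts.
    """
    decay = math.exp(-dt / time_constant)
    return (offset[0] * decay, offset[1] * decay)


# Simulate one second of drift at 60 updates per second.
offset = (-5.0, -1.0)
for _ in range(60):
    offset = drift_toward_center(offset, dt=1 / 60)

print(offset)  # a fraction of the starting offset, close to the center
```

A slow decay avoids a visible snap back to center, which would itself be a distracting movement.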

Aside from the dynamic movement detection, Apple's suggestion also involves the use of a "usage scenario detection circuit" to determine what kind of predetermined scenario the device is being used within. This system would inform the motion compensation of the kinds of corrections it should apply to the content on behalf of the user.

These other adjustments can involve creating a margin around image content to minimize the chance of clipping during adjustments, shifting content on the plane of the display, and magnifying or minifying the content. In one situation, the ability to track the user's head could help provide more data on what the system should do to compensate, even to the level where it could make changes based on the user's sudden head movements rather than those of the device.
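To show how a margin and minification could work together, the Python sketch below shrinks the content slightly to free up a border, then limits any shift to that border so the content never runs off the edge. The function names, the display size, and the 0.9 scale factor are illustrative assumptions, not details from the filing.

```python
def margin_for_scale(display_size, scale):
    """Return the (x, y) margin freed up by drawing content at `scale`.

    display_size is (width, height) in points; scale is below 1.0 when the
    content is minified. Both values here are assumptions for illustration.
    """
    width, height = display_size
    return ((width - width * scale) / 2, (height - height * scale) / 2)


def clamp_offset(offset, margin):
    """Limit a content shift so the content stays inside the margin."""
    mx, my = margin
    return (
        max(-mx, min(mx, offset[0])),
        max(-my, min(my, offset[1])),
    )


margin = margin_for_scale((390.0, 844.0), scale=0.9)
print(clamp_offset((-30.0, 10.0), margin))  # x is limited to the margin, y is unchanged
```

A wider margin leaves more room to absorb larger jolts, at the cost of showing the content smaller.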

While the system could be entirely self-contained, it is feasible that other devices could help provide more data for it to use. The application mentions the use of "a set of earbuds, a wrist watch, a pair of glasses, a head-mounted device, etc." This could imply that motion data from an Apple Watch and a version of AirPods with fitness tracking functionality could be used, as well as possible smart glasses or the rumored augmented reality headset.

Apple files numerous patent applications on a weekly basis, but while the filings are an indication of areas of interest for Apple's research efforts, they aren't a guarantee the concept will make an appearance in a future product or service.

The idea is feasibly something that can be accomplished by current-generation technology already included within iPhones and iPads, as they all include motion sensors and processors capable of determining changes in physical position in 3D space. The use of the TrueDepth camera on the iPhone also lends itself to face tracking, to further assist with content viewing corrections.

Given Apple's habit of making its hardware and software as accessible as possible, it is entirely plausible for the company to add such a feature in a future iOS update, if it so chooses.

Malcolm Owen

5 Comments

Call me very skeptical of this one. If done perfectly, it could be amazing, but I doubt it can be done perfectly. I expect it would be more likely to have unintended consequences, such as inducing a motion-sickness-like reaction. Our brain is wired to infer a lot of things based on visual input, and having the visual content of the device jiggle around independently of the device itself would probably be jarring. When you are reading a book in a car, you expect the book to be bouncing around a little. How weird would it be for the words on the page to be staying still while everything else is moving slightly? I expect you'd perceive that as the words dancing around on the page.

Kudos to Apple for trying things, but I will be amazed if this ever sees the light of day in a shipping product.

While it could be applied to a phone, this seems more likely to be useful on the rumored AR headset:

https://forums.appleinsider.com/discussion/comment/3182978

Also consider Apple has included variable rate rasterization in Metal on iOS 13. That's one of the parts needed for foveated rendering.

I always want to read on my iPad while in the car, but the movement makes it difficult, so this would be amazing if it can do that. I cannot imagine how it can be precise enough to make it "stable."

This isn't a new patent. I remember reading about this a few years ago. Is this article about the patent finally being granted?