An iPhone or iPad may one day help protect a user's privacy by tracking their gaze and displaying sensitive information only in the area of the screen they are looking at, while onlookers see nothing but an indecipherable display.

One of the problems with using mobile devices like an iPhone or iPad is the need to keep the contents of a display private. Sensitive information such as financial data or medical details may need to be looked at by the user, but in a public location, it is hard to prevent others from glimpsing any of the data displayed on the screen.

While it is possible to shield the screen with a physical barrier or to block another person's view with a hand, doing so tends to attract further unwanted attention. Screen filters that block light at extreme viewing angles are another option, though they can degrade the overall visual quality for the main user.

In a patent application published by the U.S. Patent and Trademark Office on Thursday titled "Gaze-dependent display encryption," Apple proposes a way to manipulate the contents of a display so that only the active user knows exactly what is shown, while deceiving others with false data.

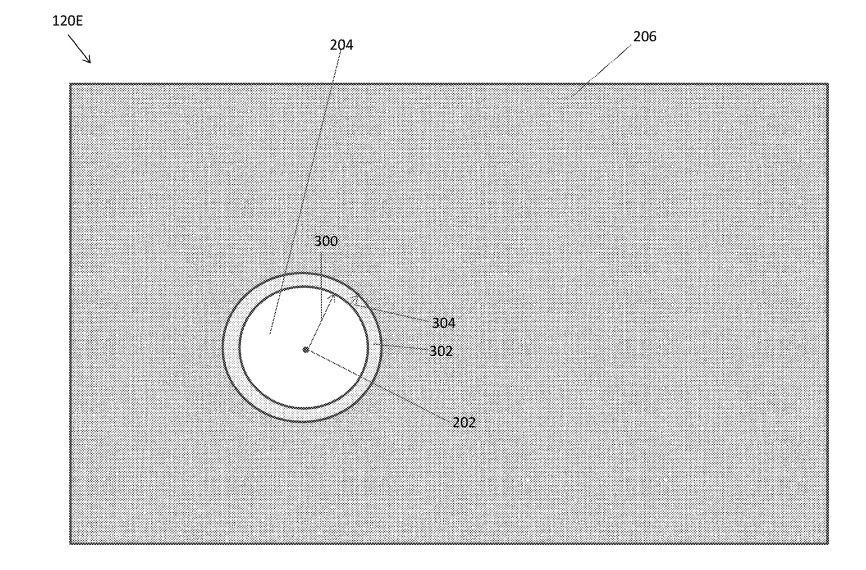

The system centers on detecting where the user is looking on the device's screen. Knowing this, the device can determine exactly where on the display it needs to show a real, unobscured portion of the interface or content being viewed.

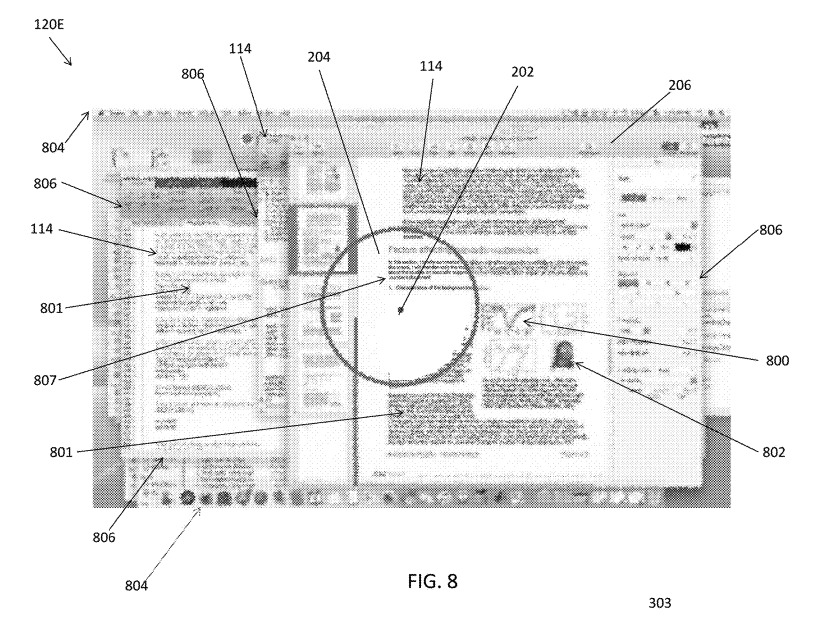

An illustration in which the area of the display the user is looking at is clear, while the rest is obscured

Knowing where the user is looking also reveals where they are not paying attention, which the system uses to its advantage. In the rest of the display, the system still shows an image, but one filled with useless, unintelligible information that observers cannot make sense of.

When the user shifts their gaze, the screen updates to reveal the newly viewed region and covers the previously seen data with bogus content. As a result, the user always sees their intended content, while outsiders only ever see fragments of the real data, making it difficult to read or understand.

Furthermore, Apple suggests the unreadable portions of the display may contain content that visually resembles the rest of the display, even though the information within is bogus. Making the decoy look like the real information further disguises where the user is currently looking, and reduces the chance of an onlooker realizing that some form of visual encryption is at play.
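The filing does not spell out an implementation, so the following Python sketch is only a rough illustration of the idea. It assumes the screen can be modeled as a grid of text characters and that some gaze tracker supplies a row and column; characters within a circular radius of that point stay readable, while everything else is swapped for random characters of the same kind, so the decoy keeps the shape of the real text. The function names, the circular reveal region and the character-grid model are all hypothetical assumptions, not Apple's method.

```python
import random
import string


def decoy_char(ch: str, rng: random.Random) -> str:
    """Return a bogus character of the same class as `ch` (hypothetical helper).

    Digits stay digits and capitals stay capitals, so the fake text keeps the
    visual shape of the real text.
    """
    if ch.isdigit():
        return rng.choice(string.digits)
    if ch.isupper():
        return rng.choice(string.ascii_uppercase)
    if ch.islower():
        return rng.choice(string.ascii_lowercase)
    # Keep spaces and punctuation so word gaps and layout look genuine.
    return ch


def gaze_masked_view(lines, gaze_row, gaze_col, radius, seed=None):
    """Reveal only characters within `radius` cells of the gaze point.

    Assumption: the display is a grid of characters and the gaze point is
    given in (row, column) cells. Everything outside the circle is replaced
    with same-class decoy characters. `seed` exists only for reproducible demos.
    """
    rng = random.Random(seed)
    out = []
    for r, line in enumerate(lines):
        chars = []
        for c, ch in enumerate(line):
            inside = (r - gaze_row) ** 2 + (c - gaze_col) ** 2 <= radius ** 2
            chars.append(ch if inside else decoy_char(ch, rng))
        out.append("".join(chars))
    return out


if __name__ == "__main__":
    screen = ["Balance: $4,210.87", "Card ending 1234"]
    # User looks at the balance figure...
    for row in gaze_masked_view(screen, gaze_row=0, gaze_col=12, radius=4):
        print(row)
    print()
    # ...then at the card number: the new region is revealed, the old one scrambled.
    for row in gaze_masked_view(screen, gaze_row=1, gaze_col=13, radius=4):
        print(row)
```

Calling the function again with a new gaze point, as happens when the user's eyes move, reveals the new region and re-scrambles the one just left. Preserving character classes is one simple way to make the decoy "visually match" the real content; a real system would more likely work on rendered pixels or UI elements rather than a text grid.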

The filing lists its inventors as Mehmet Agaoglu, Cheng Chen, Harsha Shirahatti, Zhibing Ge, Shih-Chyuan Fan Jiang, Nischay Goel, Jiaying Wu, and William Sprague.

Apple files numerous patent applications every week, but while these filings indicate areas of interest for Apple's research and development efforts, they do not guarantee the ideas will appear in a future product or service.

Given Apple's track record on encryption and privacy in general, it makes sense for something in this field to come out of its research teams. Unlike many other patent filings, this one seems quite plausible for Apple to bring to its current devices in some form.

In terms of feasibility, current iPhones and iPads have the processing capability to alter text or blur parts of the display to obscure content at specific points. Arguably, the obfuscation is the easiest element of the entire concept.

The bigger challenge would be gaze detection, which Apple already performs to some extent with Face ID's Attention Awareness feature. ARKit also includes elements for eye tracking, which could potentially lead to gaze tracking in future frameworks.

Aside from the TrueDepth camera array, efforts have also been made over the years to perform gaze detection with ordinary cameras, which may also assist in this endeavor. Getting it to work with a standard front-facing camera could bring such a feature to iPad models that lack the more advanced TrueDepth system.

There are also other patent filings relating to gaze detection, including one from 2013 using a front-facing camera. Other examples include an eye tracking system for a head-mounted AR display using holographic elements, and ways to make VR and AR gaze detection faster and more accurate.

Malcolm Owen


12 Comments

That’s cool. I hope it pans out to be more than just an idea. If Apple implements it it’ll likely be surprisingly good.

This is as futuristic as the iPhone itself.

"Furthermore, Apple suggests the unreadable portions of the display may contain content that visually matches the rest of the display, but the information within may be bogus. By making it visually similar to the real information, this helps further disguise where the user is currently looking on the screen, as well as minimizing the chance of an onlooker realizing there is some sort of visual encryption at play."

Fu**ing SH**!!

Turning off the display when the user isn't looking would have been enough but this is next level stuff.

Oh hey! More discussion of foveated rendering!

https://forums.appleinsider.com/discussion/comment/3182978#Comment_3182978

While this would be cool on iPhones, the same techniques are enormously important for mass-market VR.

The patent graphics show it working on a Mac. What if people are working side by side?

It's a great idea and by providing bogus information that actually looks similar to the real content, should be capable of thwarting casual peeks.

I wonder how it will handle porn, though. :-)