Rather than attempting to manipulate AR objects through gloves or a game controller, a peripheral like a trackpad could allow users to select and manipulate what they see in front of them.

Headsets like Apple Glasses, which let users see augmented reality, create a need to interact with and manipulate virtual objects. This often requires the user to also wear some type of glove, but Apple is looking at creating a physical surface that can detect touch. It is considering a large trackpad for controlling computer-generated reality (CGR) environments through ARKit.

"CGR environments are environments where some objects displayed for a user's viewing are generated by a computer," says Apple in US Patent Application No. 20200104025, called "Remote Touch Detection Enabled by Peripheral Device."

"A user can interact with these virtual objects by activating hardware buttons or touching touch-enabled hardware," it continues. "However, such techniques for interacting with virtual objects can be cumbersome and non-intuitive for a user."

Apple's proposed solutions include a variety of "techniques for remote touch detection using a system of multiple devices," but the principal one is a "peripheral device that is placed on a physical surface such as the top of a table."

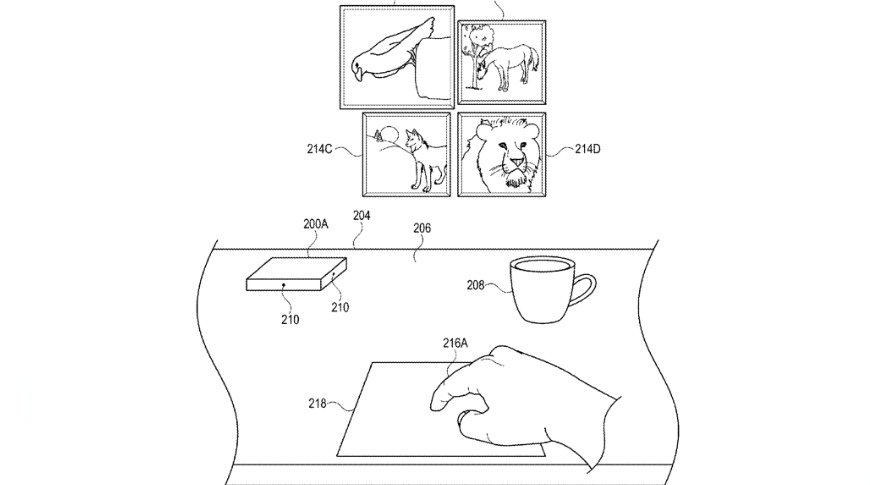

While a headset shows the user a composite image of the real world around them, including this trackpad-style device, it can also display virtual objects. Just as with the trackpad and screen on a MacBook Pro, the user can look at a virtual object while their fingers perform touch gestures on the trackpad.

A camera in the headset can locate the trackpad and, combined with input from that device's touch-sensitive surface, calculate which virtual objects the user is attempting to manipulate.

Drawings in the patent show a user selecting from a choice of images by tapping at a corresponding part of the pad. They show the user rotating objects, or resizing them, using familiar touch gestures and movements.

Most of the patent describes methods of registering touches, and of displaying the composite AR and real image. Part of the latter suggests using holograms projected onto surfaces.

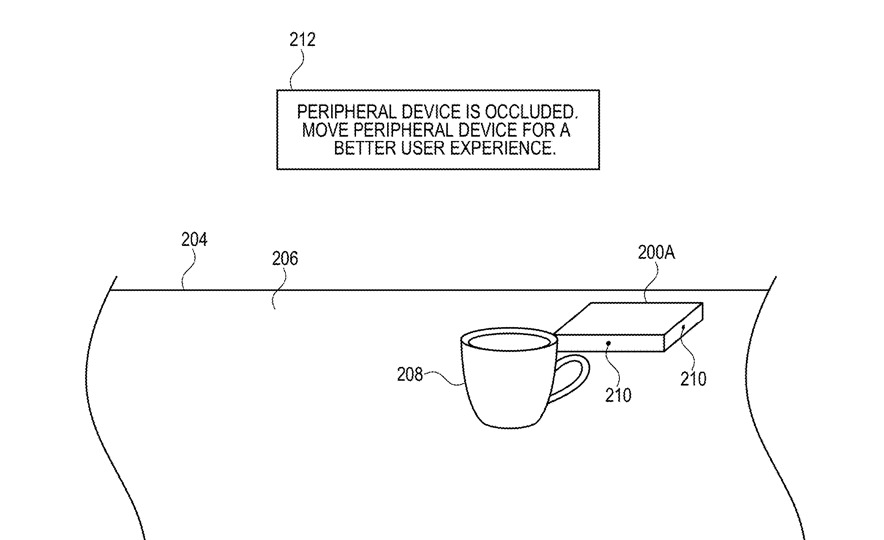

Key to the patent application, though, is how the use of the trackpad-like device can change depending on the AR images being presented and on where the device is in relation to the user. Because the trackpad and the headset work together, the user can be told to reposition the peripheral touch device.

"For optimal operation, peripheral device can request additional space near or around itself such that camera sensor(s) are free from occlusions (e.g., obstructions)," it says. An error message can be displayed in the headset and cleared by the user moving the trackpad into a better position.

The top message is an error displayed on the user's AR headset, and is cleared by the user moving the trackpad-like device to a better position

That chiefly means being in view of the headset camera and not obscured by other items, but it can also be as specific as requiring that the device be turned a certain way.

"In some embodiments, the set of operating conditions includes a requirement that peripheral device is in a suitable orientation (e.g., a surface of the peripheral device is approximately parallel with respect to the ground, is horizontal, is vertical, so forth)," it concludes.

The patent application is credited to four inventors: Samuel L. Iglesias, Devin W. Chalmers, Rhit Sethi, and Lejing Wang. Wang has recently been credited on a similar patent regarding accurate handling of real and virtual objects, while Chalmers is listed on one about digital assistants guiding users through AR and VR.

William Gallagher