Apple's iOS may get enhanced accessibility features that allow a device to audibly describe a scene captured by the camera, or present tactile feedback to enable people with low or no vision to take good photos.

While Apple's Camera app was revamped for the iPhone 11, iPhone 11 Pro, and iPhone 11 Pro Max, it remains chiefly of use to people with fair to good eyesight. New plans from Apple show that the app, and future iPhones, may be adapted so that vision-impaired users can make much more use of the camera.

"Devices, Methods, and Graphical User Interfaces for Assisted Photo-Taking," US Patent No ">20200106955, broadly covers two related approaches to this.

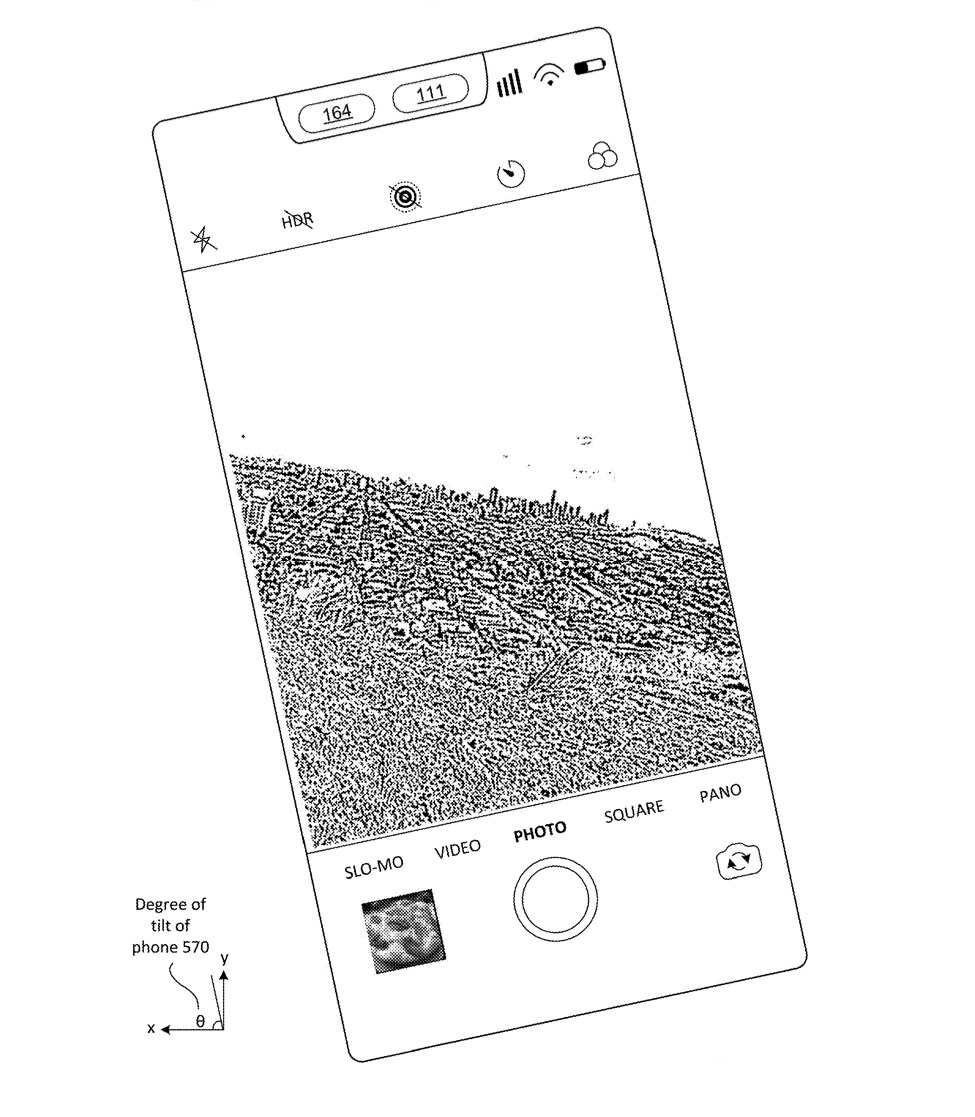

In its more than 30,000 words, the new Apple patent application chiefly details methods by which an iPhone — or another device with a camera — can speak to the user. That can be when they're reviewing a photo, or more usefully it can be as they line up the camera to take a new one. It can detect when the camera is not level, or when it has been moved, and provide "tactile and/or audible feedback in accordance with a determination that the subject matter of the scene has changed."

It's not just the camera that can move and spoil a shot, though. The subjects being photographed have an impact. "For example, [the] device provides tactile and/or audible feedback in accordance with a determination that a person (or other object or feature) has entered (or exited) the live preview," says the patent.

That feedback can be the iPhone vibrating in specific ways, and it can be the device speaking aloud to tell the user what is happening. The features are meant to enable photography for people with a wide range of vision impairments, including blindness.

"[The iPhone may provide] an audible description of the scene," says the patent. "[The] audible description includes information that corresponds to the plurality of objects as a whole (e.g., 'Two people in the lower right corner of the screen,' or 'Two faces near the camera')."

It can be much more specific than that, though. In the same way that faces can be detected and tagged with names in the Photos app, or on services such as Facebook, a Camera app with these new features could recognize the people in front of it. It could tie in to the user's other information on the phone, such as Contact details, or recognized faces from Photos.

"[The device could access] a multimedia collection that includes one or more photographs and/or videos that have been tagged with people," continues the patent. "[Then] the audible description of the scene identifies the individuals in the scene by name (e.g., states the name of individual as tagged in the multimedia collection, e.g., 'Samantha and Alex are close to the camera in the bottom right of the screen').

There is a problem, however, when a potential photo contains very many people, or is in any other way a particularly busy image. This planned future Camera app can assign priorities, such as concluding that the three people closest to the camera are the intended subjects even in a crowd of a dozen.

"[The device may allow] the user to interact only with objects that are above a prominence threshold," continues the patent, "so as not to overwhelm the user by cluttering the scene with detected objects."

However, calculating that priority, or in any way simplifying the descriptions provided to the user, is one thing. Conveying that information is another, and this is where the range of both audio and tactile options becomes important.

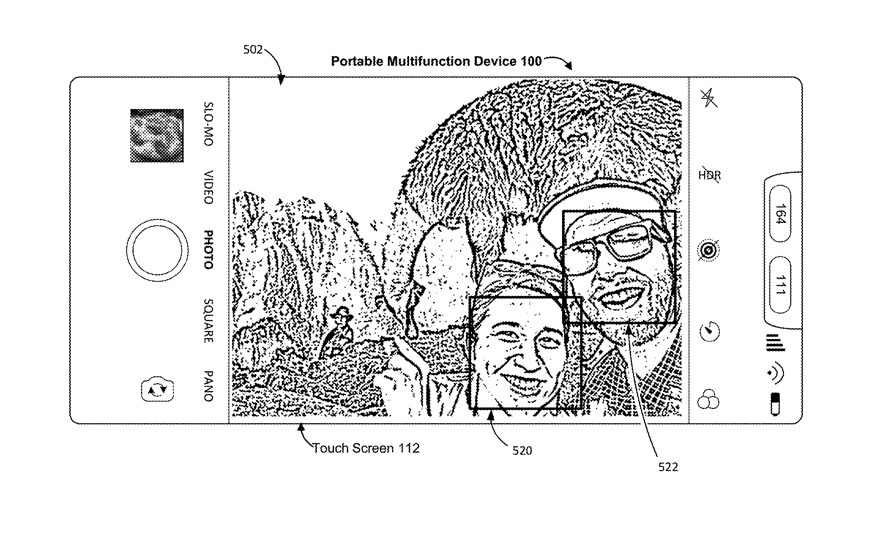

Initially the software would determine which parts of the image may be important, or "above a prominence threshold," and mark them with what Apple refers to as a "bounding box." But then the device has to be able to indicate to the user where these boxes are on the screen.

If the user has low or poor vision, they may still be sufficiently able to determine the position of the bounding box and touch it. When they tap in the box, a second, fuller, audio description could play.

"[A] second audible description of the respective object [could be triggered]," says the patent, "(e.g., 'A smiling, bearded man near the camera on the right edge of the image,' or 'A bearded man wearing sunglasses and a hat in the lower right corner of the screen,' or 'Alex is smiling in the lower right part of the screen,' etc.)."

Where the first description might name the person, if possible, this second one would describe "one or more characteristics (e.g., gender, facial expression, facial features, presence/absence of glasses, hats, or other accessories, etc.) specific to the respective object or individual."

When the user cannot see the bounding box, the iPhone or other device could use tactile feedback more. It might react to the user swiping a finger across the screen, for example responding when their finger crosses into the bounding box.
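The idea of responding when a finger crosses into a box amounts to hit-testing each touch position and noticing the moment the result changes. A minimal Python sketch follows, under stated assumptions: the `BoundingBox` type, the normalized coordinates, and the "enter" and "exit" event names are invented for this example, and the actual vibration is left to whatever haptic system the device provides.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BoundingBox:
    """Hypothetical box in normalized screen coordinates (0.0 to 1.0)."""
    label: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def feedback_events(box: BoundingBox,
                    touch_path: List[Tuple[float, float]]) -> List[Tuple[str, str]]:
    """Return an event each time the finger crosses the box edge, in either direction."""
    events = []
    inside = False
    for x, y in touch_path:
        now_inside = box.contains(x, y)
        if now_inside and not inside:
            events.append(("enter", box.label))  # a device might vibrate here
        elif inside and not now_inside:
            events.append(("exit", box.label))
        inside = now_inside
    return events


alex = BoundingBox("Alex", left=0.6, top=0.6, right=0.9, bottom=0.9)
swipe = [(0.1, 0.7), (0.5, 0.7), (0.7, 0.7), (0.8, 0.7), (0.95, 0.7)]
print(feedback_events(alex, swipe))
```

Reporting only the crossings, rather than every sample inside the box, keeps the feedback to a single pulse per entry.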

The patent suggests that when the camera is in what it calls "accessibility mode," regular gestures such as tapping to focus could be replaced by more specific ones for the vision-impaired.

"[Such as allowing] user to select objects by moving (e.g., tracing) his or her finger over a live preview when an accessibility mode is active more efficiently customizes the user interface for low-vision and blind users," it says.

The proposed new Camera app could also use tactile feedback to tell a user when a shot is or isn't level. There's no mention yet of feedback to tell them that landscape is better.

The patent is credited to four inventors: Christopher B. Fleizach, Darren C. Minifie, Eryn R. Wells, and Nandini Kannamangalam Sundara Raman. Fleizach is also listed on related patents such as "Voice control to diagnose inadvertent activation of accessibility features," while Minifie is credited on a patent regarding "Interface scanning for disabled users."

William Gallagher

5 Comments

Is it fair to ask where is my knowledge navigator if they can do this? Siri won’t even assist with a text conversation well or assist with email responses to mom or co-workers.

Apple has already made strides with this. My iPhone tells me when the camera is level, and it also tells me if a face is centered or not. No, it’s not as advanced as what is described here, but it’s already a start.

Hopefully this also means better autogenerated photo descriptions. Right now, I find them more annoying than useful and wish I could turn them off. Nothing will be as good as a well tagged photo described by the person who posted it.

Microsoft has an iOS app I played around with that already did this pretty well. You’d take a picture and it would describe the content: “dog laying on floor”, “man smiling”.