Apple's new iPhone SE is the company's first, and thus far only, iPhone to rely solely on machine learning for Portrait Mode depth estimation.

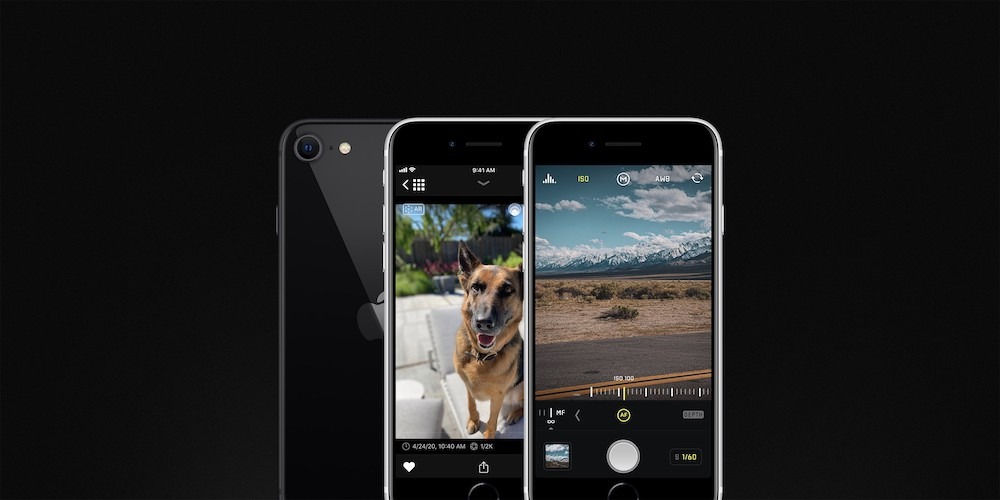

The iPhone SE, released in April, appears to be largely a copy of the iPhone 8, right down to the single-lens monocular camera. But under the hood, there's much more going on for depth estimation than in any iPhone before it.

According to a blog post from the makers of camera app Halide, the iPhone SE is the first in Apple's lineup to use "Single Image Monocular Depth Estimation." That means it's the first iPhone that can create a portrait blur effect using just a single 2D image.

In past iPhones, Portrait Mode has required at least two cameras. That's because the best source of depth information has long been comparing two images taken from two slightly different positions. Once the system compares those images, it can separate the subject of a photo from the background, allowing for the blurred-background, or "bokeh," effect.
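To make the two-camera idea concrete, here is a minimal sketch of the textbook stereo relation, in which a point's depth is inversely proportional to how far it shifts between the two views. This illustrates the general principle only, not Apple's pipeline; the function names, focal length, camera spacing, and subject cutoff are all hypothetical values chosen for the example.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Pinhole stereo relation: depth = focal_length * baseline / disparity."""
    if disparity_px <= 0:
        # No measurable shift between the two views: treat as infinitely far.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def split_subject_background(disparities_px, focal_length_px, baseline_m,
                             max_subject_depth_m):
    """Label each measured point as subject or background by its depth."""
    labels = []
    for d in disparities_px:
        depth = depth_from_disparity(d, focal_length_px, baseline_m)
        labels.append("subject" if depth <= max_subject_depth_m else "background")
    return labels


# Hypothetical numbers: 1000 px focal length, lenses 1 cm apart,
# anything within 1 m counts as the subject.
labels = split_subject_background([20, 2, 0], 1000, 0.01, 1.0)
```

Nearby points shift a lot between the two views and land in the subject group, while distant points barely move and fall into the background. A single-lens phone has no second view to compare, which is why it needs another source of depth.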

The iPhone XR changed that, introducing Portrait Mode support through the use of sensor "focus pixels," which could produce a rough depth map. But while the new iPhone SE has focus pixels, its older hardware lacks the coverage requisite for depth-mapping purposes.

"The new iPhone SE can't use focus pixels, because its older sensor doesn't have enough coverage," Halide's Ben Sandofsky wrote. An iFixit teardown revealed on Monday that the iPhone SE's camera sensor is basically interchangeable with the iPhone 8's.

Instead, the entry-level iPhone produces depth maps entirely through machine learning. That also means that it can produce Portrait Mode photos from both its front- and rear-facing cameras. That's something undoubtedly made possible by the top-of-the-line A13 Bionic chipset underneath its hood.
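However the depth map is produced, the blur step that follows is conceptually simple. The sketch below assumes a depth map is already available (on the iPhone SE it would come from the machine learning model) and blurs each pixel more the farther it sits from the focus plane. It is an illustrative box blur on a tiny grayscale grid, not Apple's rendering method, and `blur_radius`, `portrait_blur`, `strength`, and `max_radius` are hypothetical names and parameters.

```python
def blur_radius(depth, focus_depth, strength=2.0, max_radius=3):
    """Pixels farther from the focus plane get a larger blur radius."""
    return min(max_radius, int(round(abs(depth - focus_depth) * strength)))


def portrait_blur(image, depth_map, focus_depth, strength=2.0, max_radius=3):
    """Depth-dependent box blur over a 2D list of grayscale values."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            r = blur_radius(depth_map[y][x], focus_depth, strength, max_radius)
            total, count = 0.0, 0
            # Average every pixel in the (2r+1)-wide window, clipped to the image.
            for ny in range(max(0, y - r), min(height, y + r + 1)):
                for nx in range(max(0, x - r), min(width, x + r + 1)):
                    total += image[ny][nx]
                    count += 1
            out[y][x] = total / count
    return out


# A bright subject pixel in the centre at the focus depth (1.0 m),
# surrounded by background pixels at 3.0 m.
image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
depth_map = [[3.0, 3.0, 3.0], [3.0, 1.0, 3.0], [3.0, 3.0, 3.0]]
result = portrait_blur(image, depth_map, focus_depth=1.0)
```

The in-focus pixel keeps its original value, while the background pixels are averaged with their neighbours. Real portrait renderers use lens-shaped kernels and careful edge handling, which is where an imperfect depth map tends to show.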

The depth information isn't perfect, Halide points out, but it's an impressive feat given the relative hardware limitations of a three-year-old, single-sensor camera setup. Apple's own Portrait Mode on the iPhone SE also only works on people, but Halide says the new version of its app allows bokeh effects on non-human subjects on the iPhone SE.

Mike Peterson

6 Comments

The core difference between this and Google's implementation is that the iPhone doesn't rely on an HDR+ shot or on the subtle variation in the background across the different areas of the same lens. What this means is that, with an iPhone SE, you can take a photo of an old printed photograph and still get the portrait effect - that's where the A13 becomes useful in keeping this process usably fast and power efficient.

That said, all smartphone portrait modes have mixed results: sometimes they're good, sometimes they suck, and there are some things that can't be properly simulated (e.g., objects that lens the background, or curved reflections).