To track where a wearer's gaze and attention are focused without costly processing or heavy battery drain, Apple is having to develop a whole new system of eye tracking technology for "Apple Glass."

Future Apple AR devices are not going to have any problem figuring out when you've turned your head to the left or right. What's going to be much more difficult is figuring out when your head is stationary but your eyes are moving.

So if an AR device is presenting a book to you, it would be good if it knew when you'd reached the bottom of the page. In a game where you're hunting for treasure, it's going to have to be pretty big treasure unless there's a way for the device to know precisely where your eyes are looking.

"Method and Device for Eye Tracking Using Event Camera Data", a newly revealed patent application, describes the fact that this feature is needed — but then also what problems it brings. Apple is not reinventing the wheel with eye tracking, but it is trying to make that wheel much smaller.

"[In typical systems," a head-mounted device includes an eye tracking system that determines a gaze direction of a user of the head-mounted device," says Apple. "The eye tracking system often includes a camera that transmits images of the eyes of the user to a processor that performs eye tracking."

"[However, transmission] of the images at a sufficient frame rate to enable eye tracking requires a communication link with substantial bandwidth," continues Apple, "and using such a communication link increases heat generation and power consumption by the head-mounted device."

So current eye-tracking technology would necessarily make the AR device's battery life shorter. Given that there is already a trade-off between the lightweight single-device design that Jony Ive wanted and the more powerful two-device system Apple had previously preferred, power is at a premium.
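To get a rough sense of the bandwidth a frame-based eye camera needs, here is a back-of-the-envelope sketch. The resolution, bit depth, and frame rate are hypothetical figures chosen for illustration; none of them come from the patent application.

```python
def frame_stream_bits_per_second(width, height, bits_per_pixel, fps):
    """Raw (uncompressed) bandwidth of a camera that sends every pixel of every frame."""
    return width * height * bits_per_pixel * fps


# Hypothetical eye camera: 640x480 pixels, 8-bit grayscale, 120 frames per second.
bits_per_second = frame_stream_bits_per_second(640, 480, 8, 120)
print(f"{bits_per_second / 1e6:.1f} Mbit/s per eye")
```

Under those assumed figures, each eye's camera would stream a few hundred megabits per second before any compression, and every one of those bits costs power to move and to process.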

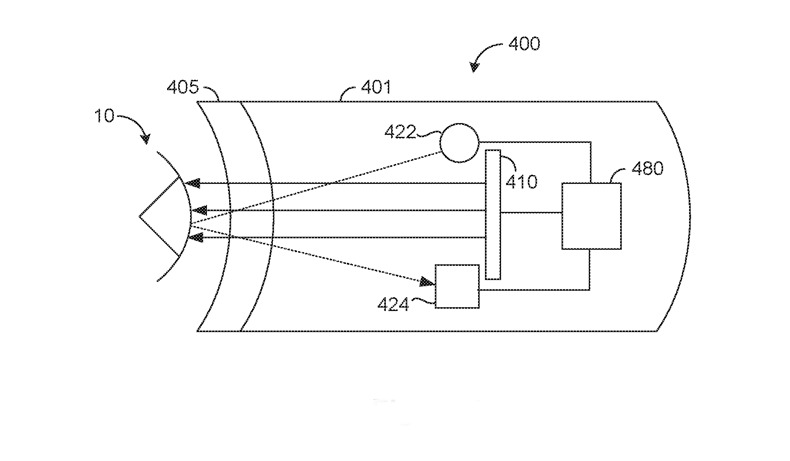

Apple's proposed solution is to cut down on the processing needed to track a user's gaze, and to do that by changing what exactly is tracked. "[One] method includes emitting light with modulating intensity from a plurality of light sources towards an eye of a user," says Apple.

"The method includes receiving light intensity data indicative of an intensity of the emitted light reflected by the eye of the user in the form of a plurality of glints," it continues. "The method includes determining an eye tracking characteristic of the user based on the light intensity data."

So while the AR headset can't exactly shine floodlights into a user's eyes, it could project some amount of light. The system would know exactly how much light was emitted and where it was directed.
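Because the system knows how each light is modulated, it could in principle work out which light source produced which glint. The following is a minimal sketch of that idea, assuming each source blinks at its own frequency. That assumption, the source names, and the frequencies are all illustrative and are not taken from the quoted passages.

```python
import math


def tone_power(samples, sample_rate_hz, freq_hz):
    """Strength of `samples` at freq_hz, found by correlating with a sine and a cosine."""
    mean = sum(samples) / len(samples)
    re = im = 0.0
    for n, s in enumerate(samples):
        phase = 2.0 * math.pi * freq_hz * n / sample_rate_hz
        re += (s - mean) * math.cos(phase)
        im += (s - mean) * math.sin(phase)
    return (re * re + im * im) / len(samples) ** 2


def identify_source(samples, sample_rate_hz, source_freqs_hz):
    """Return the name of the light source whose modulation frequency dominates the glint."""
    return max(
        source_freqs_hz,
        key=lambda name: tone_power(samples, sample_rate_hz, source_freqs_hz[name]),
    )


def simulate_glint(freq_hz, sample_rate_hz, n_samples, phase=0.0):
    """Synthetic glint brightness over time for a source blinking at freq_hz."""
    return [
        0.5 + 0.5 * math.sin(2.0 * math.pi * freq_hz * n / sample_rate_hz + phase)
        for n in range(n_samples)
    ]


# Hypothetical light sources and their modulation frequencies in hertz.
SOURCES = {"led_a": 100.0, "led_b": 150.0, "led_c": 250.0}

glint = simulate_glint(150.0, sample_rate_hz=1000.0, n_samples=200, phase=0.7)
print(identify_source(glint, 1000.0, SOURCES))
```

Once each glint is matched to a known light position, the pattern of glints on the eye can be related to where the eye is pointing.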

"[Then] a plurality of light sensors at a plurality of respective locations [could detect] a change in intensity of light and indicating a particular location of the particular light sensor," says Apple. "The method includes determining an eye tracking characteristic of a user based on the plurality of event messages."

This proposal to use reflected light to calculate gaze builds on a previous Apple patent involving an infrared emitter. Separately, Apple has also previously explored different tracking systems in order to assess a user's eye dominance.

William Gallagher


10 Comments

Plurality of Glints would be a really bad name for a band. Always have a chuckle and be amazed by the weird language needed to describe inventions in these patent applications.

I presume Apple’s glasses will be called the iEye.

Oh, come on! You give reading a book as an example of why eye tracking would be useful in AR??? That's just about the dumbest application of AR there is - how is reading *augmenting* reality?

It would be nice if AppleInsider stopped displaying the hideous over-hyped Magic Leap glasses every time they report about Apple glasses. I am pretty sure Apple glasses will never look anything like Magic Leap's, which look like a nightmarish vision of some insect eyes.