Using a combination of radar and the LiDAR sensor now found in the iPad Pro and iPhone 12 Pro, "Apple Glass" could sense the environment around the wearer when the light is too low for them to see clearly.

We've been slowly learning what Apple plans for the LiDAR sensor in devices such as the iPhone 12 Pro, which uses it to help with autofocus. That focusing assistance, though, is particularly useful in low-light environments — and Apple has designs on exploiting that ability to help "Apple Glass" wearers.

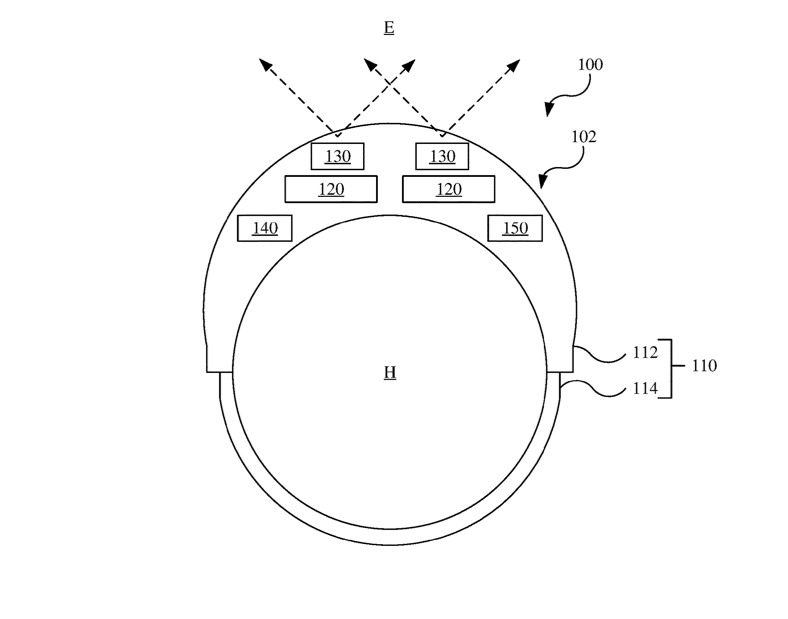

"Head-Mounted Display With Low Light Operation" is a newly revealed patent application that describes multiple ways of sensing the environment around the wearer of a head-mounted display (HMD).

"Human eyes have different sensitivity in different lighting conditions," begins Apple. It then details the different forms of human vision, from photopic through mesopic to scotopic, in which different amounts of light are handled by different cells, such as the "cone cells of the eye."

Photopic vision is described as how the eye works during "high levels of ambient light... such as daylight." Apple then cautions that mesopic and other forms of vision fare poorly in comparison.

"As compared to photopic vision, [these] may result in a loss of color vision, changing sensitivity to different wavelengths of light, reduced acuity, and more motion blur," says Apple. "Thus, in poorly lit conditions, such as when relying on scotopic vision, a person is less able to view the environment than in well lit conditions."

Apple's proposed solution uses sensors in an HMD, such as "Apple Glass," that register the surrounding environment. The results are then relayed back to the wearer as unspecified "graphical content."

Key to registering the environment is depth detection: the ability to sense how far away objects are. "The depth sensor detects the environment and, in particular, detects the depth (e.g., distance) therefrom to objects of the environment," says Apple.

"The depth sensor generally includes an illuminator and a detector," it continues. "The illuminator emits electromagnetic radiation (e.g., infrared light)... into the environment. The detector observes the electromagnetic radiation reflected off objects in the environment."

Apple is careful not to limit the possible detectors or methods that its patent application describes, but it does offer "specific examples." These include time-of-flight sensing, where the time taken for emitted light to bounce back gives the distance, and structured light, where a known pattern is projected out into the environment and the way that pattern is distorted by surfaces gives depth details.

"In other examples, the depth sensor may be a radar detection and ranging sensor (RADAR) or a light detection and ranging sensor (LIDAR)," says Apple. "It should be noted that one or multiple types of depth sensors may be utilized, for example, incorporating one or more of a structured light sensor, a time-of-flight camera, a RADAR sensor, and/or a LIDAR sensor."
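For readers curious about the arithmetic, the two depth-sensing approaches above come down to simple textbook geometry. The following minimal Python sketch is not taken from Apple's filing: the function names and sample figures are illustrative assumptions, and it models an idealized sensor. Time of flight halves the round-trip path of the light, while structured light, for an idealized rectified projector-camera pair, triangulates depth from how far a pattern feature appears to shift.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of an emitted light pulse.

    The pulse travels out and back, so the one-way distance is half the
    total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def structured_light_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth by triangulation for an idealized, rectified projector-camera pair.

    focal_px is the camera focal length in pixels, baseline_m the distance
    between projector and camera, and disparity_px how far a projected
    pattern feature appears shifted from its position at infinite distance.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # A reflection arriving 20 nanoseconds after emission: prints 2.998 m
    print(f"{tof_distance_m(20e-9):.3f} m")
```

The short travel times involved (a few nanoseconds per metre) are why time-of-flight sensors need very fast timing electronics, while structured light trades that for a second viewpoint and image processing.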

Apple's HMD could also use "ultrasonic sound waves," but whichever method the headset uses, the patent application is all about accurately measuring the surroundings and relaying that information to the wearer.

"The [HMD's] controller determines graphical content according to the sensing of the environment with the one or more of the infrared sensor or the depth sensor and with the ultrasonic sensor," says Apple, "and operates the display to provide the graphical content concurrent with the sensing of the environment."

This patent application is credited to four inventors, each of whom has related previous work. They include Trevor J. Ness, who has credits on AR and VR tools for face mapping.

It also includes Fletcher R. Rothkopf, who has a previous patent to do with providing AR and VR headsets for "Apple Car" passengers — without making them ill.

That's an issue for all head-mounted displays, and alongside this low-light patent application, Apple now has a related one about using gaze tracking to reduce possible disorientation and, consequently, motion sickness.

Gaze tracking for mixed-reality images

"Image Enhancement Devices With Gaze Tracking" is another newly revealed patent application, this time focusing on presenting AR images while a user may be moving their head.

"It can be challenging to present mixed reality content to a user," explains Apple. "The presentation of mixed-reality images to a user may, for example, be disrupted by head and eye movement."

"Users may have different visual capabilities," it continues. "If care is not taken, mixed-reality images will be presented that cause disorientation or motion sickness and that make it difficult for a user to identify items of interest."

The proposed solution is, in part, to use what sounds like a very similar idea to the LiDAR assistance in low-light environments. A headset, or other device, "can also gather information on the real-world image such as information on content, motion, and other image attributes by analyzing the real-world image."

That's coupled to what the device knows of a user's "vision information such as user visual acuity, contrast sensitivity, field of view, and geometrical distortions." Together with any preferences the user may set, the mixed-reality image can be presented in a way that minimizes disorientation.

"[As] an example, a portion of a real-world image may be magnified for a low vision user only when a user's point of gaze is relatively static to avoid inducing motion sickness effects," says Apple.
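The gating rule in Apple's example can be sketched as a velocity threshold on eye movement. This minimal Python version is an illustration only, not Apple's method: the gaze sample format, the 30 degrees-per-second threshold, and the function names are all assumptions, since the patent application does not specify them.

```python
import math
from typing import Sequence, Tuple

# Assumed sample format: (timestamp in seconds, horizontal gaze angle in
# degrees, vertical gaze angle in degrees).
GazeSample = Tuple[float, float, float]

# Assumed threshold. Velocity-threshold fixation detection commonly uses
# values around 30 deg/s, but the patent application does not give one.
STATIC_GAZE_DEG_PER_S = 30.0


def mean_gaze_speed_deg_s(samples: Sequence[GazeSample]) -> float:
    """Average angular speed of the gaze across a window of samples.

    Treats the two angles as flat coordinates, a small-angle approximation
    that is adequate for short windows.
    """
    if len(samples) < 2:
        raise ValueError("need at least two gaze samples")
    path_deg = sum(
        math.hypot(h1 - h0, v1 - v0)
        for (_, h0, v0), (_, h1, v1) in zip(samples, samples[1:])
    )
    duration_s = samples[-1][0] - samples[0][0]
    if duration_s <= 0:
        raise ValueError("samples must span a positive time interval")
    return path_deg / duration_s


def should_magnify(
    samples: Sequence[GazeSample],
    low_vision: bool,
    threshold: float = STATIC_GAZE_DEG_PER_S,
) -> bool:
    """Allow magnification only for low-vision users whose gaze is relatively static."""
    if not low_vision or len(samples) < 2:
        return False  # not requested, or too little data to judge
    return mean_gaze_speed_deg_s(samples) < threshold


if __name__ == "__main__":
    steady = [(0.0, 10.0, 5.0), (0.1, 10.5, 5.0), (0.2, 11.0, 5.0)]
    saccade = [(0.0, 0.0, 0.0), (0.05, 20.0, 0.0)]
    print(should_magnify(steady, low_vision=True))   # slow drift
    print(should_magnify(saccade, low_vision=True))  # rapid eye jump
```

Averaging speed over a short window, rather than reacting to each individual sample, keeps small jitters in eye-tracking data from switching magnification on and off.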

This patent application is credited to four inventors, including Christina G. Gambacorta. She has previously been listed on a related patent to do with using gaze tracking in "Apple Glass" to balance image quality with battery life by altering the resolution of what a wearer is looking at.

William Gallagher

William Gallagher

Marko Zivkovic

Marko Zivkovic

Christine McKee

Christine McKee

Andrew Orr

Andrew Orr

Andrew O'Hara

Andrew O'Hara

Mike Wuerthele

Mike Wuerthele

Bon Adamson

Bon Adamson

-m.jpg)

1 Comment

Always wanted the ability to see in the dark like a cat. ;)