Apple's augmented reality headset or a future iPhone could use light from a display to track the movement of nearly any surface, while finger-worn devices could tell the system what kind of objects a user is touching.

Apple has been rumored to be working on a form of VR or AR headset for quite some time, and various reports and patent filings have indicated it could take a number of directions. In a pair of patents granted by the US Patent and Trademark Office on Tuesday, two more ways the system could function have been revealed.

Light-based AR object tracking

One of the advantages of AR headsets is that they include cameras. Usually employed to capture images of a scene and for object recognition, the same hardware could also be used for object tracking: following how an item changes position in real time relative to the headset.

The benefits of object tracking for AR generally boil down to being able to apply digital graphic overlays to the video feed around the object. This could include app-specific status indicators on a controller that would otherwise not be visible in the real-world view, for example.

However, object recognition and object tracking, including determining an object's orientation, can be quite resource-intensive. In an area where a massive amount of processing is required to deliver an optimal experience, any method that reduces resource usage is welcome.

In the patent titled "AR/VR controller with event camera," Apple suggests that the camera system does not necessarily need to keep track of every pixel relating to a tracked object at all times, but could instead cut that number down to a bare minimum when only cursory checks are needed.

Under Apple's proposal, the system selects specific pixels in an image that relate to a tracked object, which in turn provide readings for light intensity and other attributes. If the camera or object moves, the light intensity at those few pixels changes beyond a set threshold, triggering the system to work out how the object has moved using more resources.
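The trigger described above can be sketched as a sparse change monitor that watches only a few pixels and reports when any of them changes past a limit. This is a minimal illustration, assuming grayscale frames stored as nested lists and a hand-picked set of pixels; the class name, threshold and pixel choice are hypothetical and not taken from the patent. A true event camera reports per-pixel changes in hardware, so this only emulates the idea in software.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SparseChangeMonitor:
    # Hypothetical: (row, col) pixels chosen on the tracked object.
    pixels: List[Tuple[int, int]]
    # Hypothetical intensity-change limit, in the image's own units.
    threshold: float
    reference: Optional[List[float]] = None

    def update(self, frame: List[List[float]]) -> bool:
        """Return True if any monitored pixel moved past the threshold."""
        current = [frame[r][c] for r, c in self.pixels]
        if self.reference is None:
            # First frame only establishes the baseline.
            self.reference = current
            return False
        changed = any(abs(now - ref) > self.threshold
                      for now, ref in zip(current, self.reference))
        if changed:
            # Escalation point: a full tracker would run here, then the
            # baseline is reset to the new readings.
            self.reference = current
        return changed


monitor = SparseChangeMonitor(pixels=[(0, 0), (1, 1)], threshold=10.0)
print(monitor.update([[100.0, 100.0], [100.0, 100.0]]))  # False: baseline
print(monitor.update([[104.0, 100.0], [100.0, 100.0]]))  # False: small change
print(monitor.update([[100.0, 100.0], [100.0, 140.0]]))  # True: escalate
```

Between escalations, each frame costs only a handful of pixel reads and comparisons, which is the resource saving the patent is after.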

Since the system works with light, it could also search for the same points of light rather than trying to detect the object itself. If the object carries several such points and the system tracks just those, it could determine changes in position and orientation using very few resources.
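Once a few light points are matched between frames, recovering how the object moved is a small least-squares problem. The sketch below is a 2D simplification using a standard rigid (Procrustes) fit, assuming the points are already matched one-to-one; the patent does not specify this method, and a headset would need a full 3D pose, for example from a perspective-n-point solver.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_rigid_2d(ref: List[Point], cur: List[Point]) -> Tuple[float, float, float]:
    """Return (angle_radians, tx, ty) that best maps ref points onto cur points.

    Least-squares 2D rotation plus translation; assumes the two lists hold
    the same light points in the same order.
    """
    if len(ref) != len(cur) or len(ref) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(ref)
    # Centroids of both point sets.
    rcx = sum(p[0] for p in ref) / n
    rcy = sum(p[1] for p in ref) / n
    ccx = sum(q[0] for q in cur) / n
    ccy = sum(q[1] for q in cur) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(ref, cur):
        px, py, qx, qy = px - rcx, py - rcy, qx - ccx, qy - ccy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    # The optimal rotation angle maximises the alignment of the centred sets.
    angle = math.atan2(cross, dot)
    c, s = math.cos(angle), math.sin(angle)
    # Translation carries the rotated reference centroid onto the current one.
    tx = ccx - (c * rcx - s * rcy)
    ty = ccy - (s * rcx + c * rcy)
    return angle, tx, ty


# Three points rotated 90 degrees and shifted by (2, 3).
print(estimate_rigid_2d([(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)],
                        [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]))
```

The work scales with the number of light points rather than the number of pixels, which is why tracking a few bright points can be so cheap.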

While these points of light could simply be reflections of environmental lighting on an object's enclosure, Apple also appears to be considering light sources generated by the object itself, such as LED indicators or a display showing a pattern. A displayed pattern would provide the system with ample position and orientation data, so long as the screen was visible.

Such light-based reference points don't necessarily have to use visible light, as Apple suggests the illumination pattern could include non-visible wavelengths. This would make the system unobtrusive, since users wouldn't see it, and different wavelengths could potentially let it work with multiple users or objects at once.
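One way to picture the multi-object idea is wavelength multiplexing: each object emits in its own band, and detections are sorted by band before tracking. This is a hypothetical sketch; the patent does not describe this scheme, and the object names and band limits are placeholders (850 nm and 940 nm are common infrared LED wavelengths, used here only for illustration).

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical band assignments, in nanometres.
BANDS: Dict[str, Tuple[float, float]] = {
    "controller_a": (840.0, 860.0),
    "controller_b": (930.0, 950.0),
}


def owner_of(wavelength_nm: float) -> Optional[str]:
    """Return the object assigned to this wavelength, or None if unassigned."""
    for name, (lo, hi) in BANDS.items():
        if lo <= wavelength_nm <= hi:
            return name
    return None


def group_detections(
    dets: List[Tuple[float, float, float]]
) -> Dict[str, List[Tuple[float, float]]]:
    """Split (x, y, wavelength_nm) detections into per-object point lists."""
    groups: Dict[str, List[Tuple[float, float]]] = {name: [] for name in BANDS}
    for x, y, wl in dets:
        name = owner_of(wl)
        if name is not None:  # Ignore light outside every assigned band.
            groups[name].append((x, y))
    return groups


print(group_detections([(1.0, 2.0, 850.0), (3.0, 4.0, 940.0), (5.0, 6.0, 700.0)]))
```

Each resulting point list could then be tracked independently, so two controllers never compete for the same points.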

Since the system could use light invisible to humans, it would also be feasible for Apple to employ technology it's already familiar with. Both the TrueDepth camera and the LiDAR Scanner on the back of the iPhone 12 Pro range emit small points of infrared light, which reflect back to an imaging sensor and are used to map the depth of objects.

Although the object wouldn't be emitting its own light, the reflected light points would effectively handle the same task, with the added benefit of enabling the tracking of non-electronic or biological items, such as a user's hands.

The patent lists Peter Meier as its inventor and was filed on January 31, 2019.

Object property sampling

The second patent relates to data gathering, specifically covering how a system can acquire information about what a user is touching.

AR and VR are typically visual and auditory media, but they can benefit from introducing other senses to sell the illusion that digital objects are real. To do so, a system needs to know what sensations a user feels when touching a real-world item, so it can mimic them with haptic feedback tools.

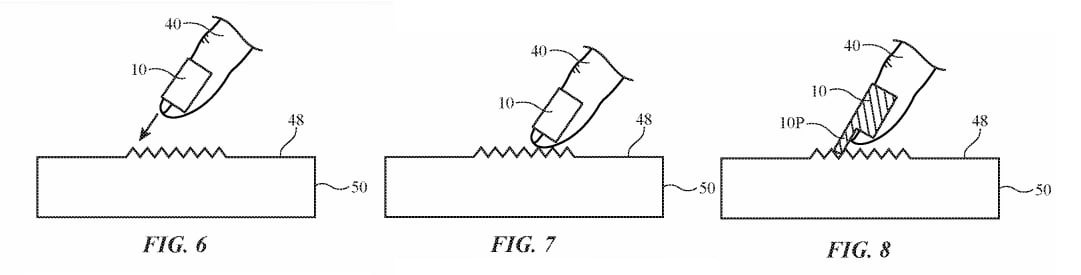

In the patent titled "Computer systems with finger devices for sampling object attributes," Apple proposes finger-worn devices that serve two purposes. While they can offer haptic feedback to the user, the more important element for the patent is their ability to detect the characteristics of real-world objects the user touches.

Sensors within each finger device can measure attributes of a real-world object the user is touching, such as its surface contour, texture, colors and patterns, acoustic attributes, and weight. Combined with data from other hardware, such as cameras on a head-mounted display, the system could collect a great deal of data about an object, which it could then present back to the user.

These sensors could include a strain gauge, an ultrasonic sensor, direct contact sensors, thermometers, light detectors, ranging sensors, accelerometers, gyroscopes, a compass, and capacitive sensors, among others.
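Readings from sensors like these could be gathered into a per-object record, with repeated samples averaged to smooth out noise. This is a hypothetical data-structure sketch; the class, method and sensor names (such as `temperature_c`) are illustrative assumptions, not anything described in the patent.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class ObjectSample:
    """Hypothetical record of attributes gathered while touching one object."""
    name: str
    readings: Dict[str, List[float]] = field(default_factory=dict)

    def add(self, sensor: str, value: float) -> None:
        # Keep every raw reading, grouped by the sensor that produced it.
        self.readings.setdefault(sensor, []).append(value)

    def summary(self) -> Dict[str, float]:
        # Average repeated readings per sensor to reduce noise.
        return {sensor: mean(values) for sensor, values in self.readings.items()}


mug = ObjectSample("mug")
mug.add("temperature_c", 40.0)  # e.g. from a thermometer
mug.add("temperature_c", 42.0)
mug.add("weight_g", 300.0)      # e.g. inferred from a strain gauge
print(mug.summary())
```

Keeping raw readings alongside the summary leaves room to fuse them later with camera data from a head-mounted display.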

Although the device is worn on the end of the finger, the patent suggests users would still be able to feel the objects they touch, as the finger pad would remain unobstructed. The sensors may take measurements through protrusions that extend beyond the fingertip and contact the object, or by detecting how the user's finger itself is affected by touching the object.

Data collected about the object could then be compiled and stored for later use by the system, and potentially shared as part of a wider library. Haptic feedback elements could then use this information to simulate the feel of the object for the user or for other users.
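A stored library could feed haptic playback by mapping a recorded attribute onto an actuator setting. The sketch below assumes a hypothetical "roughness" value on an arbitrary 0 to 10 scale and maps it to a 0 to 1 vibration amplitude; the library layout, function names and scale are illustrative, not taken from the patent.

```python
from typing import Dict, Optional

# Hypothetical shared library: object name -> stored attributes.
library: Dict[str, Dict[str, float]] = {}


def store(name: str, attributes: Dict[str, float]) -> None:
    """Save a copy of an object's attributes under its name."""
    library[name] = dict(attributes)


def haptic_amplitude(name: str, max_roughness: float = 10.0) -> Optional[float]:
    """Map stored roughness to a vibration amplitude clamped to 0..1."""
    attrs = library.get(name)
    if attrs is None or "roughness" not in attrs:
        return None  # Nothing recorded, so no haptic cue to play.
    return max(0.0, min(1.0, attrs["roughness"] / max_roughness))


store("brick", {"roughness": 7.5})
print(haptic_amplitude("brick"))    # 0.75
print(haptic_amplitude("unknown"))  # None
```

Because the library stores attributes rather than actuator commands, the same entry could drive different haptic hardware for other users.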

The patent lists Paul X. Wang as its inventor and was filed on April 22, 2019.

Previous developments

Apple files numerous patent applications every week, but while a patent filing indicates areas of interest for Apple's research and development efforts, it does not guarantee the ideas will appear in a future product or service.

Object recognition and tracking have been an important part of Apple's ARKit, a toolkit for developers wanting to add AR content to their apps. This already extends to tracking buildings and other stationary objects, allowing digital items to appear floating in, or installed within, the camera's view of the world.

ARKit can also perform motion capture, which entails a level of object tracking, as well as people occlusion, which lets people in the camera's view appear in front of virtual objects.

Finger-worn devices have previously appeared in patent applications, such as 2019's "Systems for modifying finger sensations during finger press input events." As part of that idea, a device would attach to the end of the finger but leave the finger pad open to the elements.

Intended to give a tactile sensation when typing on a display-based keyboard, the device would lightly squeeze the sides of the finger when the pad is about to make contact with a display. The squeeze pushes the finger pad outward so it protrudes further, increasing the cushioning when the finger taps the surface.
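The "about to make contact" trigger can be sketched as a proximity controller with hysteresis, engaging the squeeze below one distance and releasing only above a larger one so the device doesn't chatter near the threshold. This is a hypothetical illustration; the distances and class name are assumptions, and the earlier filing does not specify how contact is anticipated.

```python
class SqueezeController:
    """Hypothetical squeeze trigger driven by finger-to-display distance."""

    def __init__(self, engage_mm: float = 2.0, release_mm: float = 4.0) -> None:
        # Release distance exceeds engage distance to provide hysteresis.
        self.engage_mm = engage_mm
        self.release_mm = release_mm
        self.squeezing = False

    def update(self, distance_mm: float) -> bool:
        """Feed one distance reading; return whether to squeeze now."""
        if not self.squeezing and distance_mm <= self.engage_mm:
            self.squeezing = True
        elif self.squeezing and distance_mm >= self.release_mm:
            self.squeezing = False
        return self.squeezing


ctrl = SqueezeController()
print([ctrl.update(d) for d in [10.0, 3.0, 1.5, 3.0, 5.0]])
# [False, False, True, True, False]
```

At 3 mm the output depends on direction: approaching, it stays off; lifting away after a tap, it stays on until the finger clears 4 mm.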

Malcolm Owen
