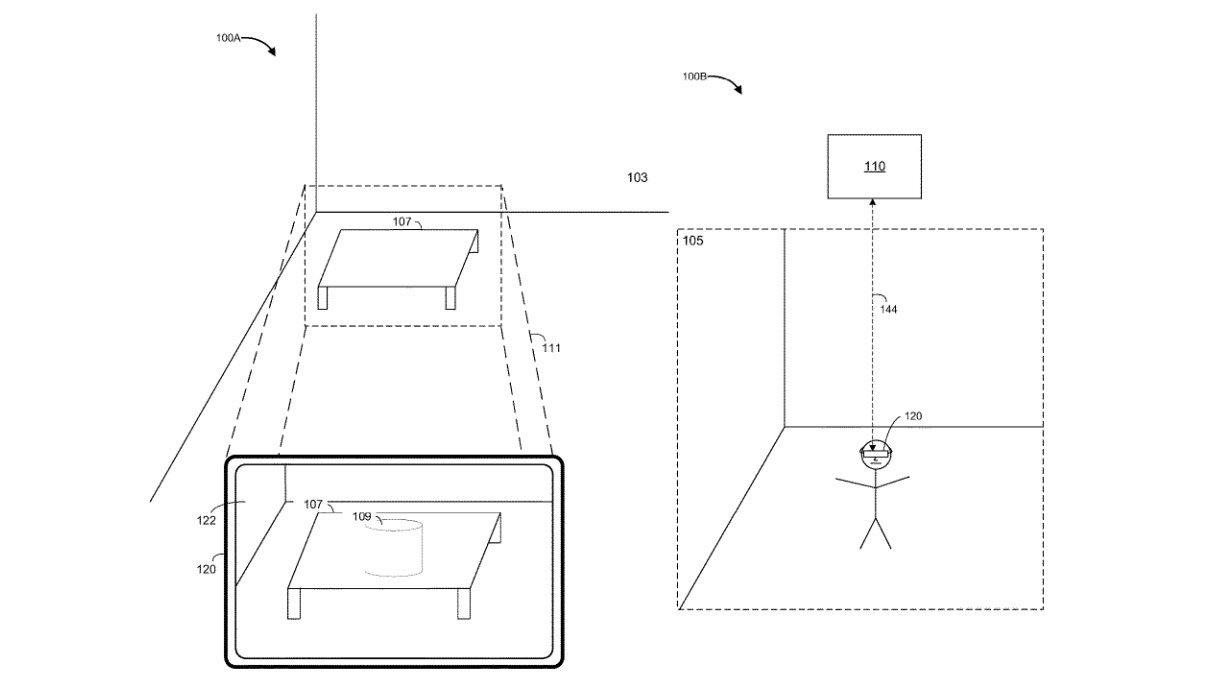

Apple is developing a system that could generate mixed reality or virtual reality environments from flat video content, letting users walk through and explore clips that would otherwise only be two-dimensional.

The U.S. Patent and Trademark Office published Apple's research on the topic on Thursday, in a filing titled "Method and device for generating a synthesized reality reconstruction of flat video content." The patent opens by defining synthesized reality, or SR, as digitally generated objects and environments. SR is essentially a broad category that, according to Apple, includes virtual reality, augmented reality, and mixed reality.

Users can take part in an SR experience on a device, whether a handheld device like an iPhone or iPad or a head-mounted device like the rumored "Apple Glass." They could interact with the SR setting through one or more senses, including sight, taste, smell, and touch.

The bulk of the patent, however, focuses on a system that could generate SR characters, objects, and environments from flat video content. In other words, it could turn a TV show into a VR environment that a user could explore with the aforementioned devices.

"A user may wish to experience video content (e.g., a TV episode or movie) as if he/she is in the scene with the characters. In other words, the user wishes to view the video content as an SR experience instead of simply viewing the video content on a TV or other display device," the patent reads.

As Apple notes, most SR content is "painstakingly created" ahead of time and added to a library a user can choose from. However, the patent describes a method for generating on-demand SR reconstructions of video content by "leveraging digital assets" to "seamlessly and quickly" port flat content into an SR experience.

The system works by identifying a "plot-effectuator" in a scene, synthesizing a scene description, and generating a reconstruction of the scene by "driving a first digital asset associated with the first plot-effectuator according to the scene description for the scene."

Apple defines "plot-effectuators" as the characters or actors in the video content, and points out that the digital assets used to drive them could correspond to video game models.

While the system could generate an SR reconstruction from the video content alone, it could also draw on associated text content and external data. That could include photos of the actors in the video clips, the actors' heights and other measurements, various views of a set, and similar data.

"In some implementations, the digital assets are generated on-the-fly based at least in part on the video content and external data associated with the video content. In some implementations, the external data associated with the video content corresponds to pictures of actors, height and other measurements of actors, various views (e.g., plan, side, perspective, etc. views) of sets and objects, and/or the like," the patent reads.

Interestingly, Apple also notes that a similar SR generation system could create interactive scenes from audio and associated content, including audiobooks, radio dramas, and other audio-only content.

Several portions of the patent also distinguish between action objects, meaning objects a character interacts with, and inactionable ones. That distinction could let the SR system know ahead of time which objects in a scene need to be interactive.

The patent lists Ian M. Richter, Daniel Ulbricht, Jean-Daniel E. Nahmias, Omar Elafifi, and Peter Meier as inventors. Of them, Meier has worked on an AR patent covering moving objects in real time, and Richter has been named as the inventor of a head-mounted system that could recognize head gestures.

Apple files numerous patents on a weekly basis, and there's no guarantee that the technology described within them will ever make it to market. Similarly, patent applications don't give any indication as to when Apple may release a new product with the technology.

Mike Peterson
